
YADB (Yet Another Database Benchmark)

The world has a plethora of database benchmarks, starting with the Wisconsin Benchmark, which is my favourite. First, that benchmark came from Dr. David DeWitt, who taught me Database Internals when I was a graduate student at the University of Wisconsin. Second, it is probably the earliest conference paper (circa 1983) that I ever read. And third, the results of this paper displeased Larry Ellison so much that he inserted a clause into newer Oracle releases to prevent researchers from benchmarking the Oracle database.

The Wisconsin paper clearly describes how a benchmark measures very specific features of databases, so it follows that as database capabilities evolve, new benchmarks are needed. If you have a database with new behavior not found in existing databases, then you clearly need a new benchmark to measure that behavior.

Today, we are introducing a new benchmark, RockBench, that does exactly this. RockBench is designed to measure the most important characteristics of a real-time database.

What Is a Real-Time Database?

A real-time database is one that can sustain a high write rate of new incoming data while, at the same time, allowing applications to make queries based on the freshest data. It is different from a transactional database, where the most critical attribute is the ability to perform transactions, which is why TPC-C is the most cited benchmark for transactional databases.

In typical database ACID parlance, a real-time database provides Atomicity and Durability of updates, just like most other databases. It supports an eventual Consistency model, where updates show up in query results as quickly as possible. The time lag between when an update is written and when it becomes visible in query results is called data latency. A real-time database is one that is designed to minimize data latency.
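To make the definition concrete, here is a minimal sketch, assuming you already know, for each event, the time its write was acknowledged and the first time a query returned it. The helper names `data_latency` and `percentile` are hypothetical and not part of RockBench; nearest-rank is just one common convention for computing the p50/p95 figures used later in this post.

```python
import math
from typing import Sequence


def data_latency(write_ts: float, first_visible_ts: float) -> float:
    """Seconds between a write being acknowledged and the first query that sees it."""
    if first_visible_ts < write_ts:
        raise ValueError("an event cannot become visible before it is written")
    return first_visible_ts - write_ts


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile, for p in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


# Hypothetical (write time, first-visible time) pairs, in seconds.
observations = [(0.0, 0.5), (1.0, 1.25), (2.0, 2.75), (3.0, 3.125)]
latencies = [data_latency(w, v) for w, v in observations]
print(percentile(latencies, 50), percentile(latencies, 95))  # → 0.25 0.75
```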

Different applications need different data latencies, and the ability to measure data latency lets users choose one real-time database configuration over another based on the needs of their application. RockBench is currently the only benchmark that measures the data latency of a database at varying write rates.

Data latency is different from query latency, which is what is typically used to benchmark transactional databases. We posit that data latency is one of the factors that distinguishes one real-time database from another, and we designed RockBench specifically to measure it.

Why Is This Benchmark Relevant in the Real World?

Real-time analytics use cases. Many decision-making systems leverage large volumes of streaming data to make fast decisions. When a truck arrives at a loading dock, a fleet management system would need to produce a loading list for the truck by analyzing delivery deadlines, delay-charge estimates, weather forecasts and models of other trucks that are arriving in the near future. This type of decision-making system would use a real-time database. Similarly, a product team would look at product clickstreams and user feedback in real time to determine which feature flags to set in the product. The volume of incoming click logs is very high, and the window for gathering insights from this data is short. This is another use case for a real-time database. Such use cases are becoming the norm these days, which is why measuring the data latency of a real-time database is useful. It lets users pick the right database for their needs based on how quickly they want to extract insights from their data streams.

High write rates. The most critical measurement for a real-time database is the write rate it can sustain while supporting queries at the same time. The write rate could be bursty or periodic, depending on the time of day or the day of the week. This behavior is similar to a streaming logging system that can absorb large volumes of writes. However, one difference between a real-time database and a streaming logging system is that the database provides a query API that can perform random queries on the event stream. When data is both written and queried, there is always an inherent tradeoff between high write rates and the visibility of data in queries, and this is precisely what RockBench measures.

Semi-structured data. Most real-life decision-making data is in semi-structured form, e.g. JSON, XML or CSV. New fields get added to the schema and older fields are dropped. The same field can have multi-typed values. Some fields have deeply nested objects. Before the advent of real-time databases, a user would typically use a data pipeline to clean and homogenize all the fields, flatten nested fields, denormalize nested objects and then write the result out to a data warehouse like Redshift or Snowflake. The data warehouse is then used to gather insights from the data. These data pipelines add to data latency. A real-time database, on the other hand, eliminates the need for some of these pipelines and simultaneously offers lower data latency. This benchmark uses data in JSON format to simulate these kinds of real-life scenarios.
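The flattening step mentioned above is one of the transformations such a pipeline performs before loading data into a warehouse. Here is a minimal sketch, assuming dot-separated column names and integer positions for array elements; both are illustrative conventions, not what any particular pipeline or warehouse uses.

```python
from typing import Any, Dict


def flatten(obj: Any, prefix: str = "") -> Dict[str, Any]:
    """Flatten nested objects and arrays into a single-level dict.

    Nested keys are joined with dots; array elements use their index as the key
    (e.g. {"tags": ["a"]} -> {"tags.0": "a"}).
    """
    if isinstance(obj, dict):
        items = obj.items()
    elif isinstance(obj, list):
        items = enumerate(obj)
    else:
        return {prefix: obj}  # scalar leaf
    flat: Dict[str, Any] = {}
    for key, value in items:
        name = f"{prefix}.{key}" if prefix else str(key)
        flat.update(flatten(value, name))
    return flat


event = {"ts": 1, "user": {"id": 7, "geo": {"lat": 52.5}}, "tags": ["a", "b"]}
print(flatten(event))
# → {'ts': 1, 'user.id': 7, 'user.geo.lat': 52.5, 'tags.0': 'a', 'tags.1': 'b'}
```

Every new nested key or array position produces a new flat column, so schema drift in the source events turns directly into schema changes that the downstream pipeline has to absorb.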

Overview of RockBench

RockBench comprises a Data Generator and a Data Latency Evaluator. The Data Generator simulates a real-life event workload, where every generated event is in JSON format and schemas can change frequently. The Data Generator produces events at various write rates and writes them to the database. The Data Latency Evaluator queries the database periodically and outputs a metric that measures the data latency at that instant. A user can vary the write rate and measure the observed data latency of the system.
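The interaction between the two components can be sketched as a small simulation. This is a hypothetical illustration, not RockBench's code: it uses a simulated clock instead of wall-clock time, an in-memory `FakeRealtimeDB` that makes each write queryable after a fixed `index_delay`, and an assumed `_event_time` field that the generator stamps on every event. The evaluator measures data latency as the query time minus the newest event timestamp the query can see.

```python
import random
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class FakeRealtimeDB:
    """Hypothetical database under test: a write becomes queryable index_delay seconds after it arrives."""
    index_delay: float
    _log: List[Tuple[float, dict]] = field(default_factory=list)  # (visible_at, event)

    def write(self, event: dict, now: float) -> None:
        self._log.append((now + self.index_delay, event))

    def max_visible_event_time(self, now: float) -> Optional[float]:
        """Stand-in for a query such as SELECT MAX(_event_time) over the currently visible data."""
        visible = [e["_event_time"] for t, e in self._log if t <= now]
        return max(visible) if visible else None


def generate_event(now: float, rng: random.Random) -> dict:
    return {"_event_time": now, "user": {"id": rng.randint(1, 1000)}, "tags": [rng.choice("xyz")]}


def run(db: FakeRealtimeDB, write_rate: float, duration: float,
        query_interval: float, seed: int = 0) -> List[float]:
    """Interleave writes at write_rate events/sec with a latency query every query_interval seconds."""
    rng = random.Random(seed)
    n_written, next_query = 0, query_interval
    samples: List[float] = []
    while True:
        next_write = n_written / write_rate
        if min(next_write, next_query) > duration:
            return samples
        if next_write <= next_query:  # generator: emit the next event
            db.write(generate_event(next_write, rng), now=next_write)
            n_written += 1
        else:  # evaluator: measure data latency at this instant
            newest = db.max_visible_event_time(now=next_query)
            if newest is not None:
                samples.append(next_query - newest)
            next_query += query_interval


samples = run(FakeRealtimeDB(index_delay=0.5), write_rate=100, duration=5, query_interval=1.0)
print(samples)  # → [0.5, 0.5, 0.5, 0.5, 0.5]
```

Because the fake database has a constant indexing delay, the measured latency is flat. A real system's delay is not constant, and sweeping `write_rate` is how you would observe how it changes under load.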

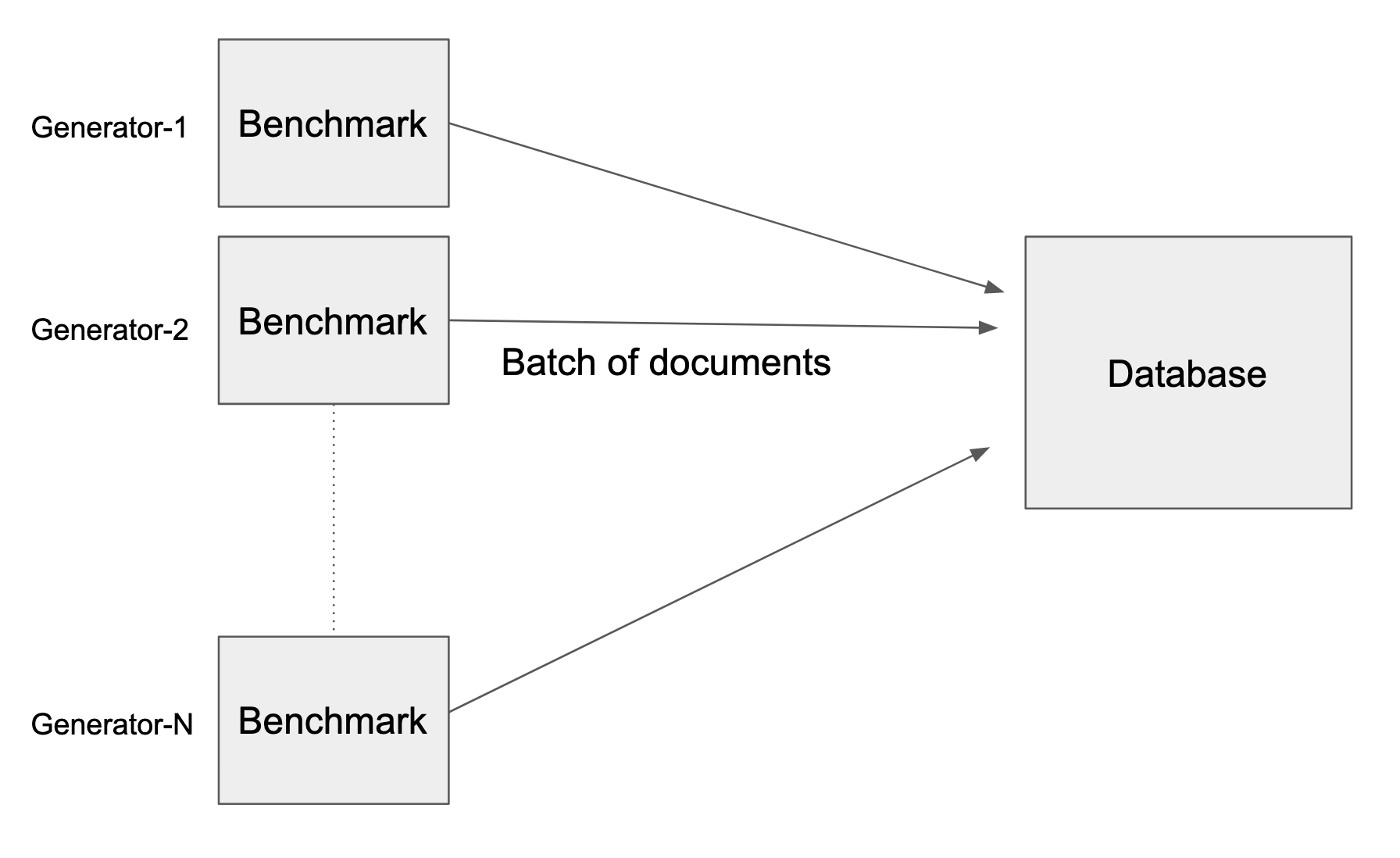

Multiple instances of the benchmark connect to the database under test

The Evaluating Data Latency for Real-Time Databases white paper provides a detailed description of the benchmark. The size of an event is chosen to be around 1K bytes, which we found to be the sweet spot for many real-life systems. Each event has nested objects and arrays inside it. We looked at a variety of publicly available event streams, such as Twitter events, stock market events and online gaming events, to pick the data characteristics that this benchmark uses.
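A generator for events with this shape might look like the sketch below. The field names, the 10% chance of an extra optional field (standing in for schema changes), and padding with a random `payload` string to reach exactly 1,024 serialized bytes are all assumptions made for illustration, not the white paper's actual schema.

```python
import json
import random
import string

TARGET_BYTES = 1024  # "around 1K bytes" per event


def make_event(event_id: int, rng: random.Random, target_bytes: int = TARGET_BYTES) -> dict:
    """Build an event with nested objects and arrays, padded so its JSON encoding is target_bytes long."""
    event = {
        "id": event_id,
        "user": {
            "name": "".join(rng.choices(string.ascii_lowercase, k=8)),
            "geo": {"lat": round(rng.uniform(-90, 90), 4), "lon": round(rng.uniform(-180, 180), 4)},
        },
        "tags": rng.sample(["sports", "news", "music", "games", "finance"], k=2),
        "metrics": [{"name": "clicks", "value": rng.randint(0, 100)}],
        "payload": "",
    }
    if rng.random() < 0.1:  # occasionally add a field, simulating schema drift
        event["promo_code"] = "SPRING"
    # ASCII letters need no JSON escaping, so each padding character adds exactly one byte.
    overhead = len(json.dumps(event).encode("utf-8"))
    padding = max(target_bytes - overhead, 0)
    event["payload"] = "".join(rng.choices(string.ascii_letters, k=padding))
    return event


rng = random.Random(42)
sizes = [len(json.dumps(make_event(i, rng)).encode("utf-8")) for i in range(5)]
print(sizes)  # → [1024, 1024, 1024, 1024, 1024]
```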

Results of Running RockBench on Rockset

Before we analyze the results of the benchmark, let's refresh our memory of Rockset's Aggregator Leaf Tailer (ALT) architecture. The ALT architecture allows Rockset to scale ingest compute and query compute separately. This benchmark measures the speed of indexing in Rockset's Converged Index™, which maintains an inverted index, a columnar store and a record store on all fields, and makes new data queryable almost instantly with very fast query performance. Queries are fast because they can leverage any of these pre-built indices. The data latency that we record in our benchmarking is a measure of how fast Rockset can index streaming data. Full results can be found here.
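To illustrate the idea of indexing every field three ways, here is a toy, in-memory sketch. It is not Rockset's implementation; it only shows how a single ingest call can populate a row store, a column store and an inverted index, and how different query shapes then use different structures. It assumes flat documents with hashable scalar values, such as the output of a flattening step, and the class and method names are hypothetical.

```python
from collections import defaultdict
from typing import Any, Dict, List


class ToyConvergedIndex:
    """Illustrative only: every field of every document goes into three structures at ingest time."""

    def __init__(self) -> None:
        self.rows: Dict[int, Dict[str, Any]] = {}  # row store: doc id -> whole document
        self.columns: Dict[str, Dict[int, Any]] = defaultdict(dict)  # column store: field -> {doc id: value}
        self.inverted: Dict[str, Dict[Any, set]] = defaultdict(lambda: defaultdict(set))  # field -> value -> ids

    def ingest(self, doc_id: int, doc: Dict[str, Any]) -> None:
        self.rows[doc_id] = doc
        for name, value in doc.items():
            self.columns[name][doc_id] = value
            self.inverted[name][value].add(doc_id)

    def lookup(self, name: str, value: Any) -> List[int]:
        """Selective filter (WHERE name = value): answered from the inverted index."""
        return sorted(self.inverted.get(name, {}).get(value, set()))

    def sum_column(self, name: str) -> float:
        """Aggregation over one field: scans only that column, skipping non-numeric values."""
        return sum(v for v in self.columns.get(name, {}).values()
                   if isinstance(v, (int, float)) and not isinstance(v, bool))

    def fetch(self, doc_id: int) -> Dict[str, Any]:
        """Retrieve a whole document: answered from the row store."""
        return self.rows[doc_id]


idx = ToyConvergedIndex()
idx.ingest(1, {"city": "Oslo", "temp": 3})
idx.ingest(2, {"city": "Lima", "temp": 20})
idx.ingest(3, {"city": "Oslo", "temp": "n/a"})  # multi-typed field
print(idx.lookup("city", "Oslo"), idx.sum_column("temp"), idx.fetch(2))
# → [1, 3] 23 {'city': 'Lima', 'temp': 20}
```

The sketch ignores updates: re-ingesting an existing id would leave stale entries in the inverted index, which a real system has to handle.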

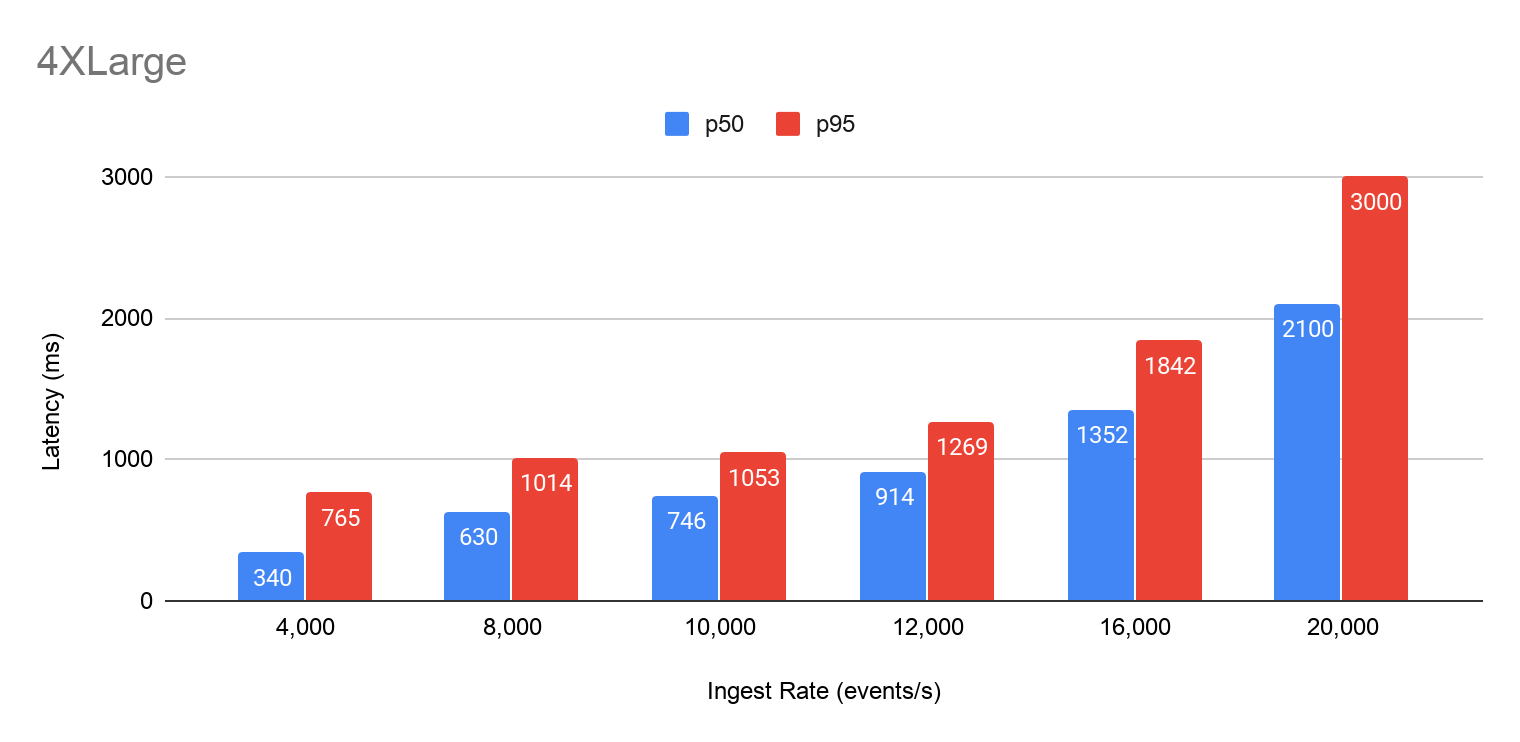

Rockset p50 and p95 data latency using a 4XLarge Virtual Instance at a batch size of 50

The first observation is that a Rockset 4XLarge Virtual Instance can support a billion events flowing in every day (approx. 12K events/sec) while keeping the data latency under 1 second. This write rate is sufficient to support a variety of use cases, ranging from fleet management operations to handling events generated by sensors.

The second observation is that if you have to support a higher write rate, it is as simple as upgrading to the next larger Rockset Virtual Instance. Rockset is scalable, and depending on the amount of resources you dedicate, you can reduce your data latency or support higher write rates. Extrapolating from these benchmark results: an online gaming system that produces 40K events/sec and requires a data latency of 1 second may be satisfied with a Rockset 16XLarge Virtual Instance. Also, moving from one Rockset Virtual Instance to another causes no downtime, which makes such upgrades easy.
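The rate conversions in these observations are simple arithmetic, shown here so they can be checked or reused. The 1,000-byte event size is an assumption based on the roughly 1K-byte events described earlier, used only to estimate raw ingest volume.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400
EVENT_BYTES = 1_000  # assumption: "around 1K bytes" per event


def events_per_second(events_per_day: float) -> float:
    return events_per_day / SECONDS_PER_DAY


def events_per_day(events_per_sec: float) -> float:
    return events_per_sec * SECONDS_PER_DAY


one_billion = 1_000_000_000
print(round(events_per_second(one_billion)))                         # → 11574 ("approx. 12K events/sec")
print(round(events_per_second(one_billion) * EVENT_BYTES / 1e6, 1))  # → 11.6 (MB/s of raw event data)
print(events_per_day(40_000))                                         # → 3456000000
```

So the 40K events/sec gaming example corresponds to roughly 3.5 billion events per day.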

The third observation is that if you are running on a fixed Rockset Virtual Instance and your write rate increases, the benchmark results show a gradual, linear increase in data latency until CPU resources are saturated. In all these cases, the compute on the leaf is the bottleneck, because this is the resource that makes recently written data immediately queryable. Rockset delegates compaction CPU to remote compactors, but some minimal CPU is still needed on the leaves to copy files to and from cloud storage.

Rockset uses a specialized bulk-load mechanism to index stationary data, which can load data at terabytes per hour, but this benchmark does not measure that functionality. This benchmark is purposely designed to measure the data latency of high-velocity data, where new data is arriving at a fast rate and needs to be queried immediately.

Futures

In its current form, the workload generator issues writes at a specified constant rate, but one improvement that users have requested is to make this benchmark simulate a bursty write rate. Another is to add an overwrite feature that overwrites some documents that already exist in the database. Yet another requested feature is to vary the schema of some of the generated documents so that some fields are sparse.
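The three requested features could be prototyped with a couple of helpers like the ones below. This is a hypothetical sketch, not part of RockBench today: `bursty_rate` switches between a base rate and a burst rate on a fixed period, and `next_write` decides whether to create a new document or overwrite an existing one, and whether to include a sparse field. All names, parameters and default values are assumptions.

```python
import random
from typing import List, Tuple


def bursty_rate(t: float, base_rate: float, burst_rate: float, period: float, burst_len: float) -> float:
    """Target events/sec at time t: burst_rate for the first burst_len seconds of every period."""
    return burst_rate if (t % period) < burst_len else base_rate


def next_write(rng: random.Random, written_ids: List[int], next_id: int,
               overwrite_fraction: float = 0.1, sparse_fraction: float = 0.2) -> Tuple[dict, int]:
    """Return (document, updated next_id); reuses an existing _id with probability overwrite_fraction."""
    if written_ids and rng.random() < overwrite_fraction:
        doc_id = rng.choice(written_ids)  # overwrite an existing document
    else:
        doc_id = next_id  # brand-new document
        written_ids.append(doc_id)
        next_id += 1
    doc = {"_id": doc_id, "value": rng.randint(0, 100)}
    if rng.random() < sparse_fraction:
        doc["rare_field"] = "present"  # sparse field: appears in only some documents
    return doc, next_id


print(bursty_rate(2.0, 100, 1000, 60, 5), bursty_rate(30.0, 100, 1000, 60, 5))  # → 1000 100

rng = random.Random(7)
ids: List[int] = []
nid = 0
for _ in range(1000):
    doc, nid = next_write(rng, ids, nid)
print(f"{nid} distinct documents after 1000 writes")
```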

RockBench is designed to be extensible, and we hope that developers in the database community will contribute code to make this benchmark run on other real-time databases as well.

I'm thrilled to see the results of RockBench on Rockset. They demonstrate the value of real-time databases like Rockset in enabling real-time analytics, by supporting streaming ingest of thousands of events per second while keeping data latencies in the low seconds. My hope is that RockBench will give developers an essential tool for measuring data latency and selecting the appropriate real-time database configuration for their application requirements.

