
Why and what to automate

As software engineers and architects, whenever we see repetitive tasks, we immediately think about how to automate them. This simplifies our daily work and allows us to be more efficient and focused on delivering value to the business.

Typical examples of repetitive tasks include scaling compute resources to optimize their usage from a cost and performance perspective, sending automated emails or Slack messages with the results of a SQL query, materializing views or making periodic copies of data for development purposes, exporting data to S3 buckets, and so on.

How Rockset helps with automation

Rockset offers a set of powerful features to help automate common tasks in building and managing data solutions:

- a rich set of APIs, so that every aspect of the platform can be managed via REST

- Query Lambdas: REST API wrappers around your parameterized SQL queries, hosted on Rockset

- scheduling of Query Lambdas: a recently released feature that lets you create schedules for automatic execution of your query lambdas and post the results of those queries to webhooks

- compute-compute separation (along with a shared storage layer), which allows isolation and independent scaling of compute resources

Let's dive deeper into why these are helpful for automation.

Rockset APIs allow you to interact with all of your resources: from creating integrations and collections, to creating virtual instances and resizing, pausing and resuming them, to running query lambdas and plain SQL queries.

Query Lambdas offer a simple, easy-to-use way to decouple consumers of data from the underlying SQL queries, so that you can keep your business logic in one place with full source control, versioning and hosting on Rockset.
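Because a query lambda is exposed as a REST endpoint, any HTTP client can execute it. The Python sketch below only builds such a request without sending it. The endpoint path `/v1/orgs/self/ws/{workspace}/lambdas/{lambda}/tags/{tag}`, the `ApiKey` authorization scheme, the `parameters` body shape and the regional host are assumptions based on Rockset's public API reference and should be verified there; the lambda name `ItemsSoldToday` and the parameter `event_type` are hypothetical.

```python
import json
import urllib.request

# Assumed regional API host (hypothetical); use the one for your Rockset region.
API_HOST = "https://api.usw2a1.rockset.com"


def build_lambda_request(workspace: str, lambda_name: str, tag: str,
                         api_key: str, parameters: dict) -> urllib.request.Request:
    """Build (but do not send) a request that executes a tagged query lambda.

    The URL layout, auth scheme and body shape are assumptions to verify
    against the Rockset API reference.
    """
    body = {
        # Each SQL parameter is passed as a name/type/value triple.
        "parameters": [
            {"name": name, "type": "string", "value": str(value)}
            for name, value in parameters.items()
        ]
    }
    url = f"{API_HOST}/v1/orgs/self/ws/{workspace}/lambdas/{lambda_name}/tags/{tag}"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"ApiKey {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_lambda_request("Demo-Ecommerce", "ItemsSoldToday", "latest",
                               "MY_API_KEY", {"event_type": "Checkout"})
    print(req.get_method(), req.full_url)
```

Passing the resulting request to `urllib.request.urlopen` would actually execute the lambda; keeping construction separate makes it easy to inspect what will be sent.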

Scheduled execution of query lambdas enables you to create cron schedules that automatically execute query lambdas and optionally post the results of those queries to webhooks. These webhooks can be hosted outside Rockset (to further automate your workflow, for example to write data back to a source system or send an email), but you can also call Rockset APIs and perform tasks like resizing a virtual instance or even creating or resuming one.

Compute-compute separation allows you to have dedicated, isolated compute resources (virtual instances) per use case. This means you can independently scale and size your ingestion VI and one or more secondary VIs used for querying data. Rockset is the first real-time analytics database to offer this feature.

With the combination of these features, you can automate everything you need (except maybe brewing your coffee)!

Typical use cases for automation

Let's now look at typical use cases for automation and show how you would implement them in Rockset.

Use case 1: Sending automated alerts

Oftentimes, there are requirements to send automated alerts throughout the day with the results of SQL queries. These can be either business-related (like common KPIs the business is interested in) or more technical (like finding out how many queries ran slower than 3 seconds).

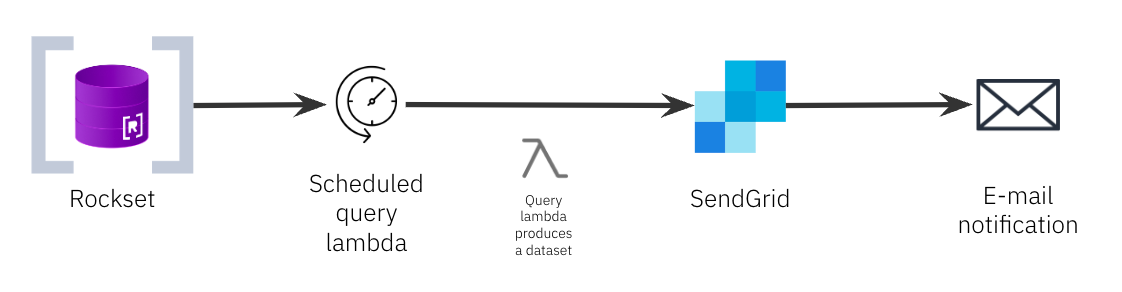

Using scheduled query lambdas, we can run a SQL query against Rockset and post the results of that query to an external endpoint such as an email provider or Slack.

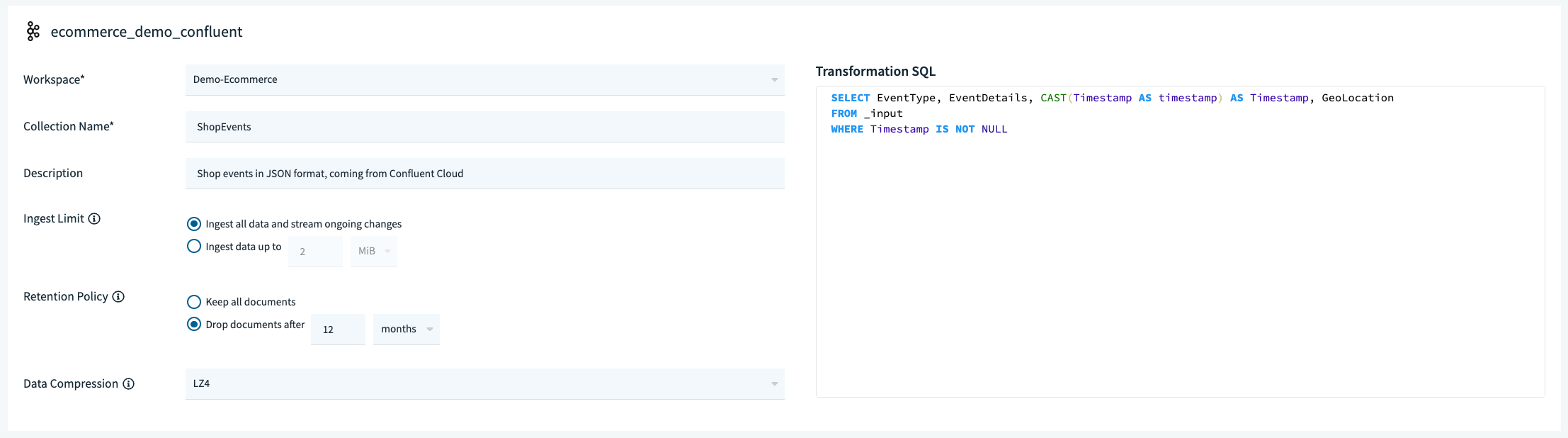

Let's look at an e-commerce example. We have a collection called ShopEvents with raw real-time events from a webshop. Here we track every click on every product in our webshop, and then ingest this data into Rockset via Confluent Cloud. We're interested in knowing how many items were sold on our webshop today, and we want to deliver this data via email to our business users every six hours.

We'll create a query lambda with the following SQL query on our ShopEvents collection:

```sql
SELECT
    COUNT(*) AS ItemsSold
FROM
    "Demo-Ecommerce".ShopEvents
WHERE
    Timestamp >= CURRENT_DATE()
    AND EventType = 'Checkout';
```

We'll then use SendGrid to send an email with the results of that query. We won't go through the steps of setting up SendGrid; you can follow them in their documentation.

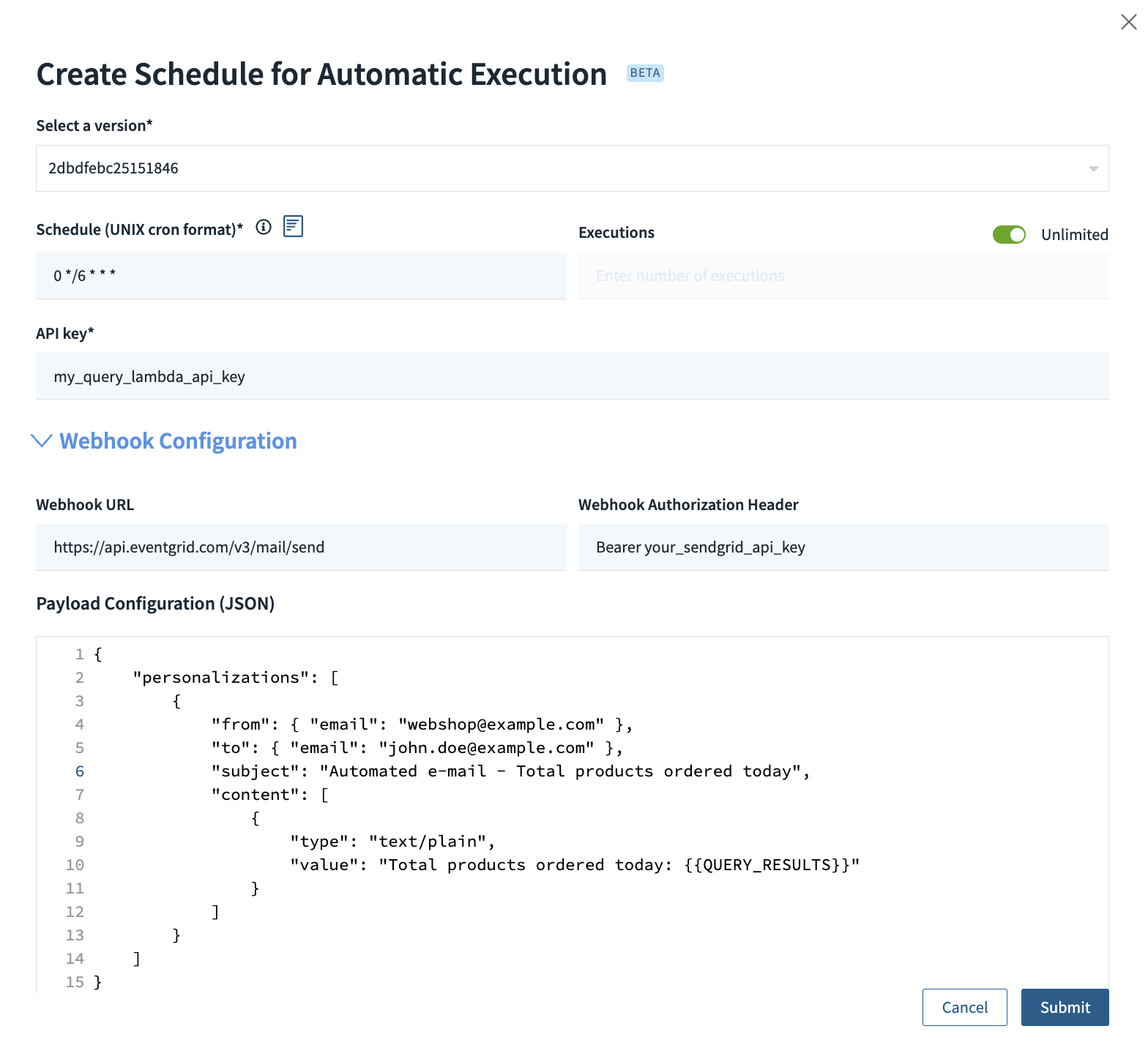

Once you've got an API key from SendGrid, you can create a schedule for your query lambda like this, with a cron schedule of `0 */6 * * *` to run every 6 hours:

This will call the SendGrid REST API every 6 hours and trigger an email with the total number of items sold that day.
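Cron fields are, in order, minute, hour, day of month, month and day of week; `*/6` in the hour field means "every sixth hour, starting at 0". The small Python helper below is our own illustration, not a Rockset API: it expands a single cron field and lists the times a `minute hour * * *` expression fires on a given day. It ignores the day and month fields and time zones, so check which time zone your schedule is evaluated in.

```python
from datetime import datetime


def expand_field(field: str, low: int, high: int) -> list[int]:
    """Expand one cron field such as "*", "*/6", "1-5", "0,30" or "9"."""
    values = set()
    for part in field.split(","):
        step = 1
        has_step = "/" in part
        if has_step:
            part, step_text = part.split("/")
            step = int(step_text)
        if part == "*":
            start, end = low, high
        elif "-" in part:
            start, end = (int(x) for x in part.split("-"))
        else:
            start = int(part)
            # "5/15" means "from 5 to the top of the range, every 15".
            end = high if has_step else start
        values.update(range(start, end + 1, step))
    return sorted(values)


def fire_times_on(day: datetime, expr: str) -> list[datetime]:
    """Times on `day` matched by the minute and hour fields of `expr`."""
    minute_field, hour_field = expr.split()[:2]
    return [
        day.replace(hour=h, minute=m, second=0, microsecond=0)
        for h in expand_field(hour_field, 0, 23)
        for m in expand_field(minute_field, 0, 59)
    ]


if __name__ == "__main__":
    # prints 00:00, 06:00, 12:00 and 18:00 on separate lines
    for t in fire_times_on(datetime(2024, 5, 1), "0 */6 * * *"):
        print(t.strftime("%H:%M"))
```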

{{QUERY_ID}} and {{QUERY_RESULTS}} are template values that Rockset provides automatically for scheduled query lambdas, so that you can use the ID of the query and the resulting dataset in your webhook calls. In this case, we're only interested in the query results.
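To make the webhook call concrete, here is a Python sketch of the request shape that SendGrid's v3 Mail Send endpoint expects: a bearer-token `Authorization` header and a JSON body with `personalizations`, `from`, `subject` and `content`. In the real setup Rockset fills `{{QUERY_RESULTS}}` into the payload you configure; here `json.dumps` merely stands in for that serialization, and the email addresses, subject and sample row are placeholders.

```python
import json

SENDGRID_URL = "https://api.sendgrid.com/v3/mail/send"


def build_sendgrid_request(query_results: list, to_email: str,
                           from_email: str, api_key: str):
    """Return (url, headers, body) for a plain-text email carrying the results."""
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    body = {
        "personalizations": [{"to": [{"email": to_email}]}],
        "from": {"email": from_email},
        "subject": "Items sold today",
        # Stand-in for the {{QUERY_RESULTS}} placeholder that Rockset fills in.
        "content": [{"type": "text/plain", "value": json.dumps(query_results)}],
    }
    return SENDGRID_URL, headers, body


if __name__ == "__main__":
    url, headers, body = build_sendgrid_request(
        [{"ItemsSold": 42}], "sales@example.com", "alerts@example.com", "SG.xxx")
    print(url)
    print(json.dumps(body, indent=2))
```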

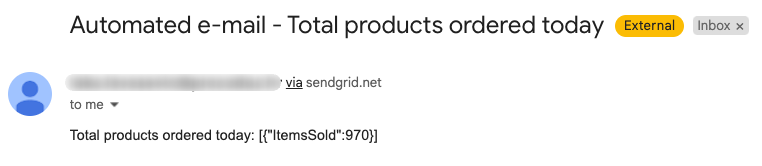

After enabling this schedule, this is what you'll get in your inbox:

You could do the same with the Slack API or any other provider that accepts POST requests and Authorization headers, and you've got your automated alerts set up!

If you're interested in sending alerts for slow queries, check out setting up Query Logs, where you can see a list of historical queries and their performance.

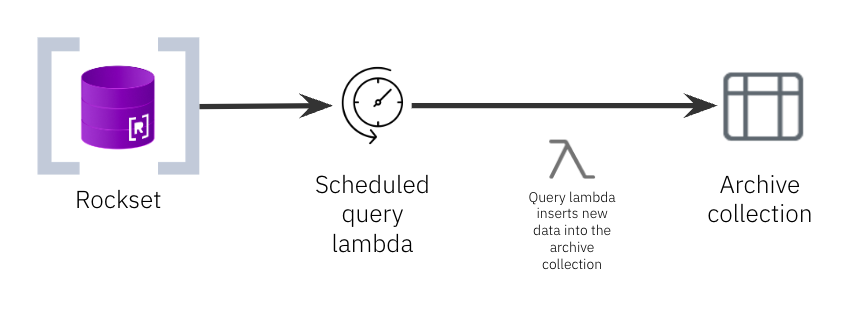

Use case 2: Creating materialized views or development datasets

Rockset supports automatic real-time rollups on ingestion for some data sources. However, if you need to create additional materialized views with more complex logic, or you need a copy of your data for other purposes (like archival or development of new features), you can do it periodically with a scheduled query lambda that runs an INSERT INTO statement. INSERT INTO is a convenient way to insert the results of a SQL query into an existing collection (either the same collection or a completely different one).

Let's look at our e-commerce example again. We have a data retention policy set on our ShopEvents collection so that events older than 12 months are automatically removed from Rockset.

However, for sales analytics purposes, we want to make a copy of specific events, namely those where the event was a product order. For this, we'll create a new collection called OrdersAnalytics without any data retention policy. We'll then periodically insert data into this collection from the raw events collection before the data gets purged.

We can do this by creating a SQL query that gets all Checkout events for the previous day:

```sql
INSERT INTO "Demo-Ecommerce".OrdersAnalytics
SELECT
    e.EventId AS _id,
    e.Timestamp,
    e.EventType,
    e.EventDetails,
    e.GeoLocation
FROM
    "Demo-Ecommerce".ShopEvents e
WHERE
    e.Timestamp BETWEEN CURRENT_DATE() - DAYS(1) AND CURRENT_DATE()
    AND e.EventType = 'Checkout';
```

Note the `_id` field we're using in this query: it ensures that we don't get any duplicates in our orders collection. See the Rockset documentation for how it handles upserts automatically.
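Why does `_id` prevent duplicates? Writing a document whose `_id` already exists replaces that document instead of adding a second one, so re-running the copy is idempotent. The Python sketch below models this with a dictionary keyed by `_id` (a simplification of upsert semantics, not Rockset's implementation) and shows that an event at exactly midnight, which the inclusive `BETWEEN` window picks up on two consecutive days, is still stored only once. The events are made-up samples and times are treated as naive datetimes.

```python
from datetime import datetime, time, timedelta


def previous_day_window(run_at: datetime) -> tuple[datetime, datetime]:
    """Mirror of CURRENT_DATE() - DAYS(1) .. CURRENT_DATE() for a given run time."""
    today = datetime.combine(run_at.date(), time.min)
    return today - timedelta(days=1), today


def run_daily_copy(events: list[dict], target: dict, run_at: datetime) -> dict:
    """Copy the previous day's Checkout events into `target`, keyed by _id."""
    start, end = previous_day_window(run_at)
    for e in events:
        # BETWEEN is inclusive on both ends, as in the SQL query.
        if start <= e["Timestamp"] <= end and e["EventType"] == "Checkout":
            # Same _id: the existing document is replaced, not duplicated.
            target[e["EventId"]] = {**e, "_id": e["EventId"]}
    return target


if __name__ == "__main__":
    events = [
        {"EventId": "e1", "Timestamp": datetime(2024, 5, 1, 10), "EventType": "Checkout"},
        {"EventId": "e2", "Timestamp": datetime(2024, 5, 1, 11), "EventType": "View"},
        {"EventId": "e3", "Timestamp": datetime(2024, 5, 2, 0), "EventType": "Checkout"},
        {"EventId": "e4", "Timestamp": datetime(2024, 5, 2, 15), "EventType": "Checkout"},
    ]
    orders = {}
    run_daily_copy(events, orders, datetime(2024, 5, 2, 1))  # copies e1 and e3
    run_daily_copy(events, orders, datetime(2024, 5, 2, 1))  # retry: no change
    run_daily_copy(events, orders, datetime(2024, 5, 3, 1))  # e3 again, plus e4
    print(sorted(orders))  # ['e1', 'e3', 'e4']
```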

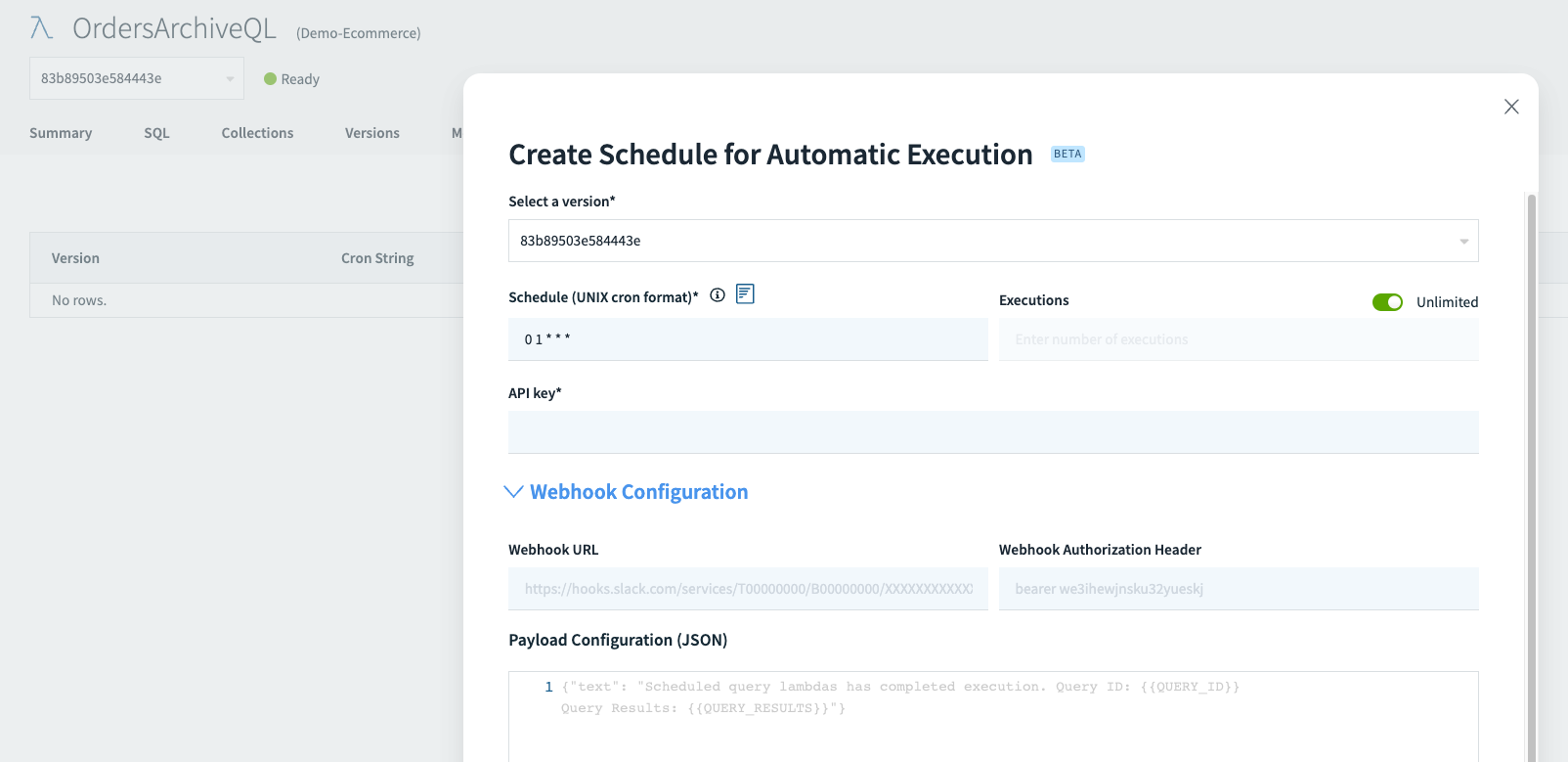

Then we create a query lambda with this SQL query and create a schedule to run it once a day at 1 AM, with a cron schedule of `0 1 * * *`. We don't need to do anything with a webhook, so that part of the schedule definition stays empty.

That's it: we now have daily product orders saved in our OrdersAnalytics collection, ready for use.

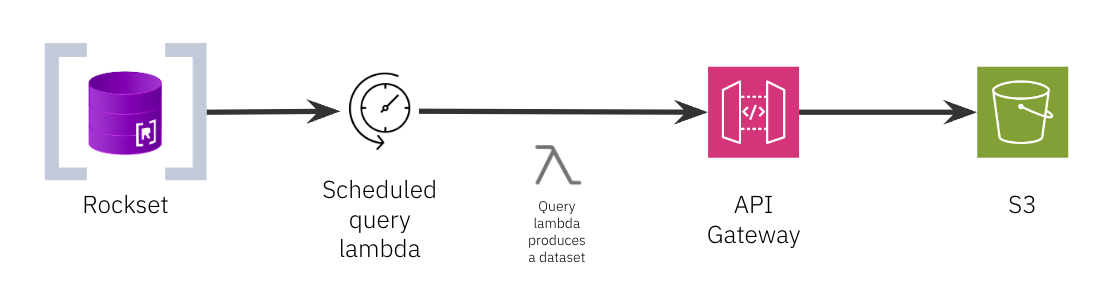

Use case 3: Periodic exporting of data to S3

You can use scheduled query lambdas to periodically execute a SQL query and export its results to a destination of your choice, such as an S3 bucket. This is useful when you need to export data regularly, for example to back up data, create reports or feed data into downstream systems.

In this example, we'll again work with our e-commerce dataset and use AWS API Gateway to create a webhook that our query lambda can call to export the results of a query into an S3 bucket.

As in our previous example, we'll write a SQL query that gets all events from the previous day, joins them with product metadata, and save this query as a query lambda. This is the dataset we want to export to S3 periodically.

```sql
SELECT
    e.Timestamp,
    e.EventType,
    e.EventDetails,
    e.GeoLocation,
    p.ProductName,
    p.ProductCategory,
    p.ProductDescription,
    p.Price
FROM
    "Demo-Ecommerce".ShopEvents e
    INNER JOIN "Demo-Ecommerce".Products p ON e.EventDetails.ProductID = p._id
WHERE
    e.Timestamp BETWEEN CURRENT_DATE() - DAYS(1) AND CURRENT_DATE();
```

Next, we'll need to create an S3 bucket and set up AWS API Gateway with an IAM role and policy so that the API Gateway can write data to S3. In this blog, we'll focus on the API Gateway part; be sure to check the AWS documentation on how to create an S3 bucket and the IAM role and policy.

Follow these steps to set up AWS API Gateway so it's ready to communicate with our scheduled query lambda:

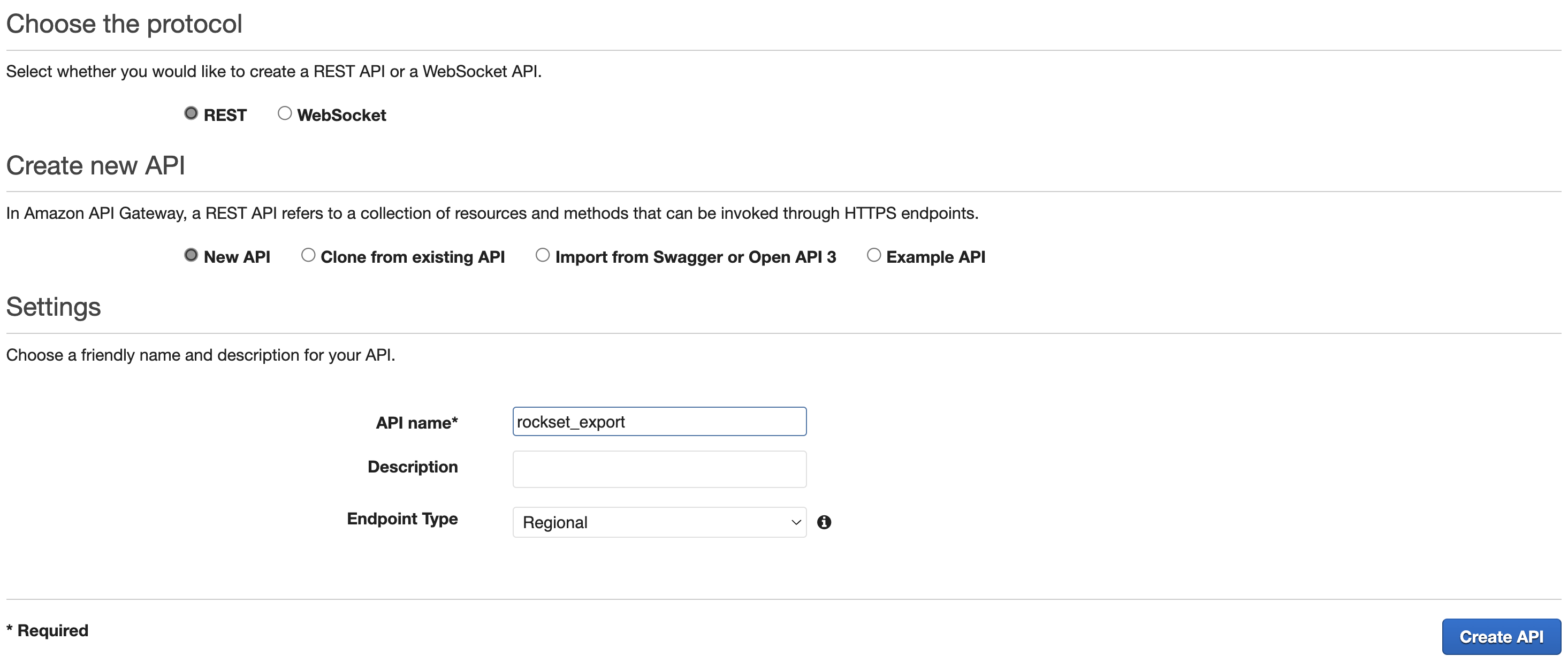

- Create a REST API software within the AWS API Gateway service, we will name it

rockset_export:

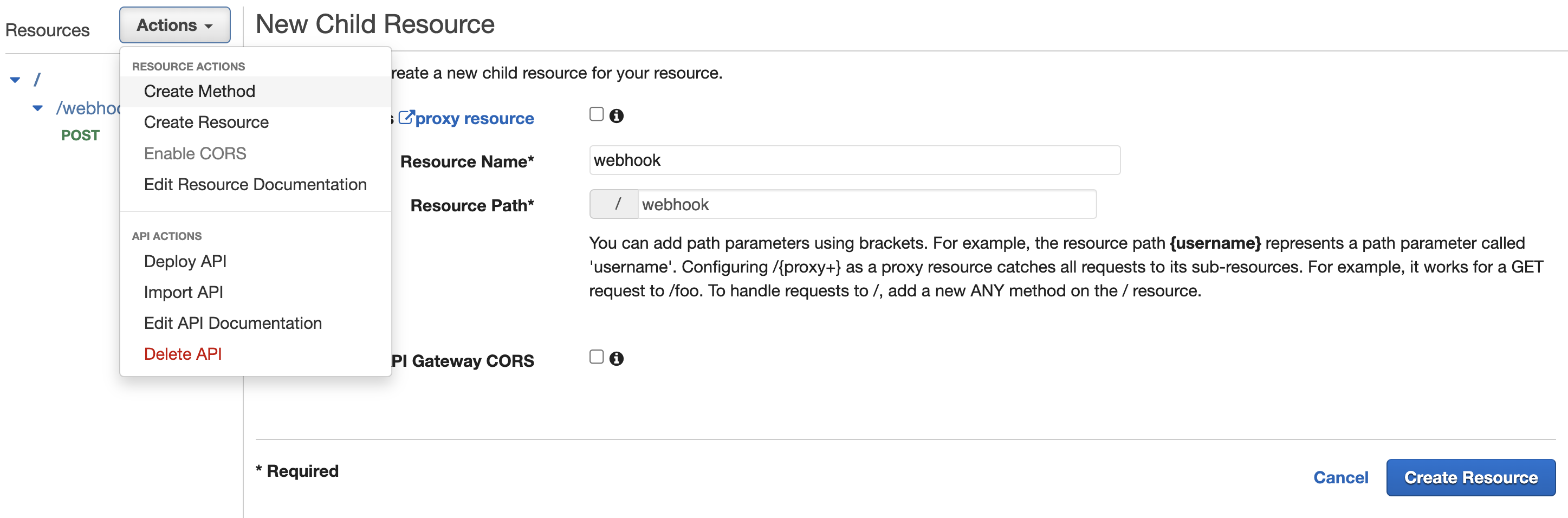

- Create a brand new useful resource which our question lambdas will use, we’ll name it

webhook:

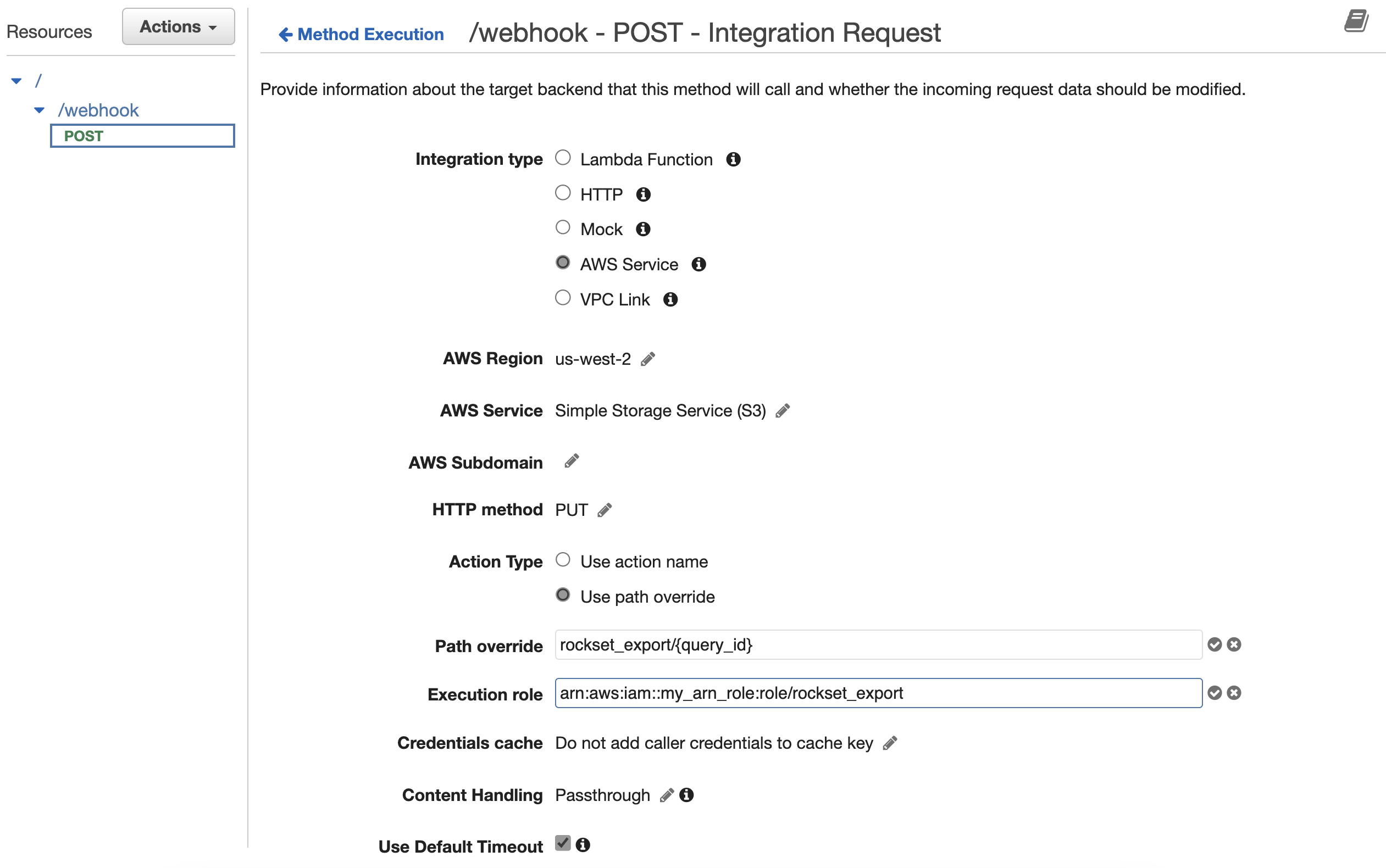

- Create a brand new POST methodology utilizing the settings beneath – this primarily permits our endpoint to speak with an S3 bucket known as

rockset_export:

- AWS Area:

Area to your S3 bucket - AWS Service:

Easy Storage Service (S3) - HTTP methodology:

PUT - Motion Kind:

Use path override - Path override (elective):

rockset_export/{question _id}(change together with your bucket identify) - Execution position:

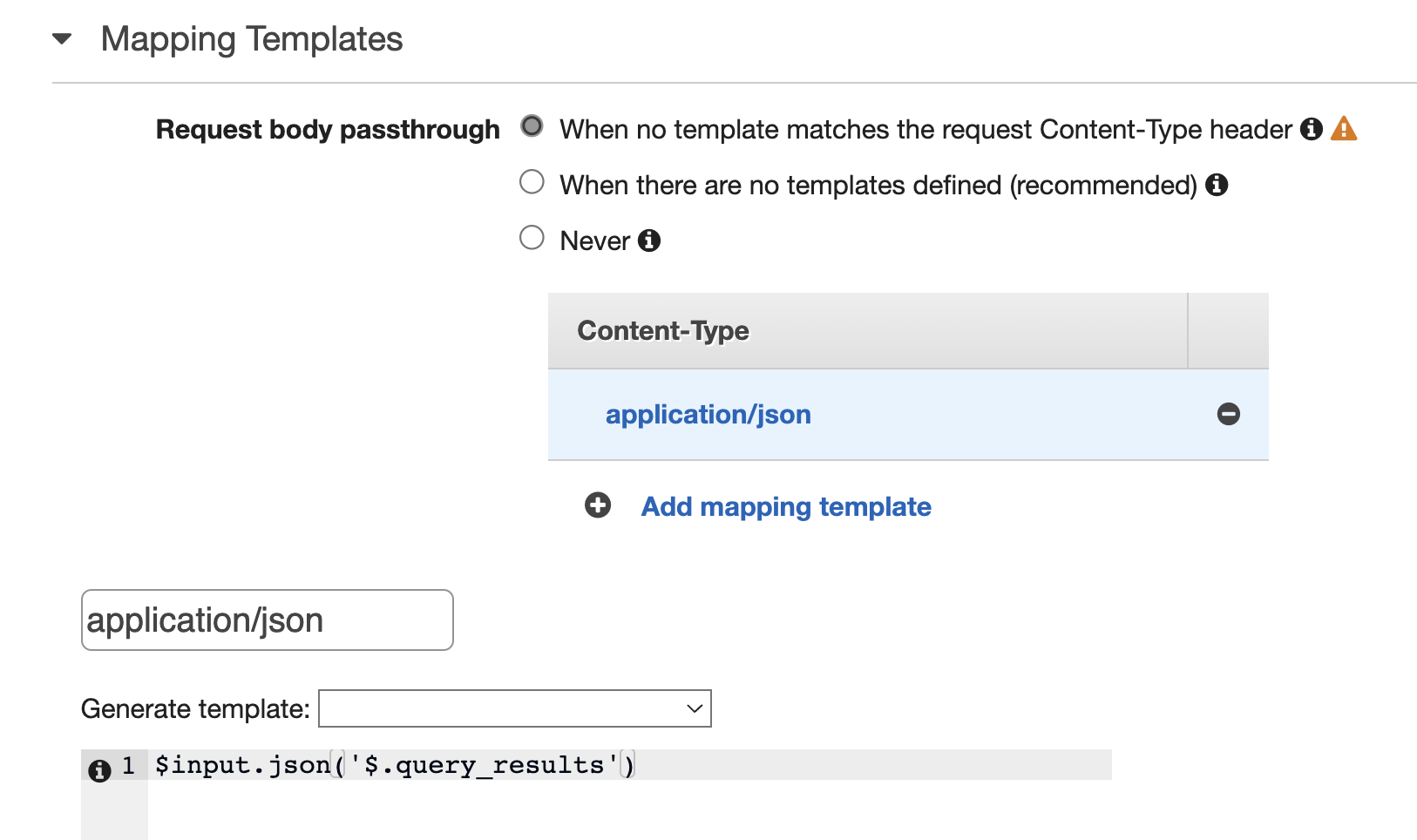

arn:awsiam::###:position/rockset_export(change together with your ARN position) - Setup URL Path Parameters and Mapping Templates for the Integration Request – this may extract a parameter known as

query_idfrom the physique of the incoming request (we’ll use this as a reputation for recordsdata saved to S3) andquery_resultswhich we’ll use for the contents of the file (that is the results of our question lambda):

Once that's done, we can deploy our API Gateway to a Stage, and we're ready to call this endpoint from our scheduled query lambda.

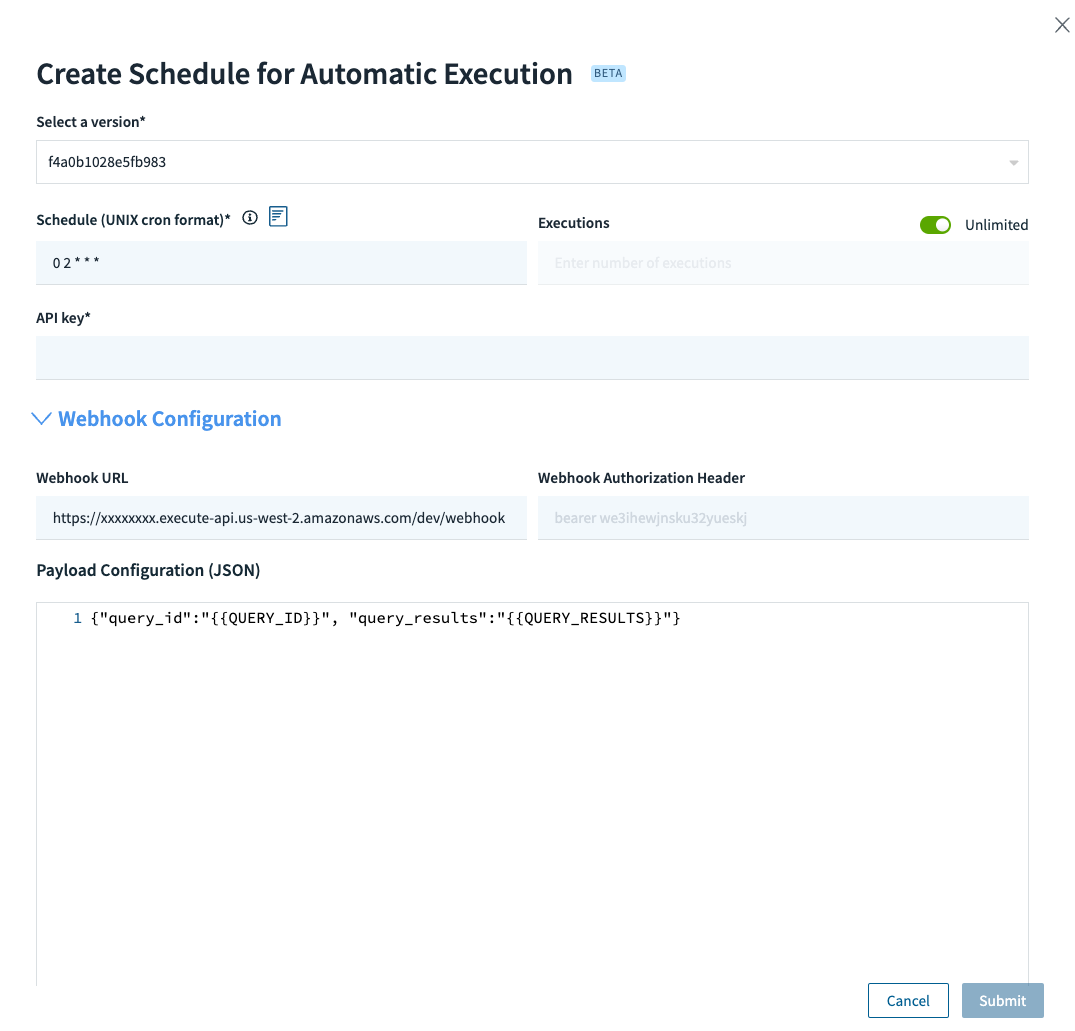

Let's now configure the schedule for our query lambda. We can use a cron schedule of `0 2 * * *` so that our query lambda runs at 2 AM and produces the dataset we need to export. We'll call the webhook we created in the previous steps and supply `query_id` and `query_results` as parameters in the body of the POST request:

We're using {{QUERY_ID}} and {{QUERY_RESULTS}} in the payload configuration and passing them to the API Gateway, which uses them when exporting to S3 as the name of the file (the ID of the query) and its contents (the result of the query), as described in step 4 above.
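The end-to-end data flow can be modeled locally in a few lines of Python. This is only a sketch: `PAYLOAD_TEMPLATE` is our guess at a schedule payload (it assumes `{{QUERY_RESULTS}}` is substituted as raw JSON, which you should confirm), `render_payload` stands in for Rockset's placeholder substitution, and `gateway_mapping` models what the Integration Request mapping does, turning `query_id` into the S3 path under the path override and `query_results` into the object body. None of these names come from Rockset or AWS.

```python
import json

# Hypothetical schedule payload; assumes {{QUERY_RESULTS}} is inserted as raw JSON.
PAYLOAD_TEMPLATE = '{"query_id": "{{QUERY_ID}}", "query_results": {{QUERY_RESULTS}}}'


def render_payload(query_id: str, results: list) -> str:
    """Local stand-in for Rockset's placeholder substitution (illustration only)."""
    return (PAYLOAD_TEMPLATE
            .replace("{{QUERY_ID}}", query_id)
            .replace("{{QUERY_RESULTS}}", json.dumps(results)))


def gateway_mapping(body: str, bucket: str = "rockset_export") -> tuple[str, str]:
    """Model of the Integration Request mapping: the S3 path comes from
    query_id (path override bucket/{query_id}), the file body from query_results."""
    request = json.loads(body)
    s3_path = f"{bucket}/{request['query_id']}"
    content = json.dumps(request["query_results"])
    return s3_path, content


if __name__ == "__main__":
    sample = [{"EventType": "Checkout", "ProductName": "Mug", "Price": 9.99}]
    path, content = gateway_mapping(render_payload("abc-123", sample))
    print(path)     # rockset_export/abc-123
    print(content)
```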

Once we save this schedule, we have an automated task that runs every morning at 2 AM, grabs a snapshot of our data and sends it to an API Gateway webhook, which exports it to an S3 bucket.

Use case 4: Scheduled resizing of virtual instances

Rockset supports auto-scaling virtual instances, but if your workload has predictable or well-understood usage patterns, you can benefit from scaling your compute resources up or down on a set schedule.

That way, you can optimize both spend (so that you don't over-provision resources) and performance (so that you have more compute power ready when your users want to use the system).

An example could be a B2B use case where your customers work primarily during business hours, let's say 9 AM to 5 PM on work days, so you need more compute resources during those times.

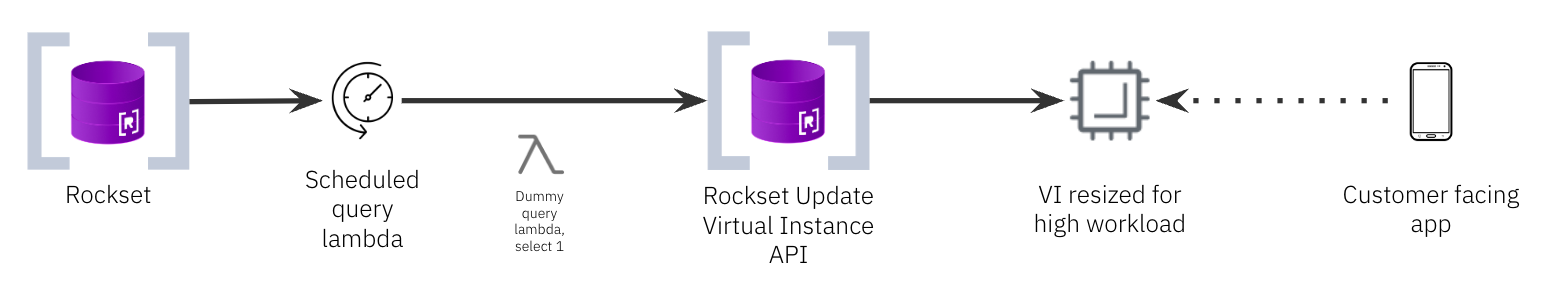

To handle this use case, you can create a scheduled query lambda that calls Rockset's virtual instance endpoint and scales the VI up and down based on a cron schedule.

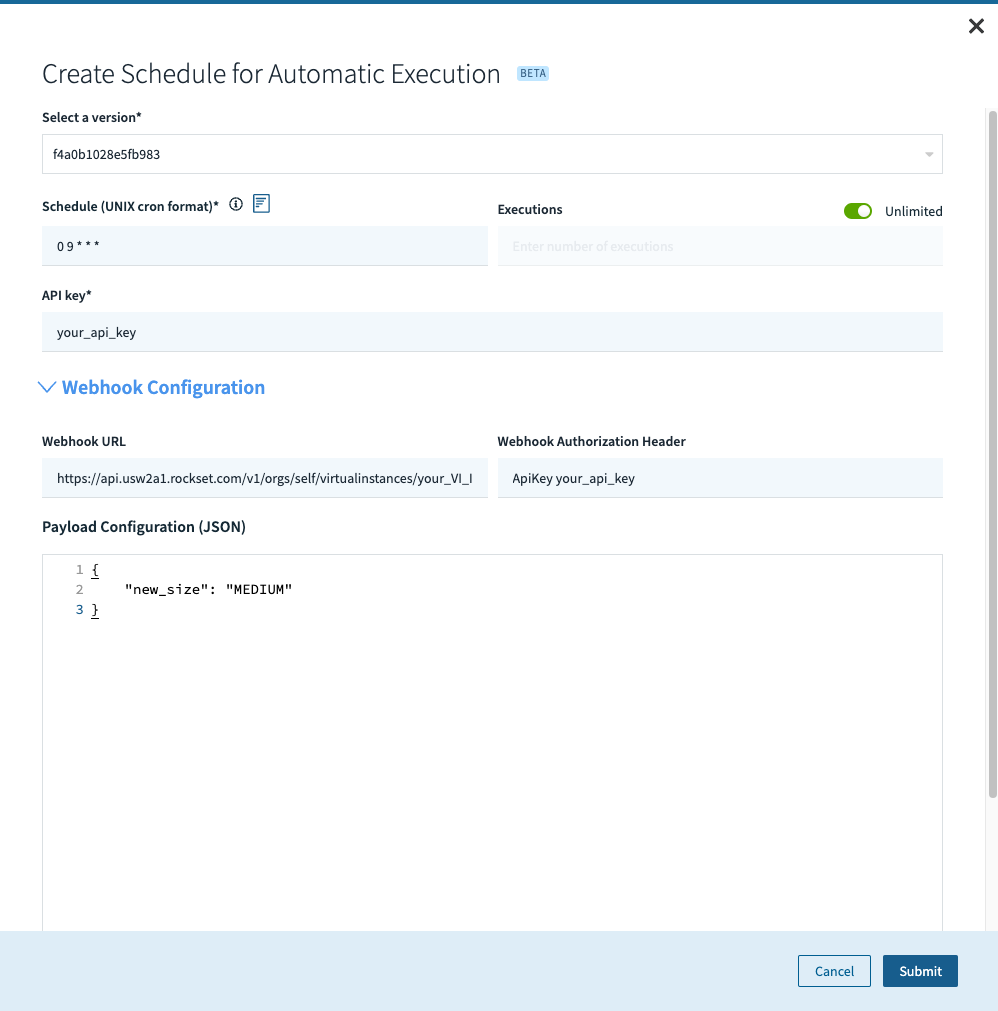

Follow these steps:

- Create a question lambda with only a

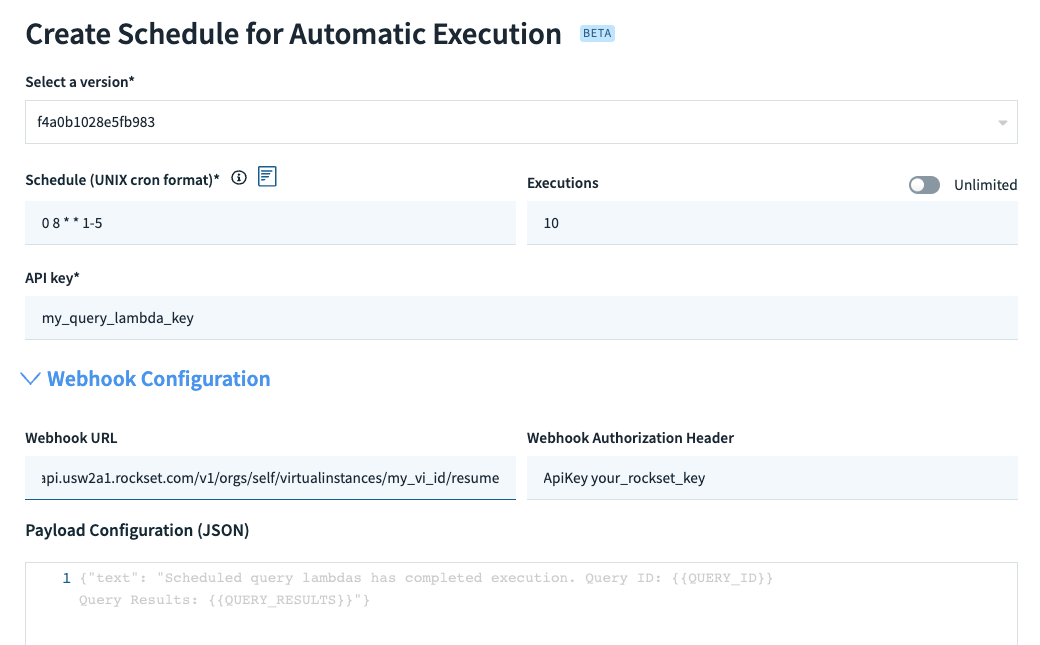

choose 1question, since we don’t really need any particular information for this to work. - Create a schedule for this question lambda. In our case, we wish to execute as soon as a day at 9 AM so our cron schedule shall be

0 9 * * *and we are going to set limitless variety of executions in order that it runs each day indefinitely. - We’ll name the replace digital occasion webhook for the precise VI that we wish to scale up. We have to provide the digital occasion ID within the webhook URL, the authentication header with the API key (it wants permissions to edit the VI) and the parameter with the

NEW_SIZEset to one thing likeMEDIUMorLARGEwithin the physique of the request.

We can repeat steps 1-3 to create a new schedule for scaling the VI down, changing the cron schedule to something like 5 PM (`0 17 * * *`) and using a smaller size for the `NEW_SIZE` parameter.
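Each of these webhook calls boils down to one authenticated POST. The Python sketch below builds (without sending) the scale-up and scale-down requests for the 9 AM and 5 PM schedules. The endpoint path `/v1/orgs/self/virtualinstances/{id}`, the lowercase `new_size` body field (our assumption for the parameter called `NEW_SIZE` above), the POST method, the `ApiKey` header scheme and the host are all assumptions to verify against Rockset's API reference; the VI ID and API key are placeholders.

```python
import json
import urllib.request

# Assumed regional API host (hypothetical); use the one for your Rockset region.
API_HOST = "https://api.usw2a1.rockset.com"


def build_resize_request(vi_id: str, new_size: str,
                         api_key: str) -> urllib.request.Request:
    """Build (not send) an 'update virtual instance' request; API shape assumed."""
    return urllib.request.Request(
        f"{API_HOST}/v1/orgs/self/virtualinstances/{vi_id}",
        data=json.dumps({"new_size": new_size}).encode("utf-8"),
        headers={"Authorization": f"ApiKey {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


# One schedule per direction: scale up for business hours, down afterwards.
SCHEDULES = {
    "0 9 * * *": "LARGE",
    "0 17 * * *": "SMALL",
}

if __name__ == "__main__":
    for cron, size in SCHEDULES.items():
        req = build_resize_request("my-vi-id", size, "MY_API_KEY")
        print(cron, req.get_method(), req.full_url, req.data.decode())
```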

Use case 5: Setting up data analyst environments

With Rockset's compute-compute separation, it's easy to spin up dedicated, isolated and scalable environments for ad hoc data analysis. Each use case can have its own virtual instance, ensuring that a production workload stays stable and performant, with the best price-performance for that workload.

In this scenario, let's assume we have data analysts or data scientists who want to run ad hoc SQL queries to explore data and work on various data models as part of a new feature the business wants to roll out. They need access to collections and they need compute resources, but we don't want them to create or scale those resources on their own.

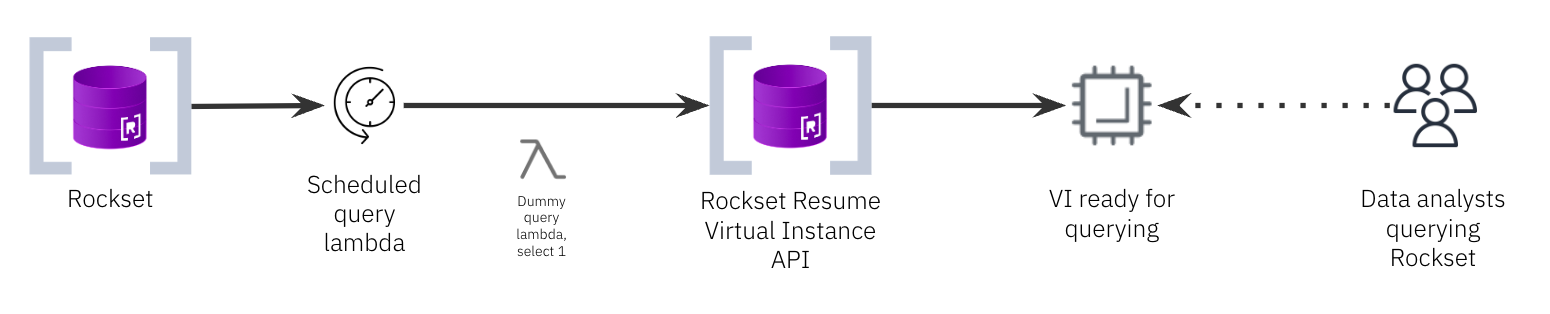

To meet this requirement, we can create a new virtual instance dedicated to data analysts, ensure that they can't edit or create VIs by creating a custom RBAC role and assigning the analysts to it, and then create a scheduled query lambda that resumes the virtual instance every morning so that the analysts have an environment ready when they log into the Rockset console. We could even combine this with use case 2, creating a daily snapshot of production data in a separate collection and having the analysts work on that dataset from their virtual instance.

The steps for this use case are similar to those for scaling the VIs up and down:

1. Create a query lambda with just a `SELECT 1` query, since we don't actually need any specific data for this to work.
2. Create a schedule for this query lambda, let's say daily at 8 AM Monday to Friday, and limit it to 10 executions because we want it to run only for the next 2 work weeks. Our cron schedule will be `0 8 * * 1-5`.
3. Call the resume VI endpoint. We need to supply the virtual instance ID in the webhook URL and the authentication header with the API key (the key needs permissions to resume the VI). We don't need any parameters in the body of the request.

That's it! Now we have a working environment for our data analysts and data scientists that's up and running for them every work day at 8 AM. We can configure the VI to auto-suspend after a certain number of hours, or add another scheduled execution that suspends the VI on a set schedule.

As demonstrated above, Rockset offers a set of useful features for automating common tasks in building and maintaining data solutions. The rich set of APIs, combined with the power of query lambdas and scheduling, allows you to implement and automate workflows that are completely hosted and run in Rockset, so that you don't have to rely on third-party components or set up infrastructure to automate repetitive tasks.

We hope this blog gave you a few ideas on how to automate tasks in Rockset. Give it a try and let us know how it works!
