
Nothing to Fear

Migration is often seen as a four-letter word in IT: something to avoid, something to fear and definitely not something to do on a whim. It's an understandable position given the risk and horror stories associated with "Migration Projects". This blog outlines best practices from customers I've helped migrate from Elasticsearch to Rockset, reducing risk and avoiding common pitfalls.

With our confidence boosted, let's take a look at Elasticsearch. Elasticsearch has become ubiquitous as an index-centric datastore for search and rose in tandem with the popularity of the internet and Web 2.0. It's based on Apache Lucene and often combined with other tools like Logstash and Kibana (and Beats) to form the ELK stack, with the expected accompaniment of cute elk caricatures. It remains so popular today that Rockset engineers use it for our own internal log search functions.

As any prom queen will tell you, popularity comes at a cost. Elasticsearch became so popular that folks wanted to see what else it could do, or simply assumed it could cover a slew of use cases, including real-time analytics. The lack of proper joins, immutable indexes that need constant vigilance, a tightly coupled compute and storage architecture, and the highly specific domain knowledge needed to develop and operate it have left many engineers seeking alternatives.

Rockset has helped to close the gaps with Elasticsearch for real-time analytics use cases. As a result, companies are flocking to Rockset: Command Alkon for real-time logistics tracking, Seesaw for product analytics, Sequoia for internal investment tools, and Whatnot and Zembula for personalization. These companies migrated to Rockset in days or weeks, not months or years, leveraging the power and simplicity of a cloud-native database. In this blog, we distill their migration journeys into 5 steps.

Step 1: Data Acquisition

Elasticsearch is not the system of record, which means the data it serves for real-time analytics comes from somewhere else.

Rockset has built-in connectors to stream real-time data for testing and simulating production workloads, including Apache Kafka, Kinesis and Event Hubs. For database sources, you can use CDC streams and Rockset will materialize the change data into the current state of your table. No additional tooling is needed, unlike Elasticsearch, where you have to configure Logstash or Beats along with a queueing system to ingest data.

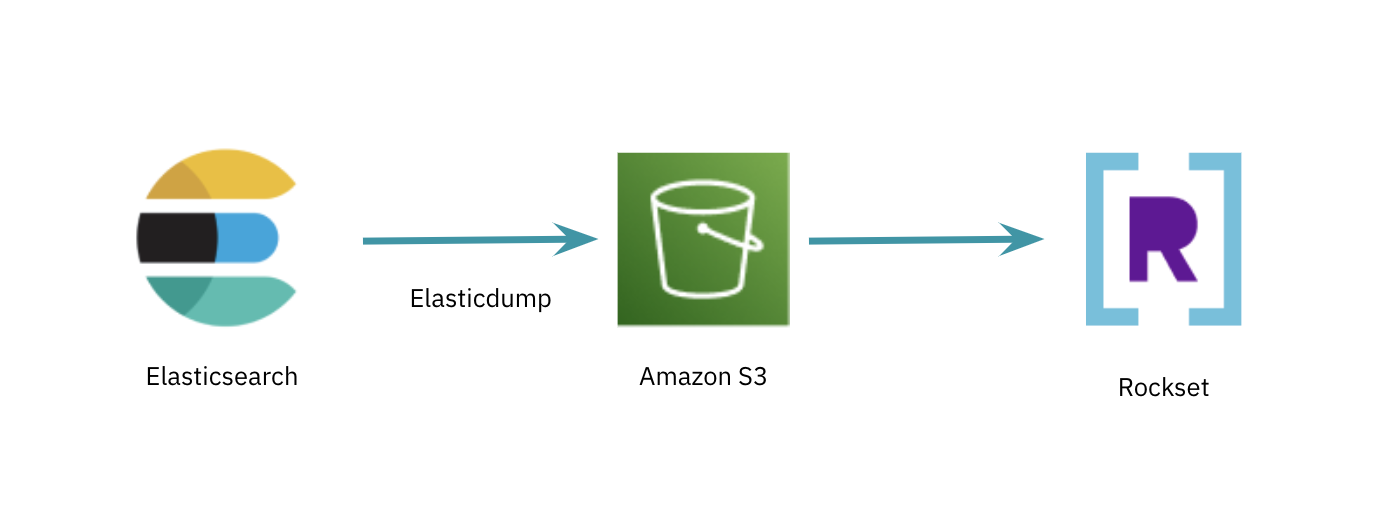

However, if you want to quickly test query performance in Rockset, one option is to do an export from Elasticsearch using the aptly named elasticdump utility. The exported JSON-formatted data can be deposited into an object store like S3, GCS or Azure Blob and ingested into Rockset using managed integrations. This is a quick way to ingest large data sets into Rockset to start testing query speeds.

Figure 1: The process of exporting data from Elasticsearch into Rockset for a quick performance test
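To make the export path concrete, here is a minimal Python sketch of reshaping an elasticdump file before uploading it to an object store. It assumes each exported line is a JSON object with the document body under `_source` and the Elasticsearch ID under `_id`; the `es_id` field name and the sample documents are hypothetical, chosen only for illustration. Check the shape of your own export before relying on it.

```python
import json

def flatten_dump_lines(lines):
    """Yield one flat document per exported line.

    Assumption: every non-blank line is a JSON object whose document
    body is stored under "_source" and whose ID is stored under "_id".
    """
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        hit = json.loads(line)
        doc = dict(hit.get("_source", {}))
        # Preserve the original ID under a hypothetical field name.
        doc.setdefault("es_id", hit.get("_id"))
        yield doc

def to_ndjson(lines):
    """Return newline-delimited JSON, one document per line."""
    return "\n".join(
        json.dumps(doc, sort_keys=True) for doc in flatten_dump_lines(lines)
    )

# Hypothetical sample of two exported documents.
sample = [
    '{"_index": "orders", "_id": "1", "_source": {"tenant_id": "a", "amount": 3}}',
    '',
    '{"_index": "orders", "_id": "2", "_source": {"tenant_id": "b", "amount": 5}}',
]
ndjson = to_ndjson(sample)
print(ndjson)
```

Flattening is optional. It simply keeps the ingested documents free of Elasticsearch envelope fields such as `_index`.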

Rockset has schemaless ingest and indexes all attributes in a fully mutable Converged Index, which combines a search index, columnar store and row store. Additionally, Rockset supports SQL joins, so no data denormalization is required upstream. This removes the need for complex ETL pipelines, so data can be available for querying within 2 seconds of when it was generated.

Step 2: Ingest Transformations

Rockset uses SQL to express how data should be transformed before it is indexed and stored. The simplest form of this ingest transformation SQL would look like this:

SELECT *

FROM _input

Here _input is the source data being ingested and does not depend on the source type. The following are some common ingest transformations we see with teams migrating Elasticsearch workloads.

Time Series

You will often have events or records with a timestamp and want to search based on a range of time. This type of query is fully supported in Rockset with the simple caveat that the attribute must be indexed as the appropriate data type. Your ingest transformation query might look like this:

SELECT TRY_CAST(my_timestamp AS timestamp) AS my_timestamp,

* EXCEPT(my_timestamp)

FROM _input

Text Search

Rockset is capable of simple text search, indexing arrays of scalars to support these search queries. Rockset generates the arrays from text using functions like TOKENIZE, SUFFIXES and NGRAMS. Here's an example:

SELECT NGRAMS(my_text_string, 1, 3) AS my_text_array,

* FROM _input
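To show why an array of n-grams supports substring-style search, here is a small Python sketch of character n-grams. It illustrates the general technique only and does not claim to reproduce the exact output of Rockset's NGRAMS function, whose ordering and normalization may differ. The function names are hypothetical.

```python
def char_ngrams(text, min_len, max_len):
    """Return every substring of `text` with length in [min_len, max_len]."""
    grams = []
    for size in range(min_len, max_len + 1):
        # Slide a window of `size` characters across the text.
        for start in range(len(text) - size + 1):
            grams.append(text[start:start + size])
    return grams

def contains_fragment(fragment, grams):
    """Check a short search fragment with an exact match on the array."""
    return fragment in set(grams)

grams = char_ngrams("rock", 1, 3)
print(grams)
print(contains_fragment("oc", grams))
```

Because every fragment up to the maximum length is stored as its own array element, a search for a short fragment becomes an exact-match lookup, which is the kind of predicate a search index serves well.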

Aggregation

It is common to pre-aggregate data before it arrives in Elasticsearch for use cases involving metrics.

Rockset has SQL-based rollups as a built-in capability, which can use functions like COUNT, SUM, MAX, MIN and even something more sophisticated like HMAP_AGG to decrease the storage footprint of a large dataset and increase query performance.

We often see ingest queries aggregate data by time. Here's an example:

SELECT entity_id, DATE_TRUNC('HOUR', my_timestamp) AS hour_bucket,

COUNT(*),

SUM(amount),

MAX(amount)

FROM _input

GROUP BY entity_id, hour_bucket
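To make the effect of a time-based rollup concrete, here is a minimal Python sketch that performs the same kind of aggregation in memory: one output row per entity per hour, carrying a count, a sum and a maximum. It is an illustration of the idea, not of how Rockset implements rollups, and the sample events are made up.

```python
from collections import defaultdict
from datetime import datetime

def hourly_rollup(events):
    """Aggregate raw events into one row per (entity_id, hour_bucket)."""
    buckets = defaultdict(lambda: {"count": 0, "sum": 0, "max": None})
    for event in events:
        ts = datetime.fromisoformat(event["my_timestamp"])
        # Truncate the timestamp to the start of its hour.
        hour_bucket = ts.replace(minute=0, second=0, microsecond=0)
        row = buckets[(event["entity_id"], hour_bucket)]
        amount = event["amount"]
        row["count"] += 1
        row["sum"] += amount
        row["max"] = amount if row["max"] is None else max(row["max"], amount)
    return dict(buckets)

# Hypothetical sample: three raw events collapse into two rollup rows.
events = [
    {"entity_id": "e1", "my_timestamp": "2023-01-01T10:05:00", "amount": 2},
    {"entity_id": "e1", "my_timestamp": "2023-01-01T10:45:00", "amount": 7},
    {"entity_id": "e1", "my_timestamp": "2023-01-01T11:01:00", "amount": 1},
]
rollup = hourly_rollup(events)
for key, row in rollup.items():
    print(key, row)
```

The storage saving comes from keeping only the rollup rows: the raw events are no longer needed once the count, sum and maximum for each bucket are maintained.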

Clustering

Many engineering teams are building multi-tenant applications on Elasticsearch. It's common for Elasticsearch users to isolate tenants by mapping a tenant to a cluster, avoiding noisy neighbor problems.

There is a simpler step you can take in Rockset to accelerate access to a single tenant's records: clustering on the column index. During collection creation, you can optionally specify clustering for the columnar index to optimize specific query patterns. Clustering stores all documents with the same clustering field values together, which makes queries with predicates on the clustering fields faster.

Here is an example of how clustering is used for multi-tenant applications:

SELECT *

FROM _input

CLUSTER BY tenant_id

Ingest transformations are optional techniques that can be leveraged to optimize Rockset for specific use cases, decrease the storage footprint and accelerate query performance.

Step 3: Query Conversion

Query Conversion

Elastic's Domain Specific Language (DSL) has the advantage of being tightly coupled with its capabilities. Of course, this comes at the cost of being too specific to port directly to other systems.

Rockset is built from the ground up for SQL, including joins, aggregations and enrichment functions. SQL has become the lingua franca for expressing queries on databases of all kinds. Because many engineering teams are intimately familiar with SQL, converting queries is easier.

We recommend taking the semantics of a common query or query pattern in Elasticsearch and translating it into SQL. Once you've done that for a number of query patterns, you can use the query profiler to understand how to optimize the system. At this point the best thing to do is save your semantically equivalent query as a Query Lambda, which is named, parameterized SQL stored in Rockset and executed from a dedicated REST endpoint. This will help as you iterate during query tuning, since Rockset will store each new version.

Query Tuning

Rockset reduces the time and effort of query tuning with its Cost-Based Optimizer (CBO), which takes into account the data in the collections, the distribution of data, and data types in determining the execution plan.

While the CBO works well a good portion of the time, there may be some scenarios where using hints to specify indexes and join strategies will enhance query performance.

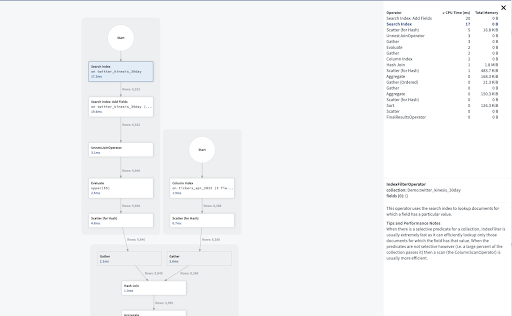

Rockset's query profiler provides a runtime query plan with row counts and index selection. You can use it to tune your query to achieve your desired latency. You may, in the process of query tuning, revisit ingest transformations to further reduce latency. This will end up giving you a template for future translations that is already mostly optimized, barring substantial variations.

Figure 2: In this query profile example we can see two types of indexes being used in the Converged Index, the search index and the column index, and the rows being returned from both indexes. The search index is being used on the larger collection because the qualification is highly selective. On the other side, it's more efficient to use the column index on the smaller collection with no selectivity. The outputs of both indexes are then joined together and flow through the rest of the topology. Ideally, we want the topology to be similar in shape, with most of the CPU utilization towards the top, which keeps the scalability aligned with virtual instance size.

Engineering teams start optimizing queries in the first week of their migration journey with the help of the solutions engineering team. We recommend initially focusing on single query performance using a small amount of compute resources. Once you reach your desired latency, you can stress test Rockset on your workload.

Step 4: Stress Test

Load testing or performance testing enables you to know the upper bounds of a system so you can determine its scalability. As mentioned above, your queries should be optimized and able to meet the single query latency required for your application before you start to stress test.

Being a cloud-native system, Rockset is highly scalable with on-demand elasticity. Rockset uses virtual instances, each a set of compute and memory resources used to serve queries. You can change the virtual instance size at any time without interrupting your running queries.

For stress testing we recommend starting with the smallest virtual instance size that will handle both single query latency and data ingestion.

Now that you have your starting virtual instance size, you'll want to use a testing framework to allow for reproducible test runs at various virtual instance sizes. The HTTP testing frameworks JMeter and Locust are commonly used by customers, and we recommend using the framework that best simulates your workload.

To compare performance, many engineers look at queries per second (QPS) at certain query latency intervals. These intervals are expressed in percentiles like P50 or P95. For user-facing applications, P95 or P99 latencies are common intervals as they express worst-case performance. In other cases where the requirements are more relaxed, you might look at P50 and P90 intervals.
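As a concrete reference for these numbers, here is a minimal Python sketch that derives QPS and latency percentiles from the per-query latencies a load test records. It uses the nearest-rank percentile definition, which is one common convention. Your testing framework may interpolate differently, so small discrepancies are expected. The sample latencies are synthetic.

```python
import math

def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def summarize(latencies_ms, duration_s):
    """Summarize one test run as QPS plus common latency percentiles."""
    return {
        "qps": len(latencies_ms) / duration_s,
        "p50": percentile(latencies_ms, 50),
        "p95": percentile(latencies_ms, 95),
        "p99": percentile(latencies_ms, 99),
    }

# Synthetic run: 100 queries with latencies 1..100 ms over 10 seconds.
latencies_ms = list(range(1, 101))
summary = summarize(latencies_ms, duration_s=10)
print(summary)
```

Recording the same summary at each virtual instance size gives you directly comparable runs.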

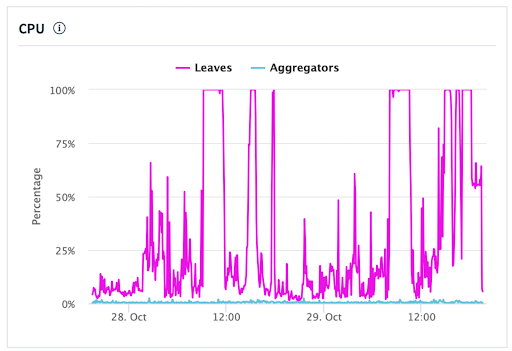

As you increase your virtual instance size, you should see your QPS double as the compute resources associated with each virtual instance double. If your QPS is flatlining, check Rockset CPU utilization using metrics in the console, as your testing framework may not be able to saturate the system with its current configuration. If instead Rockset is saturated and CPU utilization is close to 100%, you should explore increasing the virtual instance size or go back to single query optimization.

Figure 3: This chart shows points where the CPU is saturated and you could have used a larger virtual instance size. Under the hood, Rockset uses an Aggregator-Leaf-Tailer architecture which disaggregates query compute, ingest compute and storage. In this case, the leaves, where the data is stored, are the service being saturated, which means this workload is leaf bound. This is usually the desired pattern, as leaves handle data access and scale well with virtual instance size. Aggregators, or query compute, handle lower parts of the query topology like filters and joins, and higher aggregator CPU than leaf CPU is a sign of a tuning opportunity.

The idea with stress testing is to build confidence, not a perfect simulation, so once you feel comfortable, move on to the next step and know that you can always test again later.

Step 5: Production Implementation

It's now time to put the Ops in DevOps and start the process of taking what has been up to this point a safely controlled experiment and releasing it into the wild.

For highly sensitive workloads where query latencies are measured in the P90 and above buckets, we often see engineering teams using an A/B approach for production transitions. The application will route a percentage of queries to both Rockset and Elasticsearch. This allows teams to monitor performance and stability before moving 100% of queries to Rockset. Even if you are not using the A/B testing approach, we recommend having your deployment process written as code and treating your SQL as code as well.
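One way an application might implement the percentage split is deterministic, hash-based routing. The Python sketch below is a hypothetical illustration under that assumption: the function name, the use of a request key and the backend labels are not from Rockset or Elasticsearch, and a team could equally send each query to both systems and compare results.

```python
import hashlib

def choose_backend(request_key, rockset_percent):
    """Deterministically pick a backend for a request.

    The same request_key always lands in the same bucket, so raising
    rockset_percent only moves traffic in one direction.
    """
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return "rockset" if bucket < rockset_percent else "elasticsearch"

# Hypothetical rollout: send roughly 20% of user traffic to Rockset.
keys = [f"user-{i}" for i in range(10000)]
share = sum(choose_backend(k, 20) == "rockset" for k in keys) / len(keys)
print(f"share routed to Rockset: {share:.1%}")
```

Keeping the percentage in configuration rather than in code lets you raise it gradually, which fits the advice to treat the deployment process as code.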

Rockset provides metrics in the console and through an API endpoint to monitor system utilization, ingest performance and query performance. Metrics can also be captured on the client side or by using Query Lambdas. The metrics endpoint enables you to visualize Rockset and other system performance using tools like Prometheus, Grafana, DataDog and more.

The Real First Step

We mapped the migration from Elasticsearch to Rockset in 5 steps. Most companies can migrate a workload in 8 days, leveraging the support and technical expertise of our solutions engineering team. If there is still a hint of hesitancy about migrating, just know that Rockset and engineers like me will be there with you on the journey. Go ahead and take the first step: start your trial of Rockset and get $300 in free credits.
