As organizations across sectors grapple with the opportunities and challenges presented by the use of large language models (LLMs), the infrastructure needed to build, train, test, and deploy LLMs presents its own unique challenges. As part of the SEI’s recent investigation into use cases for LLMs within the Intelligence Community (IC), we needed to deploy compliant, cost-effective infrastructure for research and development. In this post, we describe current challenges and the state of the art in cost-effective AI infrastructure, and we share five lessons learned from our own experience standing up an LLM for a specialized use case.

The Challenge of Architecting MLOps Pipelines

Architecting machine learning operations (MLOps) pipelines is a difficult process with many moving parts, including data sets, workspace, logging, compute resources, and networking, and all of these parts must be considered during the design phase. Compliant, on-premises infrastructure requires advance planning, which is often a luxury in rapidly advancing disciplines such as AI. By splitting duties between an infrastructure team and a development team who work closely together, the project requirements for accomplishing ML training and for deploying the resources that make the ML system succeed can be addressed in parallel. Splitting the duties also encourages collaboration across the project and reduces project strain, such as time constraints.

Approaches to Scaling an Infrastructure

The current state of the art is a multi-user, horizontally scalable environment located on an organization’s premises or in a cloud ecosystem. Experiments are containerized or stored in a way that makes them easy to replicate or migrate across environments. Data is stored in individual components and migrated or integrated when necessary. As ML models become more complex and the volume of data they use grows, AI teams may need to increase their infrastructure’s capabilities to maintain performance and reliability. The specific approach to scaling can dramatically affect infrastructure costs.
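To make “easy to replicate or migrate” concrete, here is a minimal Python sketch, assuming an experiment can be described by a container image reference, a hash of its input data set, and its hyperparameters. The `ExperimentManifest` class, the `sha256_of_file` helper, and the image name are hypothetical illustrations, not tooling from the Mayflower Project. The idea is that a deterministic fingerprint lets a team confirm that a migrated or replicated run used the same inputs.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a data file in chunks so large data sets need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


@dataclass
class ExperimentManifest:
    """Hypothetical record of what is needed to rerun an experiment elsewhere."""

    image: str  # container image reference; pinning by digest is more reproducible than a tag
    dataset_sha256: str  # hash of the input data set, e.g. from sha256_of_file
    params: dict = field(default_factory=dict)  # training hyperparameters

    def to_json(self):
        # sort_keys makes the serialization independent of dict insertion order
        return json.dumps(asdict(self), sort_keys=True)

    def fingerprint(self):
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()


if __name__ == "__main__":
    a = ExperimentManifest("registry.example/llm-train:1.0", "ab12", {"epochs": 3, "lr": 1e-4})
    b = ExperimentManifest("registry.example/llm-train:1.0", "ab12", {"lr": 1e-4, "epochs": 3})
    print(a.fingerprint() == b.fingerprint())  # True: same inputs, same fingerprint
```

Storing the manifest next to each run’s outputs gives another environment what it needs to check that it is reproducing the same experiment.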

When deciding how to scale an environment, an engineer must consider cost, the speed of a given backbone, whether a given project can leverage certain deployment schemes, and overall integration goals. Horizontal scaling uses multiple machines in tandem to distribute workloads across all available infrastructure. Vertical scaling adds storage, memory, graphics processing units (GPUs), and other resources to existing machines to improve system productivity at lower cost. This type of scaling is particularly applicable to environments that have already scaled horizontally, or that see little workload volume but require better performance.

Generally, both vertical and horizontal scaling can be cost effective, with a horizontally scaled system offering a more granular level of control. In either case it is possible, and highly recommended, to identify a trigger function for activating and deactivating costly computing resources, and to implement a system under that function that creates and destroys computing resources as needed to minimize the overall time of operation. This strategy helps to reduce costs by avoiding overburn and idle resources, which you are otherwise still paying for, or by allocating those resources to other jobs. Adopting robust orchestration and horizontal scaling mechanisms, such as containers, provides granular control, which allows for clean resource utilization while lowering operating costs, particularly in a cloud environment.
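The trigger function described above can be sketched in a few lines of Python. This is a minimal illustration, assuming a scheduler that knows how many jobs are queued and how long each GPU node has been idle. The `Node` class, the `scaling_actions` function, and the 15-minute idle threshold are hypothetical choices. A real deployment would pass the returned lists to its own orchestrator or cloud provider API to actually create and destroy resources.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical view of one costly compute node (for example, a GPU instance)."""

    name: str
    running: bool = False
    busy: bool = False
    idle_seconds: float = 0.0  # time since the node last finished a job


def scaling_actions(nodes, pending_jobs, idle_limit_s=900.0):
    """Trigger function: decide which nodes to create (start) and destroy (stop).

    Assumes one queued job occupies one node. Returns (to_start, to_stop) name lists.
    """
    free = [n for n in nodes if n.running and not n.busy]
    stopped = [n for n in nodes if not n.running]

    # Activate: start only as many stopped nodes as the queue cannot already use.
    shortfall = max(0, pending_jobs - len(free))
    to_start = [n.name for n in stopped[:shortfall]]

    # Deactivate: the most recently used free nodes absorb queued jobs first;
    # any remaining free node idle past the limit is stopped so it stops billing.
    free.sort(key=lambda n: n.idle_seconds)
    to_stop = [n.name for n in free[pending_jobs:] if n.idle_seconds > idle_limit_s]
    return to_start, to_stop


if __name__ == "__main__":
    fleet = [
        Node("gpu-a", running=True, busy=True),
        Node("gpu-b", running=True, idle_seconds=1200),
        Node("gpu-c"),
    ]
    print(scaling_actions(fleet, pending_jobs=0))  # ([], ['gpu-b'])
    print(scaling_actions(fleet, pending_jobs=2))  # (['gpu-c'], [])
```

Running this check on a timer or on queue events keeps costly nodes alive only while there is work for them. Containers make that cheap to do, because a stopped node can be recreated from the same image.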

Lessons Learned from Project Mayflower

From May to September 2023, the SEI conducted the Mayflower Project to explore how the Intelligence Community might set up an LLM, customize LLMs for specific use cases, and evaluate the trustworthiness of LLMs across use cases. You can read more about Mayflower in our report, A Retrospective in Engineering Large Language Models for National Security. Our team found that three factors contributed directly to the success of our project: the ability to rapidly deploy compute environments based on project needs, data security, and system availability. We share the following lessons learned to help others build AI infrastructures that meet their needs for cost, speed, and quality.

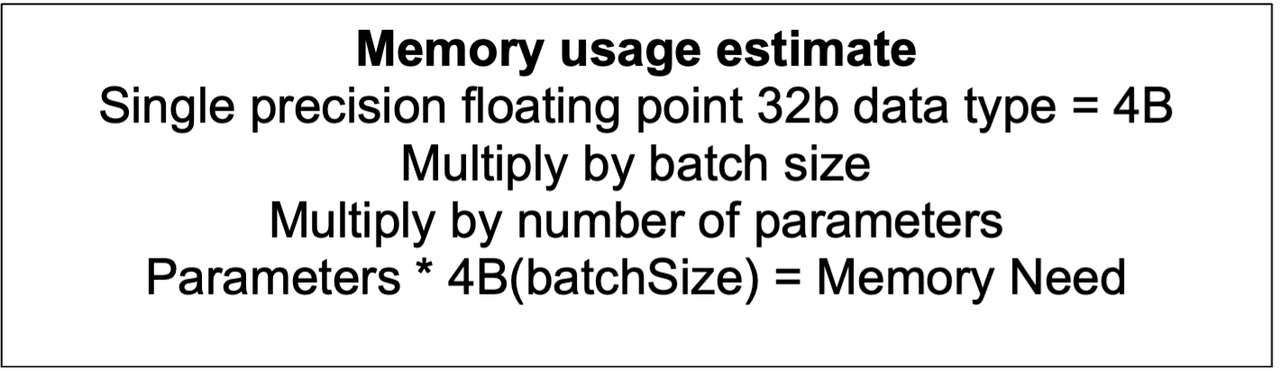

1. Account for your assets and estimate your needs up front.

Consider every piece of the environment an asset: data, compute resources for training, and evaluation tools are just a few examples of the assets that require consideration when planning. When these components are identified and properly orchestrated, they can work together efficiently as a system to deliver results and capabilities to end users. Identifying your assets begins with evaluating the data and framework the teams will be working with. Identifying each component of your environment efficiently requires expertise from both ML engineers and infrastructure engineers, and ideally cross training and collaboration between them.
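An up-front inventory does not need heavy tooling. The following minimal Python sketch does three things. It records assets by kind, flags required kinds that have not been accounted for yet, and makes a first-order estimate of the memory needed just to hold model weights (parameter count times bytes per parameter). The `Asset` class, the kind labels, and the example sizes are hypothetical. Training also needs memory for gradients, optimizer state, and activations, so treat the weight estimate as a lower bound.

```python
from dataclasses import dataclass

# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}

# Hypothetical asset categories a planning checklist might require.
REQUIRED_KINDS = ("data", "compute", "evaluation")


@dataclass
class Asset:
    name: str
    kind: str  # one of REQUIRED_KINDS, or another label the team agrees on
    storage_gb: float = 0.0


def weights_memory_gb(num_params, dtype="fp16"):
    """Lower-bound memory (decimal GB) to hold the weights alone."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def missing_kinds(assets, required=REQUIRED_KINDS):
    """Return required asset kinds that have no entry in the inventory yet."""
    present = {a.kind for a in assets}
    return [k for k in required if k not in present]


def total_storage_gb(assets):
    return sum(a.storage_gb for a in assets)


if __name__ == "__main__":
    inventory = [
        Asset("source-corpus", "data", storage_gb=120.0),
        Asset("checkpoints", "data", storage_gb=300.0),
        Asset("eval-harness", "evaluation", storage_gb=2.0),
    ]
    print(missing_kinds(inventory))  # ['compute']
    print(total_storage_gb(inventory))  # 422.0
    print(weights_memory_gb(7e9, "fp16"))  # 14.0
```

In this example, the missing `compute` entry is exactly the kind of gap that ML engineers and infrastructure engineers should close together before provisioning begins.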

2. Build in time for evaluating toolkits.

Some toolkits will work better than others, and evaluating them can be a lengthy process that needs to be accounted for early on. If your organization has become used to tools developed internally, external tools may not align with what your team members are familiar with. Platform as a service (PaaS) providers for ML development offer a viable path to get started, but they may not integrate well with tools your organization has developed in-house. During planning, account for the time to evaluate or adapt either tool set, and compare the tools against one another when deciding which platform to leverage. Cost and usability are the primary factors to consider in this comparison; the relative importance of these factors will vary depending on your organization’s resources and priorities.
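Because the relative weight of cost and usability depends on the organization, it can help to make that weighting explicit. This minimal Python sketch assumes each candidate has already been rated from 0 to 1 on cost (higher means cheaper) and on usability. The candidate names, ratings, and weights are hypothetical placeholders, not results from Mayflower.

```python
def score_toolkit(cost_score, usability_score, cost_weight=0.5):
    """Weighted score in [0, 1]; both inputs are normalized so higher is better."""
    if not 0.0 <= cost_weight <= 1.0:
        raise ValueError("cost_weight must be between 0 and 1")
    return cost_weight * cost_score + (1.0 - cost_weight) * usability_score


def rank_toolkits(candidates, cost_weight=0.5):
    """candidates maps a toolkit name to (cost_score, usability_score)."""
    return sorted(
        candidates,
        key=lambda name: score_toolkit(*candidates[name], cost_weight=cost_weight),
        reverse=True,
    )


if __name__ == "__main__":
    candidates = {"in-house tools": (0.9, 0.6), "PaaS offering": (0.5, 0.9)}
    print(rank_toolkits(candidates, cost_weight=0.5))  # ['in-house tools', 'PaaS offering']
    print(rank_toolkits(candidates, cost_weight=0.2))  # ['PaaS offering', 'in-house tools']
```

Lowering `cost_weight` from 0.5 to 0.2 flips the ranking. The same ratings can lead to a different platform choice once the organization’s priorities are stated.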

3. Design for flexibility.

Implement segmented storage resources for flexibility when attaching storage components to a compute resource. Design your pipeline so that your data, results, and models can be passed easily from one place to another. This approach allows resources to be placed on a common backbone, ensuring fast transfer and the ability to attach, detach, or mount them modularly. A common backbone provides a place to store and call on large data sets and experiment results while maintaining good data hygiene.
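One way to keep data, results, and models easy to pass between stages is to give every stage the same segmented layout on the shared backbone. The Python sketch that follows assumes the backbone is a file system mounted at a known path. The `Backbone` class and the `datasets`/`results`/`models` segment names are hypothetical, and a temporary directory stands in for the real mount.

```python
import tempfile
from pathlib import Path

# Hypothetical storage segments on the shared backbone.
SEGMENTS = ("datasets", "results", "models")


class Backbone:
    """Minimal sketch of a common storage backbone mounted at `root`."""

    def __init__(self, root):
        self.root = Path(root)
        for segment in SEGMENTS:
            (self.root / segment).mkdir(parents=True, exist_ok=True)

    def path(self, segment, name):
        """Resolve an artifact location; every stage uses the same layout."""
        if segment not in SEGMENTS:
            raise ValueError(f"unknown segment: {segment!r}")
        return self.root / segment / name

    def publish(self, segment, name, payload: bytes) -> Path:
        """Write an artifact and return its path so the next stage can pick it up."""
        target = self.path(segment, name)
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_bytes(payload)
        return target


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as mount_point:  # stand-in for a real shared mount
        backbone = Backbone(mount_point)
        metrics = backbone.publish("results", "run-001/metrics.json", b'{"loss": 1.0}')
        print(metrics.relative_to(mount_point))
```

Stages exchange paths rather than copies, so a storage segment can be remounted on a different compute resource without changing pipeline code.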

One practice that can support flexibility is providing a standard “springboard” for experiments: flexible pieces of hardware that are independently powerful enough to run experiments. The springboard is similar to a sandbox and supports rapid prototyping, and you can reconfigure the hardware for each experiment.

For the Mayflower Project, we implemented separate container workflows in isolated development environments and integrated them using compose scripts. This approach allows multiple GPUs to be called during a job’s run based on the available resources advertised by joined machines. The cluster provides multi-node training capabilities within a job submission format for better end-user productivity.
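The post does not describe how jobs were matched to the resources that joined machines advertised, so the following is only a hypothetical Python sketch of the idea. Each machine advertises its free GPUs, and a job request is filled greedily from the machines with the most free GPUs so that a multi-node job spans as few nodes as possible. The `allocate_gpus` function and the node names are illustrative; real cluster schedulers apply many more constraints.

```python
def allocate_gpus(advertised, requested):
    """Plan a GPU allocation for one job.

    advertised: dict mapping node name -> number of free GPUs it advertises.
    requested: total GPUs the job asks for.
    Returns dict node -> GPUs assigned, or None if the cluster cannot satisfy it.
    """
    if requested <= 0:
        raise ValueError("requested must be a positive integer")
    if sum(advertised.values()) < requested:
        return None  # not enough free GPUs across all joined machines

    plan = {}
    remaining = requested
    # Largest advertisers first (ties broken by name) to minimize the node count.
    for node, free in sorted(advertised.items(), key=lambda item: (-item[1], item[0])):
        if remaining == 0:
            break
        take = min(free, remaining)
        if take > 0:
            plan[node] = take
            remaining -= take
    return plan


if __name__ == "__main__":
    cluster = {"node-a": 2, "node-b": 4, "node-c": 1}
    print(allocate_gpus(cluster, 5))  # {'node-b': 4, 'node-a': 1}
    print(allocate_gpus(cluster, 8))  # None
```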

4. Isolate your data and protect your gold standards.

Properly isolating data can solve a variety of problems. When working collaboratively, it is easy to exhaust storage with redundant data sets. By communicating clearly with your team and defining a standard, common data set source, you can avoid this pitfall. This means that a primary data set must be highly accessible and provisioned for the level of use your team expects at the time the system is designed, that is, the amount of data and the speed and frequency at which team members need access. The source should be able to support the expected reads from however many team members may need to use the data at any given time to perform their duties. Any output or transformed data must not be injected back into the area in which the source data is stored; instead, it should be moved into another working directory or designated output location. This approach maintains the integrity of the source data set while minimizing unnecessary storage use, and it makes an environment easier to replicate than if the data set and working environment were not isolated.
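The rule that outputs never land in the source area can be enforced in code rather than left to convention. This minimal Python sketch assumes the gold-standard data set and the working areas are directories on a shared file system. The `resolve_output` and `write_transformed` helpers and the `gold`/`work` directory names are hypothetical.

```python
import tempfile
from pathlib import Path


def resolve_output(source_root, output_root, relative_name):
    """Return a write location under output_root, never inside source_root.

    Both paths are resolved first, so '..' segments or an output directory
    nested inside the source tree cannot smuggle writes into the gold data set.
    """
    source = Path(source_root).resolve()
    target = (Path(output_root) / relative_name).resolve()
    if target == source or source in target.parents:
        raise PermissionError(f"refusing to write into source data set: {target}")
    return target


def write_transformed(source_root, output_root, relative_name, text):
    """Write transformed data to the designated output location."""
    target = resolve_output(source_root, output_root, relative_name)
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(text, encoding="utf-8")
    return target


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        gold = Path(tmp) / "gold"  # shared, read-mostly source data set
        work = Path(tmp) / "work"  # per-team working directory
        gold.mkdir()
        print(write_transformed(gold, work, "clean/part-0.txt", "ok").read_text())  # ok
        try:
            write_transformed(gold, gold / "derived", "part-0.txt", "oops")
        except PermissionError as err:
            print("blocked:", err.__class__.__name__)  # blocked: PermissionError
```

Mounting the source data set read-only adds a second layer of protection. A guard like this one also catches mistakes on systems where that is not possible.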

5. Save costs when working with cloud resources.

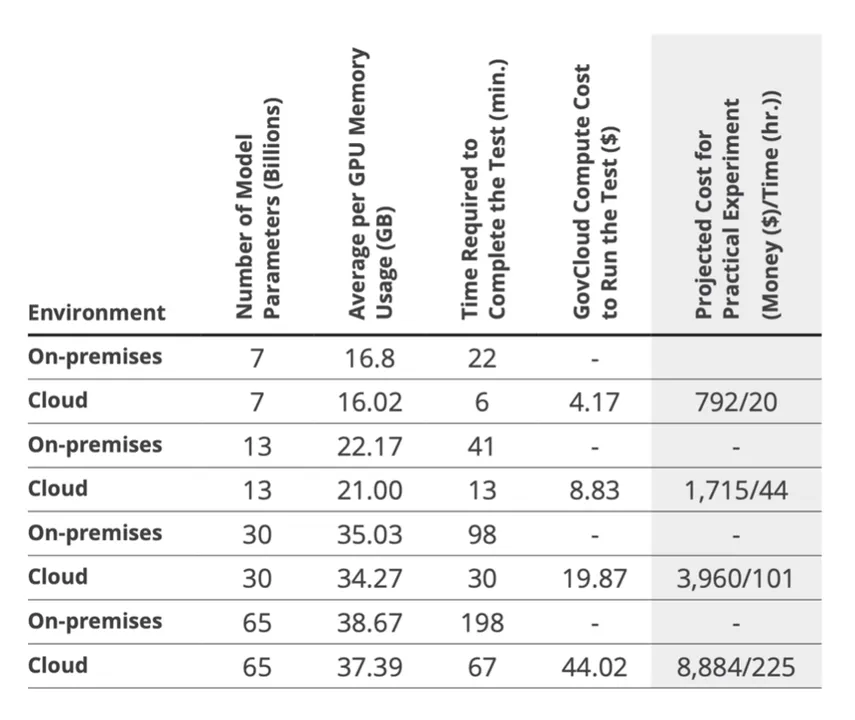

Government cloud resources have different availability than commercial resources, which often requires additional compensations or compromises. Using an existing on-premises resource can help reduce the costs of cloud operations. In particular, consider using local resources as a springboard in preparation for scaling up. This practice limits overall compute time on expensive resources that, depending on your use case, may be far more powerful than required for initial testing and evaluation.

Figure 1: In this table from our report A Retrospective in Engineering Large Language Models for National Security, we provide performance benchmark results for training LlaMA models of different parameter sizes on our custom 500-document set. For the estimates in the rightmost column, we define a practical experiment as LlaMA with 10k training documents for 3 epochs with GovCloud at $39.33/hour, LoRA (r=1, α=2, dropout = 0.05), and DeepSpeed. At the time of the report, Top Secret rates were $79.0533/hour.
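The rates in the caption make the cost argument in this lesson easy to check with simple arithmetic. At the quoted prices, an hour at the Top Secret rate costs 2.01 times an hour on GovCloud. The Python sketch that follows multiplies hours by an hourly rate and estimates the cloud spend avoided by running a fraction of the hours on an existing on-premises springboard first. The hour counts and the `local_fraction` parameter are hypothetical, and the estimate ignores the cost of operating the local hardware.

```python
# Hourly rates quoted in the Figure 1 caption (USD per hour, at the time of the report).
GOVCLOUD_RATE = 39.33
TOP_SECRET_RATE = 79.0533


def cloud_cost(hours, rate):
    """Compute-only cost of running for `hours` at `rate` USD/hour, in USD."""
    if hours < 0:
        raise ValueError("hours must be non-negative")
    return round(hours * rate, 2)


def springboard_savings(total_hours, local_fraction, rate):
    """Cloud cost avoided if `local_fraction` of the hours run on local hardware instead.

    Hypothetical model: it ignores the cost of operating the on-premises springboard.
    """
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    return cloud_cost(total_hours * local_fraction, rate)


if __name__ == "__main__":
    for label, rate in [("GovCloud", GOVCLOUD_RATE), ("Top Secret", TOP_SECRET_RATE)]:
        print(label, cloud_cost(100, rate), springboard_savings(100, 0.2, rate))
```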

Looking Ahead

Infrastructure is a major consideration as organizations look to build, deploy, and use LLMs and other AI tools. More work is needed, especially to meet challenges in unconventional environments such as those at the edge.

As the SEI works to advance the discipline of AI engineering, a strong infrastructure base can support the scalability and robustness of AI systems. In particular, designing for flexibility allows developers to scale an AI solution up or down depending on system and use case needs. By protecting data and gold standards, teams can ensure the integrity and support the replicability of experiment results.

As the Department of Defense increasingly incorporates AI into mission solutions, the infrastructure practices outlined in this post can provide cost savings and a shorter runway to fielding AI capabilities. Specific practices such as establishing a springboard platform can save time and costs in the long run.
