
Large language models (LLMs) like GPT, LLaMA, and others have taken the world by storm with their remarkable ability to understand and generate human-like text. However, despite their impressive capabilities, the standard method of training these models, known as “next-token prediction,” has some inherent limitations.

In next-token prediction, the model is trained to predict the next token in a sequence given the preceding tokens. While this approach has proven successful, it can lead to models that struggle with long-range dependencies and complex reasoning tasks. Moreover, the mismatch between the teacher-forcing training regime and the autoregressive generation process during inference can result in suboptimal performance.

A recent research paper by Gloeckle et al. (2024) from Meta AI introduces a novel training paradigm called “multi-token prediction” that aims to address these limitations and supercharge large language models. In this blog post, we’ll dive deep into the core concepts, technical details, and potential implications of this research.

What is Multi-token Prediction?

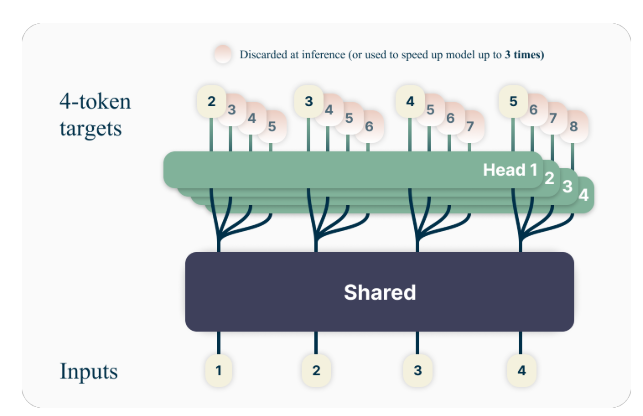

The key idea behind multi-token prediction is to train language models to predict multiple future tokens simultaneously, rather than just the next token. Specifically, during training, the model is tasked with predicting the next n tokens at each position in the training corpus, using n independent output heads operating on top of a shared model trunk.
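
In symbols, the two objectives can be sketched roughly as follows. The notation is a reconstruction and should be checked against the paper: x_{t:1} denotes the tokens up to position t, z_{t:1} the trunk’s latent representation of that context, and the second equality assumes the n heads predict independently given z.

```latex
% Standard next-token objective
L_1 = -\sum_{t} \log P_\theta\left(x_{t+1} \mid x_{t:1}\right)

% Multi-token objective: one cross-entropy term per head i = 1, ..., n
L_n = -\sum_{t} \log P_\theta\left(x_{t+n:t+1} \mid x_{t:1}\right)
    = -\sum_{t} \sum_{i=1}^{n} \log P_\theta\left(x_{t+i} \mid z_{t:1}\right)
```

Setting n = 1 recovers the usual next-token loss, so multi-token prediction can be read as adding n − 1 auxiliary terms on top of it.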

For example, with a 4-token prediction setup, the model would be trained to predict the next 4 tokens at once, given the preceding context. This approach encourages the model to capture longer-range dependencies and develop a better understanding of the overall structure and coherence of the text.

A Toy Example

To better understand the concept of multi-token prediction, let’s consider a simple example. Suppose we have the following sentence:

“The quick brown fox jumps over the lazy dog.”

In the standard next-token prediction approach, the model would be trained to predict the next word given the preceding context. For instance, given the context “The quick brown fox jumps over the,” the model would be tasked with predicting the next word, “lazy.”

With multi-token prediction, however, the model would be trained to predict multiple future words at once. For example, if we set n=4, the model would be trained to predict the next 4 tokens simultaneously. Given the same context “The quick brown fox jumps over the,” the model would be tasked with predicting the tokens that follow: “lazy”, “dog”, and the final period. Only three tokens remain in this sentence, so in a real training corpus the fourth target would be the first token of whatever text comes next.

By training the model to predict multiple future tokens at once, it is encouraged to capture long-range dependencies and develop a better understanding of the overall structure and coherence of the text.
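
To make the toy example concrete, here is a minimal sketch of how (context, targets) pairs could be built for n-token prediction. The helper name `multi_token_targets` and the word-level “tokenization” are assumptions made for illustration; a real pipeline would operate on tokenizer IDs and batched tensors.

```python
from typing import List, Tuple


def multi_token_targets(tokens: List[str], n: int) -> List[Tuple[List[str], List[str]]]:
    """Pair each context prefix with the n tokens that follow it.

    Positions with fewer than n tokens remaining are skipped; this is a
    simplification for illustration, not something the paper prescribes.
    """
    examples = []
    for t in range(1, len(tokens) - n + 1):
        context = tokens[:t]          # everything seen so far
        targets = tokens[t:t + n]     # one target per output head
        examples.append((context, targets))
    return examples


sentence = ["The", "quick", "brown", "fox", "jumps", "over", "the", "lazy", "dog", "."]
pairs = multi_token_targets(sentence, n=4)
context, targets = pairs[3]
print(" ".join(context), "->", targets)
```

With n=1 the same helper produces ordinary next-token pairs, which shows that the two setups differ only in how many targets are attached to each position.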

Technical Details

The authors propose a simple yet effective architecture for implementing multi-token prediction. The model consists of a shared transformer trunk that produces a latent representation of the input context, followed by n independent transformer layers (output heads) that predict the respective future tokens.

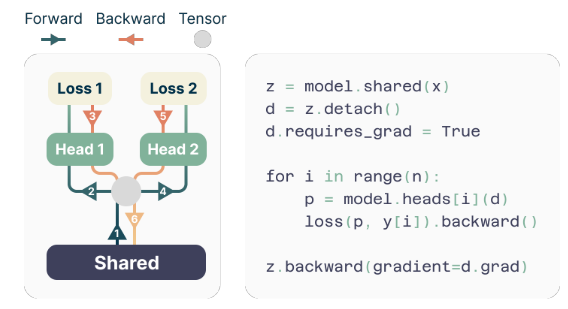

During training, the forward and backward passes are carefully orchestrated to minimize the GPU memory footprint. The shared trunk computes the latent representation, and then each output head sequentially performs its forward and backward pass, accumulating gradients at the trunk level. This approach avoids materializing all logit vectors and their gradients simultaneously, reducing the peak GPU memory usage from O(nV + d) to O(V + d), where n is the number of output heads, V is the vocabulary size, and d is the dimension of the latent representation.

The Memory-efficient Implementation

One of the challenges in training multi-token predictors is reducing their GPU memory usage. Since the vocabulary size (V) is typically much larger than the dimension of the latent representation (d), logit vectors become the GPU memory bottleneck.

To address this challenge, the authors propose a memory-efficient implementation that carefully adapts the sequence of forward and backward operations. Instead of materializing all logits and their gradients simultaneously, the implementation sequentially computes the forward and backward passes for each independent output head, accumulating gradients at the trunk level.

This approach avoids storing all logit vectors and their gradients in memory simultaneously, reducing the peak GPU memory usage from O(nV + d) to O(V + d), where n is the number of future tokens being predicted.
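
The ordering of operations described above can be sketched in plain Python. This is a toy under loud assumptions: each head is reduced to a single linear map (the paper’s heads are transformer layers), gradients are derived by hand, and names such as `head_loss_and_grad` and `sequential_heads` are hypothetical. The point is only that the V-sized logits of one head are discarded before the next head runs, while a single d-sized gradient accumulates at the trunk output.

```python
import math
import random


def head_loss_and_grad(W, z, target):
    """Cross-entropy loss of one linear head and its gradient w.r.t. z.

    W is a V x d weight matrix (list of rows); z is the shared latent vector.
    The V logits exist only inside this call.
    """
    logits = [sum(w * x for w, x in zip(row, z)) for row in W]
    m = max(logits)                                  # for numerical stability
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    loss = -math.log(probs[target])
    dlogits = list(probs)                            # dLoss/dlogits = softmax - onehot
    dlogits[target] -= 1.0
    grad_z = [sum(dlogits[v] * W[v][k] for v in range(len(W))) for k in range(len(z))]
    return loss, grad_z


def sequential_heads(heads, z, targets):
    """Forward and backward one head at a time, accumulating the gradient at z."""
    total_loss = 0.0
    grad_z = [0.0] * len(z)
    for W, target in zip(heads, targets):
        loss, g = head_loss_and_grad(W, z, target)   # this head's logits are freed on return
        total_loss += loss
        grad_z = [a + b for a, b in zip(grad_z, g)]
    return total_loss, grad_z


random.seed(0)
V, d, n = 50, 8, 4   # toy sizes; in real models V is much larger than d
z = [random.gauss(0, 1) for _ in range(d)]
heads = [[[random.gauss(0, 0.1) for _ in range(d)] for _ in range(V)] for _ in range(n)]
targets = [random.randrange(V) for _ in range(n)]
loss, grad_z = sequential_heads(heads, z, targets)
print(f"loss={loss:.4f}, accumulated gradient has {len(grad_z)} entries")
```

In a real framework the accumulated `grad_z` would then be backpropagated once through the shared trunk; that step is omitted here.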

Advantages of Multi-token Prediction

The research paper presents several compelling advantages of using multi-token prediction for training large language models:

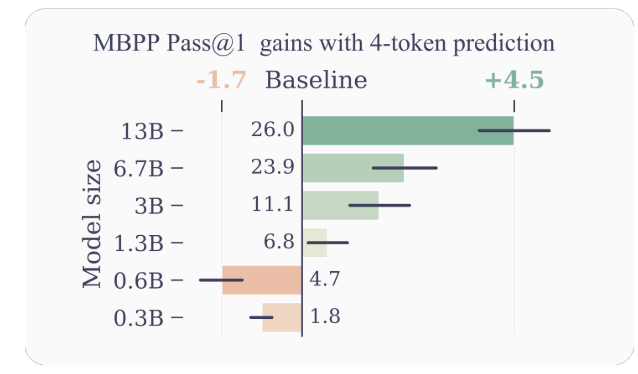

- Improved Sample Efficiency: By encouraging the model to predict multiple future tokens at once, multi-token prediction drives the model towards better sample efficiency. The authors demonstrate significant improvements in performance on code understanding and generation tasks, with models up to 13B parameters solving around 15% more problems on average.

- Faster Inference: The additional output heads trained with multi-token prediction can be leveraged for self-speculative decoding, a variant of speculative decoding that allows for parallel token prediction. This results in up to 3x faster inference times across a wide range of batch sizes, even for large models.

- Promoting Long-range Dependencies: Multi-token prediction encourages the model to capture longer-range dependencies and patterns in the data, which is particularly beneficial for tasks that require understanding and reasoning over larger contexts.

- Algorithmic Reasoning: The authors present experiments on synthetic tasks that demonstrate the superiority of multi-token prediction models in developing induction heads and algorithmic reasoning capabilities, especially for smaller model sizes.

- Coherence and Consistency: By training the model to predict multiple future tokens simultaneously, multi-token prediction encourages the development of coherent and consistent representations. This is particularly beneficial for tasks that require generating longer, more coherent text, such as storytelling, creative writing, or generating instructional manuals.

- Improved Generalization: The authors’ experiments on synthetic tasks suggest that multi-token prediction models exhibit better generalization capabilities, especially in out-of-distribution settings. This is likely due to the model’s ability to capture longer-range patterns and dependencies, which can help it extrapolate more effectively to unseen scenarios.
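
The faster-inference point can be illustrated with a greedy self-speculative decoding loop. Everything below is a toy stand-in rather than the authors’ implementation: `next_token` plays the role of the next-token head, `draft_heads` mimics n heads where the later ones are sometimes wrong, and verification is done by repeated calls that a real model would batch into one parallel forward pass.

```python
from typing import List, Tuple


def next_token(prefix: List[int]) -> int:
    """Toy stand-in for the next-token head: a fixed deterministic rule."""
    return (3 * prefix[-1] + len(prefix)) % 11


def draft_heads(prefix: List[int], n: int) -> List[int]:
    """Toy stand-in for n heads: the first is exact, later ones are sometimes wrong."""
    guesses, ctx = [], list(prefix)
    for i in range(n):
        tok = next_token(ctx)
        if i >= 2 and len(prefix) % 3 == 0:
            tok = (tok + 1) % 11          # inject an error to mimic an imperfect head
        guesses.append(tok)
        ctx.append(tok)
    return guesses


def greedy_decode(prefix: List[int], steps: int) -> List[int]:
    """Baseline: one token per iteration using only the next-token head."""
    out = list(prefix)
    for _ in range(steps):
        out.append(next_token(out))
    return out


def self_speculative_decode(prefix: List[int], steps: int, n: int) -> Tuple[List[int], int]:
    """Draft n tokens per iteration, keep the longest prefix the next-token head agrees with."""
    out, iterations = list(prefix), 0
    while len(out) - len(prefix) < steps:
        draft = draft_heads(out, n)
        iterations += 1
        accepted = [draft[0]]             # comes from the next-token head itself
        for tok in draft[1:]:
            if next_token(out + accepted) != tok:
                break                     # first disagreement: discard the rest of the draft
            accepted.append(tok)
        out.extend(accepted)
    return out[:len(prefix) + steps], iterations


spec, iterations = self_speculative_decode([1], steps=20, n=4)
baseline = greedy_decode([1], steps=20)
print("same output as greedy:", spec == baseline, "| iterations:", iterations)
```

Because every accepted token is checked against the next-token head, the output matches plain greedy decoding; any speedup comes from needing fewer iterations when the extra heads guess correctly.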

Examples and Intuitions

To provide more intuition on why multi-token prediction works so well, let’s consider a few examples:

- Code Generation: In the context of code generation, predicting multiple tokens simultaneously can help the model understand and generate more complex code structures. For instance, when generating a function definition, predicting just the next token might not provide enough context for the model to generate the entire function signature correctly. However, by predicting multiple tokens at once, the model can better capture the dependencies between the function name, parameters, and return type, leading to more accurate and coherent code generation.

- Natural Language Reasoning: Consider a scenario where a language model is tasked with answering a question that requires reasoning over multiple steps or pieces of information. By predicting multiple tokens simultaneously, the model can better capture the dependencies between the different parts of the reasoning process, leading to more coherent and accurate responses.

- Long-form Text Generation: When generating long-form text, such as stories, articles, or reports, maintaining coherence and consistency over an extended span can be challenging for language models trained with next-token prediction. Multi-token prediction encourages the model to develop representations that capture the overall structure and flow of the text, potentially leading to more coherent and consistent long-form generations.

Limitations and Future Directions

While the results presented in the paper are impressive, there are several limitations and open questions that warrant further investigation:

- Optimal Number of Tokens: The paper explores different values of n (the number of future tokens to predict) and finds that n=4 works well for many tasks. However, the optimal value of n may depend on the specific task, dataset, and model size. Developing principled methods for determining the optimal n could lead to further performance improvements.

- Vocabulary Size and Tokenization: The authors note that the optimal vocabulary size and tokenization strategy for multi-token prediction models may differ from those used for next-token prediction models. Exploring this aspect could lead to better trade-offs between compressed sequence length and computational efficiency.

- Auxiliary Prediction Losses: The authors suggest that their work could spur interest in developing novel auxiliary prediction losses for large language models, beyond the standard next-token prediction. Investigating alternative auxiliary losses and their combinations with multi-token prediction is an exciting research direction.

- Theoretical Understanding: While the paper provides some intuitions and empirical evidence for the effectiveness of multi-token prediction, a deeper theoretical understanding of why and how this approach works so well would be valuable.

Conclusion

The research paper “Better & Faster Large Language Models via Multi-token Prediction” by Gloeckle et al. introduces a novel training paradigm that has the potential to significantly improve the performance and capabilities of large language models. By training models to predict multiple future tokens simultaneously, multi-token prediction encourages the development of long-range dependencies, algorithmic reasoning abilities, and better sample efficiency.

The technical implementation proposed by the authors is elegant and computationally efficient, making it feasible to apply this approach to large-scale language model training. Furthermore, the ability to leverage self-speculative decoding for faster inference is a significant practical advantage.

While there are still open questions and areas for further exploration, this research represents an exciting step forward in the field of large language models. As the demand for more capable and efficient language models continues to grow, multi-token prediction could become a key component in the next generation of these powerful AI systems.
