
Open source PyTorch runs tens of thousands of tests on multiple platforms and compilers to validate every change as our CI (Continuous Integration). We track stats on our CI system to power

- custom infrastructure, such as dynamically sharding test jobs across different machines

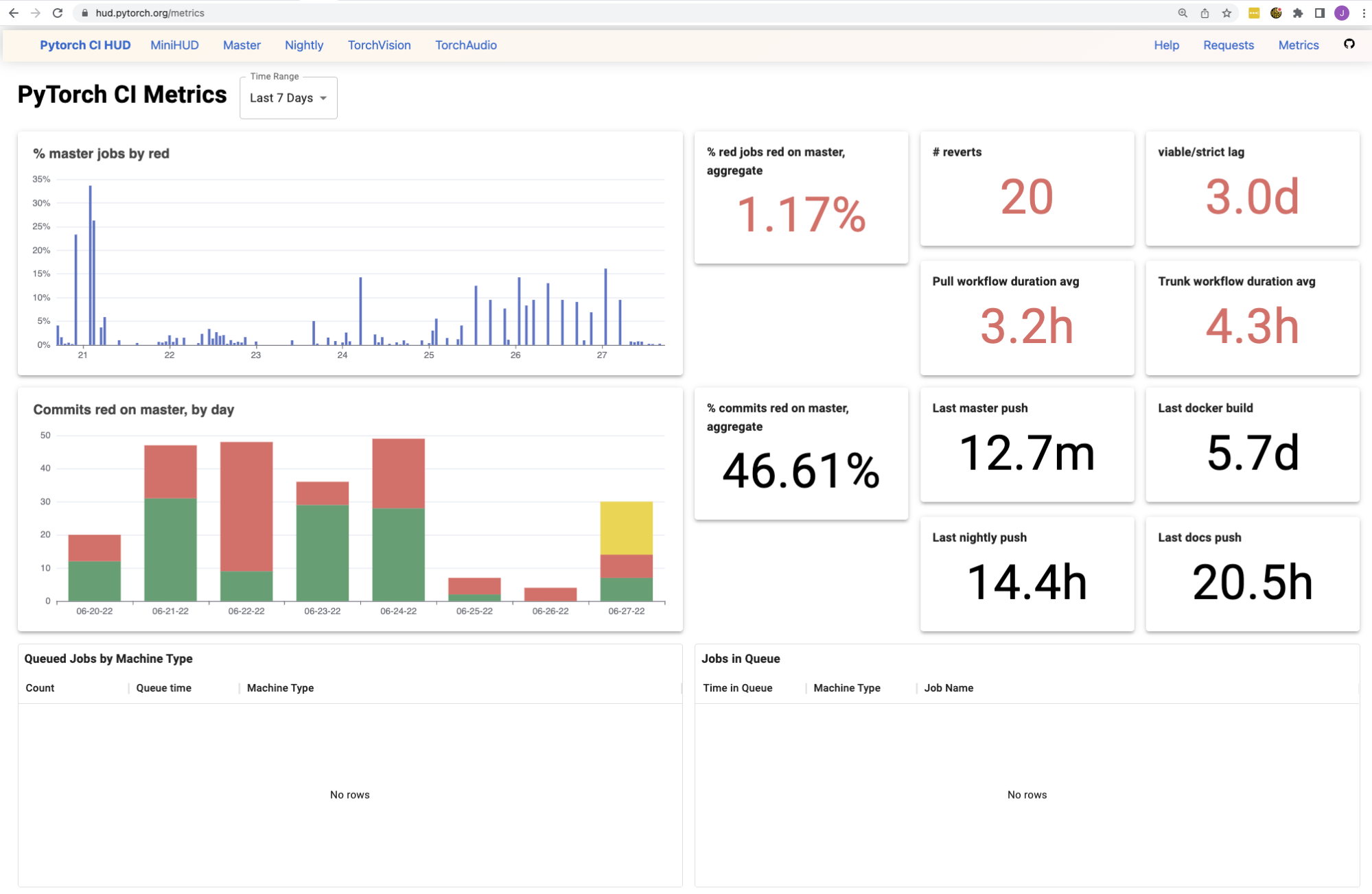

- developer-facing dashboards, see hud.pytorch.org, to track the greenness of every change

- metrics, see hud.pytorch.org/metrics, to track the health of our CI in terms of reliability and time-to-signal

Our requirements for a data backend

These CI stats and dashboards serve thousands of contributors, from companies such as Google, Microsoft and NVIDIA, providing them valuable information on PyTorch’s very complex test suite. Consequently, we needed a data backend with the following characteristics:

What did we use before Rockset?

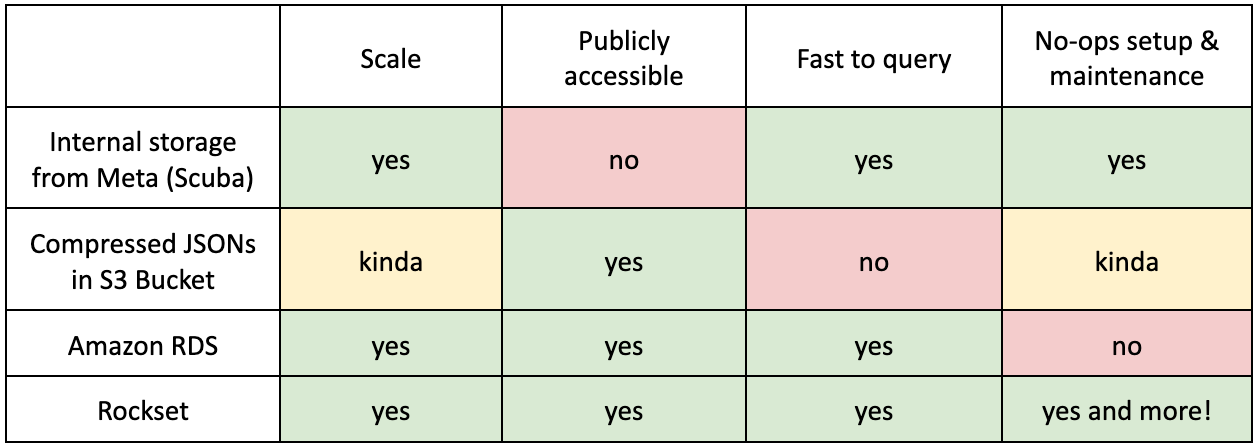

Internal storage from Meta (Scuba)

TL;DR

- Pros: scalable + fast to query

- Con: not publicly accessible! We couldn’t expose our tools and dashboards to users even though the data we were hosting was not sensitive.

As many of us work at Meta, using an already-built, feature-full data backend was the solution, especially when there weren’t many PyTorch maintainers and definitely no dedicated Dev Infra team. With help from the Open Source team at Meta, we set up data pipelines for our many test cases and all the GitHub webhooks we could care about. Scuba allowed us to store whatever we pleased (since our scale is basically nothing compared to Facebook scale), interactively slice and dice the data in real time (no need to learn SQL!), and required minimal maintenance from us (since some other internal team was fighting its fires).

It sounds like a dream until you remember that PyTorch is an open source library! All the data we were collecting was not sensitive, yet we couldn’t share it with the world because it was hosted internally. Our fine-grained dashboards were viewed internally only and the tools we wrote on top of this data couldn’t be externalized.

For example, back in the old days, when we were attempting to track Windows “smoke tests”, or test cases that seem more likely to fail on Windows only (and not on any other platform), we wrote an internal query to represent the set. The idea was to run this smaller subset of tests on Windows jobs during development on pull requests, since Windows GPUs are expensive and we wanted to avoid running tests that wouldn’t give us as much signal. Since the query was internal but the results were used externally, we came up with the hacky solution of: Jane will just run the internal query once in a while and manually update the results externally. As you can imagine, it was prone to human error and inconsistencies, as it was easy to make external changes (like renaming some jobs) and forget to update the internal query that only one engineer was running.
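To make the idea of a “Windows-only” set concrete, here is a minimal sketch in Python. It is not the internal query itself: the record fields `test`, `platform`, and `failed` are hypothetical, and it simplifies “more likely to fail on Windows only” to “failed on Windows and never on another platform”.

```python
from collections import defaultdict


def windows_only_failures(results):
    """Return the tests that failed on Windows and on no other platform.

    `results` is an iterable of dicts with hypothetical keys:
    "test" (str), "platform" (str), and "failed" (bool).
    """
    failed_on = defaultdict(set)
    for record in results:
        if record["failed"]:
            failed_on[record["test"]].add(record["platform"])
    # Keep only tests whose set of failing platforms is exactly {"windows"}.
    return sorted(
        test for test, platforms in failed_on.items() if platforms == {"windows"}
    )
```

A real version would likely weigh failure rates over many commits rather than use a strict set comparison, which is exactly the kind of cross-job, cross-commit query that needs a queryable backend.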

Compressed JSONs in an S3 bucket

TL;DR

- Pros: kind of scalable + publicly accessible

- Con: awful to query + not actually scalable!

At some point in 2020, we decided that we were going to publicly report our test times for the purpose of tracking test history, reporting test time regressions, and automatic sharding. We went with S3, as it was fairly lightweight to write to and read from, but more importantly, it was publicly accessible!

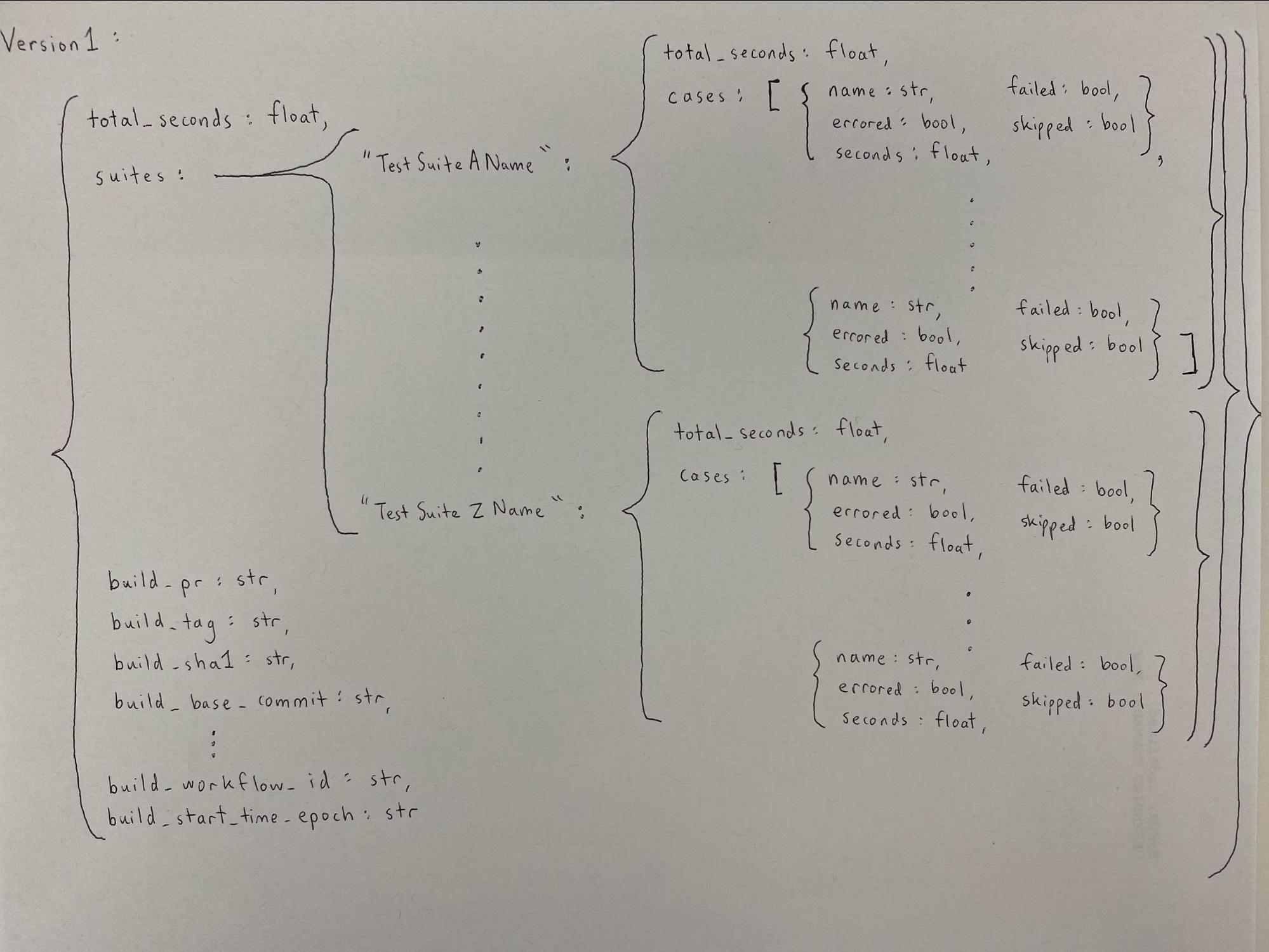

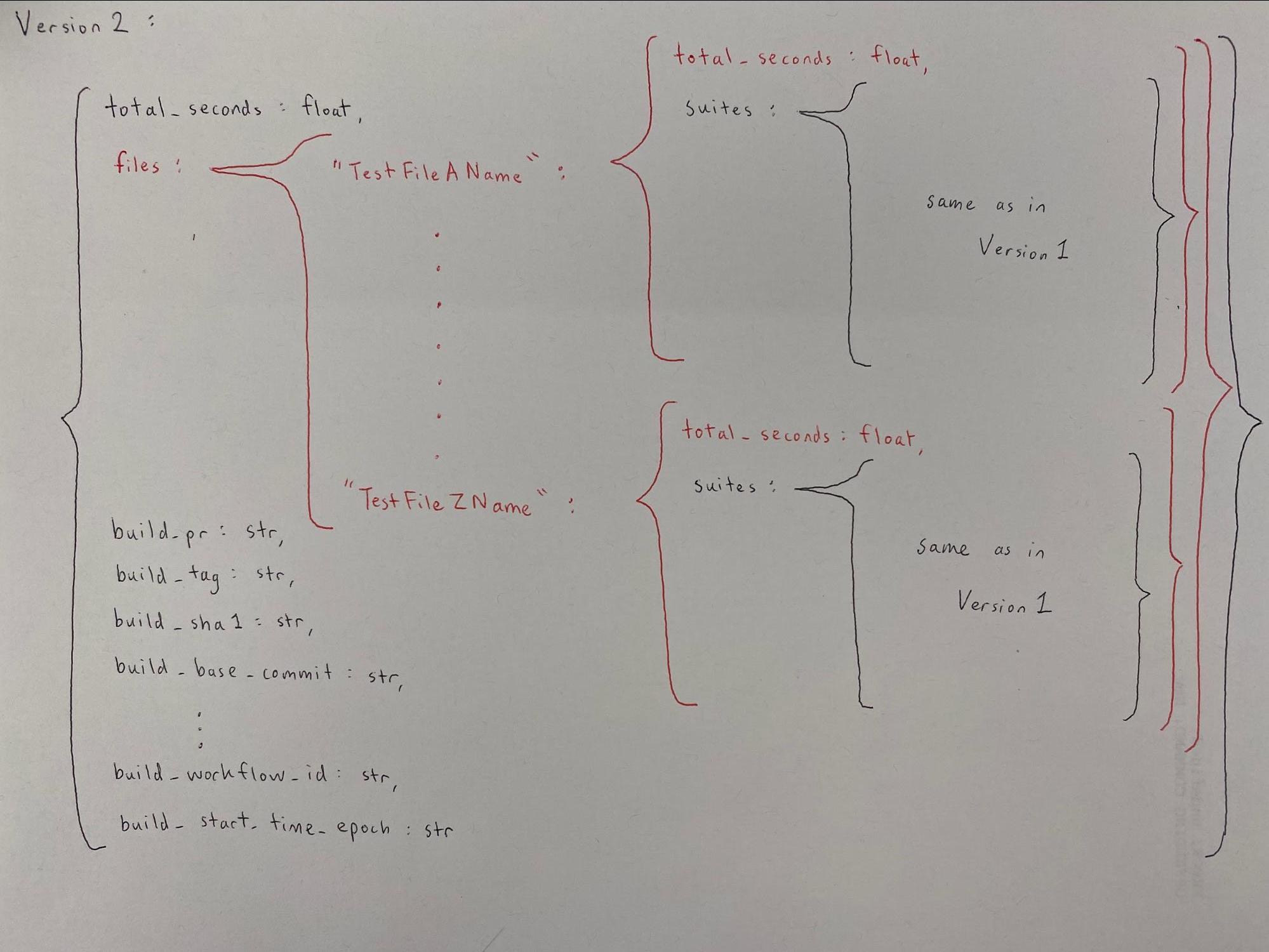

We dealt with the scalability problem early on. Since writing 10000 documents to S3 wasn’t (and still isn’t) an ideal option (it would be super slow), we aggregated test stats into a JSON, then compressed the JSON, then submitted it to S3. When we needed to read the stats, we’d go in the reverse order and potentially do different aggregations for our various tools.
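The aggregate, compress, and reverse steps described above can be sketched as follows. This is an illustration under assumptions, not our actual code: the record fields (`suite`, `name`, `seconds`) and the report layout are hypothetical, gzip stands in for whichever compression was really used, and the S3 upload itself is left out so the example stays self-contained.

```python
import gzip
import json
from collections import defaultdict


def pack_report(test_cases):
    """Aggregate per-test records into one JSON document and compress it."""
    by_suite = defaultdict(dict)
    for case in test_cases:
        by_suite[case["suite"]][case["name"]] = case["seconds"]
    payload = json.dumps({"suites": by_suite}, sort_keys=True)
    # One compressed blob per job, instead of one object per test case.
    return gzip.compress(payload.encode("utf-8"))


def unpack_report(blob):
    """Reverse the steps: decompress, parse, and flatten back into records."""
    report = json.loads(gzip.decompress(blob).decode("utf-8"))
    return [
        {"suite": suite, "name": name, "seconds": seconds}
        for suite, cases in report["suites"].items()
        for name, seconds in cases.items()
    ]
```

Every reader has to run `unpack_report` on each blob and then re-aggregate for its own purpose, which is cheap for a day of data and painful across months of commits.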

In fact, since sharding was a use case that only came up later in the layout of this data, we realized a few months after stats had already been piling up that we should have been tracking test filename information. We rewrote our entire JSON logic to accommodate sharding by test file. If you want to see how messy that was, check out the class definitions in this file.
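For readers unfamiliar with sharding by test file, the sketch below shows one common approach: greedily assigning the slowest files first to whichever shard currently has the least total time. It is an assumption made for illustration, not a description of the sharding logic in the file mentioned above; `file_seconds` and `num_shards` are hypothetical names.

```python
import heapq


def shard_by_file(file_seconds, num_shards):
    """Split test files into `num_shards` groups with roughly equal runtime.

    `file_seconds` maps a test file name to its historical duration in seconds.
    """
    # Min-heap of (total_seconds_so_far, shard_index).
    heap = [(0.0, index) for index in range(num_shards)]
    heapq.heapify(heap)
    shards = [[] for _ in range(num_shards)]
    # Longest files first; ties broken by name so the result is deterministic.
    ordered = sorted(file_seconds.items(), key=lambda item: (-item[1], item[0]))
    for name, seconds in ordered:
        total, index = heapq.heappop(heap)
        shards[index].append(name)
        heapq.heappush(heap, (total + seconds, index))
    return shards
```

This is why per-file timing had to be recoverable from the stored stats: without a filename on each record, there is nothing to feed into `file_seconds`.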

I lightly chuckle today that this code has supported us for the past 2 years and is still supporting our current sharding infrastructure. The chuckle is only light because even though this solution seems jank, it worked fine for the use cases we had in mind back then: sharding by file, categorizing slow tests, and a script to see test case history. It became a bigger problem when we started wanting more (surprise surprise). We wanted to try out Windows smoke tests (the same ones from the last section) and flaky test tracking, which both required more complex queries on test cases across different jobs on different commits from more than just the past day. The scalability problem now really hit us. Remember all the decompressing and de-aggregating and re-aggregating that was happening for every JSON? We’d have had to do that massaging for potentially hundreds of thousands of JSONs. Hence, instead of going further down this path, we opted for a different solution that would allow easier querying: Amazon RDS.

Amazon RDS

TL;DR

- Pros: scale, publicly accessible, fast to query

- Con: higher maintenance costs

Amazon RDS was the natural publicly available database solution as we weren’t aware of Rockset at the time. To cover our growing requirements, we put in several weeks of effort to set up our RDS instance and created several AWS Lambdas to support the database, silently accepting the growing maintenance cost. With RDS, we were able to start hosting public dashboards of our metrics (like test redness and flakiness) on Grafana, which was a major win!

Life With Rockset

We probably would have continued with RDS for many years and eaten up the cost of operations as a necessity, but one of our engineers (Michael) decided to “go rogue” and test out Rockset near the end of 2021. The idea of “if it ain’t broke, don’t fix it” was in the air, and most of us didn’t see immediate value in this endeavor. Michael insisted that minimizing maintenance cost was crucial, especially for a small team of engineers, and he was right! It’s usually easier to think of an additive solution, such as “let’s just build one more thing to alleviate this pain”, but it’s usually better to go with a subtractive solution if available, such as “let’s just remove the pain!”

The results of this endeavor were quickly evident: Michael was able to set up Rockset and replicate the main components of our previous dashboard in under 2 weeks! Rockset met all of our requirements AND was less of a pain to maintain!

While the first 3 requirements were consistently met by other data backend solutions, the “no-ops setup and maintenance” requirement was where Rockset won by a landslide. Apart from being a fully managed solution and meeting the requirements we were looking for in a data backend, using Rockset brought several other benefits.

- Schemaless ingest

  - We don’t have to schematize the data beforehand. Almost all our data is JSON and it’s very helpful to be able to write everything directly into Rockset and query the data as is.

  - This has increased the velocity of development. We can add new features and data easily, without having to do extra work to make everything consistent.

- Real-time data

  - We ended up moving away from S3 as our data source and now use Rockset’s native connector to sync our CI stats from DynamoDB.

Rockset has proved to meet our requirements with its ability to scale, exist as an open and accessible cloud service, and query big datasets quickly. Uploading 10 million documents every hour is now the norm, and it comes without sacrificing querying capabilities. Our metrics and dashboards have been consolidated into one HUD with one backend, and we can now remove the unnecessary complexities of RDS with AWS Lambdas and self-hosted servers. We talked about Scuba (internal to Meta) earlier, and we found that Rockset is very much like Scuba but hosted on the public cloud!

What Next?

We’re excited to retire our old infrastructure and consolidate even more of our tools to use a common data backend. We’re even more excited to find out what new tools we could build with Rockset.

This guest post was authored by Jane Xu and Michael Suo, who are both software engineers at Facebook.
