
Amazon Kinesis is a platform to ingest real-time events from IoT devices, POS systems, and applications, producing many kinds of events that need real-time analysis. Because Rockset provides a highly scalable solution for real-time analytics on these events with sub-second latency, without worrying about schema, many Rockset users choose Kinesis with Rockset. Plus, Rockset can intelligently scale with the capabilities of a Kinesis stream, providing a seamless high-throughput experience for our customers while optimizing cost.

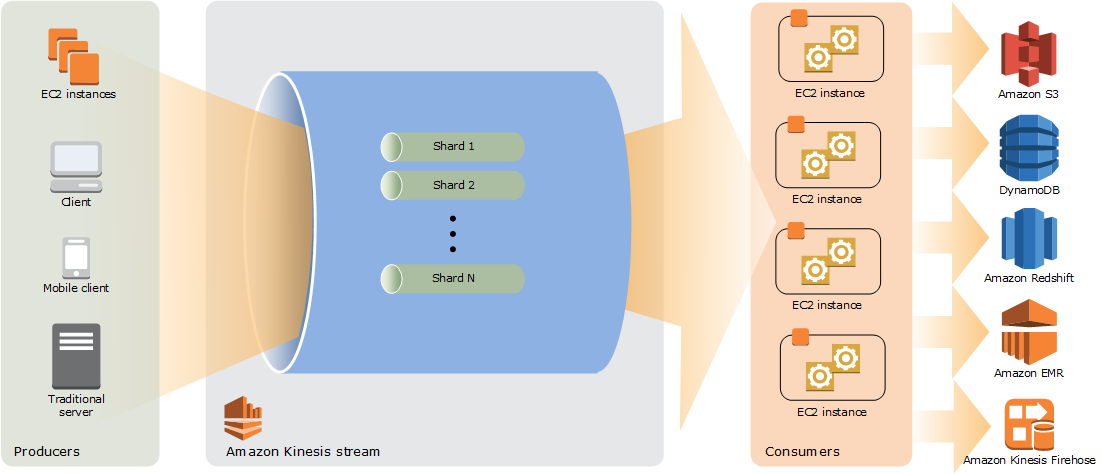

Background on Amazon Kinesis

Image Source: https://docs.aws.amazon.com/streams/latest/dev/key-concepts.html

A Kinesis stream is composed of shards, and each shard has a sequence of data records. A shard can be thought of as a data pipe, where the ordering of events is preserved. See Amazon Kinesis Data Streams Terminology and Concepts for more information.

Throughput and Latency

Throughput is a measure of the amount of data that is transferred between source and destination. A Kinesis stream with a single shard cannot scale beyond a certain limit because of the ordering guarantees provided by a shard. To address high throughput requirements when there are multiple applications writing to a Kinesis stream, it makes sense to increase the number of shards configured for the stream so that different applications can write to different shards in parallel. Latency can be reasoned about similarly. A single shard accumulating events from multiple sources will increase end-to-end latency in delivering messages to the consumers.
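As a rough illustration of the sizing argument above, the sketch below estimates how many shards a target write load would need. The class and method names are hypothetical, and the per-shard limits are passed in as parameters; the values used in `main` (1,000,000 bytes per second and 1,000 records per second per shard) are assumptions for the example, so check the current Kinesis quotas before relying on them.

```java
// Sketch: estimate the shard count for a target write load.
// Per-shard limits are parameters because the real quotas should be
// looked up in the Kinesis documentation, not hard-coded from this example.
class ShardSizing {
    static long shardsNeeded(long bytesPerSec, long recordsPerSec,
                             long shardBytesPerSec, long shardRecordsPerSec) {
        long byBytes = ceilDiv(bytesPerSec, shardBytesPerSec);
        long byRecords = ceilDiv(recordsPerSec, shardRecordsPerSec);
        // The stricter of the two limits decides; a stream has at least one shard.
        return Math.max(1, Math.max(byBytes, byRecords));
    }

    // Integer division rounded up, for non-negative a and positive b.
    static long ceilDiv(long a, long b) {
        return (a + b - 1) / b;
    }

    public static void main(String[] args) {
        // Assumed limits per shard: 1,000,000 bytes/s and 1,000 records/s.
        System.out.println(shardsNeeded(3_500_000, 2_500, 1_000_000, 1_000));
    }
}
```

In this example the byte rate is the binding constraint, so the estimate follows the data volume and not the record count.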

Capacity Modes

When you create a Kinesis stream, you choose one of two capacity modes, which determine how shards are configured:

- Provisioned capacity mode: In this mode, the number of Kinesis shards is user configured. Kinesis will create as many shards as specified by the user.

- On-demand capacity mode: In this mode, Kinesis responds to the incoming throughput to adjust the shard count.

With this as the background, let’s explore the implications.

Cost

AWS Kinesis charges customers by the shard hour. The greater the number of shards, the greater the cost. If the shard utilization is expected to be high with a certain number of shards, it makes sense to statically define the number of shards for a Kinesis stream. However, if the traffic pattern is more variable, it may be more cost-effective to let Kinesis scale shards based on throughput by configuring the Kinesis stream with on-demand capacity mode.

AWS Kinesis with Rockset

Shard Discovery and Ingestion

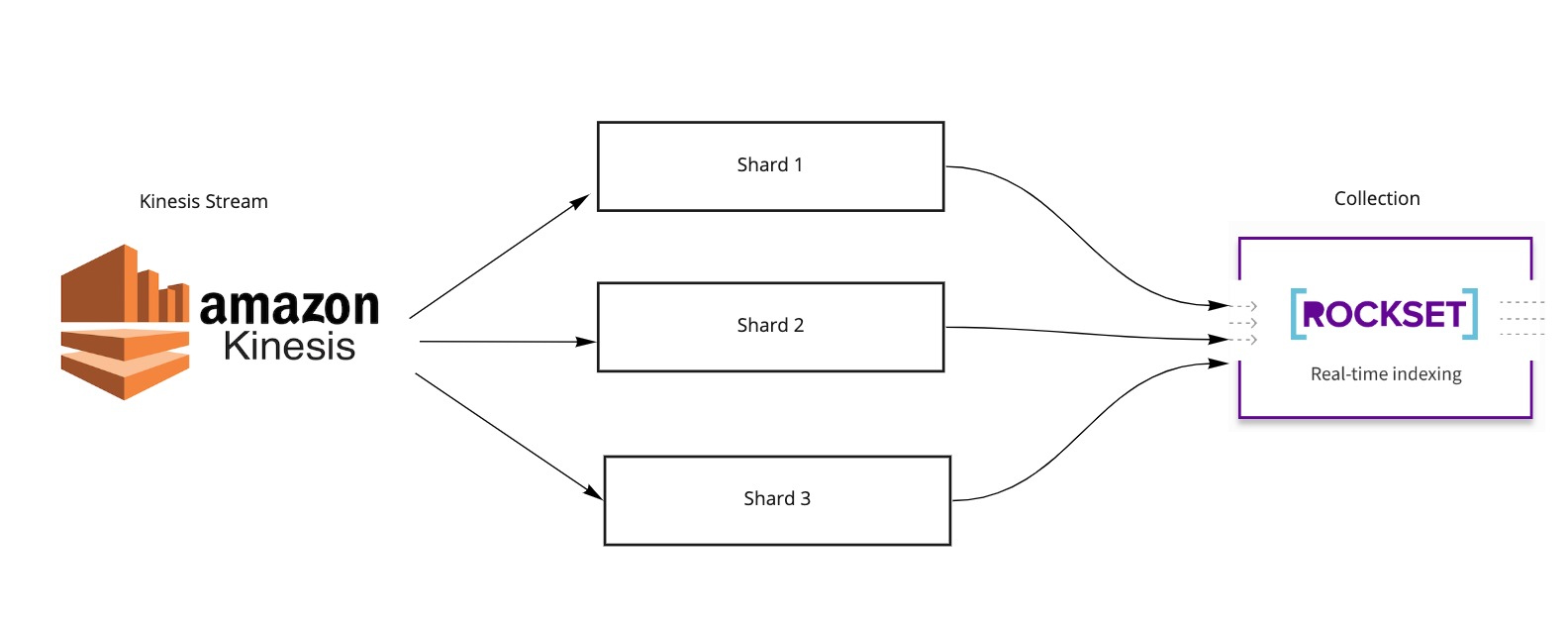

Before we explore ingesting data from Kinesis into Rockset, let’s recap what a Rockset collection is. A collection is a container of documents that is typically ingested from a source. Users can run analytical queries in SQL against this collection. A typical configuration consists of mapping a Kinesis stream to a Rockset collection.

While configuring a Rockset collection for a Kinesis stream, it is not required to specify which shards need to be ingested into the collection. The Rockset collection will automatically discover the shards that are part of the stream and come up with a blueprint for producing ingestion jobs. Based on this blueprint, ingestion jobs are coordinated that read data from a Kinesis shard into the Rockset system. Within the Rockset system, ordering of events within each shard is preserved, while also taking advantage of the parallelization potential for ingesting data across shards.

If the Kinesis shards are created statically, and just once during stream initialization, it is straightforward to create ingestion jobs for each shard and run these in parallel. These ingestion jobs can also be long-running, potentially for the lifetime of the stream, and would continuously move data from the assigned shards to the Rockset collection. If, however, shards can grow or shrink in number, in response to either throughput (as in the case of on-demand capacity mode) or user reconfiguration (for example, resetting the shard count for a stream configured in the provisioned capacity mode), managing ingestion is not as straightforward.

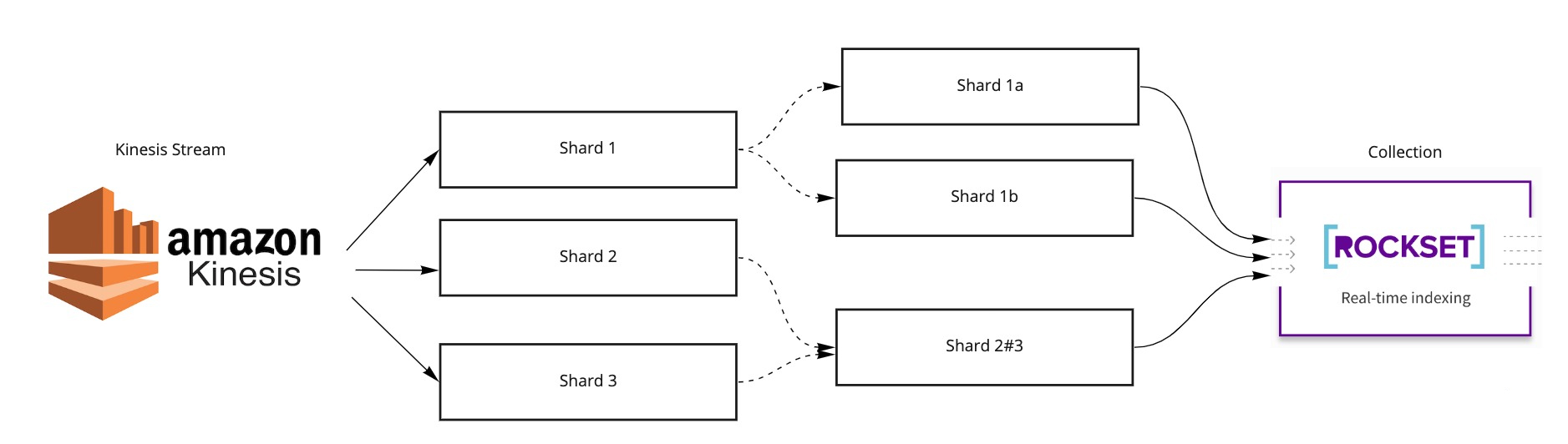

Shards That Wax and Wane

Resharding in Kinesis refers to an existing shard being split or two shards being merged into a single shard. When a Kinesis shard is split, it generates two child shards from a single parent shard. When two Kinesis shards are merged, it generates a single child shard that has two parents. In both these cases, the child shard maintains a back pointer or a reference to the parent shards. Using the ListShards API, we can infer these shards and their relationships.
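To make the back-pointer idea concrete, here is a minimal sketch that turns a flat shard listing into a map from each shard to its parents. `ShardInfo` is a hypothetical, simplified stand-in for the shard descriptions returned by Kinesis; its `parentId` and `adjacentParentId` fields are modeled on the `ParentShardId` and `AdjacentParentShardId` fields of a Kinesis shard. This is an illustration under those assumptions, not Rockset’s actual implementation.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class ShardLineage {
    // Hypothetical, simplified view of one listed shard.
    // A split child has only parentId; a merged child has both parents set.
    static final class ShardInfo {
        final String id;
        final String parentId;         // may be null
        final String adjacentParentId; // may be null

        ShardInfo(String id, String parentId, String adjacentParentId) {
            this.id = id;
            this.parentId = parentId;
            this.adjacentParentId = adjacentParentId;
        }
    }

    // Maps each shard ID to the IDs of its parents that are still listed.
    static Map<String, List<String>> parentsByShard(List<ShardInfo> shards) {
        Map<String, List<String>> parents = new HashMap<>();
        for (ShardInfo s : shards) {
            parents.put(s.id, new ArrayList<>());
        }
        for (ShardInfo s : shards) {
            for (String p : new String[] {s.parentId, s.adjacentParentId}) {
                // A parent may already have aged out of the stream; skip it then.
                if (p != null && parents.containsKey(p)) {
                    parents.get(s.id).add(p);
                }
            }
        }
        return parents;
    }

    public static void main(String[] args) {
        List<ShardInfo> shards = List.of(
            new ShardInfo("shard-0", null, null),           // original shard
            new ShardInfo("shard-1", "shard-0", null),      // split child
            new ShardInfo("shard-2", "shard-0", null),      // split child
            new ShardInfo("shard-3", "shard-1", "shard-2")  // merged child
        );
        System.out.println(parentsByShard(shards));
    }
}
```

Parents that no longer appear in the listing are skipped, so a child whose parents have expired is treated as having no outstanding dependencies.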

Choosing a Data Structure

Let’s go a bit below the surface into the world of engineering. Why do we not keep all shards in a flat list and start ingestion jobs for all of them in parallel? Remember what we said about shards maintaining events in order. This ordering guarantee must be honored across shard generations, too. In other words, we cannot process a child shard without processing its parent shard(s). The astute reader might already be thinking about a hierarchical data structure like a tree or a DAG (directed acyclic graph). Indeed, we choose a DAG as the data structure (only because in a tree you cannot have multiple parent nodes for a child node). Each node in our DAG refers to a shard. The blueprint we referred to earlier takes the form of a DAG.

Putting the Blueprint Into Action

Now we are ready to schedule ingestion jobs by referring to the DAG, aka the blueprint. Traversing a DAG in an order that respects dependencies is achieved through a common technique known as topological sorting. There is one caveat, however. Though a topological sort results in an order that does not violate dependency relationships, we can optimize a little further. If a child shard has two parent shards, we cannot process the child shard until the parent shards are fully processed. But there is no dependency relationship between those two parent shards. So, to optimize processing throughput, we can schedule ingestion jobs for those two parent shards to run in parallel. This yields the following algorithm:

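```java
// Collects into `output` the shards that can be ingested right now:
// unprocessed shards whose parents have all been processed.
void schedule(Node current, Set<Node> output) {
    if (processed(current)) {
        return;
    }
    boolean hasUnprocessedParent = false;
    for (Node parent : current.getParents()) {
        if (!processed(parent)) {
            hasUnprocessedParent = true;
            // Walk up the lineage to find the oldest unprocessed ancestors.
            schedule(parent, output);
        }
    }
    if (!hasUnprocessedParent) {
        output.add(current);
    }
}
```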

The above algorithm results in a set of shards that can be processed in parallel. As new shards get created on Kinesis or existing shards get merged, we periodically poll Kinesis for the latest shard information so we can adjust our processing state and spawn new ingestion jobs, or wind down existing ingestion jobs as needed.
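To see how the scheduling routine behaves across a split followed by a merge, here is a self-contained sketch. The `Node` class, the `processed` set, and the `ready` helper are hypothetical scaffolding added for illustration; only the body of `schedule` follows the algorithm shown earlier.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

class ShardScheduler {
    // Hypothetical node type: one node per shard, with links to its parents.
    static final class Node {
        final String shardId;
        final List<Node> parents = new ArrayList<>();

        Node(String shardId, Node... parents) {
            this.shardId = shardId;
            this.parents.addAll(List.of(parents));
        }
    }

    // Shards whose records have been fully ingested.
    private final Set<Node> processed = new HashSet<>();

    void markProcessed(Node node) {
        processed.add(node);
    }

    // Same logic as the schedule routine described in the article.
    void schedule(Node current, Set<Node> output) {
        if (processed.contains(current)) {
            return;
        }
        boolean hasUnprocessedParent = false;
        for (Node parent : current.parents) {
            if (!processed.contains(parent)) {
                hasUnprocessedParent = true;
                schedule(parent, output);
            }
        }
        if (!hasUnprocessedParent) {
            output.add(current);
        }
    }

    // Convenience wrapper: IDs of shards that are ready to ingest, in discovery order.
    List<String> ready(Node leaf) {
        Set<Node> out = new LinkedHashSet<>();
        schedule(leaf, out);
        List<String> ids = new ArrayList<>();
        for (Node n : out) {
            ids.add(n.shardId);
        }
        return ids;
    }

    public static void main(String[] args) {
        Node a = new Node("A");       // original shard
        Node b = new Node("B", a);    // A is split into B and C
        Node c = new Node("C", a);
        Node d = new Node("D", b, c); // B and C are merged into D

        ShardScheduler scheduler = new ShardScheduler();
        System.out.println(scheduler.ready(d)); // [A]
        scheduler.markProcessed(a);
        System.out.println(scheduler.ready(d)); // [B, C]
        scheduler.markProcessed(b);
        scheduler.markProcessed(c);
        System.out.println(scheduler.ready(d)); // [D]
    }
}
```

Calling the routine from the newest shard each time the shard listing changes yields exactly the shards whose parents are done, which is why B and C come out together and can be ingested in parallel.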

Keeping the House Manageable

At some point, shards get deleted by the retention policy set on the stream. We can clean up the shard processing information we have cached accordingly, so that we keep our state management in check.
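One way to picture this cleanup is a cache keyed by shard ID that is pruned against the latest shard listing. The sketch below is a hypothetical illustration: the class name, the checkpoint map, and the idea of storing a sequence number per shard are assumptions made for the example, not a description of Rockset’s internal state.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;

class ShardStateCache {
    // Hypothetical bookkeeping: shard ID -> last ingested sequence number.
    private final Map<String, String> checkpointByShard = new HashMap<>();

    void checkpoint(String shardId, String sequenceNumber) {
        checkpointByShard.put(shardId, sequenceNumber);
    }

    boolean tracks(String shardId) {
        return checkpointByShard.containsKey(shardId);
    }

    // Drops cached state for shards that no longer appear in the stream's
    // shard listing and returns how many entries were removed.
    int prune(Set<String> listedShardIds) {
        int before = checkpointByShard.size();
        checkpointByShard.keySet().retainAll(listedShardIds);
        return before - checkpointByShard.size();
    }

    public static void main(String[] args) {
        ShardStateCache cache = new ShardStateCache();
        cache.checkpoint("shard-0", "100");
        cache.checkpoint("shard-1", "250");
        cache.checkpoint("shard-2", "75");

        // Suppose the latest listing no longer contains shard-0.
        int removed = cache.prune(Set.of("shard-1", "shard-2"));
        System.out.println("removed " + removed + " expired shard entries");
    }
}
```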

To Sum Up

We have seen how Kinesis uses the concept of shards to maintain event ordering and, at the same time, provides the means to scale them out or in, in response to throughput or user reconfiguration. We have also seen how Rockset responds to this almost in lockstep to keep up with the throughput requirements, providing our customers a seamless experience. By supporting on-demand capacity mode with Kinesis data streams, Rockset ingestion also allows our customers to benefit from any cost savings offered by this mode.

If you are interested in learning more or contributing to the discussion on this topic, please join the Rockset Community. Happy sharding!

Rockset is the real-time analytics database in the cloud for modern data teams. Get faster analytics on fresher data, at lower costs, by exploiting indexing over brute-force scanning.
