What Is Change Data Capture?

Change data capture (CDC) is the process of recognising when data has been changed in a source system so that a downstream process or system can act on that change. A common use case is to reflect the change in a different target system so that the data in the two systems stays in sync.

There are many ways to implement a change data capture system, each of which has its benefits. This post explains some common CDC implementations and discusses the benefits and drawbacks of each. It is useful for anyone who wants to implement a change data capture system, especially in the context of keeping data in sync between two systems.

Push vs Pull

There are two main ways for change data capture systems to operate. Either the source system pushes changes to the target, or the target periodically polls the source and pulls the changed data.

Push-based systems often require more work from the source system, as it needs to implement a solution that understands when changes are made and sends those changes in a way that the target can receive and act on. The target system simply needs to listen for changes and apply them, instead of constantly polling the source and keeping track of what it has already captured. This approach often leads to lower latency between the source and target because the target is notified as soon as the change is made and can act on it immediately.

The downside of the push-based approach is that if the target system is down or not listening for changes for whatever reason, it will miss changes. To mitigate this, a queue-based system is placed between the source and the target so that the source can post changes to the queue and the target reads from the queue at its own pace. If the target needs to stop listening to the queue, as long as it remembers where it was in the queue it can stop and restart where it left off without missing any changes.
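To make the "remember where you were in the queue" idea concrete, here is a minimal sketch in Python. It assumes an in-memory, append-only list standing in for a real queue, and the names `ChangeLog`, `Target` and `catch_up` are purely illustrative rather than taken from any particular product.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeLog:
    """Append-only queue of change events (in-memory stand-in for a real broker)."""
    events: list = field(default_factory=list)

    def publish(self, event: dict) -> None:
        self.events.append(event)

    def read_from(self, offset: int) -> list:
        # Return every event at or after the given position.
        return self.events[offset:]


@dataclass
class Target:
    """Consumer that remembers how far into the queue it has read."""
    offset: int = 0
    applied: list = field(default_factory=list)

    def catch_up(self, log: ChangeLog) -> int:
        pending = log.read_from(self.offset)
        for event in pending:
            self.applied.append(event)  # "apply" the change to the target
        self.offset += len(pending)     # persist this in a real system
        return len(pending)


log = ChangeLog()
target = Target()

log.publish({"id": 1, "email": "ada@example.com"})
target.catch_up(log)  # target is now up to date

# The target goes offline while the source keeps publishing.
log.publish({"id": 1, "email": "ada@new.example.com"})
log.publish({"id": 2, "email": "bob@example.com"})

# The target restarts and resumes from its saved offset.
missed = target.catch_up(log)
print(missed, len(target.applied))
```

In a real deployment the offset would need to be stored durably, otherwise a restart of the target would lose its place.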

Pull-based systems are often a lot simpler for the source system, as they usually only require logging that a change has happened, typically by updating a column on the table. The target system is then responsible for pulling the changed data by requesting anything that it believes has changed.

The benefit of this is the same as the queue-based approach mentioned previously: because the target keeps track of what it has already pulled, if it ever encounters an issue it can restart and pick up where it left off.

The downside of the pull approach is that it often increases latency. This is because the target has to poll the source system for updates rather than being told when something has changed. This often leads to data being pulled in batches, anywhere from large batches pulled once a day to lots of small batches pulled frequently.

The rule of thumb is that if you are looking to build a real-time data processing system then the push approach should be used. If latency isn’t a big issue and you need to transfer a high volume of bulk updates, then pull-based systems should be considered.

The next section covers the positives and negatives of a number of different CDC mechanisms that utilise the push or pull approach.

Change Data Capture Mechanisms

There are many ways to implement a change data capture system. Most patterns require the source system to flag that a change has happened to some data, for example by updating a specific column on a table in the database or putting the changed record onto a queue. The target system then has to either watch for the update on the column and fetch the changed record, or subscribe to the queue.

Once the target system has the changed data, it needs to reflect that in its own system. This could be as simple as applying an update to a record in the target database. This section breaks down some of the most commonly used patterns. All of the mechanisms work similarly; it is how you implement them that changes.

Row Versioning

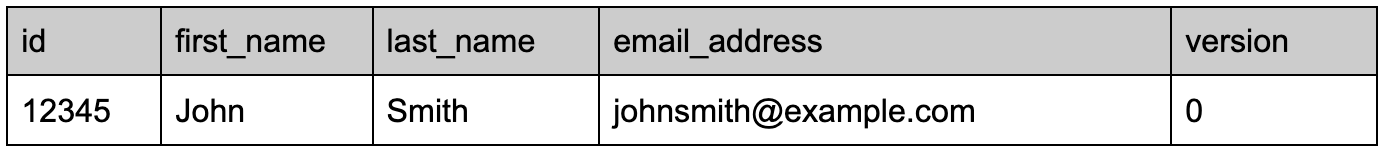

Row versioning is a common CDC pattern. It works by incrementing a version number on a row in a database whenever that row is changed. Let’s say you have a database that stores customer data. Every time a record for a customer is either created or updated in the customer table, a version column is incremented. The version column simply stores the version number for that record, telling you how many times it has changed.

It’s popular because not only can it be used to tell a target system that a record has been updated, it also lets you know how many times that record has changed in the past. This may be useful information in certain use cases.

It’s most common to start the version number at 0 or 1 when the record is created and then increment this number any time a change is made to the record.

For example, a customer record storing the customer’s name and email address is created and starts with a version number of 0.

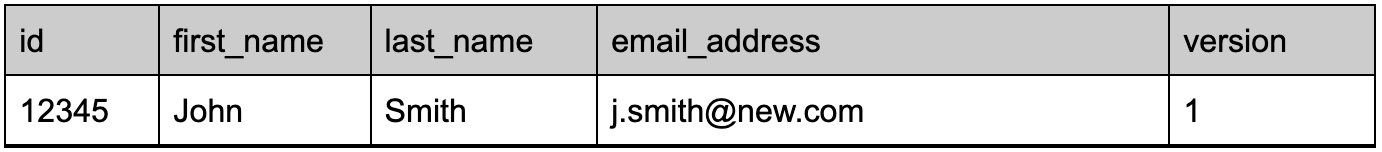

At a later date, the customer changes their email address, which increments the version number by 1.

For the source system, this implementation is fairly straightforward. Some databases, like SQL Server, have this functionality built in; others require database triggers to increment the number any time a modification is made to the record.

The complexity with the row versioning CDC pattern is actually in the target system. Because each record can have a different version number, you need a way to know what version of each record the target last saw and whether it has changed since.

This is often done using a reference table that stores, for each ID, the last known version of that record. The target then checks whether any rows have a version number greater than the one stored in the reference table. If they do, those records are captured and the changes reflected in the target system. The reference table then also needs updating to reflect the new version number for those records.
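The reference-table check can be sketched as follows. This is only an illustration using two in-memory SQLite databases; the table names `customer`, `customer_copy` and `version_ref` and the function `sync_changed_rows` are assumptions made for the example, and the version bump is done by hand where a real source would use a trigger or built-in feature.

```python
import sqlite3

# Source system: every row carries a version number.
source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT, email TEXT, version INTEGER)"
)
source.executemany(
    "INSERT INTO customer VALUES (?, ?, ?, ?)",
    [(1, "Ada", "ada@example.com", 0), (2, "Bob", "bob@example.com", 0)],
)

# Target system: a copy of the data plus the reference table of last-seen versions.
target = sqlite3.connect(":memory:")
target.execute("CREATE TABLE customer_copy (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
target.execute("CREATE TABLE version_ref (id INTEGER PRIMARY KEY, version INTEGER)")


def sync_changed_rows(source, target):
    """Copy rows whose source version is newer than the last version seen."""
    known = dict(target.execute("SELECT id, version FROM version_ref"))
    changed = []
    query = "SELECT id, name, email, version FROM customer ORDER BY id"
    for row_id, name, email, version in source.execute(query):
        if version > known.get(row_id, -1):  # -1 means "never seen"
            target.execute(
                "INSERT OR REPLACE INTO customer_copy VALUES (?, ?, ?)",
                (row_id, name, email),
            )
            target.execute(
                "INSERT OR REPLACE INTO version_ref VALUES (?, ?)", (row_id, version)
            )
            changed.append(row_id)
    target.commit()
    return changed


first = sync_changed_rows(source, target)  # both rows are new to the target

# A customer changes their email; the version is incremented alongside.
source.execute(
    "UPDATE customer SET email = 'ada@new.example.com', version = version + 1 WHERE id = 1"
)
second = sync_changed_rows(source, target)  # only the changed row is captured
print(first, second)
```

Note that this sketch reads every source row on each run in order to compare versions, which is part of the overhead this pattern places on the target.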

As you can see, there is a bit of overhead in this solution, but depending on your use case it might be worth it. A simpler version of this approach is covered next.

Update Timestamps

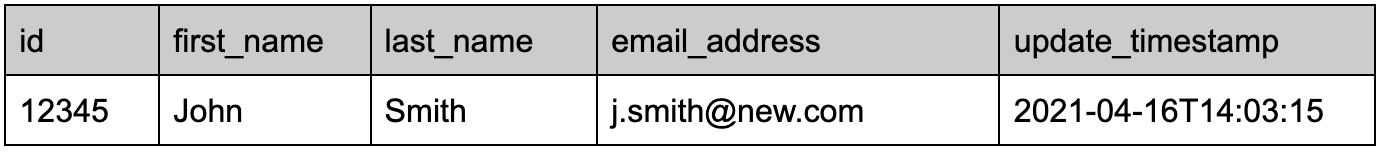

In my experience, update timestamps are the most common and simplest CDC mechanism to implement. Similar to the row versioning solution, every time a record in the database changes you update a column. Instead of storing the version number of the record, this column stores a timestamp of when the record was changed.

With this solution, you lose a bit of extra data as you no longer know how many times the record has been changed, but if this isn’t important then the downstream benefits are worth it.

When a record is first created, the update timestamp column is set to the date and time that the record was inserted. Every subsequent update then overwrites that timestamp with the current one. Again, depending on the database technology you are using, this may be taken care of for you; otherwise you could use a database trigger or build it into your application logic.

When the record is created the update timestamp is set.

If the record is changed, the update timestamp is set to the latest date and time.

The benefit of timestamps, especially over row versioning, is that the target system no longer has to keep a reference table. The target system can now just request any records from the source system that have an update timestamp greater than the latest one it has in its own system.

This is much less overhead for the target system as it doesn’t have to keep track of every record’s version number. It can simply poll the source based on the maximum update timestamp it has and therefore will always pick up any new or changed records.
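As a rough sketch of this polling loop, the example below keeps a single "high-water mark" timestamp in the target and asks the source only for rows newer than it. It assumes an in-memory SQLite table called `customer` with an `updated_at` column holding ISO-8601 strings; the names `pull_changes` and `apply_rows` are illustrative only.

```python
import sqlite3

source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, email TEXT, updated_at TEXT)")
source.executemany(
    "INSERT INTO customer VALUES (?, ?, ?)",
    [
        (1, "ada@example.com", "2024-01-01T09:00:00"),
        (2, "bob@example.com", "2024-01-01T09:05:00"),
    ],
)


def pull_changes(source, high_water_mark):
    """Fetch rows updated after the mark and return them with the new mark."""
    rows = source.execute(
        "SELECT id, email, updated_at FROM customer "
        "WHERE updated_at > ? ORDER BY updated_at",
        (high_water_mark,),
    ).fetchall()
    new_mark = rows[-1][2] if rows else high_water_mark
    return rows, new_mark


def apply_rows(target, rows):
    for row_id, email, _updated_at in rows:
        target[row_id] = email


target = {}  # stand-in for the target system
mark = ""    # the empty string sorts before any ISO-8601 timestamp

rows, mark = pull_changes(source, mark)  # initial load: both rows
apply_rows(target, rows)

# A record changes in the source and its timestamp is overwritten.
source.execute(
    "UPDATE customer SET email = ?, updated_at = ? WHERE id = ?",
    ("bob@new.example.com", "2024-01-02T10:00:00", 2),
)

rows, mark = pull_changes(source, mark)  # only the changed row comes back
apply_rows(target, rows)
print(rows, mark)
```

One caveat worth keeping in mind with a strict "greater than" comparison: a row written with exactly the same timestamp as the current mark, after the previous poll ran, would be skipped, so real implementations often need to account for timestamp resolution.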

Publish and Subscribe Queues

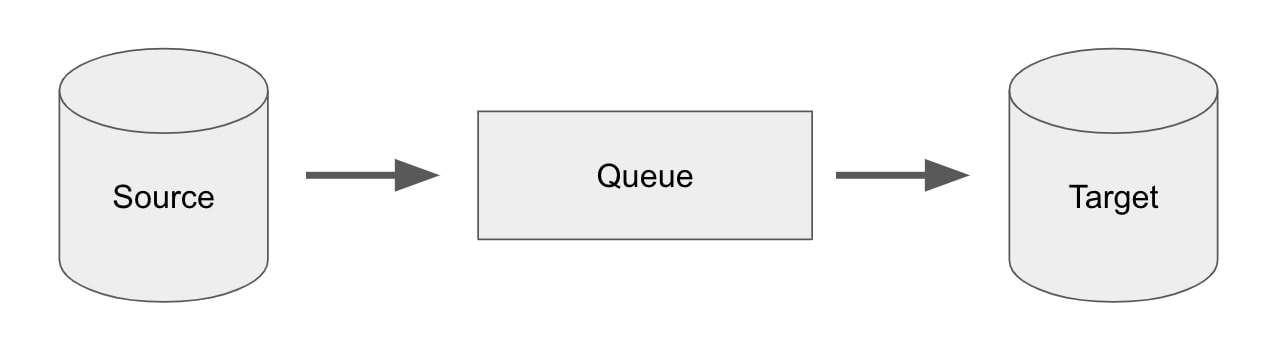

The publish and subscribe (pub/sub) pattern is the first pattern here that uses a push rather than a pull approach. The row versioning and update timestamp solutions both require the target system to “pull” the data that has changed; in a pub/sub model the source system pushes the changed data.

Typically, this solution requires a middle man that sits between the source and the target, as shown in Fig 1. Any time a change is made to the data in the source system, the source pushes the change to the queue. The target system listens to the queue and can consume the changes as they arrive. Again, this solution requires less overhead for the target system as it simply has to listen for changes and apply them as they arrive.

Fig 1. Queue-based publish and subscribe CDC method

This solution provides a number of benefits, the main one being scalability. If, during a period of high load, the source system is updating thousands of records in a matter of seconds, the “pull” approaches have to pull large amounts of changes from the source at a time and apply them all. This inevitably takes longer, which delays the next request for new data, so the lag between the source changing and the target updating grows. The pub/sub approach allows the source to send as many updates as it likes to the queue, and the target system can scale the number of consumers of this queue accordingly to process the data more quickly if necessary.

The second benefit is that the two systems are now decoupled. If the source system wants to change its underlying database or move the particular dataset elsewhere, the target doesn’t need to change as it would with a pull system. As long as the source system keeps pushing messages to the queue in the same format, the target can continue receiving updates blissfully unaware that the source system has changed anything.
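The scaling point can be illustrated with a small sketch that uses Python’s standard `queue` and `threading` modules as a stand-in for a real message broker. The three worker threads, the `STOP` sentinel and the event shape are all assumptions made for the example.

```python
import queue
import threading

changes = queue.Queue()  # the "middle man" between source and target
applied = {}             # stand-in for the target system's data
lock = threading.Lock()
STOP = object()          # sentinel telling a consumer to shut down


def consumer():
    """Listen on the queue and apply each change as it arrives."""
    while True:
        event = changes.get()
        if event is STOP:
            break
        with lock:
            applied[event["id"]] = event["email"]


# Scale out the target by running several consumers on the same queue.
workers = [threading.Thread(target=consumer) for _ in range(3)]
for worker in workers:
    worker.start()

# The source pushes changes without knowing who consumes them.
for i in range(100):
    changes.put({"id": i, "email": f"user{i}@example.com"})

# One sentinel per consumer, queued after all the changes.
for _ in workers:
    changes.put(STOP)
for worker in workers:
    worker.join()

print(len(applied))
```

Each event here touches a different record, so the order in which consumers apply them doesn’t matter. With several consumers, two changes to the same record could be applied out of order, which is why real queueing systems commonly route changes for the same key to the same consumer.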

Database Log Scanners

This method involves configuring the source database system so that it logs any modifications made to the data within the database. Most modern database technologies have something like this built in. It is fairly common practice to have replica databases for a number of reasons, including backups or offloading heavy processing from the main database. These replica databases are kept in sync by using these logs. When a modification is made on the master, it records the statement in the log; the replica executes the same command and the two stay in sync.

If you wanted to sync data to a different database technology instead of replicating, you could still use these logs and translate them into commands to be executed on the target system. The source system would log any INSERT, UPDATE or DELETE statements that are run, and the target system simply translates and replays them in the same order. This solution can be especially useful if you don’t want to change the source schema to add update timestamp columns or something similar.

There are a number of challenges with this approach:

- Each database technology manages these change log files differently.

- The files typically only exist for a certain period of time before being archived, so if the target ever encounters an issue there is a fixed amount of time to catch up before losing access to the logs in their usual location.

- Translating the commands from source to target can be tricky, especially if you’re capturing changes to a SQL database and reflecting them in a NoSQL database, as the way commands are written is different.

- The system needs to deal with transactional systems where changes are only applied on commit. So if changes are made and rolled back, the target needs to reflect the rollback too.
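The transaction challenge in particular can be sketched in a few lines. The log format below (dictionaries with `txn`, `op`, `key` and `values` fields) is entirely hypothetical and far simpler than any real database log; the sketch takes one possible approach, which is to buffer each transaction’s changes and only apply them to a dictionary-based target once a commit is seen, so rolled-back changes never reach the target.

```python
def replay(log_entries, target):
    """Apply committed changes from a simplified change log to a dict-based target."""
    pending = {}  # transaction id -> buffered changes not yet committed
    for entry in log_entries:
        txn, op = entry["txn"], entry["op"]
        if op == "BEGIN":
            pending[txn] = []
        elif op in ("INSERT", "UPDATE", "DELETE"):
            pending.setdefault(txn, []).append(entry)
        elif op == "ROLLBACK":
            pending.pop(txn, None)  # discard everything the transaction did
        elif op == "COMMIT":
            for change in pending.pop(txn, []):
                if change["op"] == "DELETE":
                    target.pop(change["key"], None)
                elif change["op"] == "INSERT":
                    target[change["key"]] = dict(change["values"])
                else:  # UPDATE: merge the changed fields into the document
                    target.setdefault(change["key"], {}).update(change["values"])
    return target


log = [
    {"txn": 1, "op": "BEGIN"},
    {"txn": 1, "op": "INSERT", "key": 1, "values": {"name": "Ada", "email": "ada@example.com"}},
    {"txn": 1, "op": "COMMIT"},
    {"txn": 2, "op": "BEGIN"},
    {"txn": 2, "op": "UPDATE", "key": 1, "values": {"email": "oops@example.com"}},
    {"txn": 2, "op": "ROLLBACK"},
    {"txn": 3, "op": "BEGIN"},
    {"txn": 3, "op": "UPDATE", "key": 1, "values": {"email": "ada@new.example.com"}},
    {"txn": 3, "op": "INSERT", "key": 2, "values": {"name": "Bob", "email": "bob@example.com"}},
    {"txn": 3, "op": "COMMIT"},
    {"txn": 4, "op": "BEGIN"},
    {"txn": 4, "op": "DELETE", "key": 2},
    {"txn": 4, "op": "COMMIT"},
]

documents = replay(log, {})
print(documents)
```

Translating each operation into a dictionary update is a stand-in for the SQL-to-NoSQL translation step; with a real target that mapping is usually the hard part.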

Change Scanning

Change scanning is similar to the row versioning technique but is usually employed on file systems rather than on databases. It involves scanning a file system, usually a specific directory, for data files. These could be something like CSV files, which are captured and often converted into data to be stored in a target system.

Along with the data, the path of the file and the source system it was captured from are also stored. The CDC system then periodically polls the source file system to check for any new files, using the file metadata it stored earlier as a reference. Any new files are then captured and their metadata stored too.

This solution is mostly used for systems that output data to files. These files could contain new records but also updates to existing records, again allowing the target system to stay in sync. The downside of this approach is that the latency between changes being made in the source and reflected in the target is often a lot higher. This is because the source system will often batch changes up before writing them to a file, to avoid writing lots of very small files.
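A minimal sketch of such a scanner is shown below. It assumes CSV files dropped into a single directory and uses each file’s size and modification time as the stored metadata; the function name `scan_for_new_files`, the `source_file` field and the file names are all made up for the example.

```python
import csv
import tempfile
from pathlib import Path


def scan_for_new_files(directory, seen):
    """Return rows from CSV files not captured before, recording metadata in `seen`."""
    captured = []
    for path in sorted(Path(directory).glob("*.csv")):
        stat = path.stat()
        fingerprint = (stat.st_size, stat.st_mtime_ns)
        if seen.get(str(path)) == fingerprint:
            continue  # already captured and unchanged since then
        with path.open(newline="") as handle:
            for row in csv.DictReader(handle):
                # Keep track of which file each record came from.
                captured.append({**row, "source_file": path.name})
        seen[str(path)] = fingerprint
    return captured


with tempfile.TemporaryDirectory() as drop_dir:
    seen = {}  # file metadata remembered between polls

    Path(drop_dir, "batch_001.csv").write_text("id,email\n1,ada@example.com\n")
    first = scan_for_new_files(drop_dir, seen)

    # A later batch contains an update to record 1 and a new record 2.
    Path(drop_dir, "batch_002.csv").write_text(
        "id,email\n1,ada@new.example.com\n2,bob@example.com\n"
    )
    second = scan_for_new_files(drop_dir, seen)

    third = scan_for_new_files(drop_dir, seen)  # nothing new this time

print(len(first), len(second), len(third))
```

Because the fingerprint includes size and modification time, this version would also re-capture a file that is rewritten in place, which goes slightly beyond the "new files only" behaviour described above.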

A Common CDC Architecture with Debezium

There are a number of technologies available that provide slick CDC implementations depending on your use case. The technology world is becoming more and more real time, and therefore solutions that allow changes to be captured in real time are growing in popularity. One of the leading technologies in this space is Debezium. Its goal is to simplify change data capture from databases in a scalable way.

The reason Debezium has become so popular is that it can provide the real-time latency of a push-based system with often minimal changes to the source system. Debezium monitors database logs to identify changes and pushes these changes onto a queue so that they can be consumed. Often the only change the source database needs to make is a configuration change to ensure its database logs include the right level of detail for Debezium to capture the changes.

Fig 2. Reference Debezium Architecture

To handle the queuing of changes, Debezium uses Kafka. This allows the architecture to scale for high-throughput systems and also decouples the target system, as mentioned in the Push vs Pull section. The downside is that to use Debezium you also have to deploy a Kafka cluster, so this should be weighed up when assessing your use case.

The upside is that Debezium will take care of monitoring changes to the source database and provide them in a timely manner. It doesn’t increase CPU usage in the source database system like pull systems would, as it uses the database log files. Debezium also requires no change to source schemas to add update timestamp columns, and it can capture deletes, something that “update timestamp” based implementations find difficult. These features often outweigh the cost of implementing Debezium and a Kafka cluster, which is why this is one of the most popular CDC solutions.

CDC at Rockset

Rockset is a real-time analytics database that employs a number of these change data capture systems to ingest data. Rockset’s main use case is to enable real-time analytics, and therefore most of the CDC methods it uses are push based. This allows changes to be captured in Rockset as quickly as possible so analytical results are as up to date as possible.

The main challenge with any new data platform is the movement of data between the existing source system and the new target system. Rockset simplifies this by providing built-in connectors that leverage some of these CDC implementations for a number of popular technologies.

These CDC implementations are offered in the form of configurable connectors for systems such as MongoDB, DynamoDB, MySQL, Postgres and others. If you have data coming from one of these supported sources and you are using Rockset for real-time analytics, the built-in connectors offer the simplest CDC solution, without requiring separately managed Debezium and Kafka components.

As a mutable database, Rockset allows any existing record, including individual fields of an existing deeply nested document, to be updated without having to reindex the entire document. This is especially useful and very efficient when staying in sync with OLTP databases, which are likely to have a high rate of inserts, updates and deletes.

These connectors abstract away the complexity of the CDC implementation so that developers only need to provide basic configuration; Rockset then takes care of keeping that data in sync with the source system. For most of the supported data sources the latency between the source and target is under 5 seconds.

Publish/Subscribe Sources

Several Rockset connectors utilise the publish/subscribe CDC method, covering both databases with built-in change streams and streaming services such as Kafka and Kinesis.

For the databases, Rockset utilises the inbuilt change stream technologies that push any changes, allowing Rockset to listen for these changes and apply them in its own database. Kafka and Kinesis are already data queue/stream systems, so in this instance Rockset listens to these services and it is up to the source application to push the changes.

Change Scanning

Rockset also includes a change scanning CDC approach for file-based sources such as S3 and GCS.

Including a data source that uses this CDC approach increases the flexibility of Rockset. Regardless of what source technology you have, if you can write data out to flat files in S3 or GCS then you can utilise Rockset for your analytics.

Which CDC Method Should I Use?

There is no right or wrong method to use. This post has discussed many of the positives and negatives of each method, and each has its use cases. It all depends on the requirements for capturing changes and what the data in the target system will be used for.

If the use cases for the target system depend on the data being up to date at all times, then you should definitely look to implement a push-based CDC solution. Even if your use cases right now aren’t real-time based, you may still want to consider this approach versus the overhead of managing a pull-based system.

If a push-based CDC solution isn’t possible, then the choice of pull-based solution depends on a number of factors. Firstly, if you can modify the source schema then adding update timestamps or row versions should be fairly trivial by creating some database triggers. The overhead of managing an update timestamp system is much less than that of a row versioning system, so update timestamps should be preferred where possible.

If modifying the source system isn’t possible then your only options are to utilise any in-built change log capabilities of the source database or to use change scanning. If the source system can’t provide data in files for change scanning, then a change scanning approach at the table level will be required. This would mean pulling all of the data in the table each time and working out what has changed by comparing it to what is stored in the target. This is an expensive approach and only realistic for source systems with relatively small datasets, so it should be used as a last resort.
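To show why table-level scanning is expensive, here is a small sketch of the comparison step. It assumes both the source table and the target’s copy have been loaded into dictionaries keyed by primary key; `diff_snapshots` is an illustrative name, not an existing library function.

```python
def diff_snapshots(source_rows, target_rows):
    """Compare two {primary_key: row} snapshots and classify the differences."""
    inserts = {k: v for k, v in source_rows.items() if k not in target_rows}
    updates = {
        k: v
        for k, v in source_rows.items()
        if k in target_rows and target_rows[k] != v
    }
    deletes = [k for k in target_rows if k not in source_rows]
    return inserts, updates, deletes


# What the target currently holds.
target_rows = {
    1: {"name": "Ada", "email": "ada@example.com"},
    2: {"name": "Bob", "email": "bob@example.com"},
}

# A full pull of the source table on this run.
source_rows = {
    1: {"name": "Ada", "email": "ada@new.example.com"},  # changed
    3: {"name": "Cy", "email": "cy@example.com"},        # new
}                                                         # record 2 was deleted

inserts, updates, deletes = diff_snapshots(source_rows, target_rows)
print(inserts, updates, deletes)
```

Every run has to read and compare every row on both sides, which is why this only tends to be practical for relatively small tables.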

Finally, a DIY CDC implementation isn’t always easy, so ready-made CDC options such as the Debezium and Kafka combination, or Rockset’s built-in connectors for real-time analytics use cases, are good alternatives in many instances.

Lewis Gavin has been a data engineer for five years and has also been blogging about skills within the Data community for four years on a personal blog and Medium. During his computer science degree, he worked for the Airbus Helicopter team in Munich enhancing simulator software for military helicopters. He then went on to work for Capgemini where he helped the UK government move into the world of Big Data. He is currently using this experience to help transform the data landscape at easyfundraising.org.uk, an online charity cashback site, where he is helping to shape their data warehousing and reporting capability from the ground up.
