
Today, we’re thrilled to announce that Mosaic AI Model Training’s support for fine-tuning GenAI models is now available in Public Preview. At Databricks, we believe that connecting the intelligence in general-purpose LLMs to your enterprise data (data intelligence) is the key to building high-quality GenAI systems. Fine-tuning can specialize models for specific tasks, business contexts, or domain knowledge, and can be combined with RAG for more accurate applications. This forms a critical pillar of our Data Intelligence Platform strategy, which enables you to adapt GenAI to your unique needs by incorporating your enterprise data.

Model Training

Our customers have trained over 200,000 custom AI models in the last year, and we’ve distilled the lessons into Mosaic AI Model Training, a fully managed service. Fine-tune or pretrain a wide range of models, including Llama 3, Mistral, DBRX, and more, with your enterprise data. The resulting model is then registered to Unity Catalog, providing full ownership and control over the model and its weights. Additionally, you can deploy your model with Mosaic AI Model Serving in just one click.

We’ve designed Mosaic AI Model Training to be:

- Simple: Select your base model and training dataset, and start training immediately. We handle the GPU and efficient-training complexities so you can focus on the modeling.

- Fast: Iterate quickly to build your models, powered by a proprietary training stack that is up to 2x faster than open source. From fine-tuning on a few thousand examples to continued pretraining on billions of tokens, our training stack scales with you.

- Integrated: Easily ingest, transform, and preprocess your data on the Databricks platform, and pull it directly into training.

- Tunable: Quickly tune the key hyperparameters, specifically learning rate and training duration, to build the highest quality model.

- Sovereign: You have full ownership of the model and its weights. You control the permissions and access lineage, tracking the training dataset as well as downstream consumers.
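To make the “Tunable” point concrete, here is a minimal Python sketch of a small sweep over the two hyperparameters named above, learning rate and training duration (expressed here as epochs). It only generates run specifications. The dictionary keys, the values, and the placeholder model and Unity Catalog names are hypothetical and are not taken from the Databricks SDK, so map them onto whatever interface you actually use to launch runs.

```python
from itertools import product

# Illustrative search space only: the post names learning rate and training
# duration as the key knobs, but these values are placeholders, not defaults.
LEARNING_RATES = [1e-5, 5e-6, 1e-6]
EPOCHS = [1, 3]


def build_sweep(base_model: str, train_data_path: str, register_to: str) -> list:
    """Return one hypothetical run spec per (learning rate, epochs) pair."""
    runs = []
    for learning_rate, epochs in product(LEARNING_RATES, EPOCHS):
        runs.append(
            {
                # Keys below are hypothetical, not a documented Databricks schema.
                "model": base_model,
                "train_data_path": train_data_path,
                "register_to": register_to,
                "learning_rate": learning_rate,
                "epochs": epochs,
            }
        )
    return runs


if __name__ == "__main__":
    # Placeholder names; substitute your own model and Unity Catalog locations.
    for spec in build_sweep("my-base-model", "main.my_schema.train_data", "main.my_schema"):
        print(spec["learning_rate"], spec["epochs"])
```

Keeping the sweep small (a handful of learning rates times a couple of durations) reflects the post’s emphasis on fast iteration: compare the resulting models on your evaluation set and refine around the best pair.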

“At Experian, we’re innovating in the area of fine-tuning for open source LLMs. The Mosaic AI Model Training reduced the average training time of our models significantly, which allowed us to accelerate our GenAI development cycle to multiple iterations per day. The end result is a model that behaves in a fashion that we define, outperforms commercial models for our use cases, and costs us significantly less to operate.” James Lin, Head of AI/ML Innovation, Experian

Benefits

Mosaic AI Model Training enables you to adapt open source models to perform well on specialized enterprise tasks and achieve higher quality. Benefits include:

- Higher quality: Improve model quality on specific tasks and capabilities, whether that be summarization, chatbot behavior, tool use, multilingual conversation, or more.

- Lower latency at lower costs: Large, general intelligence models can be expensive and slow in production. Many of our customers find that fine-tuning small models (<13B parameters) can dramatically reduce latency and cost while maintaining quality.

- Consistent, structured formatting or style: Generate outputs that follow a specific format or style, like entity extraction or creating JSON schemas in a compound AI system.

- Lightweight, manageable system prompts: Incorporate business logic or user feedback into the model itself. It can be hard to incorporate end-user feedback into a complex prompt, and small prompt changes can cause regressions on other questions.

- Expand the knowledge base: With Continued Pretraining, extend a model’s knowledge base, whether that be specific topics, internal documents, languages, or recent events past the model’s original knowledge cutoff. Stay tuned for future blogs on the benefits of continued pretraining!
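One way to check the “consistent, structured formatting” benefit is to measure how often a model’s outputs parse as the JSON you expect, before and after fine-tuning. The sketch below is a plain-Python illustration that assumes the outputs are already collected as strings and defines compliance as “a JSON object containing a given set of keys”; the sample outputs and key names are made up.

```python
import json


def format_compliance(outputs: list, required_keys: set) -> float:
    """Fraction of outputs that parse as a JSON object containing every required key."""
    if not outputs:
        return 0.0
    compliant = 0
    for text in outputs:
        try:
            parsed = json.loads(text)
        except json.JSONDecodeError:
            continue  # Not valid JSON at all.
        # Must be a JSON object (dict) and include all required keys.
        if isinstance(parsed, dict) and required_keys.issubset(parsed):
            compliant += 1
    return compliant / len(outputs)


if __name__ == "__main__":
    # Hypothetical outputs from an entity-extraction prompt.
    sample_outputs = [
        '{"name": "Acme Corp", "amount": 1200}',
        '{"name": "Beta LLC"}',
        "Sure! Here is the JSON you asked for.",
    ]
    print(format_compliance(sample_outputs, {"name", "amount"}))
```

Running the same check on a base model and its fine-tuned counterpart gives a simple, comparable number for format adherence.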

“With Databricks, we could automate tedious manual tasks by using LLMs to process a million+ records daily for extracting transaction and entity data from property records. We exceeded our accuracy goals by fine-tuning Meta Llama3 8b and using Mosaic AI Model Serving. We scaled this operation massively without the need to manage a large and expensive GPU fleet.” – Prabhu Narsina, VP Data and AI, First American

RAG and Fine-Tuning

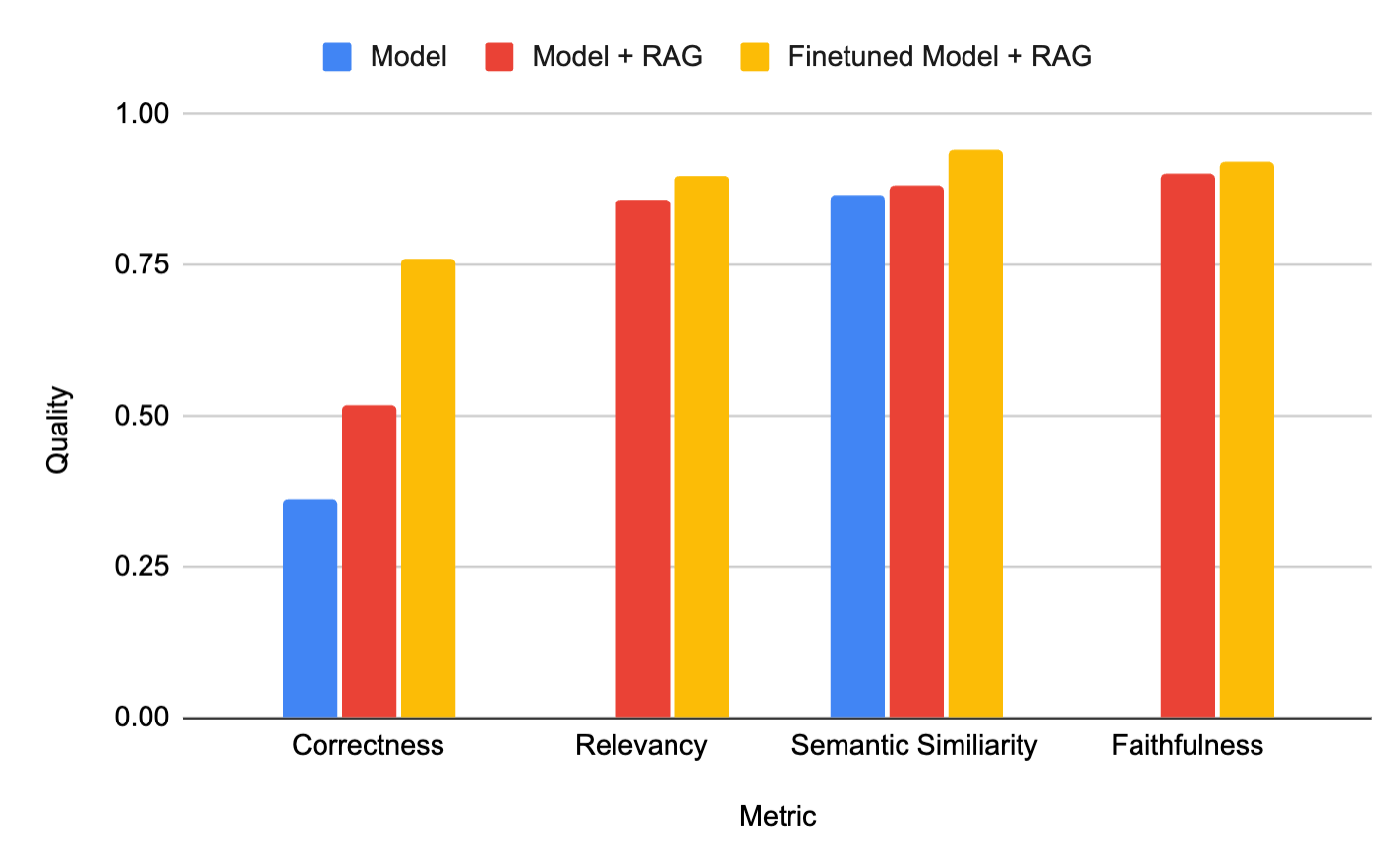

We often hear from customers: should I use RAG or fine-tune models in order to incorporate my enterprise data? With Retrieval Augmented Fine-tuning (RAFT), you can combine both! For example, our customer Celebal Tech built a high-quality, domain-specific RAG system by fine-tuning their generation model to improve summarization quality from retrieved context, reducing hallucinations and improving quality (see Figure 1).

Figure 1: Combining a fine-tuned model with RAG (yellow) produced the highest quality system for customer Celebal Tech. Adapted from their blog.
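As a rough illustration of the RAFT idea, the sketch below builds a single training record whose prompt contains retrieved context and whose target is the reference answer. It assumes a chat-style `messages` layout and borrows the practice of mixing in distractor passages from published RAFT recipes; neither the layout nor the distractor count is taken from Celebal Tech’s setup, and the helper name `build_raft_record` is hypothetical.

```python
import json
import random


def build_raft_record(question, answer, relevant_chunks, distractor_chunks,
                      num_distractors=2, seed=None):
    """Build one chat-format training record whose prompt includes retrieved context.

    Mixing in distractor passages is an assumption borrowed from published RAFT
    recipes; the intent is to teach the model to ignore irrelevant retrievals.
    """
    rng = random.Random(seed)
    k = min(num_distractors, len(distractor_chunks))
    context = list(relevant_chunks) + rng.sample(list(distractor_chunks), k)
    rng.shuffle(context)  # Avoid teaching the model that the answer is always first.
    context_block = "\n\n".join(f"[{i + 1}] {chunk}" for i, chunk in enumerate(context))
    return {
        "messages": [
            {"role": "system", "content": "Answer the question using only the provided context."},
            {"role": "user", "content": f"Context:\n{context_block}\n\nQuestion: {question}"},
            {"role": "assistant", "content": answer},
        ]
    }


if __name__ == "__main__":
    record = build_raft_record(
        question="What was Q3 revenue growth?",
        answer="Revenue grew 4% in Q3.",
        relevant_chunks=["The Q3 report states revenue rose 4%."],
        distractor_chunks=["The office moved in May.", "Headcount was flat.", "A new logo launched."],
        seed=42,
    )
    print(json.dumps(record, indent=2))
```

Each record pairs the kind of context a retriever would return with the answer you want the generation model to produce, which is what lets fine-tuning improve answers grounded in retrieved text.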

“We felt we hit a ceiling with RAG; we had to write a lot of prompts and instructions, and it was a hassle. We moved on to fine-tuning + RAG, and Mosaic AI Model Training made it so easy! It not only adapted the model for Data Linguistics and Domain, but it also reduced hallucinations and increased speed in RAG systems. After combining our Databricks fine-tuned model with our RAG system, we got a better application and accuracy while using fewer tokens.” Anurag Sharma, AVP Data Science, Celebal Technologies

Evaluation

Evaluation methods are critical to helping you iterate on model quality and base model choices during fine-tuning experiments. From visual inspection checks to LLM-as-a-Judge, we’ve designed Mosaic AI Model Training to seamlessly connect to all the other evaluation systems within Databricks:

- Prompts: Add up to 10 prompts to monitor during training. We’ll periodically log the model’s outputs to the MLflow dashboard, so you can manually check the model’s progress during training.

- Playground: Deploy the fine-tuned model and interact with it in the playground for manual prompt testing and comparisons.

- LLM-as-a-Judge: With MLflow Evaluation, use another LLM to judge your fine-tuned model on an array of existing or custom metrics.

- Notebooks: After deploying the fine-tuned model, build notebooks or custom scripts to run custom evaluation code against the endpoint.
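For the “Notebooks” route, custom evaluation code can be as simple as comparing endpoint responses to reference answers. The sketch below assumes you have already queried your serving endpoint and collected predictions as strings (the querying step is not shown), and computes exact match and a SQuAD-style token F1. These are generic metrics for short-answer tasks, chosen here for illustration rather than taken from any built-in Databricks metric set.

```python
import re
from collections import Counter


def normalize(text: str) -> list:
    """Lowercase, replace punctuation with spaces, and split into tokens."""
    return re.sub(r"[^\w\s]", " ", text.lower()).split()


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall (bag-of-words overlap)."""
    pred_tokens, ref_tokens = normalize(prediction), normalize(reference)
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def evaluate(predictions: list, references: list) -> dict:
    """Average exact-match and token-F1 scores over paired predictions and references."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not predictions:
        return {"exact_match": 0.0, "token_f1": 0.0}
    pairs = list(zip(predictions, references))
    exact = sum(normalize(p) == normalize(r) for p, r in pairs) / len(pairs)
    f1 = sum(token_f1(p, r) for p, r in pairs) / len(pairs)
    return {"exact_match": exact, "token_f1": f1}


if __name__ == "__main__":
    # Hypothetical endpoint outputs paired with hand-written references.
    preds = ["The cat sat.", "cat sat on mat"]
    refs = ["the cat sat", "the cat sat"]
    print(evaluate(preds, refs))
```

Token F1 gives partial credit where exact match gives none, which helps when a fine-tuned model paraphrases a correct answer; for more open-ended tasks, the LLM-as-a-Judge option above is usually a better fit.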

Get Started

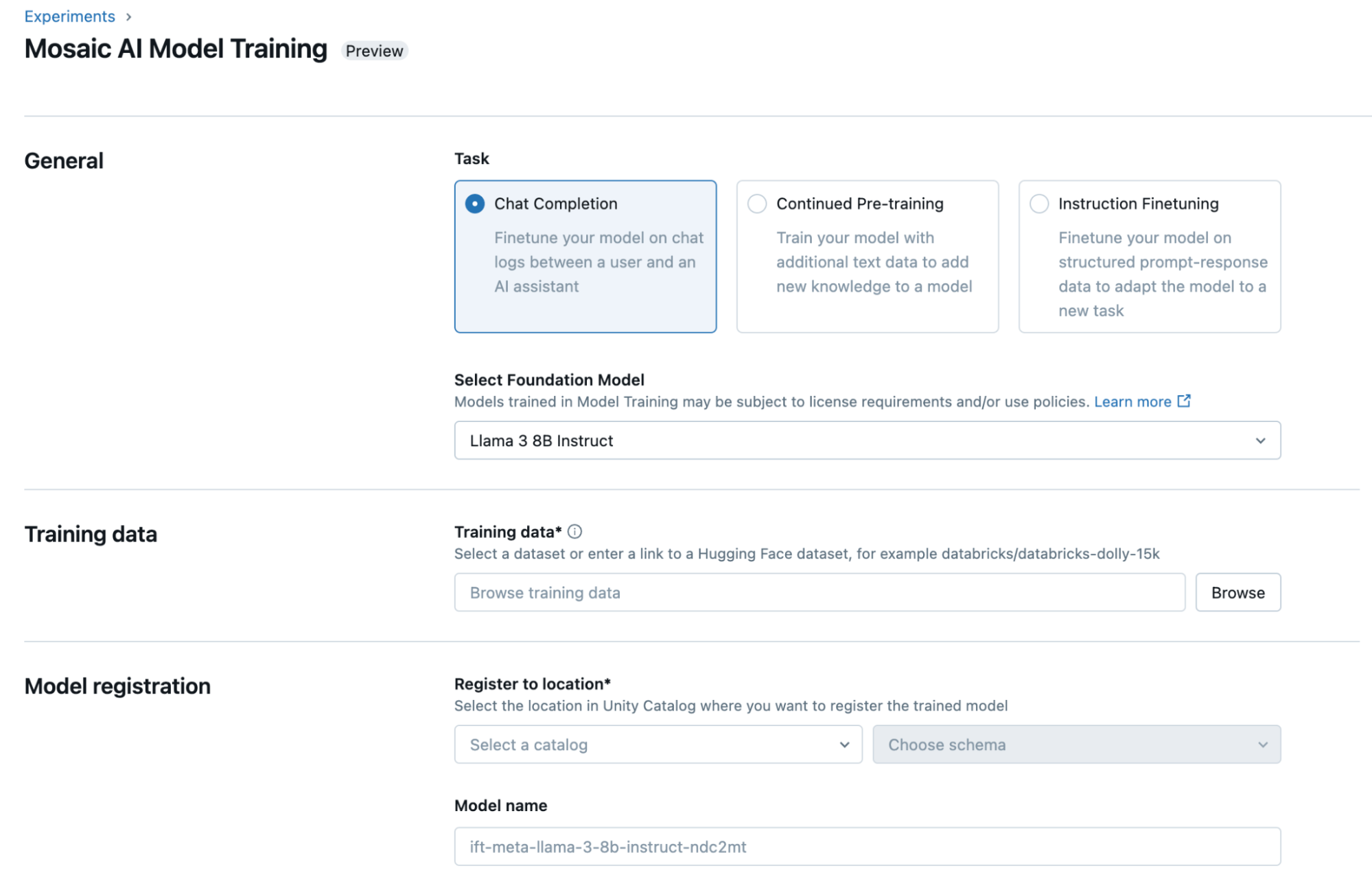

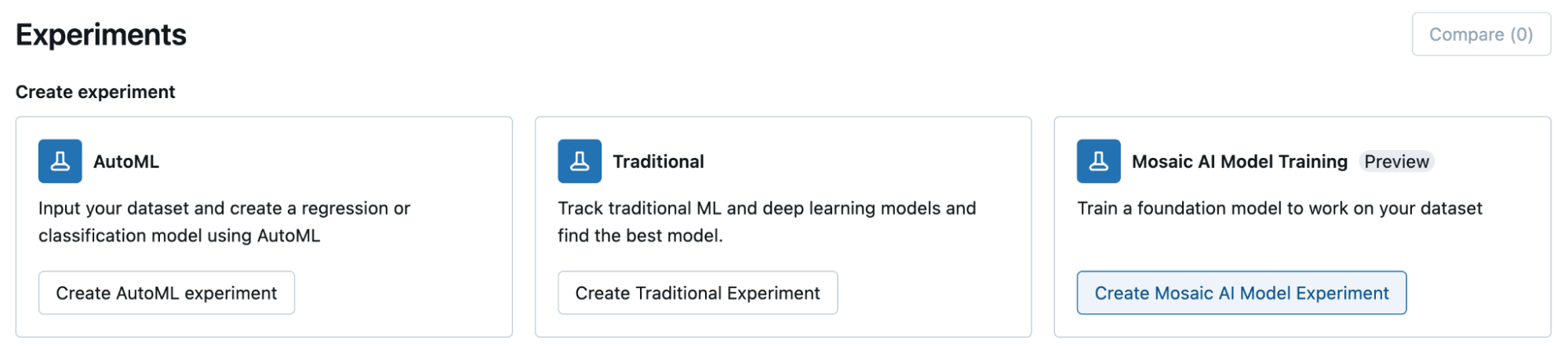

You can fine-tune your model via the Databricks UI or programmatically in Python. To get started, select the location of your training dataset in Unity Catalog or a public Hugging Face dataset, the model you want to customize, and the location to register your model for 1-click deployment.
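Before selecting a training dataset, you typically need to shape raw records into prompt/response examples. The sketch below converts rows with hypothetical `prompt` and `response` columns into JSON Lines using a chat-style `messages` layout; treat that layout as an assumption and check the Mosaic AI Model Training documentation for the exact schema it expects. It skips rows with an empty prompt or response rather than training on them.

```python
import json
from typing import Iterable, List, Optional


def to_chat_example(prompt: str, response: str, system_prompt: Optional[str] = None) -> dict:
    """Wrap one prompt/response pair in an assumed chat "messages" layout."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": prompt})
    messages.append({"role": "assistant", "content": response})
    return {"messages": messages}


def rows_to_jsonl_lines(rows: Iterable[dict], system_prompt: Optional[str] = None) -> List[str]:
    """Convert rows with hypothetical "prompt"/"response" columns to JSONL lines.

    Rows with an empty or missing prompt or response are skipped.
    """
    lines = []
    for row in rows:
        prompt = (row.get("prompt") or "").strip()
        response = (row.get("response") or "").strip()
        if not prompt or not response:
            continue
        lines.append(json.dumps(to_chat_example(prompt, response, system_prompt)))
    return lines


if __name__ == "__main__":
    raw_rows = [
        {"prompt": "Summarize: Q3 revenue rose 4%.", "response": "Revenue grew 4% in Q3."},
        {"prompt": "", "response": "orphaned answer"},  # Skipped: empty prompt.
    ]
    for line in rows_to_jsonl_lines(raw_rows, system_prompt="Be concise."):
        print(line)
```

In practice you would run the same transformation over a table on the Databricks platform and write the results to the location you point training at; the in-memory rows here just keep the example self-contained.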

- Watch our Data and AI Summit presentation on Mosaic AI Model Training

- Read our documentation (AWS, Azure) and visit our pricing page

- Try our dbdemo to quickly see how to get high-quality models with Mosaic AI Model Training

- Take our tutorial
