Database performance is a critical aspect of ensuring that a web application or service remains fast and stable. As the service scales up, there are often challenges with scaling the primary database along with it. While MongoDB is often used as a primary online database and can meet the demands of very large-scale web applications, it does often become the bottleneck as well.

I had the opportunity to operate MongoDB at scale as a primary database at Foursquare, and encountered many of these bottlenecks. When MongoDB is used as the primary online database for a heavily trafficked web application, access patterns such as joins, aggregations, and analytical queries that scan large or entire portions of a collection often cannot be run because of the adverse impact they have on performance. However, these access patterns are still required to build many application features.

We devised many strategies to deal with these situations at Foursquare. The main strategy to alleviate some of the pressure on the primary database is to offload some of the work to a secondary data store, and I will share some of the common patterns of this strategy in this blog series. In this blog we will continue to use only MongoDB, but split up the work from a single cluster to multiple clusters. In future articles I will discuss offloading to other types of systems.

Use Multiple MongoDB Clusters

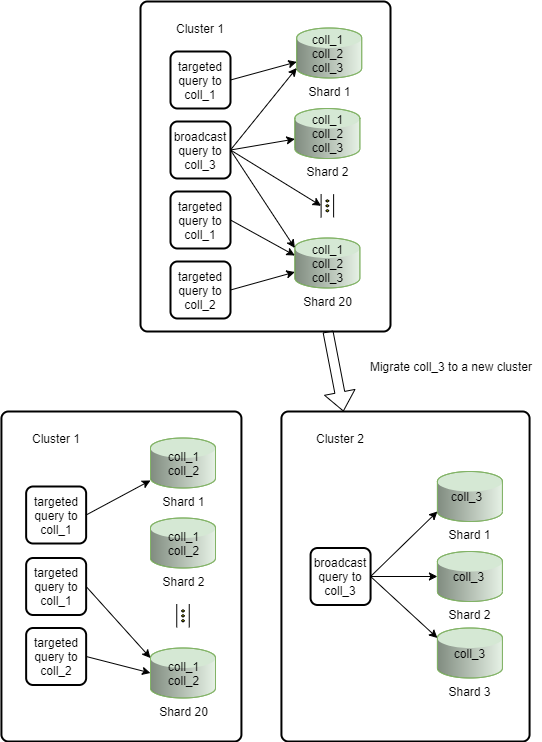

One way to get more predictable performance and isolate the impact of querying one collection from another is to split them into separate MongoDB clusters. If you are already using a service-oriented architecture, it may make sense to also create separate MongoDB clusters for each major service or group of services. This way you can limit the impact of an incident on a MongoDB cluster to just the services that need to access it. If all of your microservices share the same MongoDB backend, then they are not truly independent of each other.

Obviously, if there is new development, you can choose to start any new collections on a brand new cluster. However, you can also decide to move work currently done by existing clusters to new clusters, either by migrating a collection wholesale to another cluster or by creating new denormalized collections in a new cluster.
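As a rough sketch of what splitting by cluster can look like on the application side, the snippet below routes each collection to the cluster that owns it. The cluster names, host names, and collection names are all placeholders invented for illustration, and a real service would hand the resulting URI to its MongoDB driver.

```javascript
// Hypothetical cluster connection strings; the hosts are placeholders.
const CLUSTER_URIS = {
  core: "mongodb://core-cluster.example.internal:27017",
  search: "mongodb://search-cluster.example.internal:27017",
};

// Hypothetical ownership map: which cluster each collection lives on.
const COLLECTION_TO_CLUSTER = {
  users: "core",
  posts: "core",
  search_history: "search",
};

// Resolve the connection string for a collection, failing loudly on
// unknown collections so a typo never silently hits the wrong cluster.
function clusterUriFor(collection) {
  const cluster = COLLECTION_TO_CLUSTER[collection];
  if (cluster === undefined) {
    throw new Error(`No cluster configured for collection "${collection}"`);
  }
  return CLUSTER_URIS[cluster];
}

console.log(clusterUriFor("posts"));
console.log(clusterUriFor("search_history"));
```

Keeping the mapping in one place means that migrating a collection to a new cluster becomes a change to the map rather than to every call site.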

Migrating a Collection

The more similar the query patterns are for a particular cluster, the easier it is to optimize and predict its performance. If you have collections with very different workload characteristics, it may make sense to separate them into different clusters in order to better optimize cluster performance for each type of workload.

For example, suppose you have a widely sharded cluster where most of the queries specify the shard key, so they are targeted to a single shard. However, there is one collection where most of the queries do not specify the shard key, and thus are broadcast to all shards. Since this cluster is widely sharded, the work amplification of these broadcast queries grows with every additional shard. It may make sense to move this collection to its own cluster with many fewer shards in order to isolate the load of the broadcast queries from the other collections on the original cluster. It is also very likely that the performance of the broadcast queries will improve as a result. Lastly, separating the disparate query patterns makes it easier to reason about the performance of the cluster: when there are multiple slow query patterns, it is often not clear which ones cause the performance degradation on the cluster and which ones are slow because they are affected by it.
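To make the work amplification concrete, here is a back-of-the-envelope model. It assumes, as a simplification, that a targeted query costs one shard-level operation and a broadcast query costs one per shard. The shard counts and query volume are made-up numbers, not measurements.

```javascript
// Simplified model: a targeted query touches one shard,
// a broadcast query touches every shard in the cluster.
function shardOperations(numShards, targetedQueries, broadcastQueries) {
  return targetedQueries + broadcastQueries * numShards;
}

// The same 1,000 broadcast queries on two hypothetical clusters.
const wideCluster = shardOperations(50, 0, 1000); // 50 shards
const smallCluster = shardOperations(4, 0, 1000); // 4 shards

console.log({ wideCluster, smallCluster });
```

Under this model the identical workload generates 50,000 shard-level operations on the 50-shard cluster but only 4,000 on the 4-shard cluster, which is why moving a broadcast-heavy collection to a smaller cluster can pay off.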

Denormalization

Denormalization can be used within a single cluster to reduce the number of reads your application needs to make to the database by embedding extra information into a document that is frequently requested with it, thus avoiding the need for joins. It can also be used to split work into a completely separate cluster by creating a brand new collection with aggregated data that frequently needs to be computed.

For example, if we have an application where users can make posts about certain topics, we might have three collections:

users:

    {
      _id: ObjectId('AAAA'),
      name: 'Alice'
    },
    {
      _id: ObjectId('BBBB'),
      name: 'Bob'
    }

topics:

    {
      _id: ObjectId('CCCC'),
      name: 'cats'
    },
    {
      _id: ObjectId('DDDD'),
      name: 'dogs'
    }

posts:

    {
      _id: ObjectId('PPPP'),
      name: 'My first post - cats',
      user: ObjectId('AAAA'),
      topic: ObjectId('CCCC')
    },
    {
      _id: ObjectId('QQQQ'),
      name: 'My second post - dogs',
      user: ObjectId('AAAA'),
      topic: ObjectId('DDDD')
    },
    {
      _id: ObjectId('RRRR'),
      name: 'My first post about dogs',
      user: ObjectId('BBBB'),
      topic: ObjectId('DDDD')
    },
    {
      _id: ObjectId('SSSS'),
      name: 'My second post about dogs',
      user: ObjectId('BBBB'),
      topic: ObjectId('DDDD')
    }

Your application may want to know how many posts a user has ever made about a certain topic. If these are the only collections available, you would have to run a count on the posts collection, filtering by user and topic. This would require an index like {'topic': 1, 'user': 1} in order to perform well. Even with this index, MongoDB would still need to do an index scan of all the posts made by a user for a topic. In order to mitigate this, we can create a new collection user_topic_aggregation:

user_topic_aggregation:

    {
      _id: ObjectId('TTTT'),
      user: ObjectId('AAAA'),
      topic: ObjectId('CCCC'),
      post_count: 1,
      last_post: ObjectId('PPPP')
    },
    {
      _id: ObjectId('UUUU'),
      user: ObjectId('AAAA'),
      topic: ObjectId('DDDD'),
      post_count: 1,
      last_post: ObjectId('QQQQ')
    },
    {
      _id: ObjectId('VVVV'),
      user: ObjectId('BBBB'),
      topic: ObjectId('DDDD'),
      post_count: 2,
      last_post: ObjectId('SSSS')
    }

This collection would have an index {'topic': 1, 'user': 1}. Then we would be able to get the number of posts made by a user for a given topic by scanning only one key in an index. This new collection can then also live in a completely separate MongoDB cluster, which isolates this workload from your original cluster.
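The difference between the two read paths can be sketched in plain JavaScript, with in-memory arrays and a Map standing in for the collections and the index. This is only an illustration: the helper names are invented here, and ObjectIds are shortened to strings. In the MongoDB shell, the two reads would correspond roughly to `db.posts.countDocuments({ topic, user })` and `db.user_topic_aggregation.findOne({ topic, user })`.

```javascript
// Sample data mirroring the posts collection (ObjectIds shortened to strings).
const posts = [
  { _id: "PPPP", user: "AAAA", topic: "CCCC" },
  { _id: "QQQQ", user: "AAAA", topic: "DDDD" },
  { _id: "RRRR", user: "BBBB", topic: "DDDD" },
  { _id: "SSSS", user: "BBBB", topic: "DDDD" },
];

// Without the aggregation: every matching post has to be visited and counted.
function countPosts(allPosts, user, topic) {
  return allPosts.filter((p) => p.user === user && p.topic === topic).length;
}

// Compound key mirroring the {'topic': 1, 'user': 1} index.
const keyOf = (topic, user) => `${topic}:${user}`;

// Build one aggregation entry per (topic, user) pair.
// Assumes posts are iterated oldest-first, so the last one seen is the latest.
function buildUserTopicAggregation(allPosts) {
  const aggregation = new Map();
  for (const post of allPosts) {
    const key = keyOf(post.topic, post.user);
    const entry = aggregation.get(key) ?? {
      user: post.user,
      topic: post.topic,
      post_count: 0,
      last_post: null,
    };
    entry.post_count += 1;
    entry.last_post = post._id;
    aggregation.set(key, entry);
  }
  return aggregation;
}

const aggregation = buildUserTopicAggregation(posts);
const bobDogs = aggregation.get(keyOf("DDDD", "BBBB")); // single-key lookup

console.log(countPosts(posts, "BBBB", "DDDD"), bobDogs.post_count, bobDogs.last_post);
```

Both paths return the same count for Bob's posts about dogs, but the second one reads a single precomputed entry no matter how many posts exist.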

What if we also wanted to know the last time a user made a post for a certain topic? This is a query that MongoDB struggles to answer. You can make use of the new aggregation collection and store the ObjectId of the last post for a given user/topic edge, which then lets you easily find the answer by running the ObjectId.getTimestamp() function on the ObjectId of the last post.
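This works because the first four bytes of an ObjectId encode its creation time in seconds since the Unix epoch. The sketch below reproduces that decoding in plain JavaScript without a driver. The function name is made up for illustration, and the sample id is just an example value, not one from the collections above.

```javascript
// Decode the creation time embedded in a 24-character hex ObjectId string.
// The first 8 hex characters (4 bytes) are seconds since the Unix epoch.
function objectIdToDate(hexId) {
  if (!/^[0-9a-fA-F]{24}$/.test(hexId)) {
    throw new Error("Expected a 24-character hex string");
  }
  const seconds = parseInt(hexId.slice(0, 8), 16);
  return new Date(seconds * 1000);
}

// Example id; in practice this would be the last_post value
// read from the aggregation collection.
const lastPostTime = objectIdToDate("507f1f77bcf86cd799439011");
console.log(lastPostTime.toISOString()); // 2012-10-17T21:13:27.000Z
```

Note that the resolution is one second, and the timestamp reflects when the ObjectId was generated, which is normally when the post was inserted.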

The tradeoff to doing this is that when making a new post, you need to update two collections instead of one, and it cannot be done in a single atomic operation. This also means the denormalized data in the aggregation collection can become inconsistent with the data in the original collections. There would then need to be a mechanism to detect and correct these inconsistencies.
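One possible shape for such a mechanism is a periodic reconciliation job that recomputes the aggregates from the posts and overwrites any entries that disagree. The sketch below simulates this in memory. The store layout, the function names, and the simulated failure flag are all assumptions made for illustration, not a description of how this was done at Foursquare.

```javascript
const keyOf = (topic, user) => `${topic}:${user}`;

// Two separate writes: the post itself, then the aggregation entry.
// simulateFailure models a crash between the two writes.
function createPost(store, post, simulateFailure = false) {
  store.posts.push(post); // write 1: the source of truth
  if (simulateFailure) return;
  const key = keyOf(post.topic, post.user);
  const entry = store.aggregation.get(key) ?? { post_count: 0, last_post: null };
  entry.post_count += 1;
  entry.last_post = post._id;
  store.aggregation.set(key, entry); // write 2: the denormalized copy
}

// Recompute the aggregates from posts and report entries that disagree.
// Aggregation entries with no posts at all are not checked in this sketch.
function findInconsistencies(store) {
  const expectedByKey = new Map();
  for (const post of store.posts) {
    const key = keyOf(post.topic, post.user);
    const entry = expectedByKey.get(key) ?? { post_count: 0, last_post: null };
    entry.post_count += 1;
    entry.last_post = post._id;
    expectedByKey.set(key, entry);
  }
  const mismatches = [];
  for (const [key, expected] of expectedByKey) {
    const stored = store.aggregation.get(key);
    if (
      !stored ||
      stored.post_count !== expected.post_count ||
      stored.last_post !== expected.last_post
    ) {
      mismatches.push({ key, stored: stored ?? null, expected });
    }
  }
  return mismatches;
}

// Overwrite every inconsistent aggregation entry with the recomputed value.
function repair(store) {
  for (const { key, expected } of findInconsistencies(store)) {
    store.aggregation.set(key, { ...expected });
  }
}

const store = { posts: [], aggregation: new Map() };
createPost(store, { _id: "RRRR", user: "BBBB", topic: "DDDD" });
createPost(store, { _id: "SSSS", user: "BBBB", topic: "DDDD" }, true); // second write lost

const before = findInconsistencies(store);
repair(store);
const after = findInconsistencies(store);
console.log(before.length, after.length);
```

A full recomputation like this is itself a large scan, so in practice it would likely run off-peak or against a limited window of recent posts.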

It only makes sense to denormalize data like this if the ratio of reads to updates is high, and it is acceptable for your application to sometimes read inconsistent data. If you will be reading the denormalized data frequently, but updating it much less frequently, then it makes sense to incur the cost of more expensive and complex updates.

Abstract

As your usage of your primary MongoDB cluster grows, carefully splitting the workload among multiple MongoDB clusters can help you overcome scaling bottlenecks. It can help isolate your microservices from database failures, and also improve the performance of queries with disparate patterns. In subsequent blogs, I will talk about using systems other than MongoDB as secondary data stores to enable query patterns that are not possible to run on your primary MongoDB cluster(s).
