[ad_1]

(Anders78/Shutterstock)

The appearance of generative AI has supercharged the world’s urge for food for knowledge, particularly high-quality knowledge of recognized provenance. Nevertheless, as giant language fashions (LLMs) get larger, consultants are warning that we could also be working out of knowledge to coach them.

One of many large shifts that occurred with transformer fashions, which have been invented by Google in 2017, is using unsupervised studying. As a substitute of coaching an AI mannequin in a supervised vogue atop smaller quantities of upper high quality, human-curated knowledge, using unsupervised coaching with transformer fashions opened AI as much as the huge quantities of knowledge of variable high quality on the Internet.

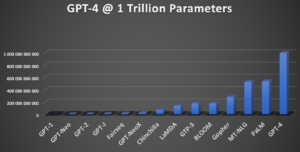

As pre-trained LLMs have gotten larger and extra succesful over time, they’ve required larger and extra elaborate coaching units. As an example, when OpenAI launched its unique GPT-1 mannequin in 2018, the mannequin had about 115 million parameters and was skilled on BookCorpus, which is a set of about 7,000 unpublished books comprising about 4.5 GB of textual content.

GPT-2, which OpenAI launched in 2019, represented a direct 10x scale-up of GPT-1. The parameter depend expanded to 1.5 billion and the coaching knowledge expanded to about 40GB through the corporate’s use of WebText, a novel coaching set it created based mostly on scraped hyperlinks from Reddit customers. WebText contained about 600 billion phrases and weighed in round 40GB.

LLM development by variety of parameters (Picture courtesy Corus Greyling, HumanFirst)

With GPT-3, OpenAI expanded its parameter depend to 175 billion. The mannequin, which debuted in 2020, was pre-trained on 570 GB of textual content culled from open sources, together with BookCorpus (Book1 and Book2), Widespread Crawl, Wikipedia, and WebText2. All instructed, it amounted to about 499 billion tokens.

Whereas official measurement and coaching set particulars are scant for GPT-4, which OpenAI debuted in 2023, estimates peg the dimensions of the LLM at someplace between 1 trillion and 1.8 trillion, which might make it 5 to 10 instances larger than GPT-3. The coaching set, in the meantime, has been reported to be 13 trillion tokens (roughly 10 trillion phrases).

Because the AI fashions get larger, the AI mannequin makers have scoured the Internet for brand spanking new sources of knowledge to coach them. Nevertheless, that’s getting tougher, because the creators and collectors of Internet knowledge have more and more imposed restrictions on using knowledge for coaching AI.

Dario Amodei, the CEO of Anthropic, not too long ago estimated there’s a ten% probability that we might run out of sufficient knowledge to proceed scaling fashions.

“…[W]e might run out of knowledge,” Amodei instructed Dwarkesh Patel in a latest interview. “For varied causes, I feel that’s not going to occur however in the event you have a look at it very naively we’re not that removed from working out of knowledge.”

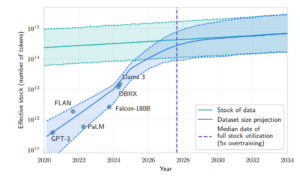

We are going to quickly burn up all novel human textual content knowledge for LLM coaching, researchers say (Will we run out of knowledge? Limits of LLM scaling based mostly on human-generated knowledge”)

This matter was additionally taken up in a latest paper titled “Will we run out of knowledge? Limits of LLM scaling based mostly on human-generated knowledge,” the place researchers recommend that the present tempo of LLM improvement on human-based knowledge isn’t sustainable.

At present charges of scaling, an LLM that’s skilled on all accessible human textual content knowledge can be created between 2026 and 2032, they wrote. In different phrases, we might run out of contemporary knowledge that no LLM has seen in lower than two years.

“Nevertheless, after accounting for regular enhancements in knowledge effectivity and the promise of strategies like switch studying and artificial knowledge technology, it’s doubtless that we are going to be

in a position to overcome this bottleneck within the availability of public

human textual content knowledge,” the researchers write.

In a brand new paper from the Knowledge Provenance Initiative titled “Consent in Disaster: The Fast Decline of the AI Knowledge Commons” (pdf), researchers affiliated with the Massachusetts Institute of Know-how analyzed 14,000 web sites to find out to what extent web site operators are making their knowledge “crawlable” by automated knowledge harvesters, similar to these utilized by Widespread Crawl, the biggest publicly accessible crawl of the Web.

Their conclusion: A lot of the information more and more is off-limits to Internet crawlers, both by coverage or technological incompatibility. What’s extra, the phrases of use dictating how web site operators’ enable their knowledge for use more and more don’t mesh with what web sites truly enable by way of their robotic.txt information, which comprise guidelines that block entry to content material.

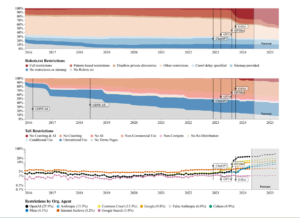

Web site operators are placing restrictions on knowledge harvesting (Courtesy “Consent in Disaster: The Fast Decline of the AI Knowledge Commons”)

“We observe a proliferation of AI-specific clauses to restrict use, acute variations in restrictions on AI builders, in addition to basic inconsistencies between web sites’ expressed intentions of their Phrases of Service and their robots.txt,” the Knowledge Provenance Initiative researchers wrote. “We diagnose these as signs of ineffective net protocols, not designed to deal with the widespread re-purposing of the web for AI.”

Widespread Crawl has been recording the Web since 2007, and right this moment consists of greater than 250 billion Internet pages. The repository is free and open for anybody to make use of, and grows by 3 billion to five billion new pages per thirty days. Teams like C4, RefinedWeb, and Dolma, which have been analyzed by the MIT researchers, provide cleaned up variations of the information in Widespread Crawl.

The Knowledge Provenance Initiative researchers discovered that, since OpenAI’s ChatGPT exploded onto the scene in late 2022, many web sites have imposed restrictions on crawling for the aim of harvesting knowledge. At present charges, almost 50% of internet sites are projected to have full or partial restrictions by 2025, the researchers conclude. Equally, restrictions have additionally been imposed on web site phrases of service (ToS), with the share of internet sites with no restrictions dropping from about 50% in 2023 to about 40% by 2025.

The Knowledge Provenance Initiative researchers discover that crawlers from OpenAI are restricted essentially the most typically, about 26% of the time, adopted by crawlers from Anthropic and Widespread Crawl (about 13%), Google’s AI crawler (about 10%), Cohere (about 5%), and Meta (about 4%).

Patrick Collison interviews OpenAI CEO Sam Altman

The Web was not created to offer knowledge for coaching AI fashions, the researchers write. Whereas bigger web sites are in a position to implement refined consent controls that enable them to reveal some knowledge units with full provenance whereas retricting others, many smaller web sites operators don’t have the sources to implement such programs, which suggests they’re hiding all of their content material behind paywalls, the researchers write. That stops AI corporations from attending to it, however it additionally prevents that knowledge from getting used for extra reputable makes use of, similar to educational analysis, taking us farther from the Web’s open beginnings.

“If we don’t develop higher mechanisms to provide web site house owners management over how their knowledge is used, we must always count on to see additional decreases within the open net,” the Knowledge Provenance Initiative researchers write.

AI giants have not too long ago began to look to different sources for knowledge to coach their fashions, together with big collections of movies posted to the Web. As an example, a dataset referred to as YouTube Subtitles, which is a part of bigger, open-source knowledge set created by EleutherAI referred to as the Pile, is being utilized by corporations like Apple, Nvidia, and Anthropic to coach AI fashions.

The transfer has angered some smaller content material creators, who say they by no means agreed to have their copyrighted work used to coach AI fashions and haven’t been compensated as such. What’s extra, they’ve expressed concern that their content material could also be used to coach generative fashions that create content material that competes with their very own content material.

The AI corporations are conscious of the looming knowledge dam, however they’ve potentials workarounds already within the works. OpenAI CEO Sam Altman acknowledged the state of affairs in a latest interview with Irish entrepreneur Patrick Collison.

“So long as you will get over the artificial knowledge occasion horizon the place the mannequin is sensible sufficient to create artificial knowledge, I feel it will likely be alright,” Altman stated. “We do want new strategies for positive. I don’t need to fake in any other case in any manner. However the naïve plan of scaling up a transformer with pre-trained tokens from the Web–that may run out. However that’s not the plan.”

Associated Gadgets:

Are Tech Giants ‘Piling’ On Small Content material Creators to Prepare Their AI?

Anger Builds Over Massive Tech’s Massive Knowledge Abuses

curation, knowledge provenance, GenAI, human knowledge, LLM, provenance, artificial knowledge, textual content knowledge, coaching knowledge, coaching dataset, transformer mannequin

[ad_2]