
Apache Kafka has seen broad adoption as the streaming platform of choice for building applications that react to streams of data in real time. In many organizations, Kafka is the foundational platform for real-time event analytics, acting as a central location for collecting event data and making it available in real time.

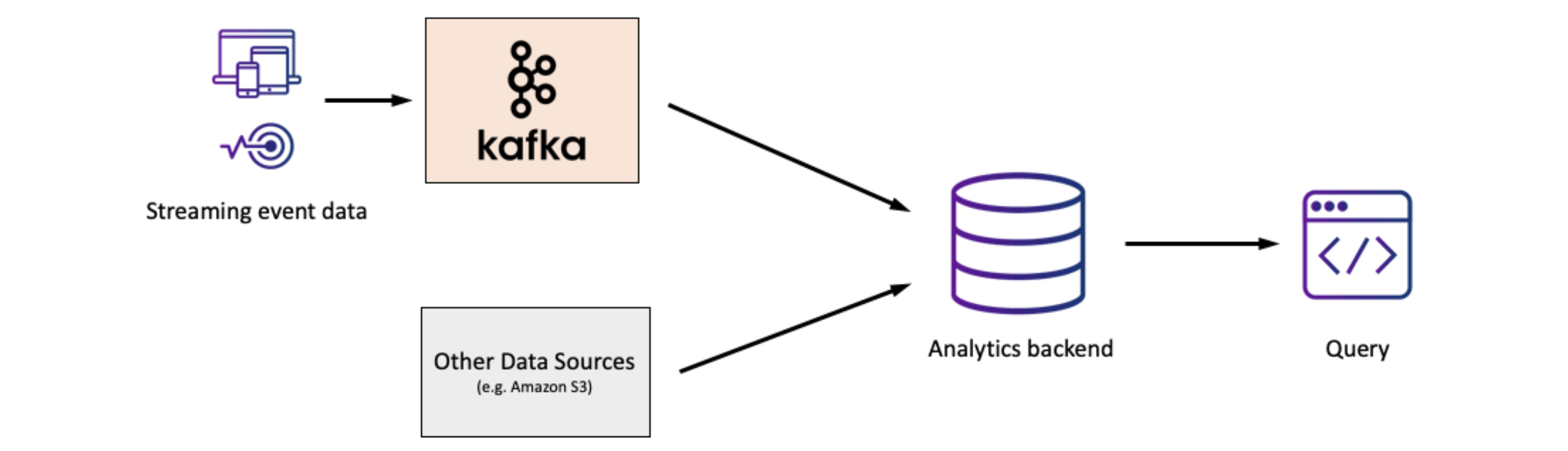

While Kafka has become the standard for event streaming, we often need to analyze and build useful applications on Kafka data to unlock the most value from event streams. In one e-commerce example, Fynd analyzes clickstream data in Kafka to understand what's happening in the business over the past few minutes. In the virtual reality space, a provider of on-demand VR experiences decides what content to offer based on large volumes of user behavior data generated in real time and processed by Kafka. So how should organizations think about implementing analytics on data from Kafka?

Considerations for Real-Time Event Analytics with Kafka

When selecting an analytics stack for Kafka data, we can break down key considerations along several dimensions:

- Data Latency

- Query Complexity

- Columns with Mixed Types

- Query Latency

- Query Volume

- Operations

Data Latency

How up to date is the data being queried? Keep in mind that complex ETL processes can add minutes to hours before the data is available to query. If the use case doesn't require the freshest data, then it may be sufficient to use a data warehouse or data lake to store Kafka data for analysis.

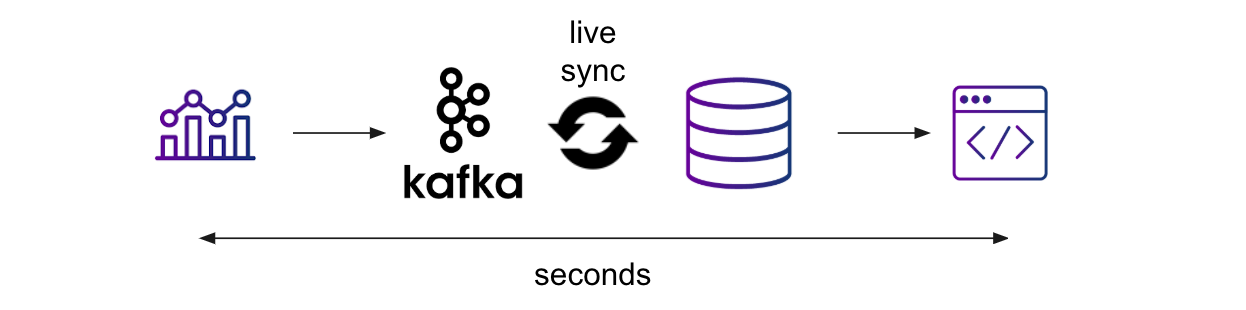

However, Kafka is a real-time streaming platform, so business requirements often call for a real-time database that provides fast ingestion and a continuous sync of new data, so that the latest data can be queried. Ideally, data should be available for query within seconds of the event occurring in order to support real-time applications on event streams.
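Data latency can be made concrete as the gap between when an event occurs and when it becomes queryable. The minimal Python sketch below assumes each record carries a producer-side event timestamp and a hypothetical `queryable_time` recorded when the sink makes the record visible; the two pipelines and their numbers are illustrative, not measurements.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Event:
    # Hypothetical fields, both in seconds since some common epoch.
    event_time: float      # when the event happened (set by the producer)
    queryable_time: float  # when the sink made it visible to queries


def data_latency_seconds(events):
    """Return (median, max) lag between an event occurring and being queryable."""
    lags = [e.queryable_time - e.event_time for e in events]
    return median(lags), max(lags)


# Illustrative: a continuously syncing store vs. an hourly batch ETL job.
streaming = [Event(0.0, 1.5), Event(10.0, 12.0), Event(20.0, 20.8)]
hourly_batch = [Event(0.0, 3600.0), Event(10.0, 3600.0), Event(20.0, 3600.0)]

print(data_latency_seconds(streaming))     # (1.5, 2.0)
print(data_latency_seconds(hourly_batch))  # (3590.0, 3600.0)
```

Tracking the maximum alongside the median matters because a batch pipeline's worst-case staleness is bounded by its schedule, not by how fast an individual load runs.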

Query Complexity

Does the application require complex queries, like joins, aggregations, sorting, and filtering? If the application requires complex analytic queries, then support for a more expressive query language, like SQL, would be desirable.

Note that in many cases, streams are most useful when joined with other data, so do consider whether the ability to perform joins efficiently is important for the use case.
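As an illustration of the kind of query this implies, the sketch below joins a stream of clicks with a product dimension table, filters to a recent time window, aggregates, and sorts. It uses Python's built-in `sqlite3` purely as a stand-in SQL engine; the `clicks` and `products` tables, their columns, and the 300-second window are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE clicks (ts INTEGER, user_id TEXT, product_id INTEGER);  -- events from Kafka
CREATE TABLE products (product_id INTEGER PRIMARY KEY, category TEXT);  -- reference data
""")
conn.executemany("INSERT INTO products VALUES (?, ?)",
                 [(1, "shoes"), (2, "shirts"), (3, "hats")])
conn.executemany("INSERT INTO clicks VALUES (?, ?, ?)", [
    (1000, "u1", 1), (1010, "u2", 1), (1020, "u1", 2),
    (1030, "u3", 3),
    (400, "u4", 2),  # older click, falls outside the window
])

now = 1060
window_seconds = 300  # "the past few minutes"

# Join + filter + aggregate + sort in one declarative query.
rows = conn.execute("""
    SELECT p.category,
           COUNT(*) AS n_clicks,
           COUNT(DISTINCT c.user_id) AS n_users
    FROM clicks AS c
    JOIN products AS p ON p.product_id = c.product_id
    WHERE c.ts >= ?
    GROUP BY p.category
    ORDER BY n_clicks DESC, p.category
""", (now - window_seconds,)).fetchall()

print(rows)  # [('shoes', 2, 2), ('hats', 1, 1), ('shirts', 1, 1)]
```

Expressing the same logic without SQL would mean hand-writing the lookup, windowing, and grouping code for every new question, which is the practical argument for an expressive query language.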

Columns with Mixed Types

Does the data conform to a well-defined schema, or is the data inherently messy? If the data fits a schema that doesn't change over time, it may be possible to maintain a data pipeline that loads it into a relational database, with the caveat mentioned above that data pipelines add data latency.

If the data is messier, with values of different types in the same column for instance, then it may be preferable to select a Kafka sink that can ingest the data as is, without requiring data cleaning at write time, while still allowing the data to be queried.
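A rough way to picture "ingest as is, query anyway" is a store that keeps raw documents untouched and resolves types only when a query reads them. The plain-Python sketch below assumes hypothetical JSON message values whose `price` field arrives as a number, a numeric string, or null; it illustrates the idea and does not describe how any particular database handles mixed types.

```python
import json

# Hypothetical raw Kafka message values: "price" has mixed types.
raw_messages = [
    b'{"order_id": 1, "price": 19.99}',
    b'{"order_id": 2, "price": "24.50"}',
    b'{"order_id": 3, "price": null}',
    b'{"order_id": 4, "price": 5}',
]

# Write path: store documents as-is, with no schema enforcement or cleaning.
store = [json.loads(m) for m in raw_messages]


def price_as_float(doc):
    """Read path: interpret 'price' at query time, tolerating mixed types."""
    value = doc.get("price")
    if isinstance(value, bool):  # JSON true/false is not a price
        return None
    if isinstance(value, (int, float)):
        return float(value)
    if isinstance(value, str):
        try:
            return float(value)
        except ValueError:
            return None
    return None  # null or missing


def total_revenue(docs):
    """Sum every price that can be interpreted as a number."""
    prices = (price_as_float(d) for d in docs)
    return round(sum(p for p in prices if p is not None), 2)


print(total_revenue(store))  # 49.49
```

The trade-off is that type handling moves from the pipeline to the read path, so no message is rejected or delayed at ingest time.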

Query Latency

While data latency is a question of how fresh the data is, query latency refers to the speed of individual queries. Are fast queries required to power real-time applications and live dashboards? Or is query latency less critical because offline reporting is sufficient for the use case?

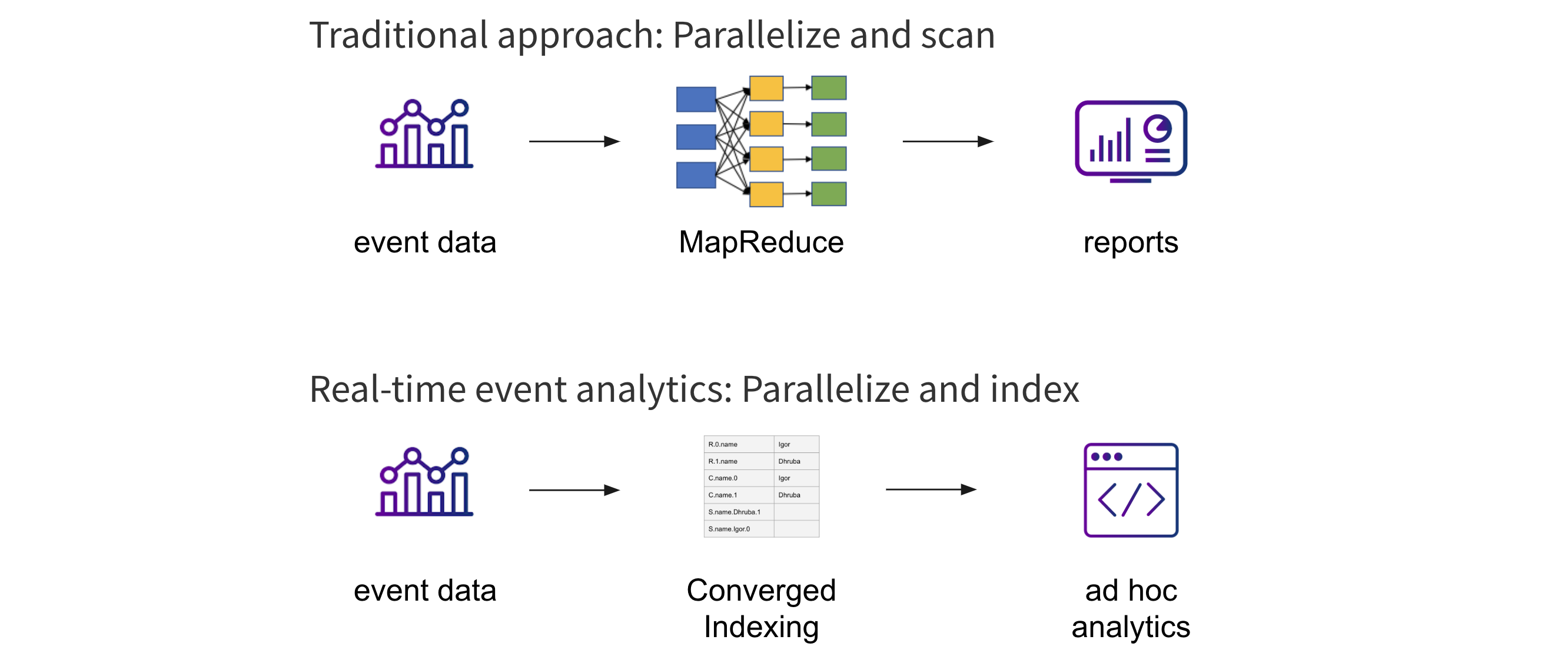

The traditional approach to analytics on large data sets involves parallelizing and scanning the data, which may suffice for less latency-sensitive use cases. However, to meet the performance requirements of real-time applications, it is better to consider approaches that parallelize and index the data instead, to enable low-latency ad hoc queries and drilldowns.
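The difference between scanning and indexing can be sketched in a few lines. This single-process Python example shows only the indexing half of the argument (parallelism is omitted): a full scan touches every event on every query, while an index built at ingest time lets a query touch only the matching events. The event fields and sizes are hypothetical.

```python
from collections import defaultdict

# Hypothetical events: 100,000 actions spread across 1,000 users.
events = [
    {"user_id": f"u{i % 1000}", "action": "click" if i % 3 else "purchase"}
    for i in range(100_000)
]


def scan(events, user_id):
    """Full scan: O(n) work per query, regardless of how many rows match."""
    return [e for e in events if e["user_id"] == user_id]


def build_index(events):
    """Built once at ingest time: user_id -> positions of matching events."""
    index = defaultdict(list)
    for pos, e in enumerate(events):
        index[e["user_id"]].append(pos)
    return index


def indexed_lookup(events, index, user_id):
    """Indexed query: work proportional to the number of matches only."""
    return [events[pos] for pos in index.get(user_id, [])]


index = build_index(events)

# Same answer, very different amount of work per query.
assert scan(events, "u42") == indexed_lookup(events, index, "u42")
print(len(indexed_lookup(events, index, "u42")))  # 100
```

The index costs extra work and storage at write time, which is why it pays off mainly when many low-latency, selective queries hit the same data.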

Query Volume

Does the architecture need to support large numbers of concurrent queries? If the use case requires on the order of 10-50 concurrent queries, as is common with reporting and BI, it may suffice to ETL the Kafka data into a data warehouse to handle these queries.

Many modern data applications need much higher query concurrency. If we're presenting product recommendations in an e-commerce scenario or deciding what content to serve on a streaming service, then we can imagine thousands of concurrent queries, or more, on the system. In these cases, a real-time analytics database would be the better choice.

Operations

Is the analytics stack going to be painful to manage? Unless it is already being run as a managed service, Kafka represents one distributed system that has to be managed. Adding yet another system for analytics increases the operational burden.

This is where fully managed cloud services can help make real-time analytics on Kafka much more manageable, especially for smaller data teams. Look for solutions that don't require server or database administration and that scale seamlessly to handle variable query or ingest demands. Using a managed Kafka service can also help simplify operations.

Conclusion

Building real-time analytics on Kafka event streams involves careful consideration of each of these factors to ensure the capabilities of the analytics stack meet the requirements of your application and engineering team. Elasticsearch, Druid, Postgres, and Rockset are commonly used as real-time databases to serve analytics on data from Kafka, and you should weigh your requirements, across the axes above, against what each solution provides.

For more information on this topic, do check out this related tech talk where we go through these considerations in greater detail: Best Practices for Analyzing Kafka Event Streams.
