[ad_1]

All of the code is on the market in this GitHub repository.

Introduction

Synchronizing information from exterior relational databases like Oracle, MySQL, or an information warehouse into the Databricks Information Intelligence Platform is a typical use case. Databricks gives a number of approaches starting from LakeFlow Join’s easy and environment friendly ingestion connectors to Delta Dwell Tables’ (DLT) flexibility with APPLY CHANGES INTO assertion, which accepts change information seize (CDC) enter datasets. Beforehand, in “Simplifying Change Information Seize with Databricks Delta Dwell Tables“, we described how DLT pipelines allow you to develop scalable, dependable, and low-latency information pipelines to carry out CDC processing in your information lake with the minimal required computation assets and automated out-of-order information dealing with.

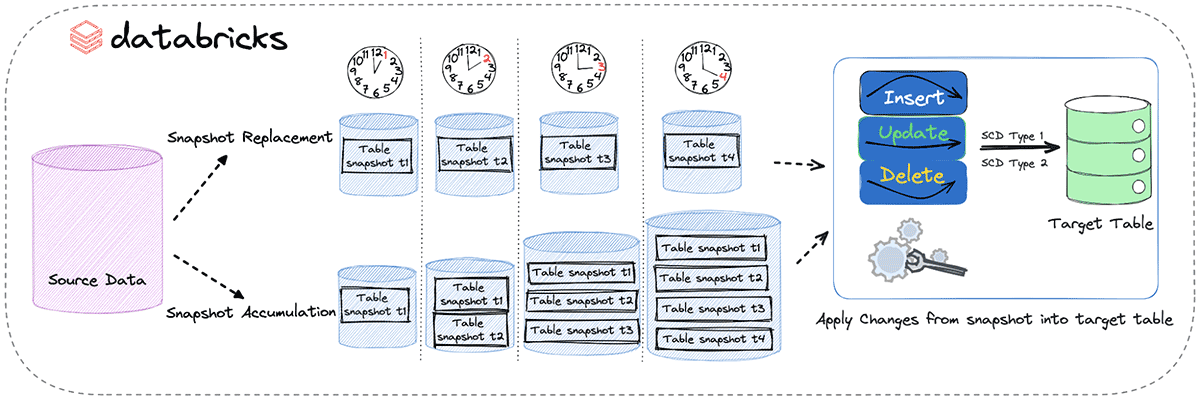

Nevertheless, whereas LakeFlow Join and DLT APPLY CHANGES INTO work seamlessly with databases that may present a change information feed (CDF) to stream adjustments from, there are environments and methods the place a CDF stream will not be accessible. For these sources, you may evaluate snapshots to determine adjustments and course of them. On this weblog, we’ll present you learn how to implement SCD Sort 1 and SCD Sort 2 in Databricks Delta Dwell Tables utilizing desk snapshots.

Understanding Slowly Altering Dimensions

Slowly altering dimensions (SCD) refers back to the unpredictable and sporadic change of knowledge throughout sure dimensions over time. These adjustments may result from correcting an error within the information or can signify a real replace and worth change in that specific dimension, equivalent to buyer location info or product element info. A basic instance is when a buyer strikes and adjustments their tackle.

When working with information, it’s essential to make sure that adjustments are precisely mirrored with out compromising information consistency. The choice to overwrite outdated values with new ones or seize adjustments whereas maintaining historic information can considerably influence your information pipelines and enterprise processes. This determination relies upon closely in your particular enterprise necessities. To deal with completely different use instances, there are numerous forms of Slowly Altering Dimensions (SCD). This weblog will concentrate on the 2 most typical ones: SCD Sort 1, the place the dimension is overwritten with new information, and SCD Sort 2, the place each new and outdated information are maintained over time.

What are snapshots and why do they matter?

Snapshots signify a secure view of the information at a selected time limit and may be explicitly or implicitly timestamped at a desk or file degree. These timestamps enable the upkeep of temporal information. A sequence of snapshots over time can present a complete view of the enterprise’s historical past.

With out monitoring the historical past of information, any analytical report constructed on outdated information will probably be inaccurate and may be deceptive for the enterprise. Thus, monitoring the adjustments in dimensions precisely is essential in any information warehouse. Whereas these adjustments are unpredictable, evaluating snapshots makes it simple to trace adjustments over time so we will make correct stories based mostly on the freshest information.

Environment friendly Methods for RDBMS Desk Snapshots Administration: Push vs. Pull

Push-Primarily based Snapshots: Direct and Environment friendly

The Push-Primarily based method includes instantly copying your entire content material of a desk and storing this copy in one other location. This technique may be carried out utilizing database vendor-specific desk replication or bulk operations. The important thing benefit right here is its directness and effectivity. You, because the consumer, provoke the method, leading to an instantaneous and full replication of the information.

Pull-Primarily based Snapshots: Versatile however Useful resource-Intensive

However, the Pull-Primarily based method requires you to question the supply desk to retrieve its complete content material. That is sometimes carried out over a JDBC connection from Databricks, and the retrieved information is then saved as a snapshot. Whereas this technique gives extra flexibility by way of when and the way information is pulled, it may be costly and won’t scale effectively with very massive desk sizes.

On the subject of dealing with a number of variations of those snapshots, there are two major methods:

Snapshot Alternative Method (Method 1): This technique is about sustaining solely the most recent model of a snapshot. When a brand new snapshot turns into accessible, it replaces the outdated one. This method is good for situations the place solely probably the most present information snapshot is related, lowering storage prices and simplifying information administration.

Snapshot Accumulation Method (Method 2): Opposite to the Alternative Method, right here you retain a number of variations of desk snapshots. Every snapshot is saved at a singular path, permitting for historic information evaluation and monitoring adjustments over time. Whereas this technique offers a richer historic context, it calls for extra storage and a extra advanced system administration.

Introduction to Delta Dwell Tables Apply Modifications From Snapshot

DLT has a functionality known as “APPLY CHANGES FROM SNAPSHOT“, which permits information to be learn incrementally from a sequence of full snapshots. Full snapshot consists of all information and their corresponding states, providing a complete view of the information because it exists at that second. Utilizing APPLY CHANGES FROM SNAPSHOT assertion now you can seamlessly synchronize exterior RDBMS sources into the Databricks platform utilizing full snapshots of the supply databases.

APPLY CHANGES FROM SNAPSHOT gives a easy, declarative syntax to effectively decide the adjustments made to the supply information by evaluating a sequence of in-order snapshots whereas permitting customers to simply declare their CDC logic and observe historical past as SCD sorts 1 or 2.

Earlier than we dive deeper and undergo an instance utilizing this new characteristic, let’s take a look at the necessities and notes a consumer ought to assessment earlier than leveraging this new functionality in DLT:

- This characteristic solely helps Python.

- The characteristic is supported on serverless DLT pipelines, and on the non-serverless DLT pipeline with Professional and Superior product editions,

- Snapshots handed into the assertion should be in ascending order by their model.

- The snapshot model parameter within the APPLY CHANGES FROM SNAPSHOT assertion should be a sortable information sort (e.g. string and quantity sorts).

- Each SCD Sort 1 and SCD Sort 2 strategies are supported.

Following this weblog you may leverage the APPLY CHANGES FROM SNAPSHOT assertion and implement both the snapshot substitute or accumulation method in each the Hive Metastore and Unity Catalog environments.

Outline your supply desk

Let’s discover this idea utilizing on-line purchasing for example. If you store on-line, merchandise costs can fluctuate resulting from provide and demand adjustments. Your order goes via phases earlier than supply, and also you may return and reorder objects at decrease costs. Retailers profit from monitoring this information. It helps them handle stock, meet buyer expectations, and align with gross sales targets.

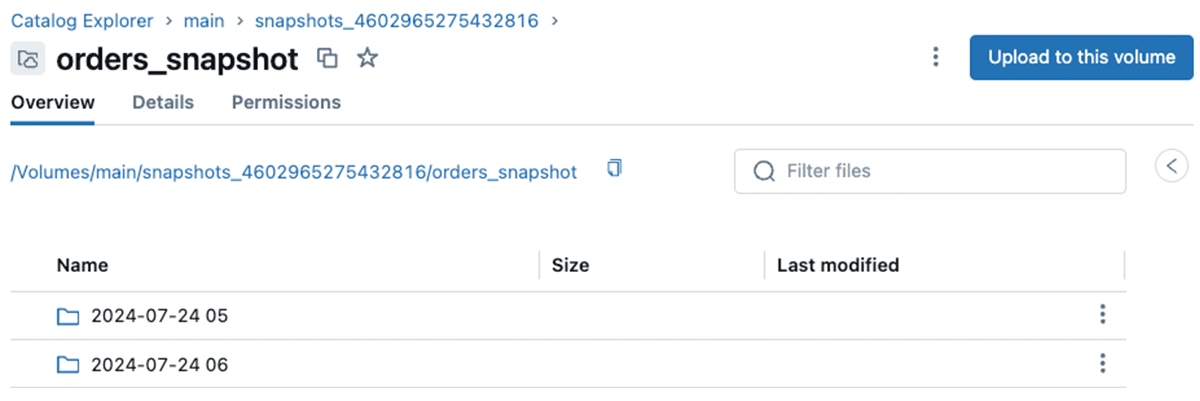

To showcase the web purchasing instance utilizing the primary method (snapshot substitute method), we’ll use the complete snapshot information saved within the storage location, and as quickly as a brand new full snapshot turns into accessible, we’ll substitute the present snapshot with the brand new one. For the second method (snapshot accumulation method), we’ll depend on the hourly full information snapshots. As every new snapshot turns into accessible, we write the newly arrived information to the storage location storing all the present snapshots. Snapshots of knowledge load frequency may be set to no matter frequency is required for processing snapshots. You may have to course of the snapshots kind of incessantly. Right here for simplicity, we decide the hourly full snapshots, that means that each hour a full copy of the information with their newest updates for that corresponding hour is loaded and saved in our storage location. Under is an instance of how our hourly full snapshots are saved within the managed Unity Catalog Volumes.

The beneath desk represents the information saved for example of a full snapshot:

| order_id | value | order_status | order_date | customer_id | product_id |

|---|---|---|---|---|---|

| 1 | 91 | re-ordered | 2023-09-16 13:59:15 | 17127 | 2058 |

| 2 | 24 | delivery | 2023-09-13 15:52:53 | 16334 | 2047 |

| 3 | 13 | delivered | 2023-10-04 01:07:35 | 10706 | 2005 |

| … | … | … | … | … | … |

When creating snapshot information, you could have a major key for every file in your information and a single timestamp or model quantity that applies to all information in every snapshot that enables easy monitoring of the order of adjustments in a sequence of ingested snapshots. On this day by day snapshot instance, order_id serves as the first key. The date related to loading of the snapshots into the storage location is used to call the recordsdata, enabling us to entry the snapshot for that particular date, and we depend on these date-based file names to trace adjustments between consecutive snapshots.

For this instance, we have created a pattern dataset utilizing the fields from the desk talked about earlier. To show an replace operation, we modify the order_status from ‘pending’ to ‘delivery’, ‘delivered’, or ‘cancelled’ for present orders. As an instance inserts, we add new orders with distinctive order_ids. Lastly, to indicate how deletes work, we take away a small, random number of present orders. This method offers a complete instance that features all key operations: INSERT, UPDATE, and DELETE. Yow will discover all of the notebooks used for this weblog, together with the information generator, right here. The processing steps and outcomes are demonstrated within the following sections.

Implementation of a DLT pipeline to course of CDC information from Full Snapshots

So as to leverage “APPLY CHANGES FROM SNAPSHOT”, much like APPLY CHANGES INTO, we should first create the goal streaming desk that will probably be used to seize and retailer the file adjustments over time. The beneath code is an instance of making a goal streaming desk.

import dlt

dlt.create_streaming_table(title="goal",

remark="Clear, merged ultimate desk from the complete snapshots")Now that we’ve got a goal streaming desk, we will look at the APPLY CHANGES FROM SNAPSHOT assertion extra carefully and look at the arguments it must course of the snapshot information successfully. In Method 1, when each present snapshot is periodically changed by a brand new snapshot, the apply_changes_from_snapshot Python perform reads and ingests a brand new snapshot from a supply desk and shops it in a goal desk.

@dlt.view(title="supply")

def supply():

return spark.learn.desk("catalog.schema.desk")

def apply_changes_from_snapshot(

goal="goal",

supply="supply",

keys=["keys"],

stored_as_scd_type,

track_history_column_list = None,

track_history_except_column_list = None)APPLY CHANGES FROM SNAPSHOT requires specifying the “keys” argument. The “keys” argument ought to discuss with the column or mixture of columns that uniquely determine a row within the snapshot information. This can be a distinctive identifier that enables the assertion to determine the row that has modified within the new snapshots. For instance in our on-line purchasing instance, “order_id” is the first key and is the distinctive identifier of orders that bought up to date, deleted, or inserted. Thus, later within the assertion we move order_id to the keys argument.

One other required argument is stored_as_scd_type. The stored_as_scd_type argument permits the customers to specify how they want to retailer information within the goal desk, whether or not as SCD TYPE 1 or SCD Sort 2.

In Method 2, the place snapshots accumulate over time and we have already got an inventory of present snapshots, as an alternative of utilizing the supply argument, we’d like one other argument known as snapshot_and_version that should be specified. The snapshot model should be explicitly offered for every snapshot. This snapshot_and_version argument takes a lambda perform. By passing a lambda perform to this argument, the perform takes the most recent processed snapshot model as an argument.

Lambda perform: lambda Any => Elective[(DataFrame, Any)]

Return: it may both be None or a tuple of two values:

- The primary worth of the returned tuple is the brand new snapshot DataFrame to be processed.

- The second worth of the returned tuple is the snapshot model that represents the logical order of the snapshot.

Every time the apply_changes_from_snapshot pipeline will get triggered, we’ll:

- Execute the snapshot_and_version lambda perform to load the following snapshot DataFrame and the corresponding snapshot model.

- If there are not any DataFrame returns, we’ll terminate the execution and mark the replace as full.

- Detect the adjustments launched by the brand new snapshot and incrementally apply them to the goal.

- Soar again to step one (#1) to load the following snapshot and its model.

Whereas the above-mentioned arguments are the obligatory fields of APPLY CHANGES FROM SNAPSHOT, different non-obligatory arguments, equivalent to track_history_column_list and track_history_except_column_list, give customers extra flexibility to customise the illustration of the goal desk if they should.

Going again to the web purchasing instance and taking a better take a look at how this characteristic works utilizing the synthetically generated information from [table 1]: Beginning with the primary run, when no preliminary snapshots existed, we generate order information to create the primary snapshot desk in case of Method 1, or retailer the generated preliminary snapshot information into the outlined storage location path utilizing managed Unity Catalog quantity in case of Method 2. Whatever the method, the generated information would seem like beneath:

| order_id | value | order_status | order_date | customer_id | product_id |

|---|---|---|---|---|---|

| 1 | 91 | re-ordered | 2023-09-16 13:59:15 | 17127 | 2058 |

| 2 | 24 | returned | 2023-09-13 15:52:53 | 16334 | 2047 |

| 3 | 13 | delivered | 2023-10-04 01:07:35 | 10706 | 2005 |

| 4 | 45 | cancelled | 2023-10-06 10:40:38 | 10245 | 2089 |

| 5 | 41 | delivery | 2023-10-08 14:52:16 | 19435 | 2057 |

| 6 | 38 | delivered | 2023-10-04 14:33:17 | 19798 | 2061 |

| 7 | 27 | pending | 2023-09-15 03:22:52 | 10488 | 2033 |

| 8 | 23 | returned | 2023-09-14 14:50:19 | 10302 | 2051 |

| 9 | 96 | pending | 2023-09-28 22:50:24 | 18909 | 2039 |

| 10 | 79 | cancelled | 2023-09-29 15:06:21 | 14775 | 2017 |

The following time the job triggers, we get the second snapshot of orders information by which new orders with order ids of 11 and 12 have been added, and a few of the present orders in preliminary snapshots (order ids of seven and 9) are getting up to date with the brand new order_status, and the order id 2 which was an outdated returned order is not exists. So the second snapshot would seem like beneath:

| order_id | value | order_status | order_date | customer_id | product_id |

|---|---|---|---|---|---|

| 1 | 91 | re-ordered | 2023-09-16 13:59:15 | 17127 | 2058 |

| 3 | 13 | delivered | 2023-10-04 01:07:35 | 10706 | 2005 |

| 4 | 45 | cancelled | 2023-10-06 10:40:38 | 10245 | 2089 |

| 5 | 41 | delivery | 2023-10-08 14:52:16 | 19435 | 2057 |

| 6 | 38 | delivered | 2023-10-04 14:33:17 | 19798 | 2061 |

| 7 | 27 | delivered | 2023-10-10 23:08:24 | 10488 | 2033 |

| 8 | 23 | returned | 2023-09-14 14:50:19 | 10302 | 2051 |

| 9 | 96 | delivery | 2023-10-10 23:08:24 | 18909 | 2039 |

| 10 | 79 | cancelled | 2023-09-29 15:06:21 | 14775 | 2017 |

| 11 | 91 | returned | 2023-10-10 23:24:01 | 18175 | 2089 |

| 12 | 24 | returned | 2023-10-10 23:39:13 | 13573 | 2068 |

Within the case of Method 1, the snapshot desk of “orders_snapshot” is now being overwritten by the newest snapshot information. To course of the snapshot information we first create a goal streaming desk of “orders”.

import dlt

from datetime import datetime

import datetime

database_name = spark.conf.get("snapshot_source_database")

desk = "orders_snapshot"

table_name = f"{database_name}.{desk}"

snapshot_source_table_name = f"{database_name}.orders_snapshot"

@dlt.view(title="supply")

def supply():

return spark.learn.desk(snapshot_source_table_name)

dlt.create_streaming_table(

title = "orders"

)Then we use the apply_changes_from_snapshot as beneath to use the most recent adjustments on each order_id from the newest snapshot information into the goal desk. On this instance, as a result of we need to course of the brand new snapshot, we learn the brand new snapshot from the snapshot information supply and retailer the processed snapshot information within the goal desk.

dlt.apply_changes_from_snapshot(

goal = "orders",

supply = "supply",

keys = ["order_id"],

stored_as_scd_type = 1

)Much like Method 1, to course of the snapshots information for Method 2 we first have to create a goal streaming desk. We name this goal desk “orders”.

import dlt

from datetime import timedelta

from datetime import datetime

dlt.create_streaming_table(title="orders",

remark= "Clear, merged ultimate desk from the complete snapshots",

table_properties={

"high quality": "gold"

}

)For Method 2, each time the job is triggered and new snapshot information is generated, the information is saved in the identical outlined storage path the place the preliminary snapshot information was saved. So as to consider if this path exists and to search out the preliminary snapshot information, we listing the contents of the outlined path, then we convert the datetime strings extracted from the paths into datetime objects, and compile an inventory of those datetime objects. After we’ve got the entire listing of datetime objects, by discovering the earliest datetime on this listing we determine the preliminary snapshot saved within the root path listing.

snapshot_root_path = spark.conf.get("snapshot_path")

def exist(path):

attempt:

if dbutils.fs.ls(path) is None:

return False

else:

return True

besides:

return False

# Checklist all objects within the bucket utilizing dbutils.fs

object_paths = dbutils.fs.ls(snapshot_root_path)

datetimes = []

for path in object_paths:

# Parse the datetime string to a datetime object

datetime_obj = datetime.strptime(path.title.strip('/"'), '%Y-%m-%d %H')

datetimes.append(datetime_obj)

# Discover the earliest datetime

earliest_datetime = min(datetimes)

# Convert the earliest datetime again to a string if wanted

earliest_datetime_str = earliest_datetime.strftime('"%Y-%m-%d %H"')

print(f"The earliest datetime within the bucket is: {earliest_datetime_str}")As talked about earlier in Method 2, each time the apply_changes_from_snapshot pipeline will get triggered, the lambda perform must determine the following snapshot that must be loaded and the corresponding snapshot model or timestamp to detect the adjustments from the earlier snapshot.

As a result of we’re utilizing hourly snapshots and the job triggers each hour, we will use increments of 1 hour together with the extracted datetime of the preliminary snapshot to search out the following snapshot path, and the datetime related to this path.

def next_snapshot_and_version(latest_snapshot_datetime):

latest_datetime_str = latest_snapshot_datetime or earliest_datetime_str

if latest_snapshot_datetime is None:

snapshot_path = f"{snapshot_root_path}/{earliest_datetime_str}"

print(f"Studying earliest snapshot from {snapshot_path}")

earliest_snapshot = spark.learn.format("parquet").load(snapshot_path)

return earliest_snapshot, earliest_datetime_str

else:

latest_datetime = datetime.strptime(latest_datetime_str, '%Y-%m-%d %H')

# Calculate the following datetime

increment = timedelta(hours=1) # Increment by 1 hour as a result of we're

offered hourly snapshots

next_datetime = latest_datetime + increment

print(f"The following snapshot model is : {next_datetime}")

# Convert the next_datetime to a string with the specified format

next_snapshot_datetime_str = next_datetime.strftime('%Y-%m-%d %H')

snapshot_path = f"{snapshot_root_path}/{next_snapshot_datetime_str}"

print("Trying to learn subsequent snapshot from " + snapshot_path)

if (exist(snapshot_path)):

snapshot = spark.learn.format("parquet").load(snapshot_path)

return snapshot, next_snapshot_datetime_str

else:

print(f"Could not discover snapshot information at {snapshot_path}")

return NoneAs soon as we outline this lambda perform and might determine adjustments in information incrementally, we will use the apply_changes_from_snapshot assertion to course of the snapshots and incrementally apply them to the created goal desk of “orders”.

dlt.apply_changes_from_snapshot(

goal="orders",

snapshot_and_version=next_snapshot_and_version,

keys=["order_id"],

stored_as_scd_type=2,

track_history_column_list=["order_status"]

)Whatever the method, as soon as the code is prepared, to make use of the apply_changes_from_snapshot assertion, a DLT pipeline utilizing the Professional or Superior product version should be created.

Develop Workflows with Delta Dwell Tables Pipeline as Duties Utilizing Databricks Asset Bundles (DABs)

To simplify the event and deployment of our pattern workflow, we used Databricks Asset Bundles (DABs). Nevertheless, the APPLY CHANGES performance doesn’t mandate using DABs, however it’s thought-about a finest apply to automate the event and deployment of Databricks Workflows and DLT pipelines.

For each widespread approaches we’re protecting on this weblog we leveraged from DABs in this repo. Thus within the repo there are supply recordsdata known as databricks.yml which function an end-to-end undertaking definition. These supply recordsdata embrace all of the parameters and details about how DLT pipelines as duties inside workflows may be examined and deployed. On condition that DLT pipelines present you two storage choices of Hive Metastore and Unity Catalog, within the databricks.yml file we thought-about each storage choices for implementations of each Method 1 and Method 2 jobs. The goal “growth” in databricks.yml file refers back to the implementation of each approaches utilizing Hive Metastore and in DBFS location, whereas goal known as “development-uc” within the databricks.yml file refers back to the implementation of each approaches utilizing Unity Catalog and storing information in managed UC Volumes. Following the README.md file within the repo it is possible for you to to deploy each approaches in both storage choice of your alternative solely by utilizing a couple of bundle instructions.

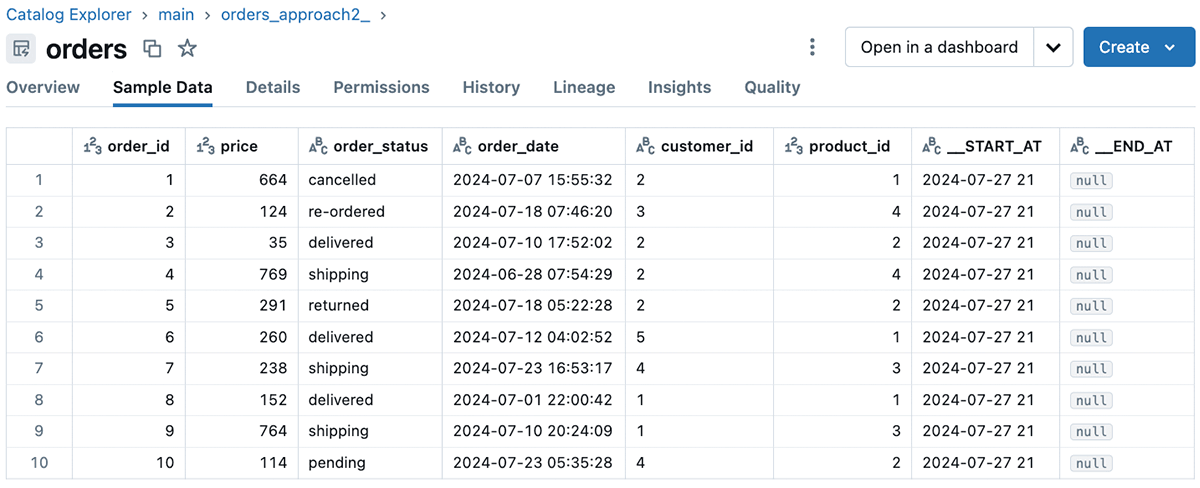

Within the instance we lined Method 2 we used SCD Sort 2 goal desk via passing 2 to the stored_as_scd_type argument to retailer all of the historic and present values of the order ids within the goal desk. Navigating to the goal desk via Catalog Explorer, we will see the columns of the goal desk, pattern information, particulars, and extra insightful fields related to the goal desk. For SCD Sort 2 adjustments, Delta Dwell Tables propagates the suitable sequencing values to the __START_AT and __END_AT columns of the goal desk. See beneath for an instance of pattern information from the goal desk in Catalog Explorer when utilizing Unity Catalog. The catalog “major” within the picture beneath is the default catalog within the Unity Catalog metastore, which we’re counting on on this instance for simplicity.

Getting Began

Constructing a scalable, dependable incremental information pipeline based mostly on snapshots has by no means been simpler. Strive Databricks without spending a dime to run this instance.

[ad_2]