
Introduction

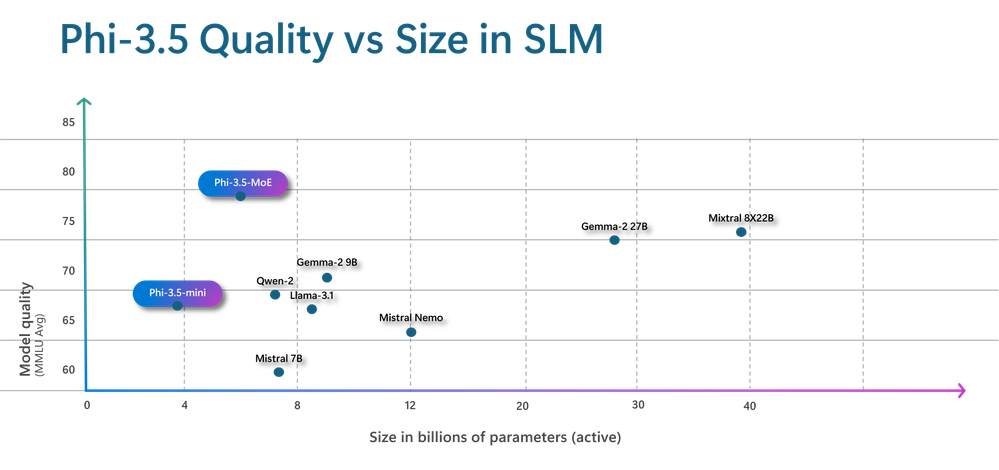

The latest model collection from Microsoft's Small Language Models (SLMs) family is called Phi-3. These models are designed to be extremely capable and cost-effective, and they outperform models of the same size and larger across a variety of benchmarks in language, reasoning, coding, and math. With Phi-3 models available, Azure customers have access to a wider range of excellent models, giving them more practical options for building generative AI applications. Since the April 2024 launch, Azure has gathered a wealth of insightful feedback from users and community members about areas where the Phi-3 SLMs could improve.

Microsoft is now pleased to introduce the Phi-3.5 SLMs as the newest members of the Phi family: Phi-3.5-mini, Phi-3.5-vision, and Phi-3.5-MoE, a Mixture-of-Experts (MoE) model. Phi-3.5-mini offers a 128K context length along with improved multilingual support. Phi-3.5-vision improves the comprehension and reasoning of multi-frame images while also improving performance on single-image benchmarks. Phi-3.5-MoE, with its 16 experts and 6.6B active parameters, outperforms larger models while retaining the efficiency of Phi models, and adds low latency, multilingual support, and robust safety features.

Phi-3.5-MoE: Mixture-of-Experts

Phi-3.5-MoE is the largest and newest model among the latest Phi-3.5 SLM releases. It comprises 16 experts, each containing 3.8B parameters. With a total model size of 42B parameters, it activates 6.6B parameters by using two experts per token. This MoE model delivers better quality and performance than a dense model of comparable size. More than 20 languages are supported. Like its Phi-3 counterparts, the MoE model uses a robust safety post-training approach built on a mixture of proprietary and open-source synthetic instruction and preference datasets. Using synthetic and human-labeled datasets, the post-training process combines Direct Preference Optimization (DPO) with Supervised Fine-Tuning (SFT). These datasets cover multiple safety categories and emphasize harmlessness and helpfulness. Furthermore, Phi-3.5-MoE supports a context length of up to 128K, which makes it capable of handling a variety of long-context workloads.
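To make the "42B total, 6.6B active" figures more concrete, here is a toy sketch of top-2 expert routing in plain Python. It is not Phi-3.5-MoE's implementation: the gate scores, the scalar "experts", and the helper names (`top2_route`, `moe_layer`, `param_split`) are hypothetical. The `param_split` helper also solves for a rough parameter split, assuming that the only difference between total and active parameters is how many expert feed-forward blocks are used (16 vs. 2), with the attention and embedding weights shared by all experts. Under that assumption, the total is 42B rather than 16 × 3.8B.

```python
import math
from typing import Callable, List, Tuple


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top2_route(gate_logits: List[float]) -> List[Tuple[int, float]]:
    """Pick the two highest-scoring experts and renormalize their weights.

    Hypothetical helper: a real MoE router computes gate_logits from the
    token's hidden state with a learned linear layer.
    """
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    chosen = ranked[:2]
    weights = softmax([gate_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_layer(x: float, gate_logits: List[float],
              experts: List[Callable[[float], float]]) -> float:
    """Weighted sum of the two selected experts; the other experts never run."""
    return sum(w * experts[i](x) for i, w in top2_route(gate_logits))


def param_split(total_b: float, active_b: float,
                n_experts: int, k_active: int) -> Tuple[float, float]:
    """Solve total = shared + n*expert and active = shared + k*expert (billions)."""
    expert_b = (total_b - active_b) / (n_experts - k_active)
    shared_b = active_b - k_active * expert_b
    return shared_b, expert_b


if __name__ == "__main__":
    # 16 toy experts; expert i simply scales its input by (i + 1).
    experts = [lambda x, s=i + 1: s * x for i in range(16)]
    logits = [0.0] * 16
    logits[3], logits[7] = 2.0, 1.0  # experts 3 and 7 receive the highest scores
    print(top2_route(logits))
    print(moe_layer(1.0, logits, experts))

    shared, per_expert = param_split(42.0, 6.6, n_experts=16, k_active=2)
    print(f"shared ~ {shared:.2f}B, per-expert FFN ~ {per_expert:.2f}B")
```

With these published figures, the assumed split works out to roughly 2.5B parameters per expert feed-forward block and roughly 1.5B shared parameters. Treat this as back-of-the-envelope arithmetic, not an official breakdown.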


Training Data of Phi-3.5-MoE

The training data of Phi-3.5-MoE comes from a wide variety of sources, totaling 4.9 trillion tokens (including 10% multilingual), and is a combination of:

- Publicly available documents rigorously filtered for quality, selected high-quality educational data, and code;

- Newly created synthetic, "textbook-like" data for teaching math, coding, common sense reasoning, and general knowledge of the world (science, daily activities, theory of mind, etc.);

- High-quality chat-format supervised data covering various topics to reflect human preferences, such as instruction-following, truthfulness, honesty, and helpfulness.

Azure focuses on the quality of data that could potentially improve the model's reasoning ability, and it filters the publicly available documents to contain the right level of knowledge. For example, the result of a Premier League game on a particular day might be good training data for frontier models, but such information needs to be removed to leave more model capacity for reasoning in small models. More details about the data can be found in the Phi-3 Technical Report.

Phi-3.5-MoE training took 23 days and used 4.9T tokens of training data. The supported languages are Arabic, Chinese, Czech, Danish, Dutch, English, Finnish, French, German, Hebrew, Hungarian, Italian, Japanese, Korean, Norwegian, Polish, Portuguese, Russian, Spanish, Swedish, Thai, Turkish, and Ukrainian.

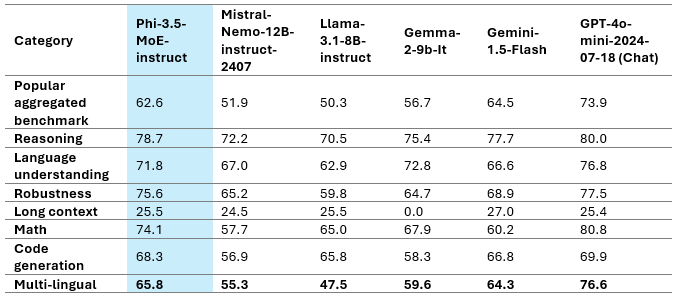

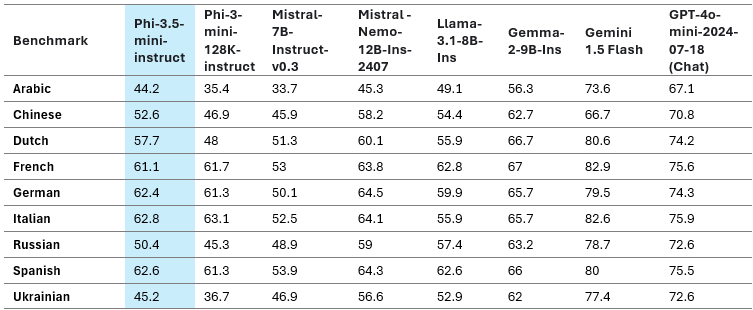

The table above shows Phi-3.5-MoE model quality across various capabilities. Phi-3.5-MoE performs better than some larger models in several categories. With only 6.6B active parameters, it achieves a level of language understanding and math comparable to much larger models, and it outperforms bigger models in reasoning. The model also has good capacity for fine-tuning on various tasks.

The multilingual MMLU, MEGA, and multilingual MMLU-pro datasets are used in the table above to demonstrate Phi-3.5-MoE's multilingual ability. We found that, even with only 6.6B active parameters, the model outperforms competing models with considerably more active parameters on multilingual tasks.

Phi-3.5-mini

The Phi-3.5-mini model underwent additional pre-training using multilingual synthetic and high-quality filtered data. Post-training procedures such as Direct Preference Optimization (DPO), Proximal Policy Optimization (PPO), and Supervised Fine-Tuning (SFT) were then carried out, using synthetic, translated, and human-labeled datasets.
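DPO trains the model directly on pairs of preferred and rejected responses, without a separate reward model. As a rough illustration (not Microsoft's training code), the standard per-pair DPO loss can be computed from four sequence log-probabilities: the preferred and rejected responses, each scored by the model being trained and by a frozen reference model (typically the SFT model). The function names and the β value of 0.1 below are illustrative assumptions.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """Per-pair DPO loss: -log sigmoid(beta * (chosen margin - rejected margin)).

    Each argument is the summed log-probability of a whole response under
    either the policy being trained or the frozen reference model.
    """
    chosen_margin = policy_chosen - ref_chosen        # policy vs. reference on the preferred answer
    rejected_margin = policy_rejected - ref_rejected  # policy vs. reference on the rejected answer
    return -log_sigmoid(beta * (chosen_margin - rejected_margin))


if __name__ == "__main__":
    # Policy identical to the reference: the loss equals log(2).
    print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
    # Policy shifted toward the preferred answer: the loss drops below log(2).
    print(dpo_loss(-10.0, -17.0, -12.0, -15.0))
```

Minimizing this loss pushes the model to raise the likelihood of preferred responses relative to the reference model, while β limits how far it drifts from that reference.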

Training Data of Phi-3.5-mini

The training data of Phi-3.5-mini comes from a wide variety of sources, totaling 3.4 trillion tokens, and is a combination of:

- Publicly available documents rigorously filtered for quality, selected high-quality educational data, and code;

- Newly created synthetic, "textbook-like" data for teaching math, coding, common sense reasoning, and general knowledge of the world (science, daily activities, theory of mind, etc.);

- High-quality chat-format supervised data covering various topics to reflect human preferences, such as instruction-following, truthfulness, honesty, and helpfulness.

Model Quality

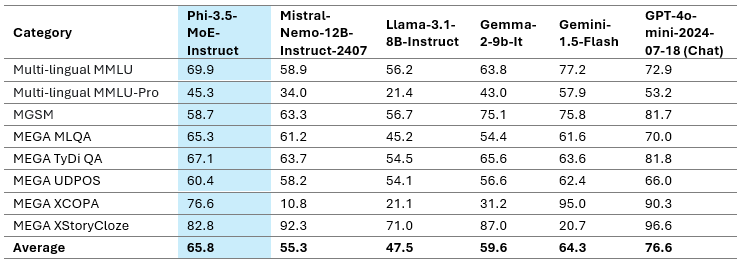

The table above gives a quick overview of the model's quality on key benchmarks. Despite its compact size of only 3.8B parameters, this efficient model matches or even outperforms larger models.


Multilingual Capability

Phi-3.5-mini is the latest update to the 3.8B model. By incorporating additional continual pre-training and post-training data, it significantly improves multilingual ability, multi-turn conversation quality, and reasoning capability.

Multilingual support is a major advance of Phi-3.5-mini over Phi-3-mini. Arabic, Dutch, Finnish, Polish, Thai, and Ukrainian benefited most from the new model, with performance improvements of 25–50%. Viewed in a broader context, Phi-3.5-mini shows the best performance of any sub-8B model in several languages, including English. Note that the model has been optimized for higher-resource languages and uses a 32K vocabulary, so it is not advised for lower-resource languages without additional fine-tuning.

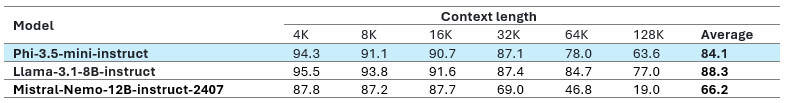

Long Context

With support for a 128K context length, Phi-3.5-mini is an excellent choice for applications like information retrieval, long-document question answering, and summarizing lengthy documents or meeting transcripts. It performs better than the Gemma-2 family, which can only handle an 8K context length. Moreover, Phi-3.5-mini is competitive with significantly larger open-weight models such as Mistral-7B-instruct-v0.3, Llama-3.1-8B-instruct, and Mistral-Nemo-12B-instruct-2407. Phi-3.5-mini-instruct is the only model in this category with just 3.8B parameters, a 128K context length, and multilingual support. It is important to note that Azure chose to support more languages while keeping English performance consistent across various tasks. Because of the model's limited capacity, its English knowledge may be stronger than its knowledge in other languages. Azure suggests using the model in a RAG setup for tasks requiring a high level of multilingual understanding.
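Since the recommendation is to pair the model with retrieval for multilingual tasks, here is a deliberately simple sketch of the retrieval half of a RAG setup. It scores passages by word overlap with the question, keeps the best ones, and packs them into the same system/user `messages` format used in the Hugging Face example later in this article. The scoring method and helper names are hypothetical stand-ins; a real system would typically use a multilingual embedding model and a vector index instead of word counts.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    """Lowercase word tokens (Unicode-aware, so non-Latin scripts also work)."""
    return re.findall(r"\w+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, passages: List[str], k: int = 2) -> List[str]:
    """Return the k passages most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(passages, key=lambda p: cosine(q, Counter(tokenize(p))), reverse=True)
    return ranked[:k]


def build_messages(question: str, passages: List[str], k: int = 2) -> List[Dict[str, str]]:
    """Pack the retrieved passages into a system/user chat for the model."""
    context = "\n\n".join(retrieve(question, passages, k))
    return [
        {"role": "system", "content": "Answer using only the provided context."},
        {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
    ]


if __name__ == "__main__":
    docs = [
        "Phi-3.5-mini supports a 128K token context length.",
        "The Premier League season starts in August.",
        "Phi-3.5-MoE activates two of its 16 experts per token.",
    ]
    for m in build_messages("What context length does Phi-3.5-mini support?", docs, k=1):
        print(m["role"], "->", m["content"])
```

The resulting list can be passed to a text-generation pipeline in place of a hand-written `messages` list.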


Phi-3.5-vision with Multi-frame Input

Training Data of Phi-3.5-vision

Azure's training data comes from a wide variety of sources and is a combination of:

- Publicly available documents rigorously filtered for quality, selected high-quality educational data, and code;

- Selected high-quality interleaved image-text data;

- Newly created synthetic, "textbook-like" data for teaching math, coding, common sense reasoning, and general knowledge of the world (science, daily activities, theory of mind, etc.); newly created image data, e.g., charts, tables, diagrams, and slides; and newly created multi-image and video data, e.g., short video clips and pairs of two similar images;

- High-quality chat-format supervised data covering various topics to reflect human preferences, such as instruction-following, truthfulness, honesty, and helpfulness.

The data collection process involved sourcing information from publicly available documents and meticulously filtering out undesirable documents and images. To safeguard privacy, we carefully filtered various image and text data sources to remove or scrub any potentially personal data from the training data.

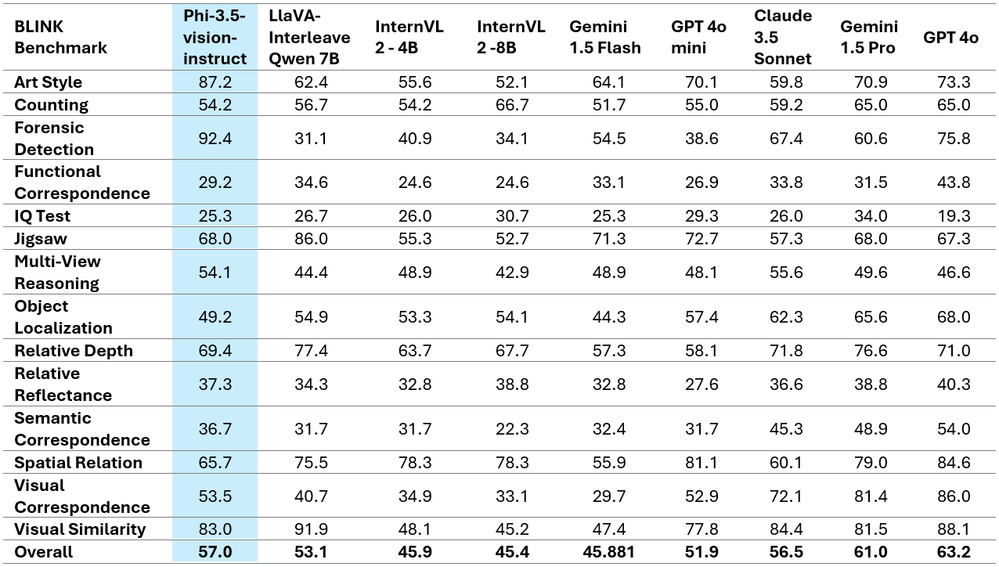

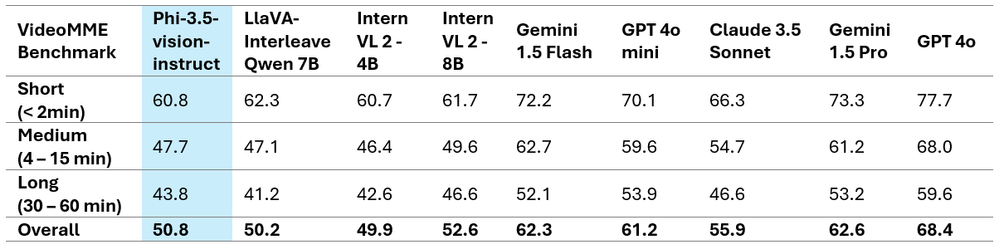

Thanks to valuable user feedback, Phi-3.5-vision delivers state-of-the-art multi-frame image understanding and reasoning capabilities. This enables detailed image comparison, multi-image summarization and storytelling, and video summarization, with a wide range of applications across many contexts.

Notably, Phi-3.5-vision has also shown performance gains across several single-image benchmarks. For instance, it improved MMBench performance from 80.5 to 81.9 and MMMU performance from 40.4 to 43.0. In addition, its score on TextVQA, a benchmark for reading and understanding text in images, increased from 70.9 to 72.0.

The tables above show the improved performance metrics and present comprehensive comparative results on two well-known multi-image/video benchmarks. It is important to note that Phi-3.5-vision does not support multilingual use cases; without additional fine-tuning, it is not recommended for multilingual scenarios.

Trying out Phi-3.5-mini

Using Hugging Face

We will use a Kaggle notebook to run Phi-3.5-mini, since it accommodates the Phi-3.5-mini model better than Google Colab. Note: Make sure to set the accelerator to GPU T4 x2.

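Step 1: Importing the necessary libraries

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

# Fix the random seed so runs are reproducible
torch.random.manual_seed(0)
```

Step 2: Loading the Model and Tokenizer

```python
# Load the instruction-tuned Phi-3.5-mini weights
model = AutoModelForCausalLM.from_pretrained(
    "microsoft/Phi-3.5-mini-instruct",
    device_map="cuda",       # place the model on the GPU
    torch_dtype="auto",      # use the dtype specified by the checkpoint
    trust_remote_code=True,  # allow custom model code from the repository
)

tokenizer = AutoTokenizer.from_pretrained("microsoft/Phi-3.5-mini-instruct")
```

Step 3: Preparing messages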

```python
messages = [
    {"role": "system", "content": "You are a helpful AI assistant."},
    {"role": "user", "content": "Tell me about microsoft"},
]
```

- "role": "system": Sets the behavior of the AI model (in this case, as a "helpful AI assistant").

- "role": "user": Represents the user's input.
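When these messages reach the text-generation pipeline created in the next step, the tokenizer's chat template (`tokenizer.apply_chat_template`) turns them into a single prompt string. To show what that string looks like, the Phi-3.5-mini-instruct model card documents a layout with `<|system|>`, `<|user|>`, `<|assistant|>`, and `<|end|>` markers. The hypothetical `format_phi3_chat` function below reproduces that layout by hand, assuming the shipped template matches the model card. Special tokens such as a beginning-of-sequence token may be added separately by the tokenizer. For real use, prefer `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)`, which reads the template that ships with the model.

```python
from typing import Dict, List


def format_phi3_chat(messages: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Hand-rolled approximation of the Phi-3.5 chat layout (an assumption, see above)."""
    parts = []
    for msg in messages:
        # Each turn: <|role|>, newline, content, <|end|>, newline
        parts.append(f"<|{msg['role']}|>\n{msg['content']}<|end|>\n")
    if add_generation_prompt:
        # Open an assistant turn so the model writes the reply next
        parts.append("<|assistant|>\n")
    return "".join(parts)


messages = [
    {"role": "system", "content": "You are a helpful AI assistant."},
    {"role": "user", "content": "Tell me about microsoft"},
]
print(format_phi3_chat(messages))
```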

Step 4: Creating the Pipeline

```python
# Wrap the model and tokenizer in a text-generation pipeline
pipe = pipeline(
    "text-generation",
    model=model,
    tokenizer=tokenizer,
)
```

This creates a text-generation pipeline using the specified model and tokenizer. The pipeline hides the details of tokenization, model execution, and decoding, providing a simple interface for generating text.

Step 5: Setting Generation Arguments

```python
generation_args = {
    "max_new_tokens": 500,
    "return_full_text": False,
    "temperature": 0.0,
    "do_sample": False,
}
```

These arguments control how the model generates text.

- max_new_tokens=500: The maximum number of new tokens to generate.

- return_full_text=False: Only the generated text (not the input) will be returned.

- temperature=0.0: Temperature controls randomness by rescaling the next-token probabilities, but it only applies when sampling is enabled. Because do_sample=False, this value has no effect here.

- do_sample=False: Disables sampling, so the model always chooses the most probable next token (greedy decoding). This is what makes the output deterministic.
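To see why do_sample=False gives the same output on every run, here is a toy next-token step in plain Python over a made-up four-token vocabulary. The logits are invented for illustration. Greedy decoding always takes the arg-max, while sampling draws from a temperature-scaled softmax, where lower temperatures concentrate probability on the top token. This sketch mirrors the idea behind the arguments above, not the exact code path inside `transformers`.

```python
import math
import random
from typing import Dict


def softmax_with_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Convert logits to probabilities; temperature must be > 0 for sampling."""
    if temperature <= 0:
        raise ValueError("temperature must be positive when sampling")
    scaled = {tok: v / temperature for tok, v in logits.items()}
    m = max(scaled.values())
    exps = {tok: math.exp(v - m) for tok, v in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def greedy_pick(logits: Dict[str, float]) -> str:
    """do_sample=False: always choose the highest-scoring token."""
    return max(logits, key=logits.get)


def sample_pick(logits: Dict[str, float], temperature: float, rng: random.Random) -> str:
    """do_sample=True: draw one token from the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


if __name__ == "__main__":
    logits = {"Microsoft": 3.0, "It": 2.0, "The": 1.5, "Apple": 0.1}  # invented values
    print(greedy_pick(logits))  # "Microsoft" on every run
    rng = random.Random(0)
    print([sample_pick(logits, 1.0, rng) for _ in range(5)])  # depends on the seed
    # Lower temperature puts more probability on the top token:
    print(softmax_with_temperature(logits, 0.5)["Microsoft"] > softmax_with_temperature(logits, 1.0)["Microsoft"])
```

Rejecting a temperature of 0 in the sampling helper reflects the fact that dividing logits by zero is undefined; greedy decoding is the well-defined limit of very low temperatures.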

Step 6: Generating Text

```python
# Run generation on the chat messages and print only the model's reply
output = pipe(messages, **generation_args)
print(output[0]['generated_text'])
```

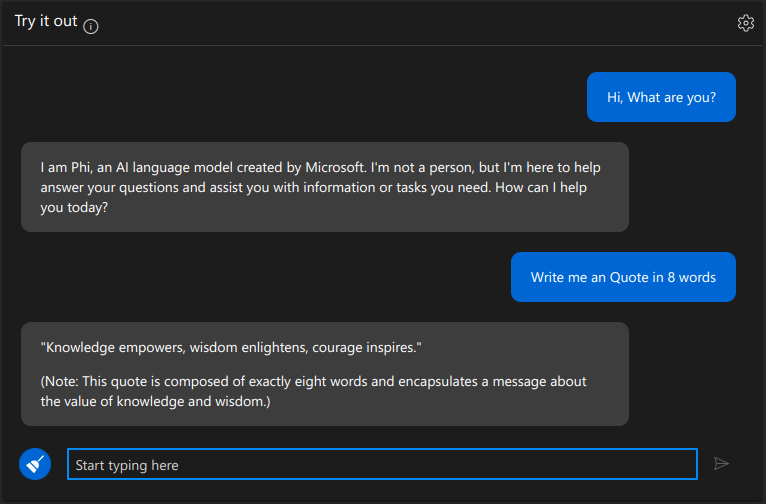

Using Azure AI Studio

We can try Phi-3.5-mini-instruct through the Azure AI Studio interface, which has a section called "Try it out". Below is a snapshot of using Phi-3.5-mini.

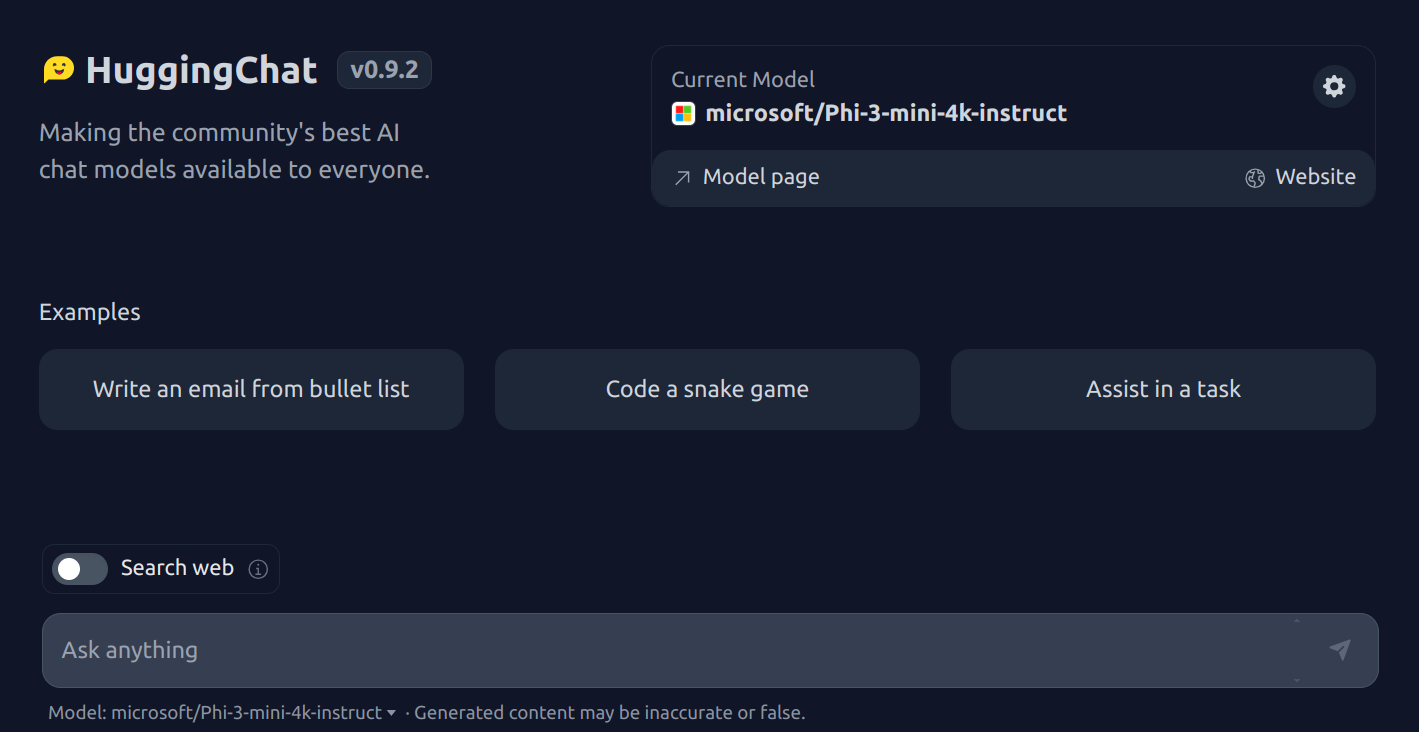

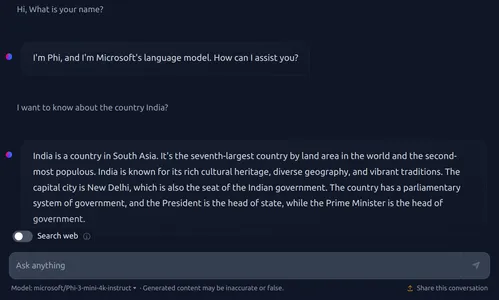

Utilizing HuggingChat from Hugging Face

Right here is the HuggingChat Hyperlink.

Attempting Phi 3.5 Imaginative and prescient

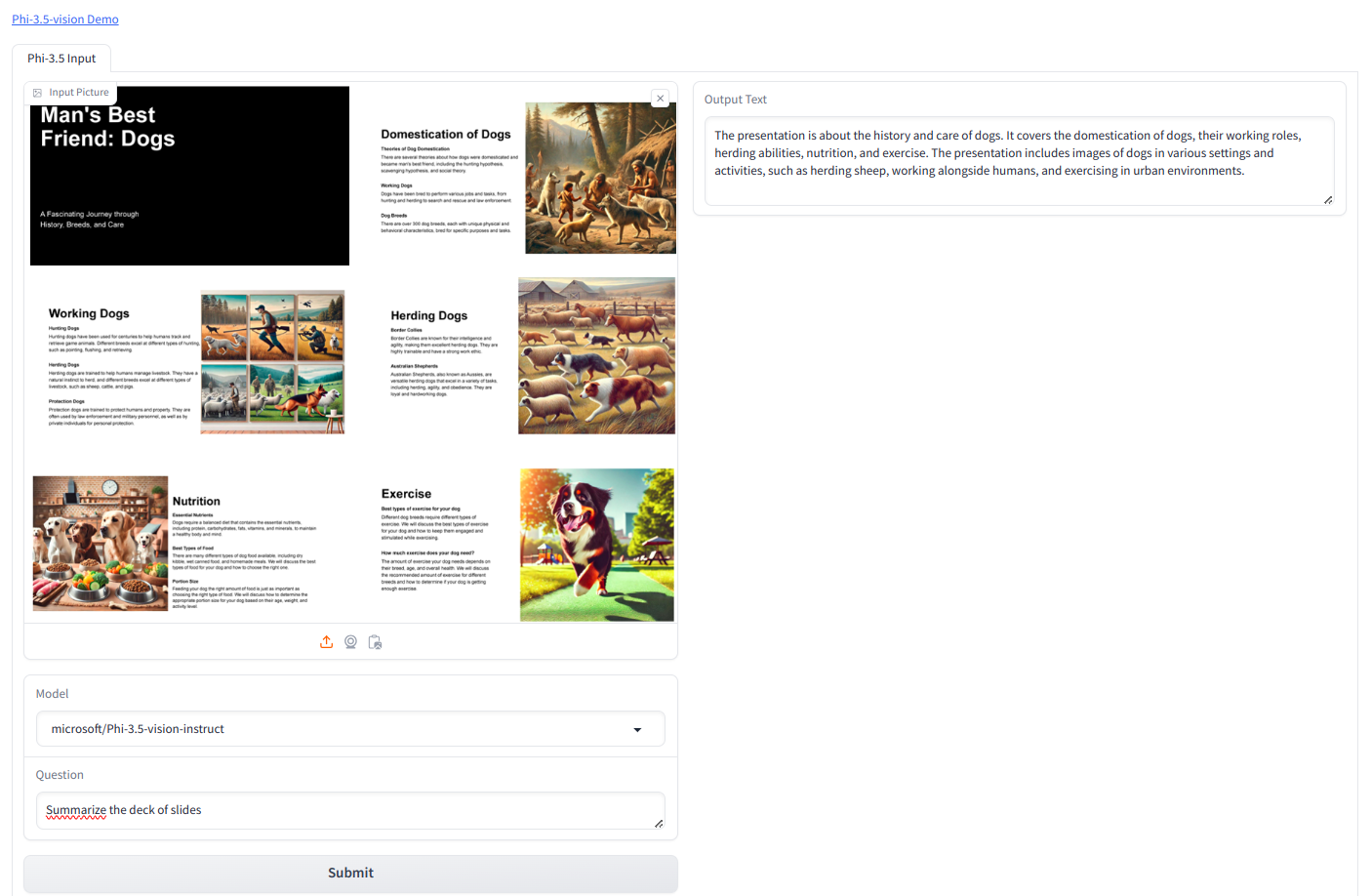

Utilizing Areas from Hugging Face

Since Phi 3.5 Imaginative and prescient is a GPU-intensive mannequin, we can’t use the mannequin with a free tier of colab and kaggle. Therefore, I’ve used hugging face areas to strive Phi 3.5 Imaginative and prescient.

We might be utilizing the under picture.

Immediate we used is “Summarize the deck of slides”
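If you run Phi-3.5-vision yourself instead of through a Space, the Phi-3.5-vision-instruct model card's multi-image example marks where each image goes with numbered placeholders (`<|image_1|>`, `<|image_2|>`, ...) at the start of the user message, followed by the instruction. The images themselves are passed to the model's processor separately. The hypothetical helper below builds only that text part for a deck of N slides, assuming this placeholder convention. Loading the processor and model is omitted because it requires a GPU.

```python
from typing import Dict, List


def build_slide_prompt(num_slides: int,
                       instruction: str = "Summarize the deck of slides.") -> List[Dict[str, str]]:
    """Build one user message with a numbered image placeholder per slide (assumed format)."""
    if num_slides < 1:
        raise ValueError("need at least one slide image")
    placeholders = "".join(f"<|image_{i}|>\n" for i in range(1, num_slides + 1))
    return [{"role": "user", "content": placeholders + instruction}]


messages = build_slide_prompt(3)
print(messages[0]["content"])
```

In the model card's example, messages like these are then rendered with `processor.tokenizer.apply_chat_template(..., tokenize=False, add_generation_prompt=True)` and passed to the processor together with the list of slide images.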

Output

The presentation is about the history and care of dogs. It covers the domestication of dogs, their working roles, herding abilities, nutrition, and exercise. The presentation includes images of dogs in various settings and activities, such as herding sheep, working alongside humans, and exercising in urban environments.

Conclusion

Phi-3.5-mini is a unique LLM with 3.8B parameters, a 128K context length, and multilingual support. It balances broad language support with English knowledge density and is best used in a Retrieval-Augmented Generation (RAG) setup for multilingual tasks. Phi-3.5-MoE has 16 small experts and 6.6B active parameters; it delivers high-quality performance with low latency, supports a 128K context length and multiple languages, and can be customized for various applications. Phi-3.5-vision adds multi-frame image understanding and improves single-image benchmark performance. The Phi-3.5 SLM family offers cost-effective, high-capability options for the open-source community and Azure customers.


Frequently Asked Questions

Ans. Phi-3.5 models are the latest in Microsoft's Small Language Models (SLMs) family, designed for high performance and efficiency in language, reasoning, coding, and math tasks.

Ans. Phi-3.5-MoE is a Mixture-of-Experts model with 16 experts. It supports more than 20 languages and a 128K context length, and it is designed to outperform larger models in reasoning and multilingual tasks.

Ans. Phi-3.5-mini is a compact model with 3.8B parameters, a 128K context length, and improved multilingual support. It excels in English and several other languages.

Ans. You can try Phi-3.5 SLMs on platforms like Hugging Face and Azure AI Studio, where they are available for various AI applications.
