
Image by Author

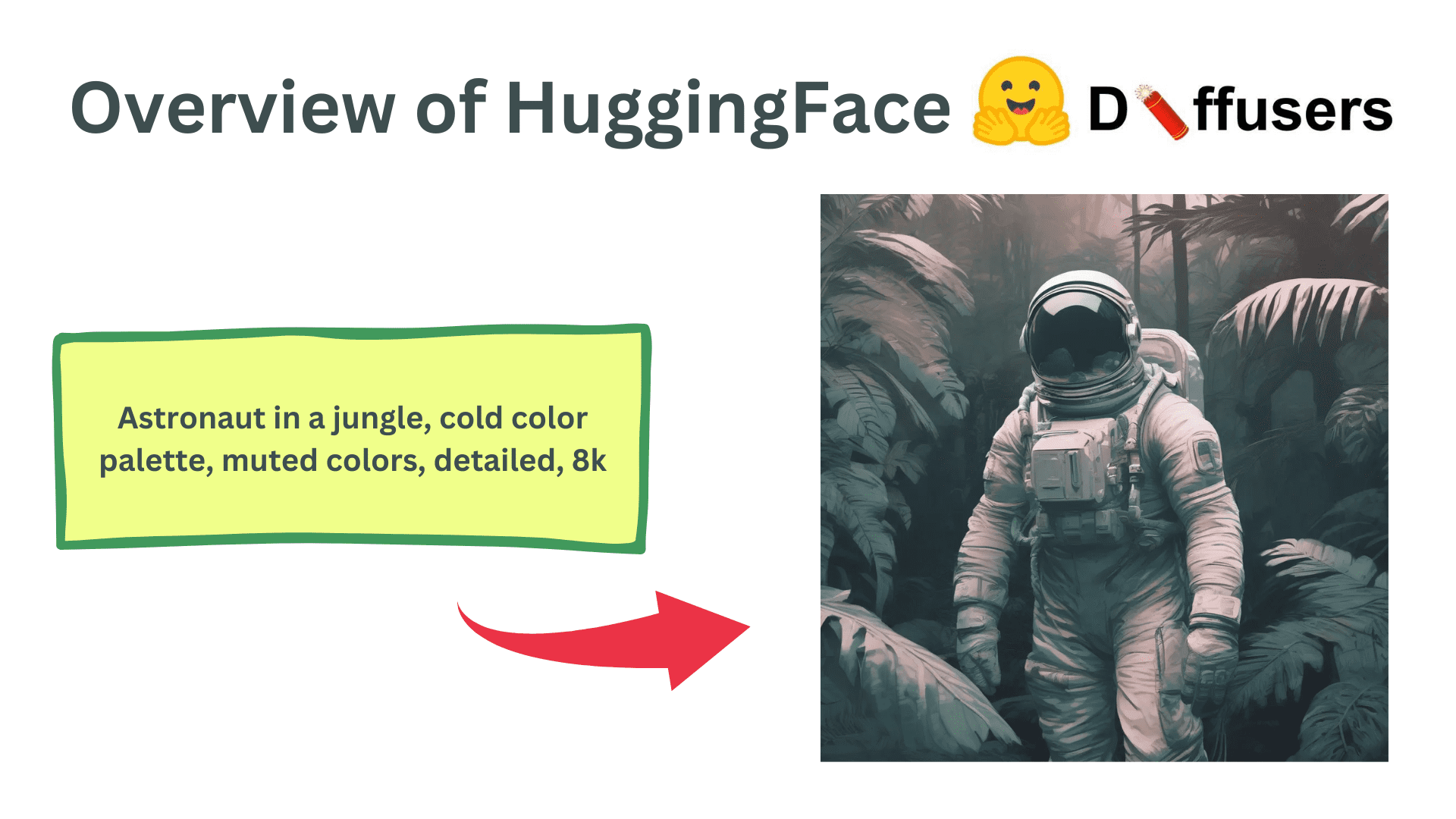

Diffusers is a Python library developed and maintained by HuggingFace. It simplifies the development and inference of diffusion models for generating images from user-defined prompts. The code is openly available on GitHub, with 22.4k stars on the repository. HuggingFace also maintains a wide variety of Stable Diffusion and other diffusion models that can be used easily with the library.

Installation and Setup

It is good to start with a fresh Python environment to avoid clashes between library versions and dependencies.

To set up a fresh Python environment, run the following commands:

python3 -m venv venv

source venv/bin/activate

Installing the Diffusers library is straightforward. It is provided as an official pip package and uses the PyTorch library internally. In addition, many diffusion models are based on the Transformers architecture, so loading a model requires the transformers pip package as well.

pip install 'diffusers[torch]' transformers
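After installation, it can be useful to confirm which versions ended up in the environment. The following is a minimal sketch that uses only the Python standard library; the helper name `installed_versions` is an illustrative choice and not part of Diffusers.

```python
from importlib import metadata


def installed_versions(packages):
    """Map each package name to its installed version, or None if it is missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    # Packages installed by the pip command in this section.
    for name, version in installed_versions(["diffusers", "transformers", "torch"]).items():
        print(f"{name}: {version or 'not installed'}")
```

If any package is reported as not installed, the virtual environment is probably not activated in the current shell.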

Using Diffusers for AI-Generated Images

The Diffusers library makes it extremely easy to generate images from a prompt using Stable Diffusion models. Here, we will go through a simple piece of code line by line to see the different parts of the Diffusers library.

Imports

import torch

from diffusers import AutoPipelineForText2Image

The torch package is required for the general setup and configuration of the Diffusers pipeline. AutoPipelineForText2Image is a class that automatically identifies the model being loaded, for example StableDiffusion1-5, StableDiffusion2.1, or SDXL, and loads the appropriate classes and modules internally. This saves us the hassle of changing the pipeline every time we want to load a new model.

Loading the Models

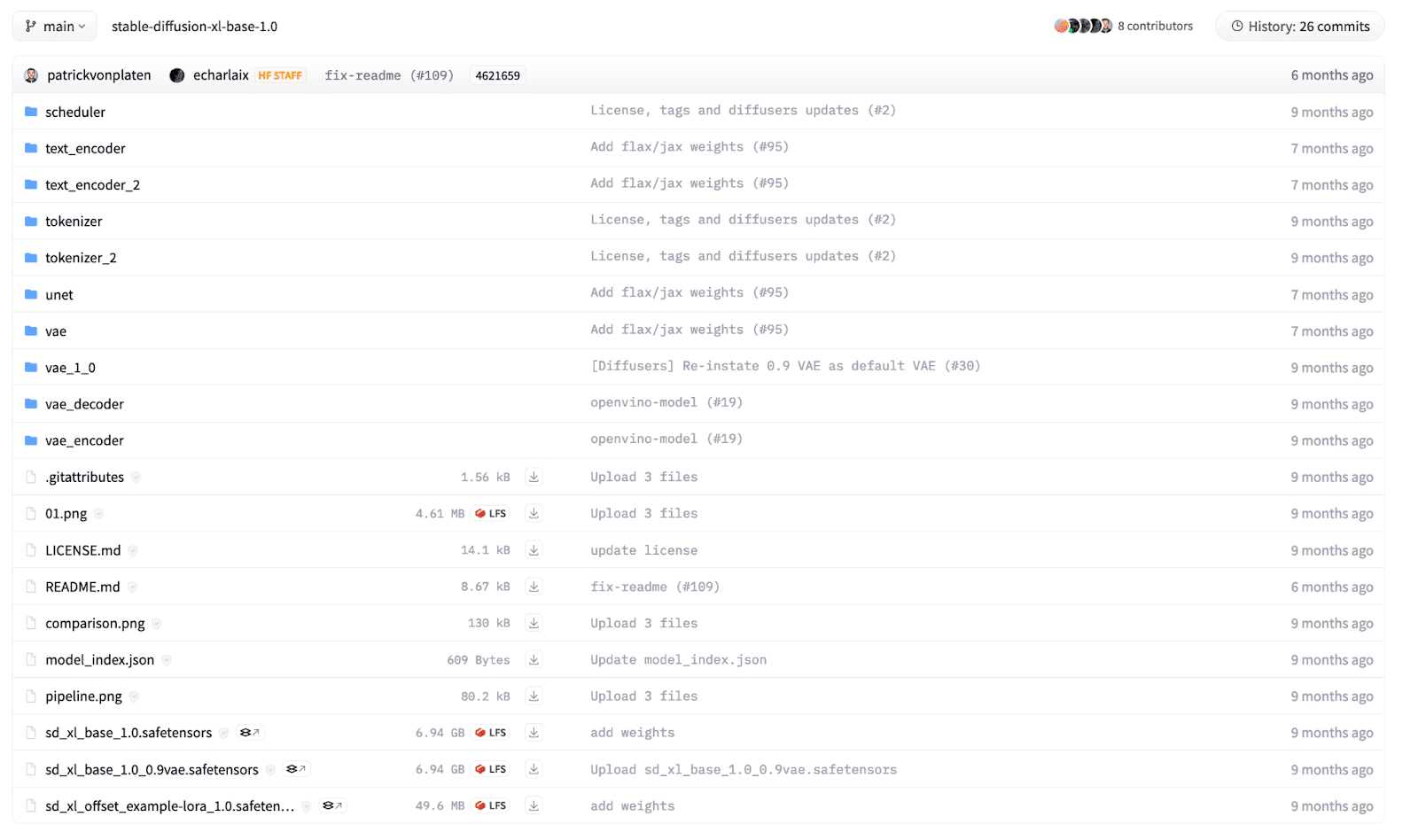

A diffusion model consists of multiple components, including but not limited to a text encoder, a UNet, schedulers, and a variational autoencoder. We can load the modules separately, but the Diffusers library provides a builder method that loads a pre-trained model from a structured checkpoint directory. For a beginner, it may be difficult to know which pipeline to use, so AutoPipeline makes it easier to load a model for a specific task.

In this example, we will load an SDXL model trained by Stability AI that is openly available on HuggingFace. The files are organized into directories named after the components, and each directory has its own safetensors file. The directory structure for the SDXL model looks as below:

To load the model in our code, we use the AutoPipelineForText2Image class and call the from_pretrained function.
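One way to see this layout for a checkpoint you have already downloaded is to walk the directory yourself. The sketch below assumes a local checkpoint folder whose subdirectories hold the component weights; the function name `summarize_checkpoint` and the example path are hypothetical, and the output depends entirely on what is on disk.

```python
from pathlib import Path


def summarize_checkpoint(root):
    """Map each subdirectory name to the sorted .safetensors files inside it."""
    summary = {}
    for sub in sorted(Path(root).iterdir()):
        if sub.is_dir():
            summary[sub.name] = sorted(p.name for p in sub.glob("*.safetensors"))
    return summary


# Example usage (hypothetical path, adjust to wherever the model was saved):
# for component, files in summarize_checkpoint("path/to/local/checkpoint").items():
#     print(component, files)
```

Directories that contain only configuration files show up with an empty list, which makes it easy to tell them apart from the components that carry weights.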

pipeline = AutoPipelineForText2Image.from_pretrained(

"stabilityai/stable-diffusion-xl-base-1.0",

torch_dtype=torch.float32,  # float32 for CPU, float16 for GPU

)

We provide the model path as the first argument. It can be the HuggingFace model card name, as above, or a local directory where you have downloaded the model beforehand. We also define the precision of the model weights as a keyword argument. We normally use 32-bit floating-point precision when we have to run the model on a CPU. However, running a diffusion model is computationally expensive, and inference on a CPU can take hours! On a GPU, we can use either 16-bit or 32-bit data types, but 16-bit is preferable because it uses less GPU memory.

The above command downloads the model from HuggingFace, which can take time depending on your internet connection. Model sizes can vary from 1 GB to over 10 GB.

Once a model is loaded, we need to move it to the appropriate hardware device. Use the following code to move the model to the CPU or GPU. Note that for Apple Silicon chips, you should move the model to an MPS device to leverage the GPU on macOS devices.

# Use "mps" on an M1/M2 Mac
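To make the precision trade-off concrete, here is a rough back-of-the-envelope sketch. It estimates the memory taken by the weights alone as parameters times bytes per parameter; the 1-billion-parameter figure is a hypothetical round number, not a measured value for SDXL, and real usage is higher because of activations and intermediate buffers.

```python
def weights_memory_gb(num_parameters, bits_per_weight):
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_parameters * bits_per_weight / 8 / 1e9


# Hypothetical model with 1 billion parameters (not a measured SDXL figure).
for bits in (32, 16):
    print(f"{bits}-bit: {weights_memory_gb(1_000_000_000, bits):.1f} GB")
# prints:
# 32-bit: 4.0 GB
# 16-bit: 2.0 GB
```

Halving the precision halves the weight footprint, which is why 16-bit is the usual choice on a GPU.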

DEVICE = "cuda" if torch.cuda.is_available() else "cpu"

pipeline.to(DEVICE)
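The device choice above can be extended to cover Apple Silicon as well. The sketch below is one possible arrangement, not the only one: the helper names are illustrative, the preference order (CUDA, then MPS, then CPU) is an assumption, and keeping float32 on MPS is a conservative assumption rather than a requirement.

```python
def pick_device(cuda_available, mps_available):
    """Prefer CUDA, then Apple's MPS backend, then fall back to the CPU."""
    if cuda_available:
        return "cuda"
    if mps_available:
        return "mps"
    return "cpu"


def pick_dtype_name(device):
    """Assumption: half precision only on CUDA, full precision everywhere else."""
    return "float16" if device == "cuda" else "float32"


def detect_device():
    """Query PyTorch for available backends; returns "cpu" if torch is missing."""
    try:
        import torch
    except ImportError:
        return "cpu"
    return pick_device(torch.cuda.is_available(), torch.backends.mps.is_available())
```

With these helpers, `pipeline.to(detect_device())` moves the pipeline to the detected device, and `getattr(torch, pick_dtype_name(device))` gives the matching dtype to pass as `torch_dtype` when loading.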

Inference

Now we are ready to generate images from textual prompts using the loaded diffusion model. We can run inference using the code below:

prompt = "Astronaut in a jungle, cold color palette, muted colors, detailed, 8k"

results = pipeline(

prompt=prompt,

height=1024,

width=1024,

num_inference_steps=20,

)

We call the pipeline object with several keyword arguments to control the generated images. The prompt is a string describing the image we want to generate. We can also set the height and width of the generated image, but they should be multiples of 8 or 16 because of the underlying transformer architecture. In addition, the total number of inference steps can be tuned to control the final image quality. More denoising steps result in higher-quality images but take longer to generate.

Finally, the pipeline returns a list of generated images. We can access the first image in the list and manipulate it as a Pillow image to either save or display it.

img = results.images[0]

img.save('result.png')

img  # Displays the image in a Jupyter notebook

Generated Image

Advanced Uses
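Because the height and width passed to the pipeline should be multiples of 8 or 16, arbitrary sizes need to be adjusted before the call. A small helper along these lines could do that; the name `snap_to_multiple` and the round-to-nearest behavior are illustrative choices, and the right base depends on the model being used.

```python
def snap_to_multiple(value, base=8):
    """Round value to the nearest multiple of base (ties round up), never below base."""
    return max(base, (value + base // 2) // base * base)


# Example: adjust a requested size before passing it as height/width.
height = snap_to_multiple(1020)  # 1024
width = snap_to_multiple(1000)   # 1000, already a multiple of 8
```

Passing `base=16` applies the stricter constraint for models that need it.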

The text-to-image example is just a basic tutorial to highlight the underlying usage of the Diffusers library. The library also provides several other functionalities, including image-to-image generation, inpainting, outpainting, and ControlNets. In addition, it gives fine control over each module in the diffusion model. The modules can be used as small building blocks that integrate seamlessly to create your own custom diffusion pipelines. It also provides functionality to train diffusion models on your own datasets and use cases.

Wrapping Up

In this article, we went over the basics of the Diffusers library and how to run a simple inference using a diffusion model. It is one of the most widely used generative AI pipelines, and features and modifications are added every day. There are many different use cases and features you can try, and the HuggingFace documentation and GitHub code are the best places to get started.

Kanwal Mehreen Kanwal is a machine learning engineer and a technical writer with a profound passion for data science and the intersection of AI with medicine. She co-authored the ebook “Maximizing Productivity with ChatGPT”. As a Google Generation Scholar 2022 for APAC, she champions diversity and academic excellence. She is also recognized as a Teradata Diversity in Tech Scholar, Mitacs Globalink Research Scholar, and Harvard WeCode Scholar. Kanwal is an ardent advocate for change, having founded FEMCodes to empower women in STEM fields.
