
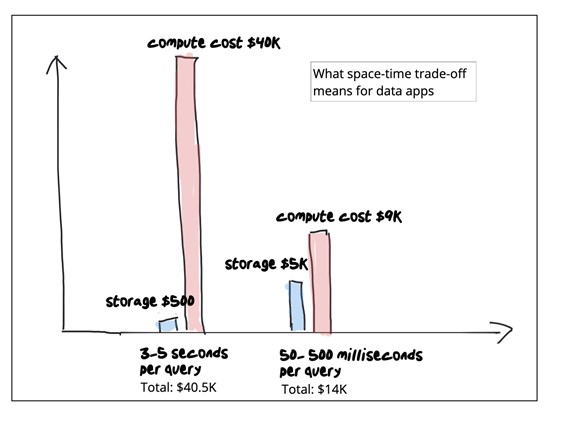

Imagine you had a big book, and you were looking for the section that talks about dinosaurs. Would you read through every page or use the index? The index will save you a lot of time and energy. Now imagine that it's a big book with a lot of words in really tiny print, and you need to find all the sections that talk about animals. Using the index will save you a LOT of time and energy. Extending this analogy to the world of data analytics: "time" is query latency and "energy" is compute cost.

What does this have to do with Snowflake? I'm personally a big fan of Snowflake: it's massively scalable, it's easy to use, and if you're making the right space-time tradeoff it's very affordable. However, if you make the wrong space-time tradeoff, you'll find yourself throwing more and more compute at it while your team continues to complain about latency. Once you understand how it really works, you can reduce your Snowflake compute cost and get better query performance for certain use cases. I focus on Snowflake here, but you can generalize this to most warehouses.

Understanding the space-time tradeoff in data analytics

In computer science, a space-time tradeoff is a way of solving a problem or calculation in less time by using more storage space, or of solving a problem in very little space by spending a long time.
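To make the definition concrete, here is a minimal Python sketch of the book-index analogy from the introduction. A full scan uses no extra space but rereads every word on each lookup; a word-to-section index costs extra memory once, after which each lookup is a single dictionary access. The toy data and the function names (`scan`, `build_index`, `lookup`) are hypothetical.

```python
from collections import defaultdict

# Hypothetical toy "book": each section is one string of words.
sections = [
    "the stegosaurus was a dinosaur with plates",
    "a tyrannosaurus dinosaur had tiny arms",
    "cats and dogs are common pets",
]

def scan(sections, word):
    """No extra space: read every word of every section on each lookup."""
    return [i for i, text in enumerate(sections) if word in text.split()]

def build_index(sections):
    """Spend extra space once: map each word to the set of sections containing it."""
    index = defaultdict(set)
    for i, text in enumerate(sections):
        for w in text.split():
            index[w].add(i)
    return index

def lookup(index, word):
    """Each lookup is now a single dictionary access instead of a full scan."""
    return sorted(index.get(word, ()))
```

Both `scan(sections, "dinosaur")` and `lookup(build_index(sections), "dinosaur")` return `[0, 1]`. The index pays off once the same text is queried many times, which is the situation described in the rest of this post.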

How Snowflake handles the space-time tradeoff

When data is loaded into Snowflake, it reorganizes that data into its compressed, columnar format and stores it in cloud storage. This means it's highly optimized for space, which directly translates to minimizing your storage footprint. The columnar design keeps related data closer together, but it requires computationally intensive scans to satisfy a query. That is an acceptable tradeoff for a system heavily optimized for storage. It's budget-friendly for analysts running occasional queries, but compute becomes prohibitively expensive as query volume increases due to programmatic access by high-concurrency applications.
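A toy Python model of the tradeoff described above follows. It makes no claims about Snowflake's actual file format. Rows are pivoted into columns, a low-cardinality column is run-length encoded to save space, and a filtered aggregate still has to walk every run of that column to find the matches. The sample data and helper names are hypothetical.

```python
from itertools import groupby

# Hypothetical sample rows; real warehouses use far richer encodings and metadata.
rows = [
    {"country": "US", "amount": 10},
    {"country": "US", "amount": 25},
    {"country": "US", "amount": 5},
    {"country": "DE", "amount": 40},
    {"country": "DE", "amount": 15},
]

def to_columns(rows):
    """Pivot row-oriented records into one list per column."""
    return {key: [row[key] for row in rows] for key in rows[0]}

def rle_encode(values):
    """Run-length encode a column as [(value, run_length), ...] to save space."""
    return [(value, len(list(group))) for value, group in groupby(values)]

def scan_sum(encoded_filter, amounts, wanted):
    """SUM(amount) WHERE filter = wanted: every run is visited, match or not."""
    total, position = 0, 0
    for value, run_length in encoded_filter:
        if value == wanted:
            total += sum(amounts[position:position + run_length])
        position += run_length
    return total
```

The column of five country codes compresses to two runs, but the cost of `scan_sum` still grows with the size of the column rather than with the number of matching rows.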

How Rockset handles the space-time tradeoff

Rockset, on the other hand, is built for real-time analytics. It's a real-time indexing database designed for millisecond-latency search, aggregations and joins, so it indexes every field in a Converged Index™, which combines a row index, a column index and a search index. This means it's highly optimized for time, which directly translates to doing less work and reducing compute cost. The price is a bigger storage footprint in exchange for faster queries and less compute. Rockset isn't the best fit if you're running occasional queries on a PB-scale dataset. It is best suited to serving high-concurrency applications in the sub-100TB range, because it makes an entirely different space-time tradeoff, resulting in faster performance at significantly lower compute costs.
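The idea of storing every field in more than one layout can be illustrated with a deliberately simplified Python model. This is a hypothetical sketch, not Rockset's implementation. Each document, including its nested fields (flattened to dotted paths), is written into a row index for point lookups, a column index for aggregations, and an inverted (search) index for selective filters. The class and method names are invented for illustration.

```python
from collections import defaultdict

def flatten(doc, prefix=""):
    """Flatten nested dicts into dotted field paths, e.g. {"a": {"b": 1}} -> {"a.b": 1}."""
    out = {}
    for key, value in doc.items():
        path = f"{prefix}{key}"
        if isinstance(value, dict):
            out.update(flatten(value, path + "."))
        else:
            out[path] = value
    return out

class ConvergedIndexSketch:
    """Hypothetical toy that stores every field three ways (values must be hashable)."""

    def __init__(self):
        self.rows = {}                    # row index: doc_id -> flattened document
        self.columns = defaultdict(dict)  # column index: field -> {doc_id: value}
        self.inverted = defaultdict(set)  # search index: (field, value) -> {doc_id}

    def insert(self, doc_id, doc):
        flat = flatten(doc)
        self.rows[doc_id] = flat
        for field, value in flat.items():
            self.columns[field][doc_id] = value
            self.inverted[(field, value)].add(doc_id)

    def get(self, doc_id):
        """Point lookup served by the row index."""
        return self.rows[doc_id]

    def column_sum(self, field):
        """Aggregation served by the column index."""
        return sum(self.columns[field].values())

    def find(self, field, value):
        """Selective filter served by the inverted index, with no scan."""
        return sorted(self.inverted.get((field, value), ()))
```

Every value is written three times, which is the extra storage this design trades for serving each query shape from the layout that suits it.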

Achieving lower query latency at lower compute cost

Snowflake uses columnar formats and cloud storage to optimize for storage cost. However, it needs to scan your data for each query. To accelerate performance, query execution is split among multiple processors that scan large portions of your dataset in parallel. To execute queries faster, you can exploit locality using micro-partitioning and clustering. You can also use parallelism to add more compute, until at some point you hit the upper bound on performance. When each query is computationally intensive and you start running many queries per second, your total monthly compute cost explodes.
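Micro-partitioning and clustering help because a warehouse can skip partitions whose metadata rules out a match. Snowflake documents that it keeps per-column value ranges for each micro-partition, but the Python below is only a simplified model of min/max pruning, not Snowflake's engine. The `Partition` class and `count_in_range` function are hypothetical. The model shows both halves of the argument: clustering makes pruning effective, yet every partition that survives pruning is still scanned in full.

```python
from dataclasses import dataclass, field

@dataclass
class Partition:
    """Toy micro-partition: one column's values plus min/max recorded at write time."""
    values: list
    lo: int = field(init=False)
    hi: int = field(init=False)

    def __post_init__(self):
        self.lo = min(self.values)
        self.hi = max(self.values)

def count_in_range(partitions, lo, hi):
    """Count values in [lo, hi]; return (matches, partitions_scanned)."""
    matches = scanned = 0
    for p in partitions:
        if p.hi < lo or p.lo > hi:
            continue  # pruned using metadata alone, no data read
        scanned += 1
        matches += sum(lo <= v <= hi for v in p.values)  # full scan of this partition
    return matches, scanned
```

With clustered data, `[Partition([1, 2, 3]), Partition([4, 5, 6]), Partition([7, 8, 9])]`, the range 4 to 5 scans one partition. The same nine values spread as `[1, 5, 9]`, `[2, 6, 7]`, `[3, 4, 8]` force all three partitions to be scanned for the same two matches.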

In stark contrast, Rockset indexes all fields, including nested fields, in a Converged Index™, which combines an inverted index, a columnar index and a row index. Because every field is indexed, you can expect space amplification, which is kept in check using an advanced storage architecture and compaction strategies. Data is also served from hot storage (NVMe SSD), so your storage cost is higher. This is a good tradeoff because application workloads are far more compute-intensive than storage-intensive. As of today, Rockset doesn't scan any faster than Snowflake. It simply tries really hard to avoid full scans. Our distributed SQL query engine uses multiple indexes in parallel, exploiting selective query patterns and accelerating aggregations over large numbers of records, to achieve millisecond latencies at significantly lower compute costs. Needle-in-a-haystack queries go straight to the inverted index and avoid scans completely. With each WHERE clause you add to a query, Rockset can use the inverted index to execute faster and use less compute, which is the exact opposite of a warehouse.
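Why can an extra WHERE clause make an indexed query cheaper rather than more expensive? The Python below sketches conjunctive filtering over an inverted index, assuming each (field, value) pair maps to a sorted list of document ids. This is the textbook posting-list intersection, shown as an illustration rather than as Rockset's query engine. Each additional predicate can only shrink the candidate set, and no column is ever scanned in full.

```python
def intersect_sorted(a, b):
    """Merge-intersect two sorted posting lists of document ids."""
    i = j = 0
    out = []
    while i < len(a) and j < len(b):
        if a[i] == b[j]:
            out.append(a[i])
            i += 1
            j += 1
        elif a[i] < b[j]:
            i += 1
        else:
            j += 1
    return out

def where_all(posting_lists):
    """AND together predicates, starting from the shortest (most selective) list."""
    if not posting_lists:
        raise ValueError("need at least one predicate")
    lists = sorted(posting_lists, key=len)
    result = lists[0]
    for plist in lists[1:]:
        if not result:
            break  # no candidates left, so the remaining predicates need not be read
        result = intersect_sorted(result, plist)
    return result
```

For example, with hypothetical posting lists `city=Paris -> [1, 4, 7, 9, 12]`, `status=active -> [2, 4, 5, 9, 11, 12]` and `plan=pro -> [4, 12, 30]`, the first two predicates yield `[4, 9, 12]`, and adding the third narrows the result to `[4, 12]`.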

One example of the kind of optimization required to achieve sub-second latencies: query parsing, optimizing, planning and scheduling take about 1.2 ms on Rockset, whereas in most warehouses the query startup cost runs in the hundreds of milliseconds.
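Some quick arithmetic shows why fixed startup cost matters for applications. The 1.2 ms figure comes from the text. The 100 ms warehouse figure is an assumed low end of "hundreds of milliseconds", and the 50 ms latency target is hypothetical. The second function also assumes startup work is CPU-bound and runs serially on one worker, which is a simplification.

```python
# Startup figures: 1.2 ms is from the text; 100 ms is an assumed low end of "100s of ms".
ROCKSET_STARTUP_MS = 1.2
WAREHOUSE_STARTUP_MS = 100.0

def execution_budget_ms(target_ms, startup_ms):
    """Latency left for actual query execution after fixed startup work is paid."""
    return target_ms - startup_ms

def startups_per_second(startup_ms):
    """Queries one worker could start per second if startup were serial and CPU-bound."""
    return 1000.0 / startup_ms
```

Against a hypothetical 50 ms target, `execution_budget_ms(50, ROCKSET_STARTUP_MS)` leaves about 48.8 ms for real work, while `execution_budget_ms(50, WAREHOUSE_STARTUP_MS)` is already -50 ms before a single row is read. Under the serial assumption, one worker could start roughly 833 queries per second at 1.2 ms each, versus 10 at 100 ms.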

Achieving lower data latency at lower compute cost

A cloud data warehouse is highly optimized for batch inserts. An update to an existing record typically results in a copy-on-write of large swaths of data. New writes are accumulated, and when the batch is full, that batch must be compressed and published before it's queryable.
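Both behaviors described above can be modeled in a few lines of Python. This is a hypothetical toy, not how any particular warehouse stores data, and compression is omitted. Writes sit in a buffer that queries cannot see until a full batch is sealed into an immutable partition, and updating a single record rewrites every partition that contains it.

```python
class BatchTable:
    """Hypothetical toy table built from immutable partitions (compression omitted)."""

    def __init__(self, batch_size):
        self.batch_size = batch_size
        self.partitions = []      # published, immutable tuples of (key, value) rows
        self.pending = []         # buffered writes, not yet queryable
        self.rows_rewritten = 0   # rows copied by copy-on-write updates

    def insert(self, key, value):
        self.pending.append((key, value))
        if len(self.pending) >= self.batch_size:
            # Batch is full: seal it into an immutable partition so queries can see it.
            self.partitions.append(tuple(self.pending))
            self.pending = []

    def update(self, key, value):
        """Copy-on-write: any partition holding the key is rewritten in its entirety."""
        for i, part in enumerate(self.partitions):
            if any(k == key for k, _ in part):
                self.partitions[i] = tuple((k, value if k == key else v) for k, v in part)
                self.rows_rewritten += len(part)
        self.pending = [(k, value if k == key else v) for k, v in self.pending]

    def query(self, key):
        """Only published partitions are visible; pending rows are not."""
        for part in self.partitions:
            for k, v in part:
                if k == key:
                    return v
        return None
```

With `batch_size=3`, the first two inserts are invisible to `query` until a third write seals the batch. Changing one value afterwards copies all three rows of its partition.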

Continuous Data Ingestion in Minutes vs. Milliseconds

Snowpipe is Snowflake's continuous data ingestion service. Snowpipe loads data within minutes after files are added to a stage and submitted for ingestion. In short, Snowpipe provides a "pipeline" for loading fresh data in micro-batches, but it typically takes many minutes and incurs very high compute cost. For example, at 4K writes per second, this approach results in hundreds of dollars of compute per hour.
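Why does micro-batching put a floor under data latency? The rough Python model below illustrates this. It does not model Snowpipe's internals or pricing, and the batch size and load time in the example are hypothetical. A record cannot become queryable until its batch has filled and been loaded, so its latency is roughly the time spent waiting for the batch to fill plus the load time.

```python
def microbatch_latency_s(writes_per_s, batch_records, load_s):
    """Approximate (average, worst-case) seconds until a record becomes queryable.

    The first record of a batch waits for the whole batch to fill; the last waits
    about zero, so on average a record waits half the fill time, then the load time.
    """
    fill_s = batch_records / writes_per_s
    return fill_s / 2 + load_s, fill_s + load_s
```

At the 4K writes per second from the text, a hypothetical batch of 240,000 records (one minute of writes) with a hypothetical 60-second load gives `microbatch_latency_s(4000, 240_000, 60) == (90.0, 120.0)`. Shrinking batches lowers latency but means more, smaller loads, each with its own overhead.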

In contrast, Rockset is a fully mutable index that uses RocksDB LSM trees and a lockless protocol to make writes visible to existing queries as soon as they happen. Remote compaction speeds up the indexing of data even when dealing with bursty writes. The LSM index compresses data while allowing inserts, updates and deletes of individual records, so new data is queryable within a second of being generated. This mutability makes it easy to stay in sync with OLTP databases or data streams. The approach reduces both data latency and compute cost for real-time updates. For example, at 4K writes per second, new data is queryable in 350 milliseconds and uses roughly one tenth of the compute compared to Snowpipe.
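The core LSM idea fits in a short Python sketch. This is a textbook model under simplifying assumptions, not RocksDB or Rockset. Writes land in an in-memory memtable and are readable immediately. Full memtables are flushed as immutable sorted runs. Updates and deletes are simply newer entries (a delete writes a tombstone) that shadow older ones, and compaction merges runs to reclaim space. Lockless concurrency, remote compaction and compression are not modeled, and the class name `TinyLSM` is hypothetical.

```python
import bisect

TOMBSTONE = object()  # marker written by delete()

class TinyLSM:
    """Minimal LSM-tree sketch: a memtable plus immutable sorted runs, newest first."""

    def __init__(self, memtable_limit=2):
        self.memtable = {}
        self.runs = []  # each run is a sorted list of (key, value); runs[0] is newest
        self.memtable_limit = memtable_limit

    def put(self, key, value):
        self.memtable[key] = value  # visible to reads immediately, no batch to wait for
        if len(self.memtable) >= self.memtable_limit:
            self.flush()

    def delete(self, key):
        self.put(key, TOMBSTONE)

    def flush(self):
        """Freeze the memtable into a new immutable sorted run."""
        self.runs.insert(0, sorted(self.memtable.items()))
        self.memtable = {}

    def get(self, key):
        if key in self.memtable:
            value = self.memtable[key]
        else:
            value = None
            for run in self.runs:  # the newest run containing the key wins
                i = bisect.bisect_left(run, (key,))
                if i < len(run) and run[i][0] == key:
                    value = run[i][1]
                    break
        return None if value is TOMBSTONE else value

    def compact(self):
        """Merge all runs into one; dropping tombstones is safe because every run is merged."""
        merged = {}
        for run in reversed(self.runs):  # oldest first, so newer versions overwrite older
            merged.update(run)
        self.runs = [sorted((k, v) for k, v in merged.items() if v is not TOMBSTONE)]
```

Writing `a=1` and then `a=10` leaves both versions on disk until compaction, but `get("a")` returns `10` immediately, because the newer entry shadows the older one.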

Friends don't let friends build apps on warehouses

Embedded content: https://youtu.be/-vaE0uB6eqc

Cloud data warehouses like Snowflake are purpose-built for massive-scale batch analytics, i.e. large-scale aggregations and joins on PBs of historical data. Rockset is built for serving applications with millisecond-latency search, aggregations and joins. Snowflake is optimized for storage efficiency, while Rockset is optimized for compute efficiency. One is great for batch analytics. The other is great for real-time analytics. Data apps have selective queries. They have low-latency, high-concurrency requirements. They're always on. If your warehouse compute cost is exploding, ask yourself whether you're making the right space-time tradeoff for your particular use case.
