
Performing ad-hoc analysis is a daily part of life for most data scientists and analysts on operations teams.

They are often held back by not having direct and immediate access to their data, because the data might not be in a data warehouse or it might be stored across multiple systems in different formats.

This typically means that a data engineer will need to help develop pipelines and tables that the analysts can access in order to do their work.

However, even here there is still a problem.

Data engineers are usually backed up with the amount of work they need to do, and data for ad-hoc analysis is often not a priority. This leads to analysts and data scientists either doing nothing or finagling their own data pipeline, which takes their time away from what they should be focused on.

Even if data engineers could help develop pipelines, the time required for new data to get through the pipeline could prevent operations analysts from analyzing data as it happens.

This was, and honestly still is, a major problem in large companies.

Getting access to data.

Luckily there are lots of great tools today to fix this! To demonstrate, we will be using a free online data set that comes from Citi Bike in New York City, as well as S3, DynamoDB and Rockset, a real-time cloud data store.

Citi Bike Data, S3 and DynamoDB

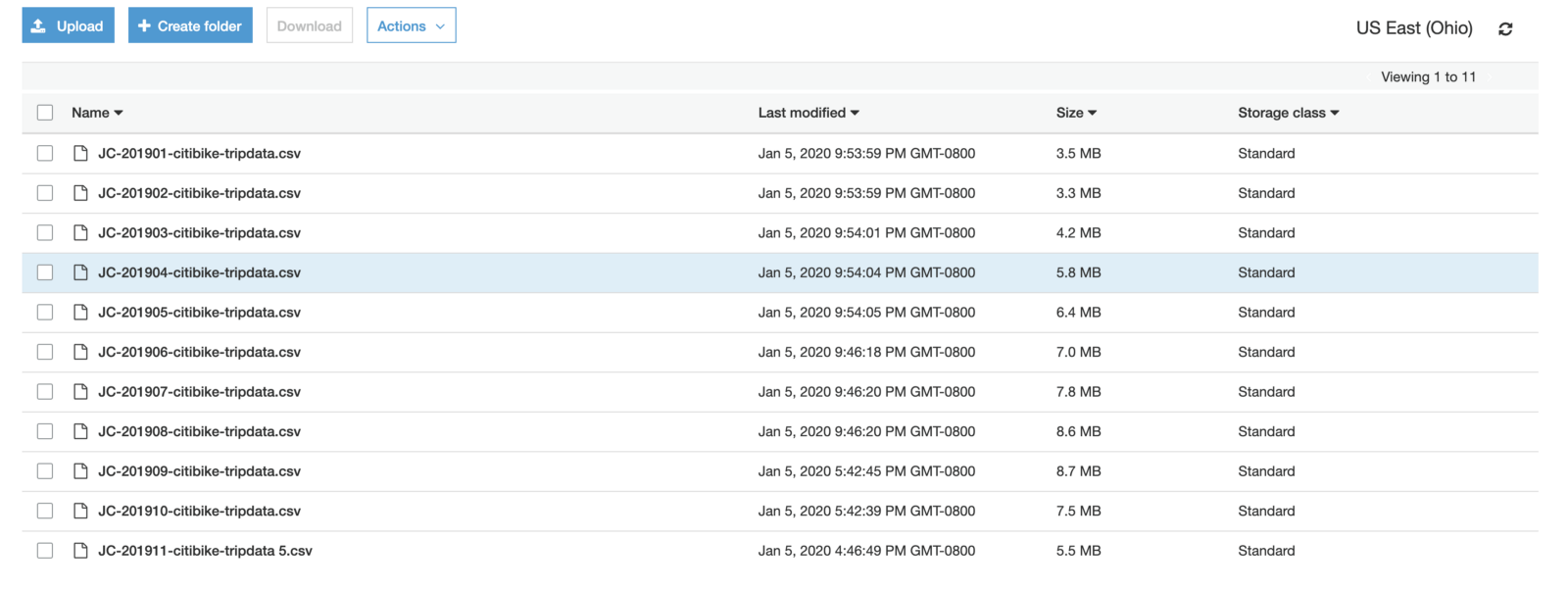

To set up this data we will be using the CSV files of Citi Bike ride data as well as the Citi Bike station data.

We will be loading these data sets into two different AWS services. Specifically, we will be using DynamoDB and S3.

This will allow us to demonstrate the fact that it can sometimes be difficult to analyze data from both of these systems in the same query engine. In addition, the station data for DynamoDB is stored in JSON format, which works well with DynamoDB. This is also because the station data is closer to live and seems to update every 30 seconds to 1 minute, whereas the CSV data for the actual bike rides is updated once a month. We’ll see how we can bring this near-real-time station data into our analysis without building out complicated data infrastructure.

Having these data sets in two different systems will also demonstrate where tools can come in handy. Rockset, for example, has the ability to easily join across different data sources such as DynamoDB and S3.

For a data scientist or analyst, this can make it easier to perform ad-hoc analysis without needing to have the data transformed and pulled into a data warehouse first.

That being said, let’s start looking into this Citi Bike data.

Loading Data Without a Data Pipeline

The ride data is stored in a monthly file as a CSV, which means we need to pull in each file in order to get the whole year.

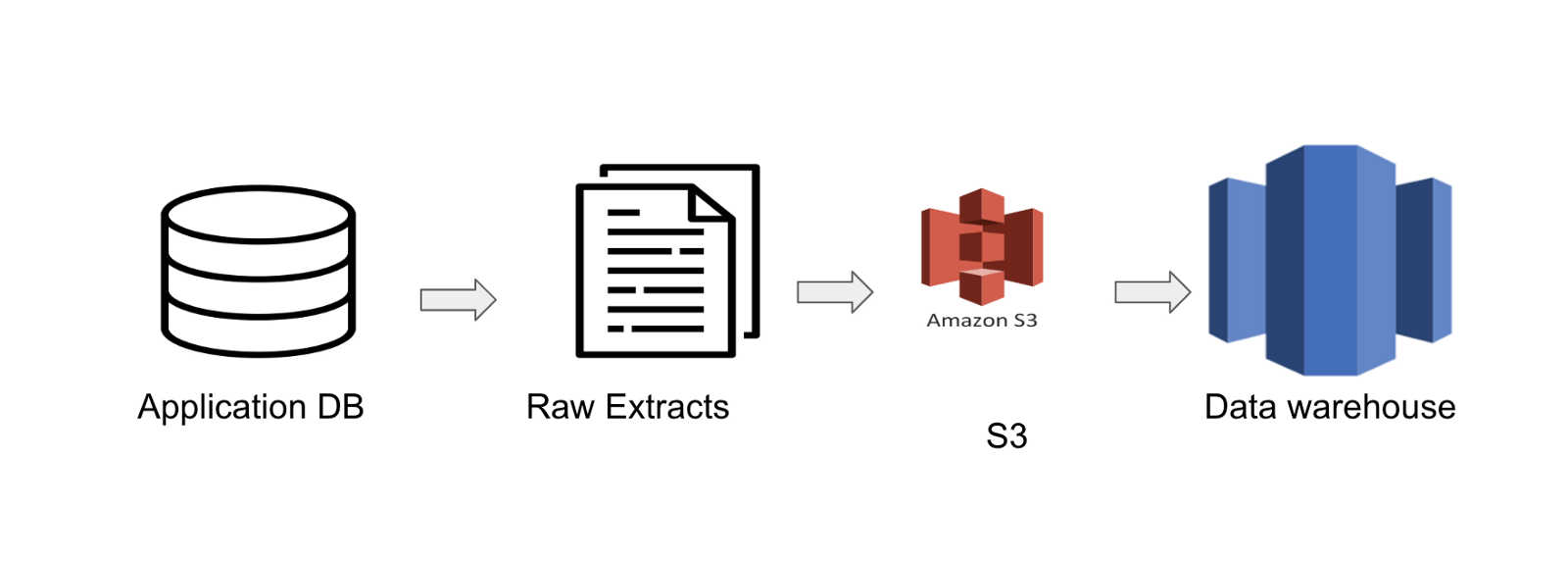

For those who are used to the typical data engineering process, you would need to set up a pipeline that automatically checks the S3 bucket for new data and then loads it into a data warehouse like Redshift.

The data would follow a path similar to the one laid out below.

This means you need a data engineer to set up a pipeline.

However, in this case I didn’t need to set up any sort of data warehouse. Instead, I just loaded the files into S3 and then Rockset treated it all as one table.

Even though there are 3 different files, Rockset treats each folder as its own table. This is similar to some other data storage systems that store their data in “partitions” that are essentially just folders.

Not only that, it didn’t freak out when a new column was added to the end. Instead, it just nulled out the rows that didn’t have that column. This is great because it allows new columns to be added without a data engineer needing to update a pipeline.
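To make the null-filling behavior concrete, here is a minimal Python sketch of the idea, not Rockset’s actual implementation. It treats several CSV files as one table and fills in `None` for rows from files that lack a later-added column. The function name, the column names (`starttime`, `usertype`, `bike_type`) and the sample rows are all assumptions made up for illustration.

```python
import csv
import io


def load_files_as_one_table(files):
    """Combine several CSV texts into one table, null-filling missing columns.

    `files` is a list of CSV strings (hypothetical stand-ins for monthly files).
    Returns the union of column names and a list of row dicts.
    """
    rows, columns = [], []
    for text in files:
        reader = csv.DictReader(io.StringIO(text))
        # Track every column name seen so far, in first-seen order.
        for name in reader.fieldnames or []:
            if name not in columns:
                columns.append(name)
        rows.extend(reader)
    # Rows from older files get None for columns they never had.
    table = [{c: row.get(c) for c in columns} for row in rows]
    return columns, table


# Illustrative data only: the October file gains a hypothetical extra column.
september = "starttime,usertype\n2019-09-01 08:15:00,Subscriber\n"
october = "starttime,usertype,bike_type\n2019-10-01 17:40:00,Customer,classic\n"

columns, table = load_files_as_one_table([september, october])
print(columns)
print(table)
```

The September row ends up with `bike_type` set to `None`, which mirrors the "nulled out" rows described above.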

Analyzing Citi Bike Data

Generally, a good way to start is simply to plot the data out to make sure it somewhat makes sense (just in case you have bad data).

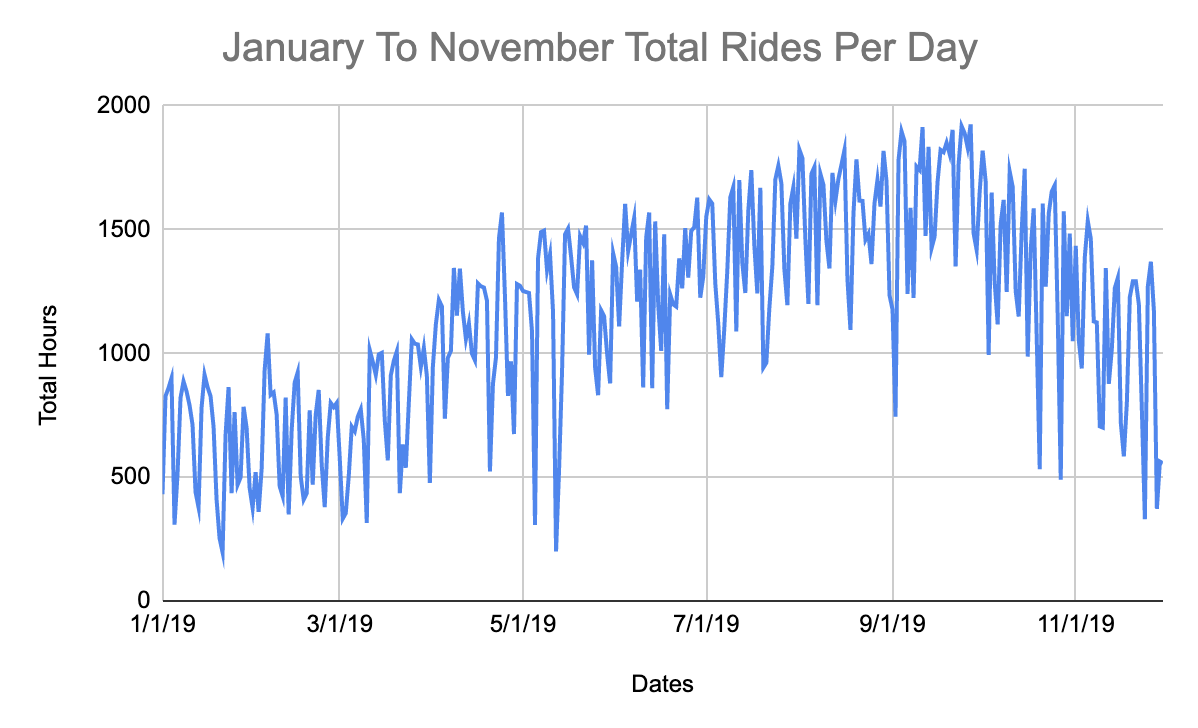

We’ll start with the CSVs stored in S3, and we’ll graph out usage of the bikes month over month.

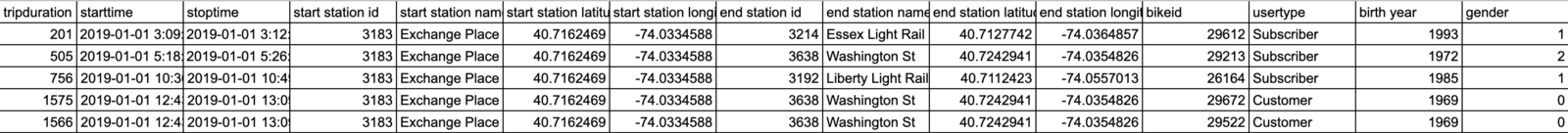

Ride Data Example:

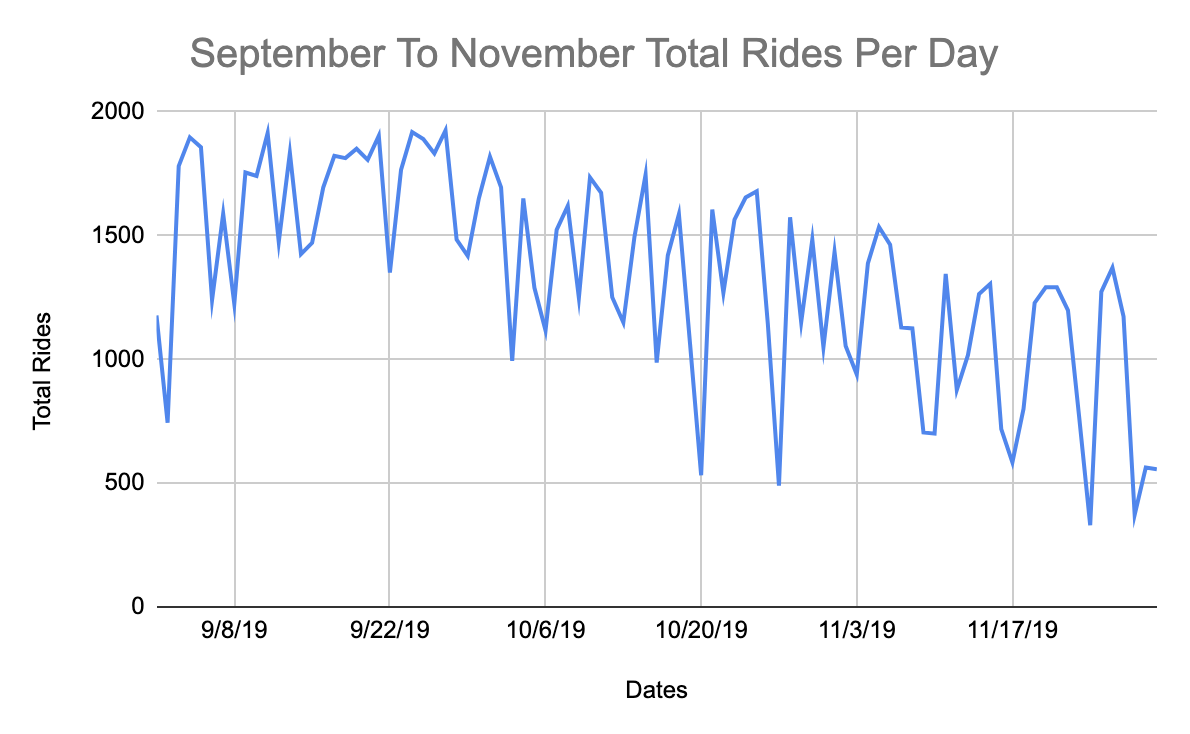

To start off, we’ll just graph the ride data from September 2019 to November 2019. Below is all you will need for this query.

Embedded content: https://gist.github.com/bAcheron/2a8613be13653d25126d69e512552716

One thing you will notice is that I cast the datetime back to a string. This is because Rockset stores datetime data more like an object.
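As a rough illustration of the same idea outside of SQL, the sketch below parses each ride’s start time into a datetime, formats it back into a `YYYY-MM` string and counts rides per month. It is not the query from the gist; the timestamp format and the sample values are assumptions.

```python
from collections import Counter
from datetime import datetime


def rides_per_month(start_times):
    """Count rides per calendar month from start-time strings."""
    counts = Counter()
    for raw in start_times:
        # Assumed timestamp layout; real files may include fractional seconds.
        started = datetime.strptime(raw, "%Y-%m-%d %H:%M:%S")
        # Format the datetime back to a string so it can be used as a label.
        counts[started.strftime("%Y-%m")] += 1
    return dict(sorted(counts.items()))


# Illustrative start times only, not real Citi Bike rows.
sample = [
    "2019-09-01 08:15:00",
    "2019-09-14 18:02:11",
    "2019-10-03 07:45:30",
    "2019-11-20 12:00:00",
]
monthly = rides_per_month(sample)
print(monthly)
```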

Taking that data, I plotted it and you can see reasonable usage patterns. If we really wanted to dig into this, we would probably look into what was driving the dips to see if there was some sort of pattern, but for now we are just trying to see the general trend.

Let’s say you want to load more historical data because this data seems pretty consistent.

Again, there is no need to load more data into a data warehouse. You can just upload the data into S3 and it will automatically be picked up.

In the graphs below, you can see the history going further back.

From the perspective of an analyst or data scientist, this is great because I didn’t need a data engineer to create a pipeline to answer my question about the data trend.

Looking at the chart above, we can see a trend where fewer people seem to ride bikes in winter, spring and fall, but ridership picks up for summer. This makes sense because I don’t foresee many people wanting to go out when it’s raining in NYC.

All in all, this data passes the gut check, so we will look at it from a few more perspectives before joining the data.

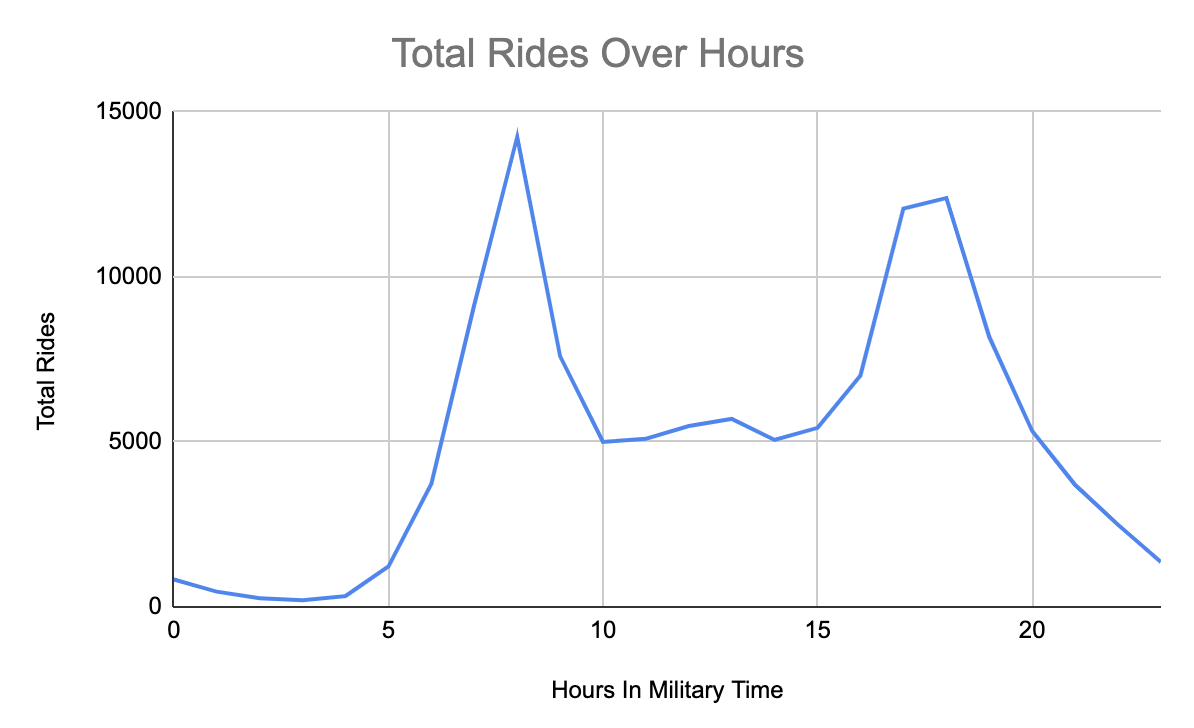

What is the distribution of rides on an hourly basis?

Our next question asks what the distribution of rides looks like on an hourly basis.

To answer this question, we need to extract the hour from the start time. This requires the EXTRACT function in SQL. Using that hour, you can then average the ride counts regardless of the specific date. Our goal is to see the distribution of bike rides.
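For readers who want to see the logic spelled out, here is a small Python sketch of the hour extraction and averaging step. It is an illustration under assumptions, not the query used in this post: the timestamp format and sample values are made up, and the average divides each hour’s total by the number of distinct dates in the data.

```python
from collections import defaultdict
from datetime import datetime


def average_rides_per_hour(start_times):
    """Average number of rides starting in each hour of the day.

    Totals per hour are divided by the number of distinct dates seen,
    so the result ignores which specific date a ride happened on.
    """
    totals = defaultdict(int)
    days = set()
    for raw in start_times:
        started = datetime.strptime(raw, "%Y-%m-%d %H:%M:%S")
        days.add(started.date())
        totals[started.hour] += 1  # the Python analogue of EXTRACT(hour ...)
    return {hour: totals[hour] / len(days) for hour in sorted(totals)}


# Illustrative start times only: two days of made-up rides.
sample = [
    "2019-09-02 08:05:00",
    "2019-09-02 08:40:00",
    "2019-09-02 17:30:00",
    "2019-09-03 08:10:00",
]
hourly = average_rides_per_hour(sample)
print(hourly)
```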

We are not going to go through every step we took from a query perspective, but you can look at the query and the chart below.

Embedded content: https://gist.github.com/bAcheron/d505989ce3e9bc756fcf58f8e760117b

As you can see, there is clearly a trend in when people ride bikes. Specifically, there are surges in the morning and then again in the evening. This can be useful when it comes to understanding when it might be a good time to do maintenance or when bike racks are likely to run out.

But perhaps there are other patterns underlying this specific distribution.

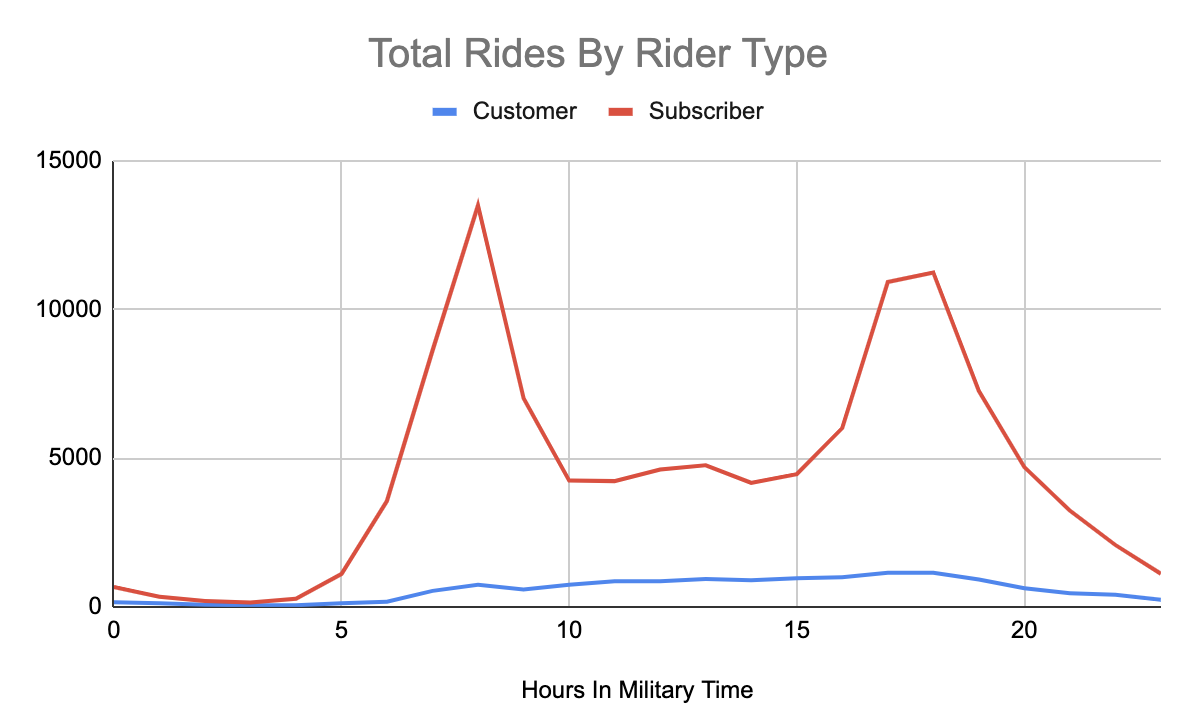

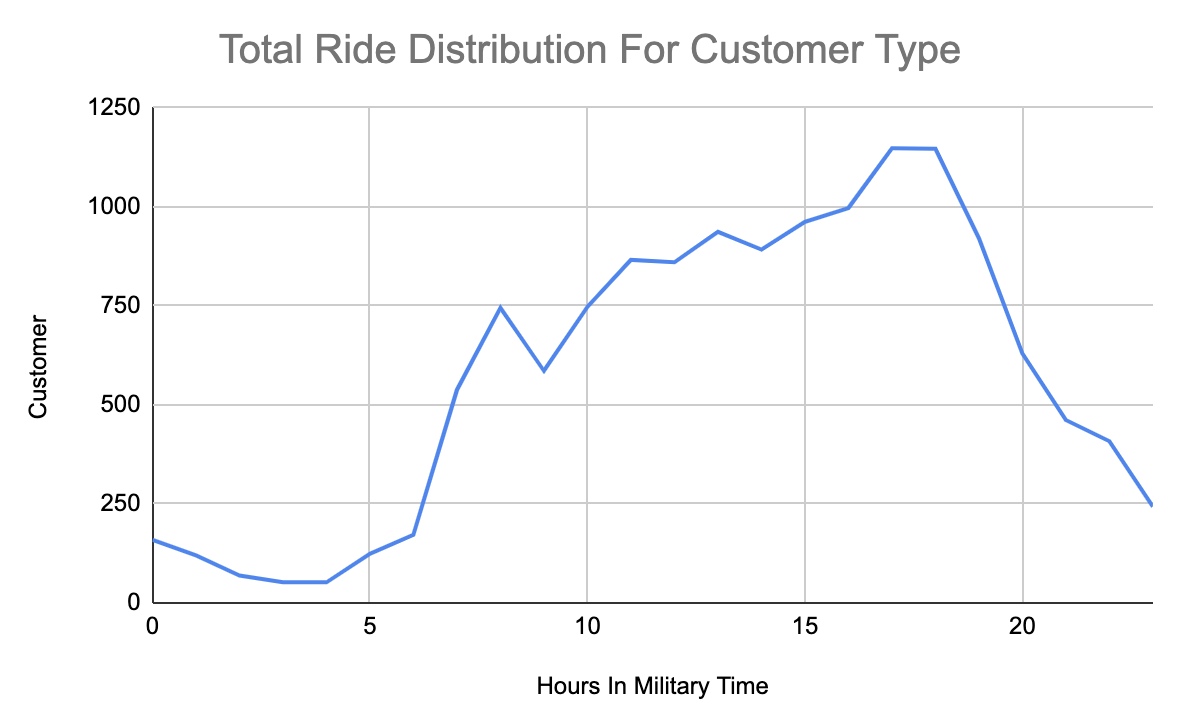

What time do different riders use bikes?

Continuing on this thought, we also wanted to see if there were specific trends per rider type. This data set has 2 rider types: 3-day customer passes and annual subscriptions.

So we kept the hour extract and added in the rider type field.
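A minimal sketch of that grouping, assuming each ride is a record with `starttime` and `usertype` fields (the field names, the `Subscriber` and `Customer` labels and the sample rows are assumptions for illustration):

```python
from collections import Counter
from datetime import datetime


def rides_by_type_and_hour(rides):
    """Count rides per (rider type, start hour) pair."""
    counts = Counter()
    for ride in rides:
        hour = datetime.strptime(ride["starttime"], "%Y-%m-%d %H:%M:%S").hour
        counts[(ride["usertype"], hour)] += 1
    return counts


def peak_hour(counts, rider_type):
    """Return the start hour with the most rides for one rider type."""
    by_hour = {h: n for (t, h), n in counts.items() if t == rider_type}
    return max(by_hour, key=by_hour.get)


# Illustrative rows only, not real Citi Bike data.
rides = [
    {"starttime": "2019-09-02 08:05:00", "usertype": "Subscriber"},
    {"starttime": "2019-09-02 08:40:00", "usertype": "Subscriber"},
    {"starttime": "2019-09-02 18:10:00", "usertype": "Subscriber"},
    {"starttime": "2019-09-02 17:20:00", "usertype": "Customer"},
    {"starttime": "2019-09-02 17:45:00", "usertype": "Customer"},
    {"starttime": "2019-09-02 11:00:00", "usertype": "Customer"},
]
counts = rides_by_type_and_hour(rides)
print(peak_hour(counts, "Subscriber"), peak_hour(counts, "Customer"))
```

Plotting these counts as one line per rider type is what makes differences between the two groups visible.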

Looking at the chart below, we can see that the hourly trend seems to be driven by the subscriber customer type.

However, if we examine the customer rider type, we actually see a very different pattern. Instead of having two main peaks, there is a slowly rising curve throughout the day that peaks around 17:00 to 18:00 (5–6 PM).

It would be interesting to dig into the why here. Is it because people who purchase a 3-day pass are using it at the last minute, or perhaps because they are using it from a specific area? Does this trend look constant day over day?

Joining Data Sets Across S3 and DynamoDB

Finally, let’s join in data from DynamoDB to get updates about the bike stations.

One reason we might want to do this is to figure out which stations frequently have 0 bikes left and also have a high amount of traffic. This could be limiting riders from being able to get a bike, because when they go for a bike it’s not there. This would negatively impact subscribers who might expect a bike to be available.

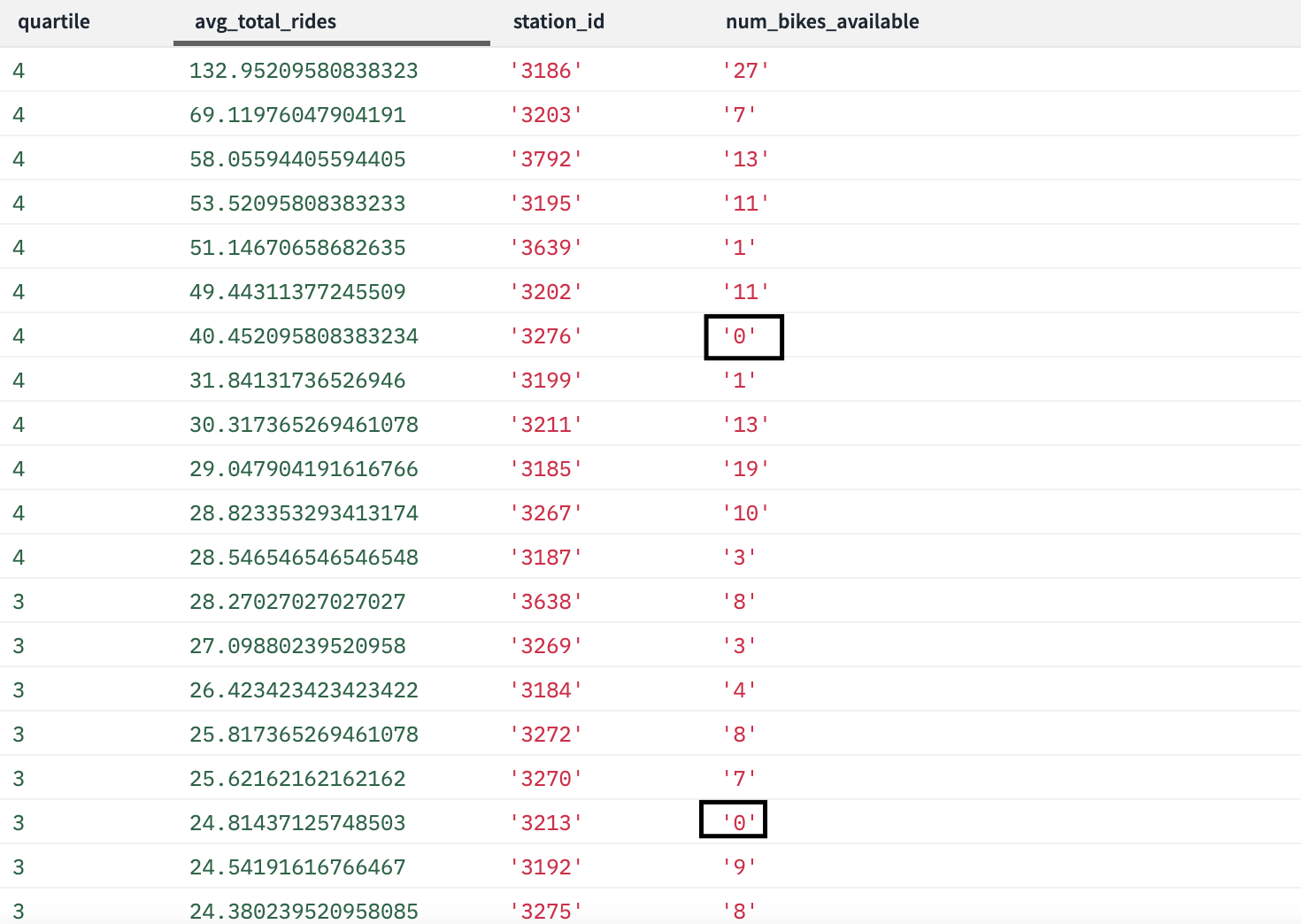

Below is a query that looks at the average rides per day per start station. We also added in a quartile so we can look into the upper quartiles for average rides to see if there are any empty stations.

Embedded content: https://gist.github.com/bAcheron/28b1c572aaa2da31e43044a743e7b1f3
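The logic of that query can be sketched in plain Python as follows. This is an illustration under assumptions rather than a translation of the gist: the station IDs, the `num_bikes_available` field name and the sample data are hypothetical, quartiles are assigned by simple rank (4 is the busiest), and the per-day average only counts days on which a station had at least one ride.

```python
from collections import defaultdict


def average_rides_per_day(rides):
    """rides: iterable of (station_id, date_string) pairs, one per ride."""
    per_station_day = defaultdict(int)
    for station_id, day in rides:
        per_station_day[(station_id, day)] += 1
    totals, day_counts = defaultdict(int), defaultdict(int)
    for (station_id, _), count in per_station_day.items():
        totals[station_id] += count
        day_counts[station_id] += 1  # only days with at least one ride
    return {s: totals[s] / day_counts[s] for s in totals}


def assign_quartiles(averages):
    """Rank stations by average rides; 1 = least busy, 4 = busiest."""
    ranked = sorted(averages, key=averages.get)
    n = len(ranked)
    return {s: 1 + (i * 4) // n for i, s in enumerate(ranked)}


def busy_empty_stations(rides, station_status):
    """Join ride averages with live station status on station ID."""
    quartiles = assign_quartiles(average_rides_per_day(rides))
    return sorted(
        s for s, q in quartiles.items()
        if q == 4 and station_status.get(s, {}).get("num_bikes_available") == 0
    )


# Illustrative data only: rides stand in for the S3 CSVs,
# station_status stands in for the DynamoDB station records.
rides = (
    [("A", "2019-11-01")] * 1
    + [("B", "2019-11-01")] * 2
    + [("C", "2019-11-01")] * 3
    + [("D", "2019-11-01")] * 4
    + [("D", "2019-11-02")] * 6
)
station_status = {
    "A": {"num_bikes_available": 0},
    "B": {"num_bikes_available": 5},
    "C": {"num_bikes_available": 5},
    "D": {"num_bikes_available": 0},
}
empty_and_busy = busy_empty_stations(rides, station_status)
print(empty_and_busy)
```

Station A is empty but quiet, so only the busy and empty station D is flagged.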

We listed the output below, and as you can see there are 2 stations currently empty that have high bike usage in comparison to the other stations. We would recommend tracking this over the course of a few weeks to see if this is a common occurrence. If it is, then Citi Bike might want to consider adding more stations or figuring out a way to reposition bikes to ensure customers always have rides.

For operations analysts, being able to track live which high-usage stations are low on bikes can make it easier to coordinate the teams that might be helping to redistribute bikes around town.

Rockset’s ability to read data live from an application database such as DynamoDB can provide direct access to the data without any form of data warehouse. This avoids waiting for a daily pipeline to populate data. Instead, you can just read this data live.

Live, Ad-Hoc Analysis for Better Operations

Whether you are a data scientist or a data analyst, the need to wait on data engineers and software developers to create data pipelines can slow down ad-hoc analysis. As more and more data storage systems are created, this only further complicates the work of everyone who manages data.

Thus, being able to easily access, join and analyze data that isn’t in a traditional data warehouse can prove to be very helpful and can lead to quick insights like the one about empty bike stations.

Ben has spent his career focused on all forms of data. He has focused on developing algorithms to detect fraud, reduce patient readmission and redesign insurance provider policy to help reduce the overall cost of healthcare. He has also helped develop analytics for marketing and IT operations in order to optimize limited resources such as staff and budget. Ben privately consults on data science and engineering problems. He has experience both working hands-on with technical problems and helping leadership teams develop strategies to maximize their data.

Photograph by ZACHARY STAINES on Unsplash
