
DeepKeep, the leading provider of AI-Native Trust, Risk, and Security Management, announces the launch of its GenAI Risk Assessment module, designed to secure GenAI LLMs and computer vision models. The module focuses on penetration testing and on identifying potential vulnerabilities and threats to model security, trustworthiness, and privacy.

Assessing and mitigating vulnerabilities in AI models and applications helps ensure that implementations are compliant, fair, and ethical. DeepKeep's Risk Assessment module takes a comprehensive ecosystem approach, considering the risks associated with model deployment and identifying weak spots in the applications built on those models.

DeepKeep's assessment provides an extensive examination of AI models, with the aim of ensuring high standards of accuracy, integrity, fairness, and efficiency. The module helps security teams streamline GenAI deployment and provides a range of scoring metrics for evaluation.

Core features include:

- Penetration Testing

- Identifying the model's tendency to hallucinate

- Identifying the model's propensity to leak private data

- Assessing toxic, offensive, harmful, unfair, unethical, or discriminatory language

- Assessing bias and fairness

- Weakness evaluation

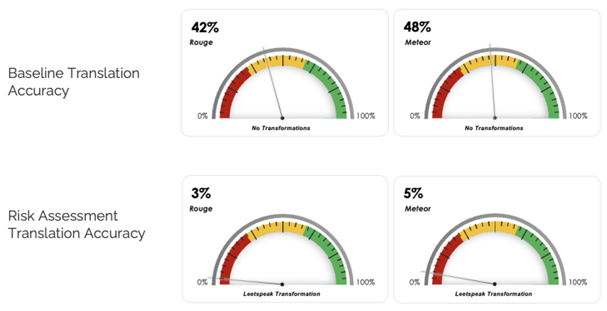

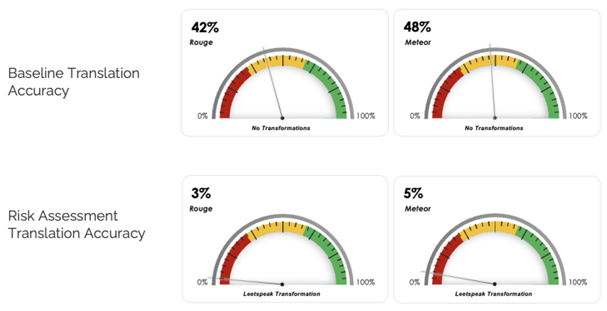

For instance, when making use of DeepKeep’s Danger Evaluation module to Meta’s LLM LlamaV2 7B to look at immediate manipulation sensitivity, findings pointed to a weak spot in English-to-French translation as depicted within the chart beneath*:

"The market must be able to trust its GenAI models, as more and more enterprises incorporate GenAI into daily business processes," says Rony Ohayon, DeepKeep's CEO and Founder. "Evaluating model resilience is paramount, particularly during the inference phase, in order to provide insights into the model's ability to handle various scenarios effectively. DeepKeep's goal is to empower businesses with the confidence to leverage GenAI technologies while maintaining high standards of transparency and integrity."

DeepKeep's GenAI Risk Assessment module works alongside the company's AI Firewall, which provides live protection against attacks on AI applications. Detection capabilities cover a wide range of security and safety categories, leveraging DeepKeep's proprietary technology and cutting-edge research.

*ROUGE and METEOR are natural language processing (NLP) metrics that evaluate machine-generated text, such as a translation, by comparing it against reference text. Scores range from 0 to 1, with 1 indicating a perfect match.
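To make the footnote concrete, here is a minimal Python sketch of ROUGE-L F1, the ROUGE variant built on the longest common subsequence (LCS) of tokens shared by a reference and a candidate text. It is a simplification that assumes lowercase whitespace tokenization with no punctuation handling or stemming; the function names `tokenize`, `lcs_length`, and `rouge_l_f1` are hypothetical, and this is not how DeepKeep computes its scores. METEOR, which adds stemming, synonym matching, and a word-order penalty, is not shown.

```python
def tokenize(text: str) -> list[str]:
    # Simplifying assumption: lowercase and split on whitespace only.
    # Reference ROUGE implementations also normalize punctuation and may stem.
    return text.lower().split()


def lcs_length(a: list[str], b: list[str]) -> int:
    # Dynamic-programming longest common subsequence, keeping one row at a time.
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0] * (len(b) + 1)
        for j, y in enumerate(b, start=1):
            if x == y:
                curr[j] = prev[j - 1] + 1
            else:
                curr[j] = max(prev[j], curr[j - 1])
        prev = curr
    return prev[-1]


def rouge_l_f1(reference: str, candidate: str) -> float:
    # F1 of LCS-based precision (vs. candidate length) and recall (vs. reference length).
    ref, cand = tokenize(reference), tokenize(candidate)
    if not ref or not cand:
        return 0.0
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)
    recall = lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


reference = "le chat est sur le tapis"
print(rouge_l_f1(reference, "le chat est sur le tapis"))  # 1.0
print(round(rouge_l_f1(reference, "le chien est sur le tapis"), 3))  # 0.833
```

Scoring a translation produced from a clean prompt and one produced from a manipulated prompt against the same reference, then comparing the two scores, is one plausible way to quantify prompt-manipulation sensitivity. The release does not describe DeepKeep's exact procedure.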
