
Introduction

RocksDB is an LSM storage engine whose adoption has grown tremendously in the last few years. RocksDB-Cloud is open-source and fully compatible with RocksDB, with the additional feature that all data is made durable by automatically storing it in cloud storage (e.g. Amazon S3).

We, at Rockset, use RocksDB-Cloud as one of the building blocks of Rockset’s distributed Converged Index. Rockset is designed with cloud-native principles, and one of the primary design principles of a cloud-native database is to have separation of compute from storage. We will discuss how we extended RocksDB-Cloud to have a clean separation of its storage needs and its compute needs.

A compactor, operated by U.S. Navy Seabees, performing soil compaction

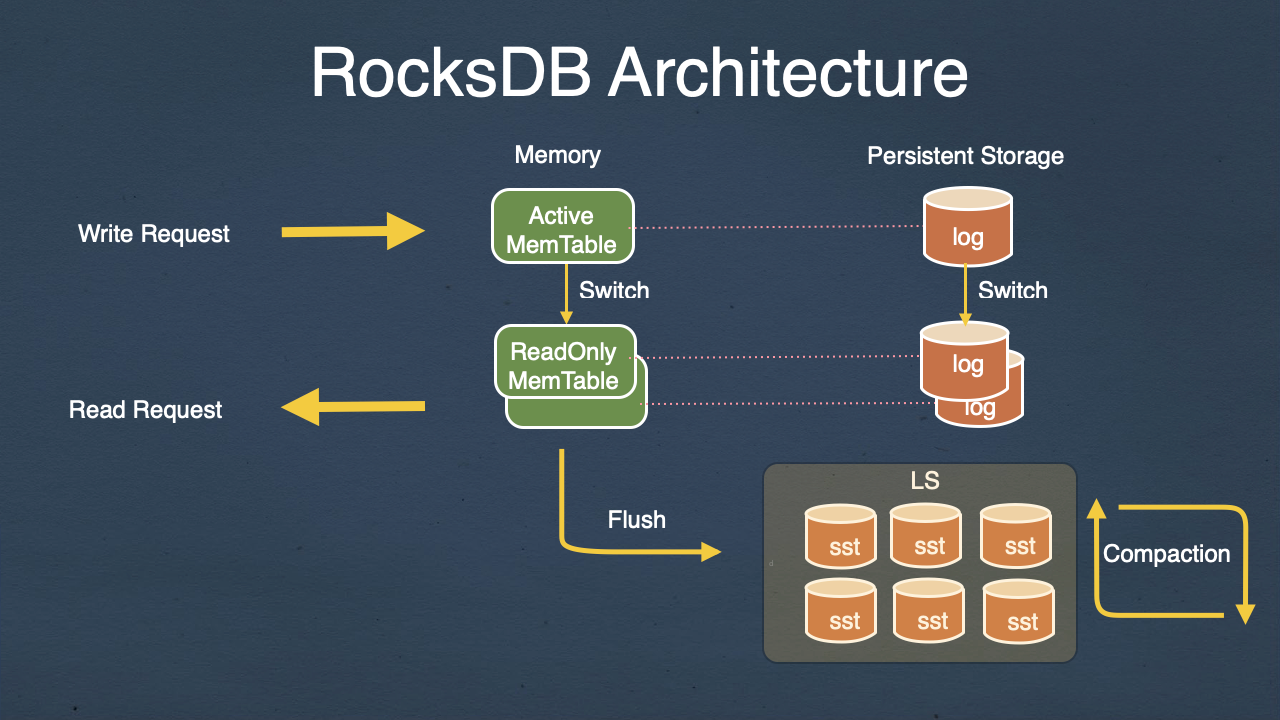

RocksDB’s LSM engine

RocksDB-Cloud stores data on locally attached SSDs or spinning disks. The SSD or the spinning disk provides the storage needed to store the data that it serves. New writes to RocksDB-Cloud are written to an in-memory memtable, and when the memtable is full, it is flushed to a new SST file in the storage.

As in any LSM storage engine, a set of background threads is used for compaction. Compaction is the process of combining a set of SST files and generating new SST files, with overwritten keys and deleted keys purged from the output files. Compaction needs a lot of compute resources. The higher the write rate into the database, the more compute resources are needed for compaction, because the system is stable only if compaction is able to keep up with new writes to your database.
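To make the compaction step concrete, here is a minimal sketch of merging a set of sorted files while purging overwritten and deleted keys. The `Entry`, `SstFile`, and `CompactAll` names are hypothetical stand-ins, not RocksDB types. A real compaction streams a k-way merge over on-disk files, whereas this sketch uses an in-memory map for brevity. It also assumes the output holds the oldest data for these keys, so deletion markers can be dropped safely.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical stand-in for one record in an SST file.
struct Entry {
  std::string value;
  bool deleted;  // true marks a deletion marker (tombstone)
};

// Hypothetical stand-in for an SST file: keys kept in sorted order.
using SstFile = std::map<std::string, Entry>;

// Merges the input files, which must be ordered from oldest to newest.
// Newer entries overwrite older ones, then deleted keys are purged.
SstFile CompactAll(const std::vector<SstFile>& oldest_to_newest) {
  assert(!oldest_to_newest.empty());  // a compaction needs at least one input
  SstFile merged;
  for (const SstFile& file : oldest_to_newest) {
    for (const auto& kv : file) {
      merged[kv.first] = kv.second;  // the newer entry shadows the older one
    }
  }
  // Assumption: no older data exists below the output, so tombstones
  // no longer need to be kept.
  for (auto it = merged.begin(); it != merged.end();) {
    if (it->second.deleted) {
      it = merged.erase(it);
    } else {
      ++it;
    }
  }
  return merged;
}
```

Given an older file with keys `a`, `b`, `c` and a newer file that overwrites `a` and deletes `b`, the output contains only the new `a` and the original `c`.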

The problem when compute and storage are not disaggregated

In a typical RocksDB-based system, compaction occurs on CPUs that are local to the server that also hosts the storage. In this case, compute and storage are not disaggregated. This means that if your write rate increases but the total size of your database remains the same, you would have to dynamically provision more servers, spread your data across all those servers, and then leverage the additional compute on those servers to keep up with the compaction load.

This has two problems:

- Spreading your data across more servers is not instantaneous because you have to copy a lot of data to do so. This means you cannot react quickly to a fast-changing workload.

- The storage capacity utilization on each of your servers becomes very low because you are spreading out your data to more servers. You lose out on the price-to-performance ratio because of all the unused storage on your servers.

Our solution

The primary reason why RocksDB-Cloud is suitable for separating out compaction compute and storage is that it is an LSM storage engine. Unlike a B-Tree database, RocksDB-Cloud never updates an SST file once it is created. This means that all the SST files in the entire system are read-only, except the minuscule portion of data in your active memtable. RocksDB-Cloud persists all SST files in a cloud storage object store like S3, and these cloud objects are safely accessible from all your servers because they are read-only.

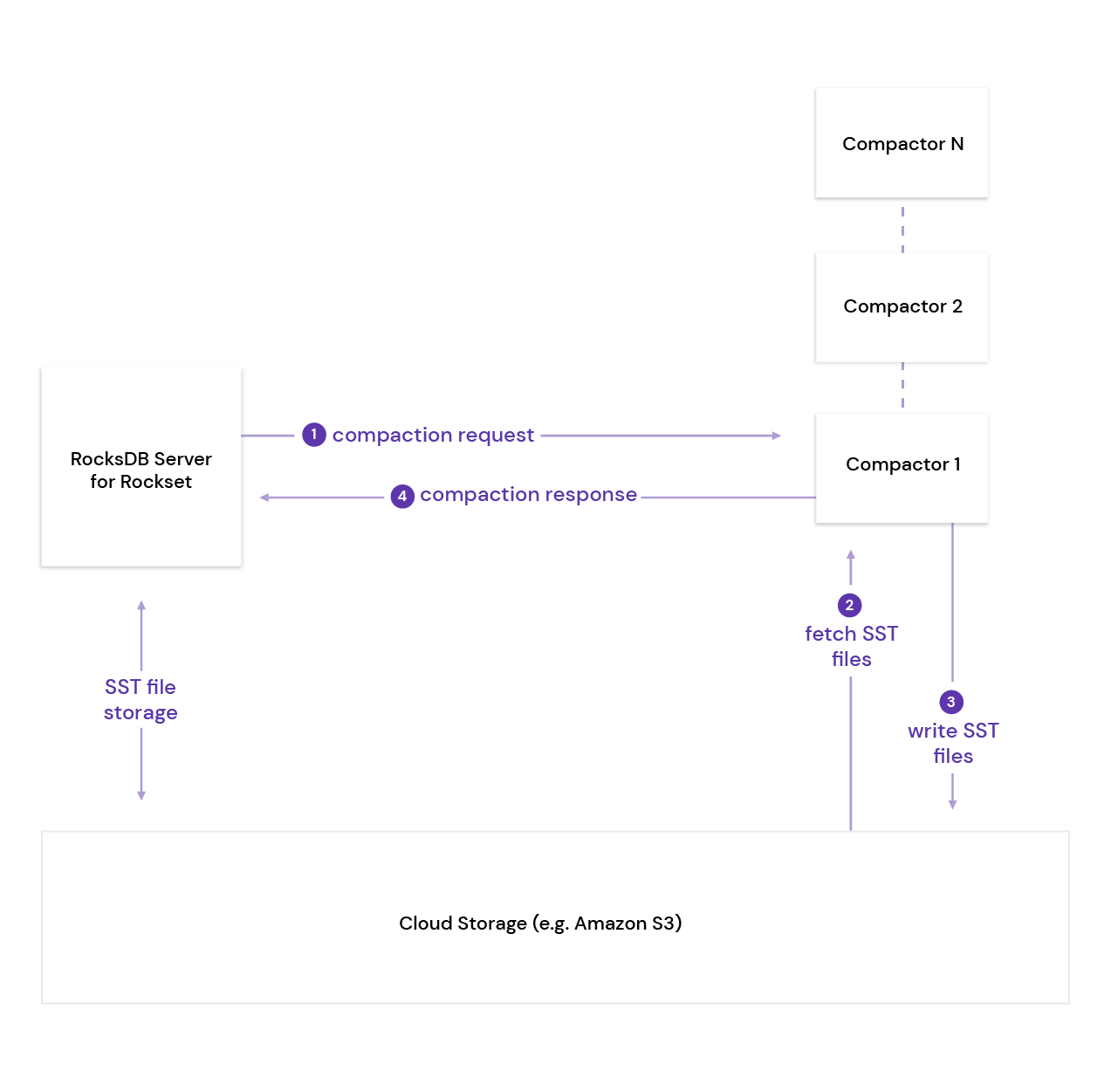

So, our idea is this: if a RocksDB-Cloud server A can encapsulate a compaction job with its set of cloud objects and send the request to a remote stateless server B, and server B can fetch the relevant objects from the cloud store, do the compaction, produce a set of output SST files that are written back to the cloud object store, and then communicate that information back to server A, then we have essentially separated the storage (which resides in server A) from the compaction compute (which resides in server B). Server A has the storage, while server B has no permanent storage but only the compute needed for compaction. Voila!
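The exchange between server A and server B can be sketched with an in-memory map standing in for the cloud object store. Everything here (`ObjectStore`, `CompactionJob`, `CompactionReply`, `RunOnCompactor`, `StorageServer`) is a hypothetical illustration of the idea, not the RocksDB-Cloud API. The sketch assumes the job's inputs are the oldest live objects and are listed oldest first.

```cpp
#include <algorithm>
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical stand-ins: an SST object is a sorted key -> value map, and the
// "cloud" is a map from object name to immutable SST object.
using SstContents = std::map<std::string, std::string>;
using ObjectStore = std::map<std::string, SstContents>;

struct CompactionJob {
  std::vector<std::string> input_objects;  // oldest first
  std::string output_object;               // name chosen for the new object
};

struct CompactionReply {
  std::vector<std::string> output_objects;
};

// "Server B": holds no state of its own. It reads the inputs from the object
// store, merges them, and writes a brand-new object.
CompactionReply RunOnCompactor(ObjectStore& store, const CompactionJob& job) {
  SstContents merged;
  for (const std::string& name : job.input_objects) {
    const SstContents& input = store.at(name);  // fetch from the object store
    for (const auto& kv : input) {
      merged[kv.first] = kv.second;  // newer inputs overwrite older ones
    }
  }
  assert(store.count(job.output_object) == 0);  // objects are never rewritten
  store[job.output_object] = merged;
  CompactionReply reply;
  reply.output_objects.push_back(job.output_object);
  return reply;
}

// "Server A": owns the list of live SST objects for its database.
struct StorageServer {
  std::vector<std::string> live_objects;  // oldest first

  // Swaps the compacted inputs for the outputs reported by server B.
  void ApplyReply(const CompactionJob& job, const CompactionReply& reply) {
    std::vector<std::string> next = reply.output_objects;
    for (const std::string& name : live_objects) {
      bool was_input = std::find(job.input_objects.begin(),
                                 job.input_objects.end(),
                                 name) != job.input_objects.end();
      if (!was_input) next.push_back(name);
    }
    live_objects = next;
  }
};
```

Because the input objects are never modified, server B can read them while server A keeps serving from the same objects. Server A only changes its view once the reply arrives.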

RocksDB pluggable compaction API

We extended the base RocksDB API with two new methods that make the compaction engine in RocksDB externally pluggable. In db.h, we introduce a new API to register a compaction service.

```cpp
Status RegisterPluggableCompactionService(std::unique_ptr<PluggableCompactionService>);
```

This API registers the plugin that RocksDB uses to execute the compaction job. Remote compaction happens in two steps: Run and InstallFiles. Hence, the plugin, PluggableCompactionService, has two APIs:

```cpp
Status Run(const PluggableCompactionParam& job, PluggableCompactionResult* result)

std::vector<Status> InstallFiles(
    const std::vector<std::string>& remote_paths,
    const std::vector<std::string>& local_paths,
    const EnvOptions& env_options, Env* local_env)
```

Run is where the compaction execution happens. In our remote compaction architecture, Run sends an RPC to a remote compaction tier and receives a compaction result which has, among other things, the list of newly compacted SST files.

InstallFiles is where RocksDB installs the newly compacted SST files from the cloud (remote_paths) to its local database (local_paths).

Rockset’s compaction tier

Now we will show how we used the pluggable compaction service described above in Rockset’s compaction service. As mentioned above, the first step, Run, sends an RPC to a remote compaction tier with compaction information such as input SST file names and compression information. We call the host that executes this compaction job a compactor.

The compactor, upon receiving the compaction request, opens a RocksDB-Cloud instance in ghost mode. This means that RocksDB-Cloud opens the local database with only the necessary metadata, without fetching all SST files from the cloud storage. Once it opens the RocksDB instance in ghost mode, it executes the compaction job, which includes fetching the required SST files, compacting them, and uploading the newly compacted SST files to temporary storage in the cloud.

Here are the options to open RocksDB-Cloud in the compactor:

```cpp
rocksdb::CloudOptions cloud_options;
cloud_options.ephemeral_resync_on_open = false;
cloud_options.constant_sst_file_size_in_sst_file_manager = 1024;
cloud_options.skip_cloud_files_in_getchildren = true;

rocksdb::Options rocksdb_options;
rocksdb_options.max_open_files = 0;
// The compactor only runs the compaction jobs it is asked to run.
rocksdb_options.disable_auto_compactions = true;
rocksdb_options.skip_stats_update_on_db_open = true;
rocksdb_options.paranoid_checks = false;
// 10 MB of readahead for compaction reads.
rocksdb_options.compaction_readahead_size = 10 * 1024 * 1024;
```

We faced several challenges during the development of the compaction tier. Here they are, along with our solutions:

Improve the speed of opening RocksDB-Cloud in ghost mode

During the opening of a RocksDB instance, in addition to fetching all the SST files from the cloud (which we have disabled with ghost mode), there are several other operations that could slow down the opening process, notably getting the list of SST files and getting the size of each SST file. Normally, if all the SST files reside in local storage, the latency of these get-file-size operations is small. However, when the compactor opens RocksDB-Cloud, each of these operations results in a remote request to the cloud storage, and the total combined latency becomes prohibitively expensive. In our experience, for a RocksDB-Cloud instance with thousands of SST files, opening it could take up to a minute due to thousands of get-file-size requests to S3. To get around this limitation, we introduced various options in the RocksDB-Cloud options to disable these RPCs during opening. As a result, the average opening time goes from 7 seconds to 700 milliseconds.
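As a rough illustration of why per-file remote calls dominate the open time, the sketch below models opening as a fixed cost plus one sequential remote request per SST file. The function name and its parameters are assumptions made for this illustration. They are not RocksDB-Cloud options, and the numbers in the usage note are not measurements.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical back-of-the-envelope model; not part of RocksDB-Cloud.
// Assumes the get-file-size requests are issued one after another.
std::int64_t EstimateOpenMillis(std::int64_t sst_files,
                                std::int64_t per_request_ms,
                                std::int64_t fixed_open_ms,
                                bool skip_remote_size_lookups) {
  assert(sst_files >= 0 && per_request_ms >= 0 && fixed_open_ms >= 0);
  if (skip_remote_size_lookups) {
    return fixed_open_ms;  // no per-file round trips to the object store
  }
  return fixed_open_ms + sst_files * per_request_ms;
}
```

With assumed values of 3,000 files, 20 ms per request, and 500 ms of fixed work, the model gives 60,500 ms with the lookups and 500 ms without them. This shows how the cost scales with the number of files rather than with the amount of data.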

Disable L0 -> L0 compaction

Remote compaction is a tradeoff between the speed of a single compaction and the ability to run more compaction jobs in parallel. This is because each remote compaction job is naturally slower than the same compaction executed locally, due to the cost of data transfer in the cloud. Therefore, we want to minimize, as much as possible, the bottleneck in the compaction process where RocksDB-Cloud can’t parallelize.

In the LSM architecture, L0->L1 compactions are usually not parallelizable because L0 files have overlapping ranges. Hence, when an L0->L1 compaction is occurring, RocksDB-Cloud has the ability to also execute L0->L0 compaction, with the goal of reducing the number of L0 files and preventing write stalls due to RocksDB-Cloud hitting the L0 file limit. However, the tradeoff is that each L0 file grows bigger after every L0->L0 compaction.

In our experience, this option causes more trouble than the benefits it brings, because having larger L0 files results in a much longer L0->L1 compaction, worsening the bottleneck of RocksDB-Cloud. Hence, we disable L0->L0 compaction and live with the rare problem of write stalls instead. In our experiments, RocksDB-Cloud compaction catches up with the incoming writes much better.

You can use it now

RocksDB-Cloud is an open-source project, so our work can be leveraged by any other RocksDB developer who wants to derive benefits by separating out their compaction compute from their storage needs. We are running the remote compaction service in production now. It is available with the 6.7.3 release of RocksDB-Cloud. We discuss all things about RocksDB-Cloud in our community.


Authors:

Hieu Pham – Software Engineer, Rockset

Dhruba Borthakur – CTO, Rockset
