
It’s been only a couple of weeks since the largest Data + AI Summit in history, where we announced Databricks LakeFlow, a unified, intelligent solution for data engineering. While we’re extremely excited about this strategic direction for data engineering at Databricks, we’re also continuing to invest in the product experience for users today.

Improving the DLT development experience is a core focus because it directly affects the efficiency and satisfaction of developers building data pipelines with DLT. We’re excited to announce several improvements to developing DLT pipelines with notebooks. These new features provide a seamless, intuitive DLT development interface and help you build and debug your pipelines quickly and efficiently.

Delta Live Tables (DLT) is a framework that simplifies and accelerates building, testing, and maintaining reliable data pipelines. It offers declarative data engineering and automatic pipeline management: users focus on defining business logic, while DLT handles dependency tracking, error recovery, and monitoring. For organizations aiming to run their data operations efficiently and precisely, this means data scientists and analysts always have access to up-to-date, high-quality data.
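To make “declarative” and “dependency tracking” concrete, here is a toy model in plain Python. It is not DLT’s API or implementation: the `table` decorator, `depends_on` argument, `REGISTRY`, `run_pipeline`, and the table names are all hypothetical. DLT infers dependencies from the table references in your code; this sketch makes them explicit so the idea is visible. Each table only declares what it reads and how it transforms it, and the framework derives the graph and the execution order.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical registry: table name -> (declared upstream tables, transformation).
REGISTRY: Dict[str, Tuple[List[str], Callable[..., List[dict]]]] = {}


def table(name: str, depends_on: Optional[List[str]] = None):
    """Hypothetical decorator: records a table definition instead of running it."""
    def register(fn: Callable[..., List[dict]]) -> Callable[..., List[dict]]:
        REGISTRY[name] = (depends_on or [], fn)
        return fn
    return register


# Definitions are deliberately declared out of order; the framework sorts them.
@table("order_totals", depends_on=["clean_orders"])
def order_totals(clean_orders):
    return [{"total": sum(row["amount"] for row in clean_orders)}]


@table("clean_orders", depends_on=["raw_orders"])
def clean_orders(raw_orders):
    # Business logic only: drop rows with a non-positive amount.
    return [row for row in raw_orders if row["amount"] > 0]


@table("raw_orders")
def raw_orders():
    # Stand-in for ingestion; a real pipeline would read from storage.
    return [{"id": 1, "amount": 30}, {"id": 2, "amount": -5}, {"id": 3, "amount": 12}]


def run_pipeline() -> Dict[str, List[dict]]:
    """Build the DAG from the declarations and evaluate tables in dependency order."""
    graph = {name: set(deps) for name, (deps, _) in REGISTRY.items()}
    results: Dict[str, List[dict]] = {}
    for name in TopologicalSorter(graph).static_order():
        deps, fn = REGISTRY[name]
        # Pass each upstream result as a keyword argument named after its table.
        results[name] = fn(**{dep: results[dep] for dep in deps})
    return results
```

Calling `run_pipeline()["order_totals"]` returns `[{"total": 42}]`, even though the user never specified an execution order.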

With this release, we’re bringing exciting new features to the experience of developing DLT pipelines with notebooks:

- No more context switching: see the DLT graph, the event log, and notebook code in a single contextual UI.

- Quickly find syntax errors with the new “Validate” action.

- Develop code more easily with DLT-specific autocomplete, in-line errors, and diagnostics.

No more context switching: develop your DLT pipelines in a single contextual UI

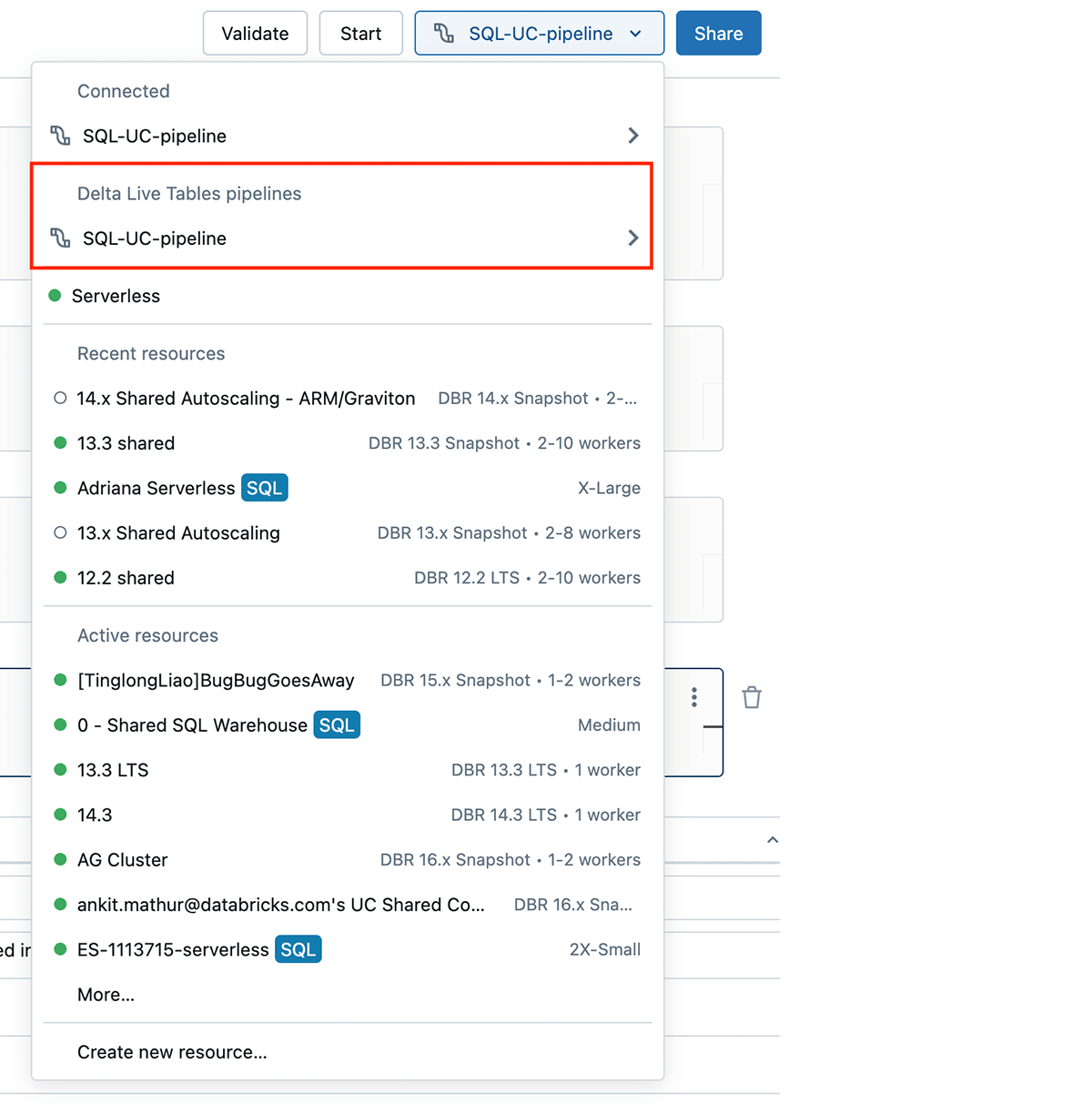

You can now “connect” to your DLT pipeline directly from the notebook, just as you would to a SQL warehouse or an interactive cluster.

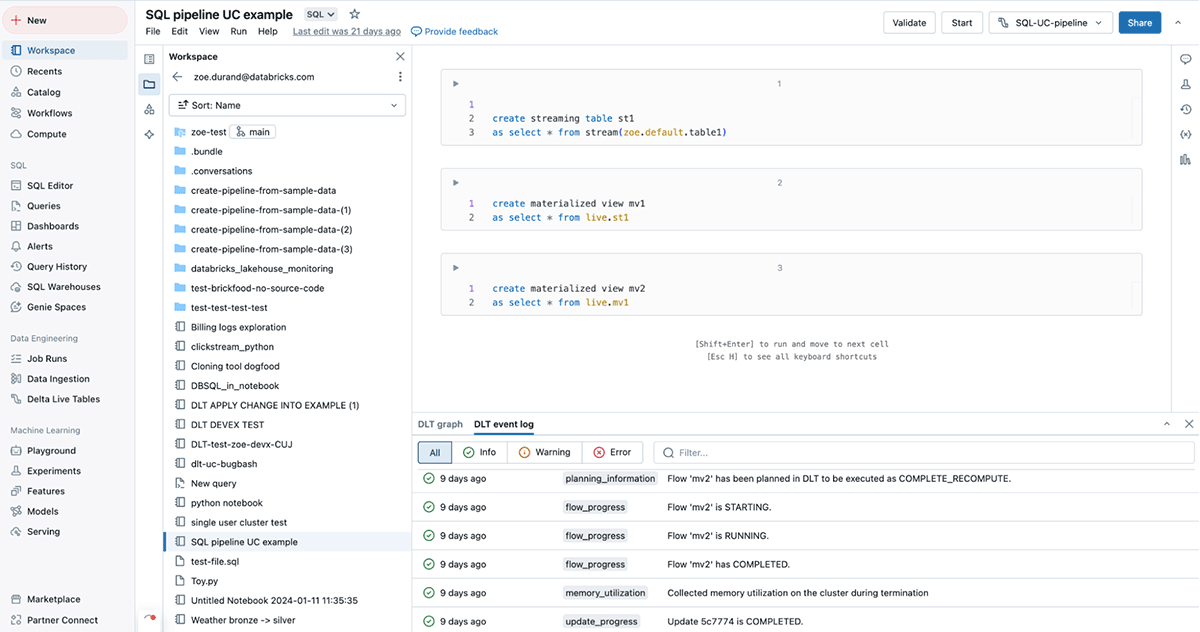

Once connected to your DLT pipeline, you have access to a new all-in-one UI. You can see the DLT graph (also known as the Directed Acyclic Graph, or “DAG”) and the DLT event log in the same UI as the code you’re editing.

With this new all-in-one UI, you can do everything you need without switching tabs. You can check the shape of the DLT graph and the schema of each table as you develop, to make sure you are getting the results you want. You can also check the event log for any errors that come up during development.

This greatly improves the usability and ergonomics of developing a DLT pipeline.

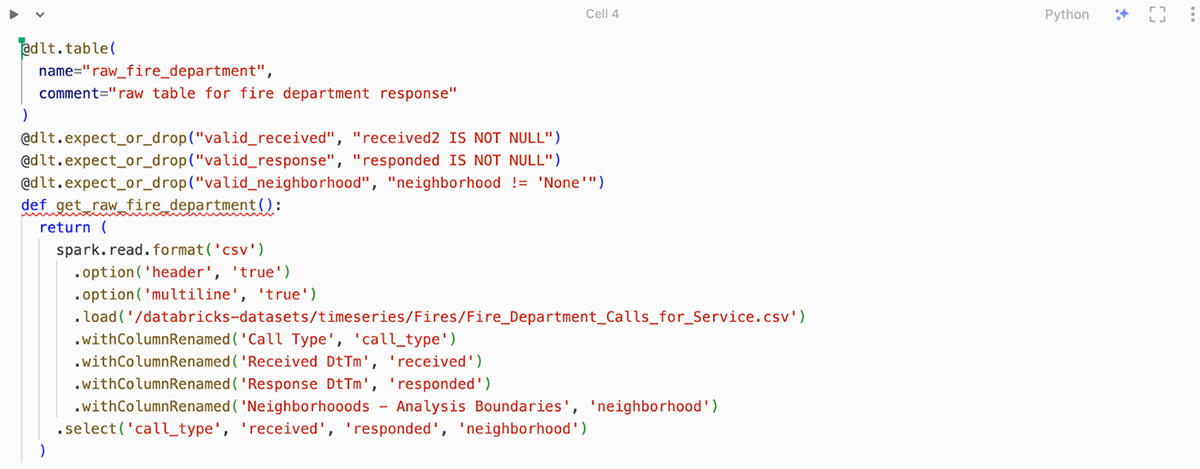

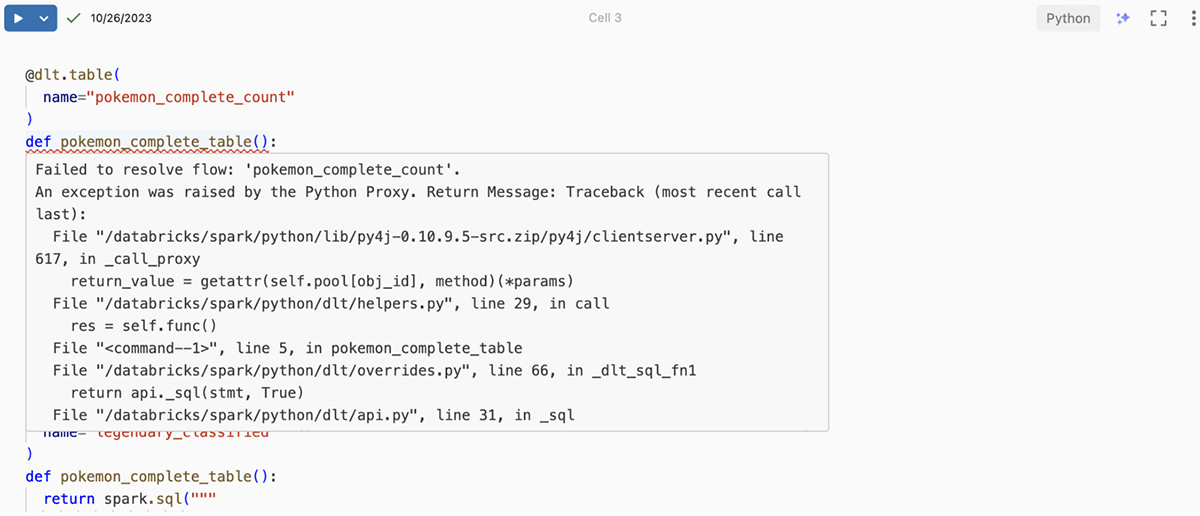

Catch errors faster and develop DLT code more easily

1. Catch syntax errors quickly with “Validate”

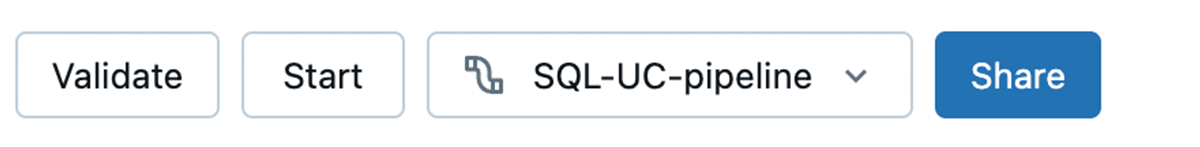

We’re introducing a “Validate” action for DLT pipelines, alongside “Start” and “Full refresh”.

With “Validate”, you can check for problems in a pipeline’s source code without processing any data. This feature helps you iteratively find and fix errors in your pipeline, such as incorrect table or column names, while you are developing or testing it.

“Validate” is available as a button in the notebook UI, and it also runs when you press the “Shift+Enter” keyboard shortcut.
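To illustrate why this kind of check needs no data, here is a toy validator in plain Python. It is not how DLT implements “Validate”; the `TableDef` class, `validate` function, and table names are hypothetical. Each table is described only by its output columns and the upstream columns it reads, so unknown table names and misspelled column names can be caught by comparing declarations, without running any transformation.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class TableDef:
    """Hypothetical, data-free description of one table in a pipeline."""
    name: str
    columns: List[str]  # output schema of this table
    reads: Dict[str, List[str]] = field(default_factory=dict)  # upstream table -> columns used


def validate(defs: List[TableDef]) -> List[str]:
    """Return human-readable errors; no transformation code or data is touched."""
    schemas = {d.name: set(d.columns) for d in defs}
    errors: List[str] = []
    for d in defs:
        for upstream, cols in d.reads.items():
            if upstream not in schemas:
                errors.append(f"{d.name}: unknown table '{upstream}'")
                continue
            for col in cols:
                if col not in schemas[upstream]:
                    errors.append(f"{d.name}: column '{col}' not found in '{upstream}'")
    return errors


pipeline = [
    TableDef("raw_orders", ["id", "amount", "ts"]),
    TableDef("clean_orders", ["id", "amount"], reads={"raw_orders": ["id", "amout"]}),
    TableDef("order_totals", ["total"], reads={"clean_order": ["amount"]}),
]
```

Here `validate(pipeline)` reports two problems, the misspelled column `amout` and the unknown table `clean_order`, before a single row is processed.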

2. Develop your code more easily with DLT-aware autocomplete, in-line errors, and diagnostics

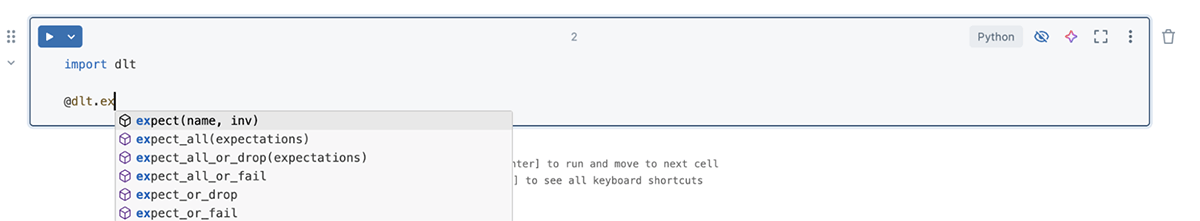

You can now use DLT-specific autocomplete, which makes writing code faster and more accurate.

Additionally, you can easily spot syntax errors thanks to red squiggly lines that highlight the exact error location in your code.

Finally, you can benefit from the inline diagnostic box, which displays relevant error details and suggestions right at the affected line. Hover over an error to see more information.

How to get started

Just create a DLT pipeline and a notebook, then connect to your pipeline from the compute drop-down. You can try these new notebook capabilities on Azure, AWS, and GCP.
