
When working with a real-time analytics system, you need your database to meet very specific requirements. These include making the data available for query as soon as it is ingested, creating proper indexes on the data so that query latency is very low, and much more.

Before it can be ingested, there’s usually a data pipeline for transforming incoming data. You want this pipeline to take as little time as possible, because stale data doesn’t provide any value in a real-time analytics system.

While there’s typically some amount of data engineering required here, there are ways to minimize it. For example, instead of denormalizing the data, you could use a query engine that supports joins. This avoids unnecessary processing during data ingestion and reduces the storage bloat caused by redundant data.

The Demands of Real-Time Analytics

Real-time analytics applications have specific demands (e.g., latency and indexing), and your solution will only be able to provide valuable real-time analytics if you are able to meet them. But meeting these demands depends entirely on how the solution is built. Let’s look at some examples.

Data Latency

Data latency is the time it takes from when data is produced to when it is available to be queried. Logically, then, latency has to be as low as possible for real-time analytics.

In most analytics systems today, data is being ingested in massive quantities as the number of data sources continually increases. It is important that real-time analytics solutions be able to handle high write rates in order to make the data queryable as quickly as possible. Elasticsearch and Rockset each approach this requirement differently.

Because constantly performing write operations on the storage layer negatively impacts performance, Elasticsearch uses the memory of the system as a caching layer. All incoming data is cached in memory for a certain amount of time, after which Elasticsearch ingests the cached data in bulk to storage.

This improves write performance, but it also increases latency, because the data is not available to query until it is written to disk. While the cache duration is configurable and you can reduce it to improve latency, doing so means you are writing to disk more frequently, which in turn reduces write performance.
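The trade-off can be made concrete with a little arithmetic. The sketch below is a toy model, not Elasticsearch code: it assumes the in-memory buffer is flushed at every multiple of a fixed interval, and the function names and millisecond timestamps are invented for illustration.

```python
def visible_at(write_ms: int, flush_interval_ms: int) -> int:
    """Return the time of the first flush at or after the write."""
    # Integer ceiling division, then scale back up to a flush boundary.
    return -(-write_ms // flush_interval_ms) * flush_interval_ms


def summarize(write_times_ms, flush_interval_ms):
    """Return (worst-case data latency in ms, number of flushes to disk)."""
    flush_times = [visible_at(t, flush_interval_ms) for t in write_times_ms]
    latencies = [f - t for f, t in zip(flush_times, write_times_ms)]
    return max(latencies), len(set(flush_times))


writes = [100, 400, 900, 1300, 2500]  # hypothetical write timestamps
print(summarize(writes, 1000))  # long interval: fewer flushes, staler data
print(summarize(writes, 250))   # short interval: fresher data, more flushes
```

With these sample writes, a 1000 ms interval gives a worst-case latency of 900 ms with three flushes, while a 250 ms interval cuts the worst case to 200 ms but needs five flushes.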

Rockset approaches this problem differently.

Rockset uses a log-structured merge-tree (LSM), a feature offered by the open-source database RocksDB. Whenever Rockset receives data, it too caches the data, in its memtable. The difference between this approach and Elasticsearch’s is that Rockset makes this memtable available for queries.

Thus queries can access data in memory itself and don’t have to wait until it is written to disk. This almost completely eliminates write latency and allows even existing queries to see new data in memtables. This is how Rockset is able to provide less than a second of data latency even when write operations reach a billion writes a day.
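To illustrate the idea of a queryable memtable, here is a minimal sketch of an LSM-style store. It is not RocksDB or Rockset code: the `TinyLSM` class, its size-based flush rule, and the use of plain dictionaries in place of on-disk files are all assumptions made for the example.

```python
class TinyLSM:
    """Toy LSM store: writes land in a memtable that reads can see at once."""

    def __init__(self, memtable_limit=3):
        self.memtable = {}    # newest writes, held in memory
        self.segments = []    # stands in for sorted files on disk, newest last
        self.memtable_limit = memtable_limit

    def put(self, key, value):
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self.flush()

    def flush(self):
        """Move the memtable contents into a new sorted segment."""
        if self.memtable:
            self.segments.append(dict(sorted(self.memtable.items())))
            self.memtable = {}

    def get(self, key):
        # Check the memtable first, so unflushed writes are already visible.
        if key in self.memtable:
            return self.memtable[key]
        # Then check segments from newest to oldest.
        for segment in reversed(self.segments):
            if key in segment:
                return segment[key]
        return None


db = TinyLSM(memtable_limit=3)
db.put("sensor-1", 20.5)
print(db.get("sensor-1"), len(db.segments))  # readable before any flush
```

The read path consults the memtable before any flushed segment, which is why a write is visible to queries without waiting for a flush.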

Indexing Efficiency

Indexing data is another crucial requirement for real-time analytics applications. Having an index can reduce query latency by minutes over not having one. On the other hand, creating indexes during data ingestion can be done inefficiently.

For example, Elasticsearch’s primary node processes an incoming write operation, then forwards the operation to all the replica nodes. The replica nodes in turn perform the same operation locally. This means that Elasticsearch reindexes the same data on all replica nodes, over and over, consuming CPU resources each time.

Rockset takes a different approach here, too. Because Rockset is a primary-less system, write operations are handled by a distributed log. Using RocksDB’s remote compaction feature, only one replica performs indexing and compaction operations remotely in cloud storage. Once the indexes are created, all other replicas just copy the new data and replace the data they have locally. This reduces the CPU usage required to process new data by avoiding having to redo the same indexing operations locally at every replica.
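The difference in indexing work can be sketched with a toy inverted index. Nothing here reflects either system’s real internals: `build_index`, the two replication functions, and the build counter are hypothetical names used only to count how often the same indexing work is repeated.

```python
import copy


def build_index(docs, counter):
    """Build a small inverted index (term -> doc ids) and count the build."""
    counter["builds"] += 1
    index = {}
    for doc_id, text in docs.items():
        for term in text.lower().split():
            index.setdefault(term, set()).add(doc_id)
    return index


def replicate_by_reindexing(docs, replicas):
    """Every replica indexes the same documents itself."""
    counter = {"builds": 0}
    indexes = [build_index(docs, counter) for _ in range(replicas)]
    return indexes, counter["builds"]


def replicate_by_copying(docs, replicas):
    """One build happens once; every replica copies the finished index."""
    counter = {"builds": 0}
    built = build_index(docs, counter)
    indexes = [copy.deepcopy(built) for _ in range(replicas)]
    return indexes, counter["builds"]


docs = {"d1": "error disk full", "d2": "disk replaced"}
_, reindex_builds = replicate_by_reindexing(docs, replicas=3)
_, copy_builds = replicate_by_copying(docs, replicas=3)
print(reindex_builds, copy_builds)
```

Both strategies end with identical indexes on every replica; only the number of times the CPU-heavy build runs differs.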

Frequently Updated Data

Elasticsearch is primarily designed for full-text search and log analytics use cases. For these cases, once a document is written to Elasticsearch, there is a lower probability that it will be updated again.

The way Elasticsearch handles updates to data is not ideal for real-time analytics, which often involves frequently updated data. Suppose you have a JSON object stored in Elasticsearch and you want to update a key-value pair in that JSON object. When you run the update query, Elasticsearch first queries for the document, loads that document into memory, changes the key-value pair in memory, deletes the document from disk, and finally creates a new document with the updated data.

Even though only one field of a document needs to be updated, the entire document is deleted and indexed again, making for an inefficient update process. You could scale up your hardware to increase the speed of reindexing, but that adds to the hardware cost.

In contrast, real-time analytics often involves data coming from an operational database, like MongoDB or DynamoDB, which is updated frequently. Rockset was designed to handle these situations efficiently.

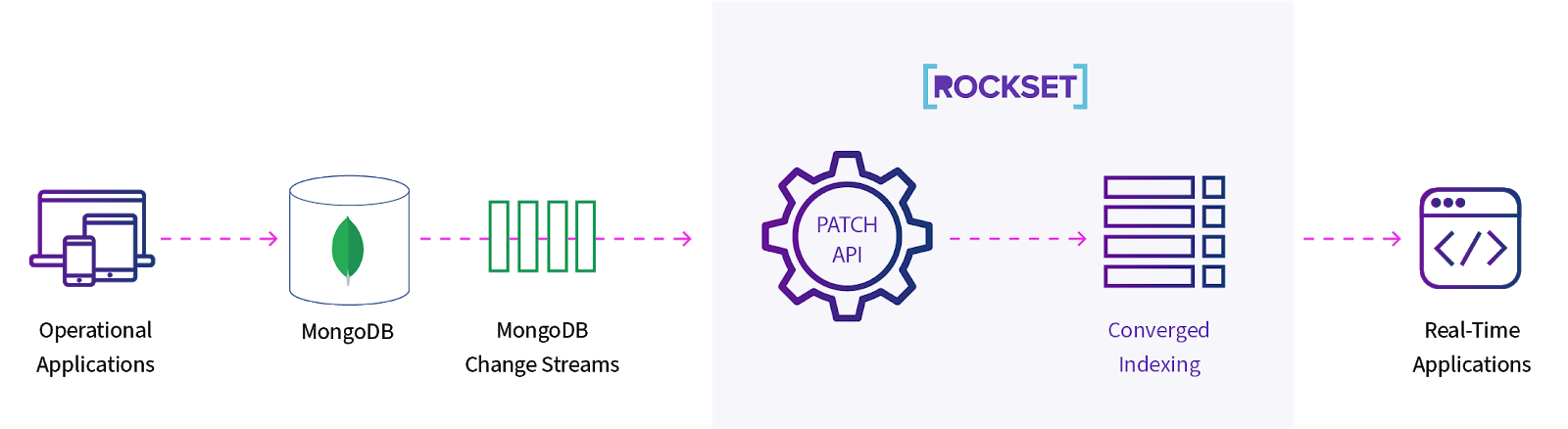

Using a Converged Index, Rockset breaks the data down into individual key-value pairs. Each such pair is stored in three different ways, and all are individually addressable. Thus when the data needs to be updated, only that field is updated, and only that field is reindexed. Rockset offers a Patch API that supports this incremental indexing approach.

Figure 1: Use of Rockset’s Patch API to reindex only updated portions of documents

Because only parts of the documents are reindexed, Rockset is very CPU efficient and thus cost efficient. This single-field mutability is especially important for real-time analytics applications where individual fields are frequently updated.
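The contrast between whole-document and field-level updates can be sketched as follows. This is a toy model rather than Rockset’s Converged Index or its Patch API: the `FieldStore` class and its methods are hypothetical, and the three layouts used (row, column, and search entries) are an assumption about what storing each pair in three ways could look like.

```python
class FieldStore:
    """Toy index that keeps every field as its own addressable entries."""

    def __init__(self):
        self.row = {}       # (doc_id, field) -> value
        self.column = {}    # (field, doc_id) -> value
        self.search = {}    # (field, value) -> set of doc ids
        self.fields_indexed = 0  # counts how many fields were (re)indexed

    def _index_field(self, doc_id, field, value):
        if (doc_id, field) in self.row:
            # Drop the stale search entry before writing the new value.
            old = self.row[(doc_id, field)]
            self.search[(field, old)].discard(doc_id)
        self.row[(doc_id, field)] = value
        self.column[(field, doc_id)] = value
        self.search.setdefault((field, value), set()).add(doc_id)
        self.fields_indexed += 1

    def replace_document(self, doc_id, doc):
        """Whole-document update: every field is indexed again.

        Fields dropped from the document are not handled in this toy.
        """
        for field, value in doc.items():
            self._index_field(doc_id, field, value)

    def patch(self, doc_id, field, value):
        """Field-level update: only the changed field is indexed again."""
        self._index_field(doc_id, field, value)


order = {"customer": "acme", "status": "pending", "total": 40, "items": 3}

whole = FieldStore()
whole.replace_document("order-1", order)
whole.replace_document("order-1", {**order, "status": "shipped"})

patched = FieldStore()
patched.replace_document("order-1", order)
patched.patch("order-1", "status", "shipped")

print(whole.fields_indexed, patched.fields_indexed)
```

For this four-field document, rewriting the whole document costs eight field-index operations across the insert and the update, while the patch path costs five: four for the insert and one for the changed field.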

Joining Tables

For any analytics application, joining data from two or more different tables is necessary. Yet Elasticsearch has no native join support. As a result, you might have to denormalize your data so you can store it in a way that doesn’t require joins for your analytics. Because the data has to be denormalized before it is written, it takes additional time to prepare that data. All of this adds up to a longer write latency.

Conversely, because Rockset provides standard SQL query language support and parallelizes join queries across multiple nodes for efficient execution, it is very easy to join tables for complex analytical queries without having to denormalize the data upon ingest.
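As a small illustration of joining at query time instead of denormalizing at ingest, the sketch below uses Python’s built-in `sqlite3` module purely as a stand-in for any SQL engine with join support. It is not Rockset, and the table names, columns, and rows are made up for the example.

```python
import sqlite3


def revenue_by_region():
    """Keep customers and orders as separate tables and join when querying."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
        CREATE TABLE orders (
            id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL
        );
        INSERT INTO customers VALUES (1, 'EU'), (2, 'US');
        INSERT INTO orders VALUES (10, 1, 20.0), (11, 1, 5.0), (12, 2, 7.5);
        """
    )
    rows = conn.execute(
        """
        SELECT c.region, SUM(o.amount) AS revenue
        FROM orders AS o
        JOIN customers AS c ON c.id = o.customer_id
        GROUP BY c.region
        ORDER BY c.region
        """
    ).fetchall()
    conn.close()
    return rows


print(revenue_by_region())
```

Each customer’s region is stored once in `customers` instead of being copied into every order row, so no denormalization step is needed before the data is written.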

Interoperability with Sources of Real-Time Data

When you are working on a real-time analytics system, it is a given that you’ll be working with external data sources. Ease of integration is important for a reliable, stable production system.

Elasticsearch offers tools like Beats and Logstash, or you could find a number of tools from other providers or the community, which let you connect data sources (such as Amazon S3, Apache Kafka, and MongoDB) to your system. For each of these integrations, you have to configure the tool, deploy it, and also maintain it. You have to make sure that the configuration is tested properly and is being actively monitored, because these integrations are not managed by Elasticsearch.

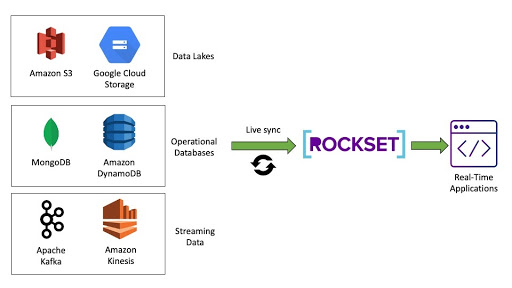

Rockset, on the other hand, provides a much easier click-and-connect solution using built-in connectors. For each commonly used data source (for example S3, Kafka, MongoDB, and DynamoDB), Rockset provides a different connector.

Figure 2: Built-in connectors to common data sources make it easy to ingest data quickly and reliably

You simply point to your data source and your Rockset destination, and obtain a Rockset-managed connection to your source. The connector will continually monitor the data source for the arrival of new data, and as soon as new data is detected it will be automatically synced to Rockset.
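Conceptually, a connector of this kind behaves like a loop that remembers how far it has read and copies over anything newer. The sketch below is only an illustration of that idea under simple assumptions (an append-only source held in a list and an integer checkpoint); `sync_once` is a hypothetical helper, not part of any Rockset connector.

```python
def sync_once(source_log, destination, checkpoint):
    """Copy records past the checkpoint and return the new checkpoint."""
    new_records = source_log[checkpoint:]
    destination.extend(new_records)
    return checkpoint + len(new_records)


source = ["event-1", "event-2"]  # stands in for an external data source
collection = []                  # stands in for the destination collection

checkpoint = sync_once(source, collection, 0)
source.append("event-3")         # new data arrives at the source
checkpoint = sync_once(source, collection, checkpoint)
print(collection, checkpoint)
```

Because the checkpoint only advances past records that were copied, running the sync again with no new data leaves the destination unchanged.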

Abstract

In previous blogs in this series, we examined the operational factors and query flexibility behind real-time analytics solutions, specifically Elasticsearch and Rockset. While data ingestion may not always be top of mind, it is nevertheless important for development teams to consider the performance, efficiency and ease with which data can be ingested into the system, particularly in a real-time analytics scenario.

When selecting the right real-time analytics solution for your needs, you may need to ask questions to determine how quickly data can be available for querying, taking into account any latency introduced by data pipelines, how costly it would be to index frequently updated data, and how much development and operations effort it would take to connect to your data sources. Rockset was built precisely with the ingestion requirements for real-time analytics in mind.

You can read the Elasticsearch vs Rockset white paper to learn more about the architectural differences between the systems and the migration guide to explore moving workloads to Rockset.

Other blogs in this Elasticsearch or Rockset for Real-Time Analytics series:
