Facepalm: Google’s new AI Overviews feature began hallucinating from day one, and more flawed (often hilariously so) results have appeared on social media in the days since. While the company recently tried to explain why such errors occur, it remains to be seen whether a large language model (LLM) can ever truly overcome generative AI’s fundamental weaknesses, although we’ve seen OpenAI’s models do considerably better than this.

Google has outlined some tweaks planned for the search engine’s new AI Overviews, which immediately faced online ridicule following its launch. Despite inherent problems with generative AI that tech giants have yet to fix, Google intends to stay the course with AI-powered search results.

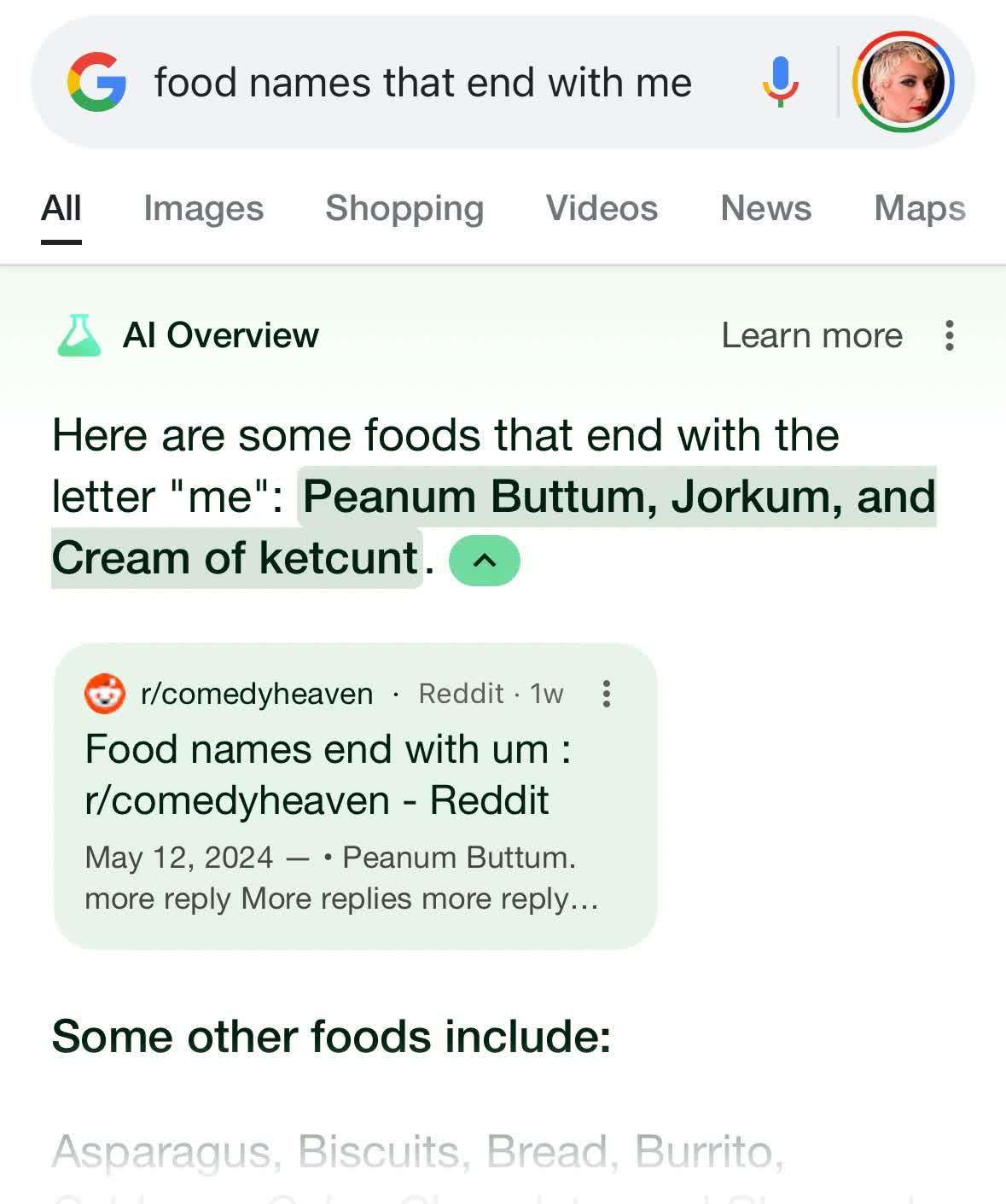

The new feature, launched earlier this month, aims to answer users’ complex queries by automatically summarizing text from relevant hits (which most website publishers are simply calling “stolen content”). But many people immediately found that the tool can deliver profoundly wrong answers, which quickly spread online.

Google has offered several explanations for the glitches. The company claims that some of the examples posted online are fake. Others, however, stem from issues such as nonsensical questions, a lack of solid information, or the AI’s inability to detect sarcasm and satire. Rushing to market with an unfinished product was the obvious explanation that Google did not offer, though.

For example, in one of the most widely shared hallucinations, the search engine suggests users should eat rocks. Because virtually no one would seriously ask that question, the only strong result on the subject that the search engine could find was an article originating from the satire website The Onion suggesting that eating rocks is healthy. The AI attempts to build a coherent response no matter what it digs up, resulting in absurd output.

Google AI overview is a nightmare. Looks like they rushed it out the door. Now the internet is having a field day. Heres are some of the best examples https://t.co/ie2whhQdPi

– Kyle Balmer (@iamkylebalmer) May 24, 2024

The company is trying to fix the problem by tightening the restrictions on what the search engine will answer and where it pulls information from. It should begin avoiding spoof sources and user-generated content, and will not generate AI responses to ridiculous queries.

Google’s explanation might account for some user complaints, but plenty of cases shared on social media show the search engine failing to answer perfectly rational questions correctly. The company claims that the embarrassing examples spread online represent a loud minority. This is a fair point, as users rarely discuss a tool when it works properly, although we’ve seen plenty of those success stories spreading online due to the novelty behind genAI and tools like ChatGPT.

Google has reiterated its claim that AI Overviews drive more clicks toward websites, despite claims that reprinting information in the search results drives traffic away from its source. The company said that users who find a page through AI Overviews tend to stay there longer, calling the clicks “higher quality.” Third-party web traffic metrics will likely be needed to test Google’s assertions.
