
Many of our customers implement operational reporting and analytics on DynamoDB, using Rockset as a SQL intelligence layer to serve live dashboards and applications. As an engineering team, we're always looking for opportunities to improve their SQL-on-DynamoDB experience.

For the past few weeks, we have been hard at work tuning the performance of our DynamoDB ingestion process. The first step was diving into DynamoDB's documentation and running some experiments to make sure we were using DynamoDB's read APIs in a way that maximizes both the stability and the performance of our system.

Background on DynamoDB APIs

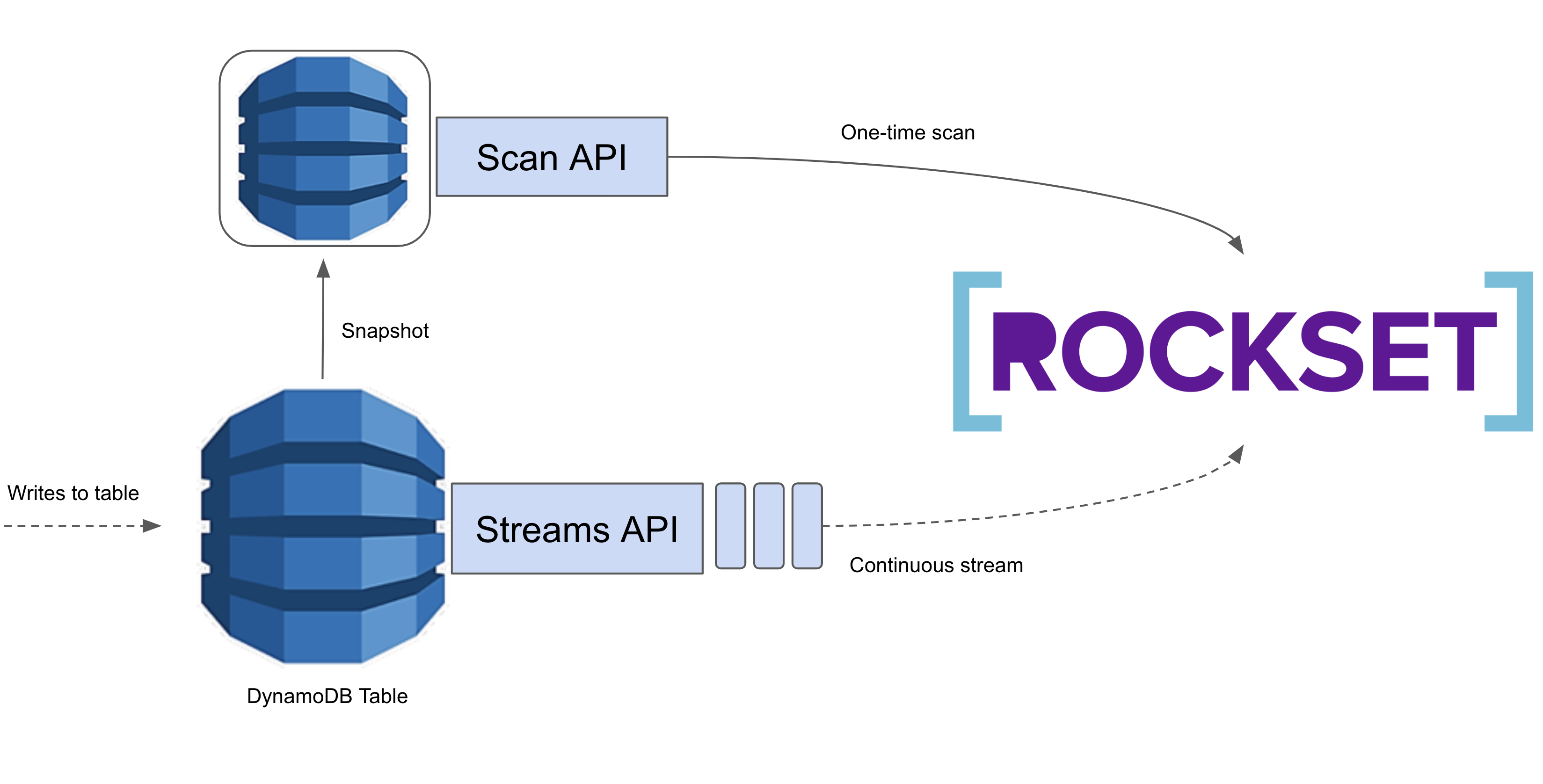

AWS offers a Scan API and a Streams API for reading data from DynamoDB. The Scan API allows us to linearly scan an entire DynamoDB table. This is expensive, but sometimes unavoidable. We use the Scan API the first time we load data from a DynamoDB table into a Rockset collection, because we have no other way of gathering all the data than scanning through it. After this initial load, we only need to watch for updates, so continuing to use the Scan API would be quite wasteful. Instead, we use the Streams API, which gives us a time-ordered queue of updates applied to the DynamoDB table. We read these updates and apply them to Rockset, giving users realtime access to their DynamoDB data in Rockset!
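To make the two read paths concrete, here is a minimal in-memory sketch, not Rockset's code and not the AWS SDK: a paginated full scan builds the initial copy, and time-ordered change records keep it current afterwards. The names `TwoPhaseIngest`, `scanPage`, and `Change` are hypothetical. The `INSERT`/`MODIFY`/`REMOVE` event names follow DynamoDB Streams, while the real Scan API signals further pages through `LastEvaluatedKey` rather than through a full page.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory model of the two read paths; not the AWS SDK.
final class TwoPhaseIngest {
    // One change record; event names follow DynamoDB Streams (INSERT, MODIFY, REMOVE).
    record Change(String eventName, String key, String value) {}

    // Returns up to `limit` items after `exclusiveStartKey` (null means "from the start").
    static List<Map.Entry<String, String>> scanPage(
            TreeMap<String, String> table, String exclusiveStartKey, int limit) {
        Map<String, String> rest =
                exclusiveStartKey == null ? table : table.tailMap(exclusiveStartKey, false);
        List<Map.Entry<String, String>> page = new ArrayList<>();
        for (Map.Entry<String, String> e : rest.entrySet()) {
            if (page.size() == limit) break;
            page.add(Map.entry(e.getKey(), e.getValue()));
        }
        return page;
    }

    static Map<String, String> ingest(
            TreeMap<String, String> table, List<Change> stream, int pageSize) {
        if (pageSize <= 0) throw new IllegalArgumentException("pageSize must be positive");
        Map<String, String> replica = new HashMap<>();
        // Phase 1: full scan, resuming each page after the last key seen
        // (the real Scan API returns LastEvaluatedKey for this purpose).
        String lastKey = null;
        List<Map.Entry<String, String>> page;
        do {
            page = scanPage(table, lastKey, pageSize);
            for (Map.Entry<String, String> e : page) {
                replica.put(e.getKey(), e.getValue());
                lastKey = e.getKey();
            }
        } while (page.size() == pageSize);
        // Phase 2: apply later changes in stream order instead of rescanning.
        for (Change c : stream) {
            if (c.eventName().equals("REMOVE")) {
                replica.remove(c.key());
            } else { // INSERT or MODIFY
                replica.put(c.key(), c.value());
            }
        }
        return replica;
    }
}
```

The scan touches every item once; after that, the cost of staying current scales with the number of changes rather than with the size of the table.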

Our challenge has been to make ingesting data from DynamoDB into Rockset as seamless and cost-efficient as possible, given the constraints imposed by data sources like DynamoDB. Below, I'll discuss a few of the issues we ran into while tuning and stabilizing both phases of our DynamoDB ingestion process, all while keeping costs low for our users.

Scans

How we measure scan performance

During the scanning phase, we aim to consistently maximize our read throughput from DynamoDB without consuming more than a user-specified number of RCUs per table. We want ingesting data into Rockset to be efficient without interfering with existing workloads running on users' live DynamoDB tables.
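Staying under an RCU cap translates directly into a minimum scan time. Here is a back-of-the-envelope sketch. It assumes DynamoDB's documented Scan accounting: each page is charged on the summed size of the items it reads, rounded up to 4 KB, and eventually consistent reads (the Scan default) cost half. `ScanCost` and its methods are hypothetical names.

```java
// Rough arithmetic for an RCU-capped scan, under the accounting rules stated above.
final class ScanCost {
    // RCUs charged for one Scan page that reads `pageBytes` of items.
    static double pageRcu(long pageBytes, boolean stronglyConsistent) {
        long units = (pageBytes + 4095) / 4096; // round up to 4 KB blocks
        return stronglyConsistent ? units : units / 2.0;
    }

    // Minimum seconds to scan `pages` pages of `pageBytes` each at `rcuPerSecond`.
    static double scanSeconds(long pages, long pageBytes, boolean stronglyConsistent,
                              double rcuPerSecond) {
        return pages * pageRcu(pageBytes, stronglyConsistent) / rcuPerSecond;
    }
}
```

For example, a full 1 MB page read with eventual consistency costs 128 RCU. Scanning 10 GB (10,240 such pages) under a 1,000 RCU/s cap therefore takes at least about 1,311 seconds, or roughly 22 minutes. Any stretch spent below the cap pushes that number up.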

Understanding how to set scan parameters

Early in our testing, we noticed that the scanning phase took quite a long time to complete, so we did some digging to figure out why. We ingested a DynamoDB table into Rockset and watched what happened during the scanning phase. We expected to consistently consume all of the provisioned throughput.

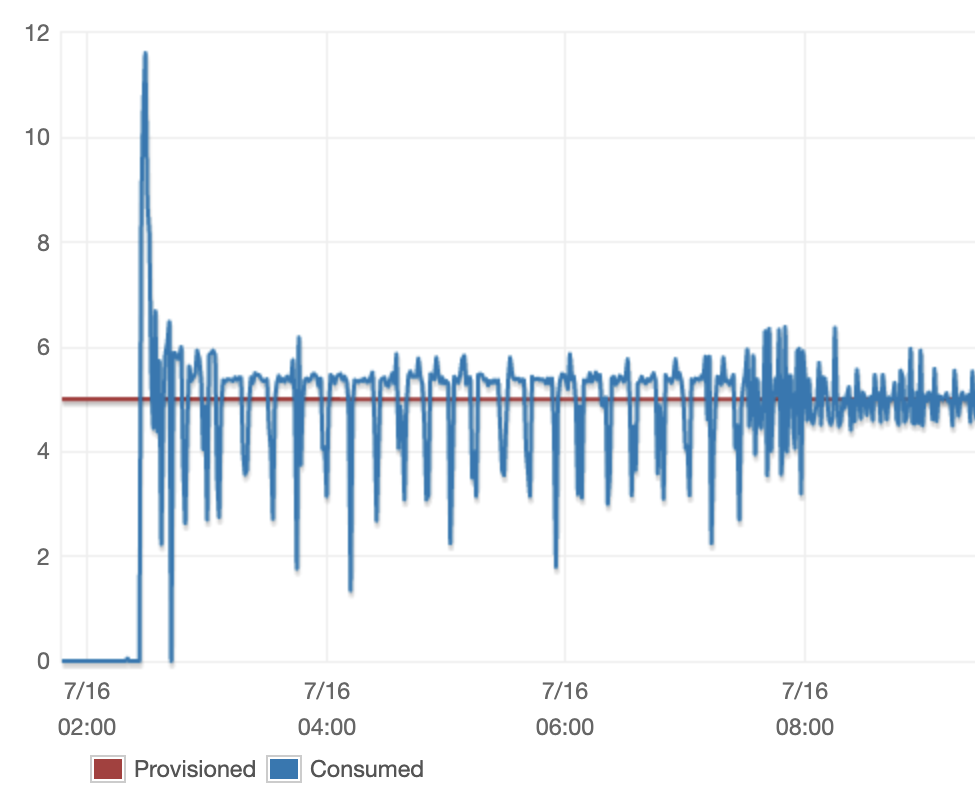

Initially, our RCU consumption regarded like the next:

We saw an unexplained level of fluctuation in RCU consumption over time, particularly in the first half of the scan. These fluctuations are bad because every major drop in throughput lengthens the ingestion process and increases our users' DynamoDB costs.

The problem was clear, but the underlying cause was not obvious. At the time, we were controlling several variables fairly naively. DynamoDB exposes two important variables, page size and segment count, and we had set both to fixed values. We also had our own rate limiter that throttled the number of DynamoDB Scan API calls we made, and its limit was fixed as well. We suspected that one of these variables was configured sub-optimally and was the most likely cause of the large fluctuations we were observing.

Some investigation revealed that the fluctuation was caused primarily by the rate limiter. The fixed limit we had set was too low, so our own rate limiter was throttling us too aggressively. We fixed this by configuring the limiter based on the amount of RCU allocated to the table. We can easily transition to a user-specified number of RCUs for each table, and we plan to do so. That will let us limit Rockset's RCU consumption even when users have RCU autoscaling enabled.

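```java
import com.amazonaws.services.dynamodbv2.AmazonDynamoDB;
import com.amazonaws.services.dynamodbv2.model.TableDescription;

// Splits the table's provisioned read capacity evenly across scan segments.
public double getScanRateLimit(AmazonDynamoDB client, String tableName, int numSegments) {
    TableDescription tableDesc = client.describeTable(tableName).getTable();
    // Note: this will return 0 if the table has RCU autoscaling enabled
    final long tableRcu = tableDesc.getProvisionedThroughput().getReadCapacityUnits();
    // Floating-point division, so a small table doesn't round down to a zero rate
    return (double) tableRcu / numSegments;
}
```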
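The method above derives its rate from the table's provisioned RCU. Because the plan is to move to a user-specified budget per table, a hypothetical variant could prefer that budget when one is set and fall back to provisioned capacity otherwise. `ScanBudget.perSegmentRate` is an illustrative name, not Rockset code. Rejecting a zero budget is an assumption, chosen because Guava's `RateLimiter.create` requires a positive rate.

```java
import java.util.OptionalLong;

// Hypothetical: choose a per-segment rate from a user budget or provisioned RCU.
final class ScanBudget {
    static double perSegmentRate(OptionalLong userRcu, long provisionedRcu, int numSegments) {
        if (numSegments <= 0) {
            throw new IllegalArgumentException("numSegments must be positive");
        }
        // Prefer the user's cap; otherwise use the table's provisioned read capacity.
        long budget = userRcu.isPresent() ? userRcu.getAsLong() : provisionedRcu;
        if (budget <= 0) {
            // Provisioned RCU can read as 0; a rate limiter needs a positive rate.
            throw new IllegalArgumentException("no positive RCU budget available");
        }
        return (double) budget / numSegments;
    }
}
```

With a user budget of 50 RCU and four segments, each segment gets 12.5 RCU/s even when the table reports zero provisioned capacity.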

For each segment, we perform a scan, acquiring permits from our rate limiter as we consume DynamoDB RCUs.

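```java
import com.amazonaws.services.dynamodbv2.AmazonDynamoDB;
import com.amazonaws.services.dynamodbv2.model.AttributeValue;
import com.amazonaws.services.dynamodbv2.model.ReturnConsumedCapacity;
import com.amazonaws.services.dynamodbv2.model.ScanRequest;
import com.amazonaws.services.dynamodbv2.model.ScanResult;
import com.google.common.util.concurrent.RateLimiter;
import java.util.Map;

// Scans one segment of a parallel scan, throttled by its own rate limiter.
public void doScan(AmazonDynamoDB client, String tableName, int segment, int numSegments) {
    RateLimiter rateLimiter =
        RateLimiter.create(getScanRateLimit(client, tableName, numSegments));
    Map<String, AttributeValue> lastKey = null;
    do {
        ScanRequest request = new ScanRequest()
            .withTableName(tableName)
            .withSegment(segment)
            .withTotalSegments(numSegments)
            .withExclusiveStartKey(lastKey)
            // Without this, getConsumedCapacity() returns null
            .withReturnConsumedCapacity(ReturnConsumedCapacity.TOTAL);
        ScanResult result = client.scan(request);
        // do processing ...
        // Charge the RCUs this page consumed; acquire() requires at least one whole permit
        double consumed = result.getConsumedCapacity().getCapacityUnits();
        rateLimiter.acquire(Math.max(1, (int) Math.ceil(consumed)));
        lastKey = result.getLastEvaluatedKey();
    } while (lastKey != null);
}
```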
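To see why charging each page's consumed capacity to the limiter caps throughput, here is a simulated-clock toy model (the hypothetical `PacingModel`, with no Guava or AWS calls). Each page may start only once the capacity of earlier pages has been paid off at the configured rate. It deliberately ignores details of Guava's `RateLimiter`, such as stored permits and exactly which call absorbs a given cost.

```java
// Toy model of "pay for what you read" pacing on a simulated clock.
final class PacingModel {
    // Returns the simulated start time (seconds) of each page's read.
    // Reads are treated as instantaneous, so only the limiter's waits count.
    static double[] pageStartTimes(double[] consumedPerPage, double permitsPerSecond) {
        if (permitsPerSecond <= 0) throw new IllegalArgumentException("rate must be positive");
        double[] starts = new double[consumedPerPage.length];
        double nextFree = 0.0; // earliest time the limiter admits the next read
        for (int i = 0; i < consumedPerPage.length; i++) {
            starts[i] = nextFree;
            // Charge this page's capacity; the next read waits until it is paid off.
            nextFree += consumedPerPage[i] / permitsPerSecond;
        }
        return starts;
    }
}
```

For pages costing 10, 30, and 20 RCU under a 10 RCU/s limit, reads start at 0, 1, and 4 seconds. However uneven individual pages are, consumption averages out to the configured rate.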

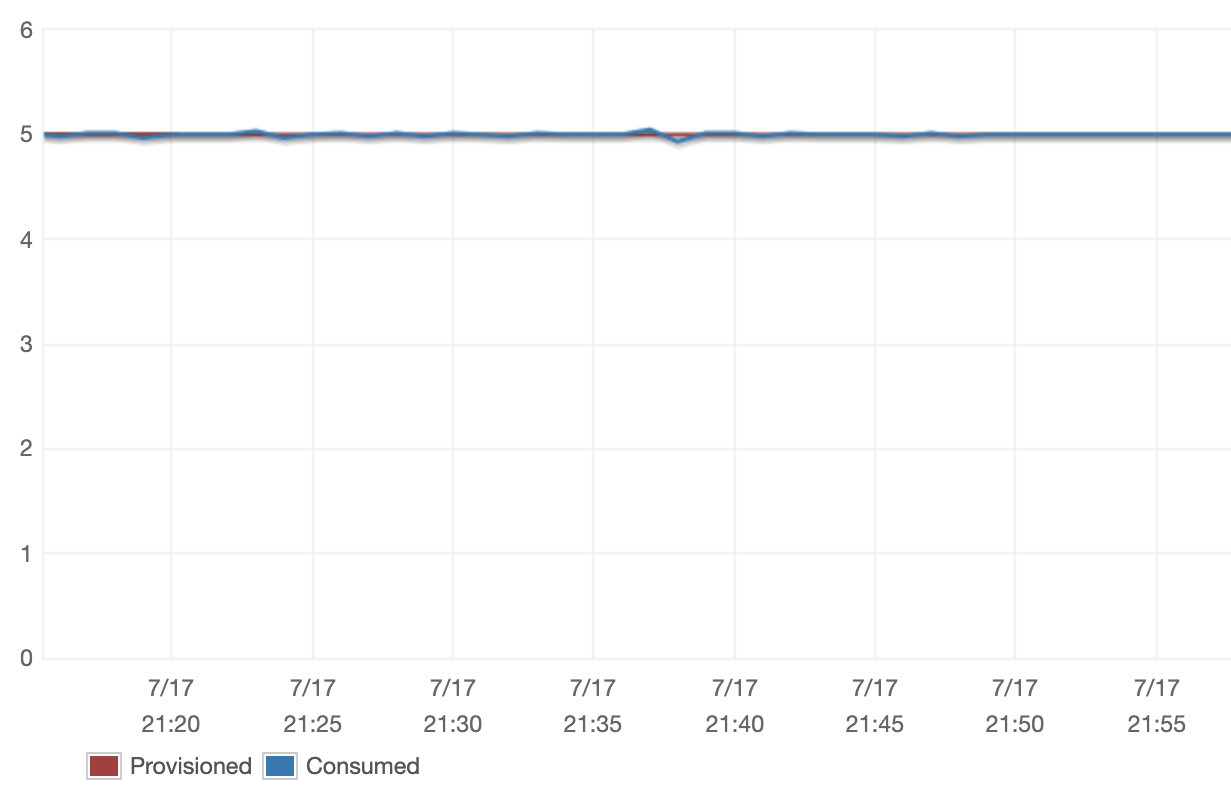

The results of our new Scan configuration was the next:

We were happy to see that, with our new configuration, we could reliably control the amount of throughput we consumed. The problem we discovered with our rate limiter exposed our underlying need for more dynamic DynamoDB Scan configurations. We're continuing to run experiments to determine how to set the page size and segment count dynamically based on table-specific data, but in the meantime we moved on to some of the challenges we were facing with DynamoDB Streams.
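One possible starting point for the segment count is the DynamoDB parallel scan documentation, which suggests beginning with roughly one segment per 2 GB of data. This is offered only as an assumption, not as the outcome of those experiments. Here is a minimal sketch of that rule (the hypothetical `SegmentHeuristic`). The table size could come from something like `TableSizeBytes` in `DescribeTable`, which AWS refreshes only periodically.

```java
// Hypothetical starting point for TotalSegments, assuming AWS's
// "about one segment per 2 GB" parallel-scan guidance. Not Rockset's rule.
final class SegmentHeuristic {
    static final long TWO_GB = 2L * 1024 * 1024 * 1024;
    static final int MAX_TOTAL_SEGMENTS = 1_000_000; // DynamoDB's upper bound

    static int initialSegmentCount(long tableSizeBytes) {
        long segments = (tableSizeBytes + TWO_GB - 1) / TWO_GB; // ceiling division
        // Always at least one segment, never more than the API allows.
        return (int) Math.max(1, Math.min(segments, MAX_TOTAL_SEGMENTS));
    }
}
```

By this rule, a 30 GB table starts at 15 segments, and the per-segment rate from `getScanRateLimit` shrinks accordingly.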

Streams

How we measure streaming performance

Our goal during the streaming phase of ingestion is to minimize the time it takes for an update to reach Rockset after it is applied in DynamoDB, while keeping the cost of using Rockset as low as possible for our users. The primary cost factor for DynamoDB Streams is the number of API calls we make. DynamoDB's pricing gives users 2.5 million free API calls and charges $0.02 per 100,000 requests beyond that. We want to stay as close to the free tier as possible.
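The pricing above makes it easy to estimate what a given polling rate costs. Here is a minimal sketch that rests on several assumptions: the 2.5 million free calls are a monthly allowance, there is one API call per poll on a single stream shard, the month has 30 days, and data-transfer charges are ignored. `StreamsCost` is a hypothetical name.

```java
// Back-of-the-envelope DynamoDB Streams request cost under the assumptions above.
final class StreamsCost {
    static final long FREE_REQUESTS_PER_MONTH = 2_500_000L; // assumed monthly allowance
    static final double USD_PER_100K_REQUESTS = 0.02;

    // API calls over `days` at a steady request rate (one call per poll assumed).
    static long requests(double requestsPerSecond, int days) {
        return Math.round(requestsPerSecond * 86_400 * days);
    }

    // Charge for the calls beyond the free allowance, billed linearly.
    static double costUsd(long requests) {
        long billable = Math.max(0L, requests - FREE_REQUESTS_PER_MONTH);
        return billable / 100_000.0 * USD_PER_100K_REQUESTS;
    }
}
```

Under those assumptions, a steady ~300 requests/second works out to 777.6 million calls, or about $155 a month. One call per second is about 2.59 million calls, roughly $0.02 past the free allowance.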

Previously, we were querying DynamoDB at a rate of ~300 requests/second because we encountered a lot of empty shards in the streams we were reading. We believed we would need to iterate through all of these empty shards regardless of the rate at which we queried. To reduce the load we placed on users' DynamoDB tables (and, in turn, on their wallets), we set a timer on these reads and stopped reading for 5 minutes if we didn't find any new records. This mechanism still charged users who didn't have much data in DynamoDB and still had a worst-case latency of 5 minutes, so we started investigating how we could do better.

Reducing the frequency of streaming calls

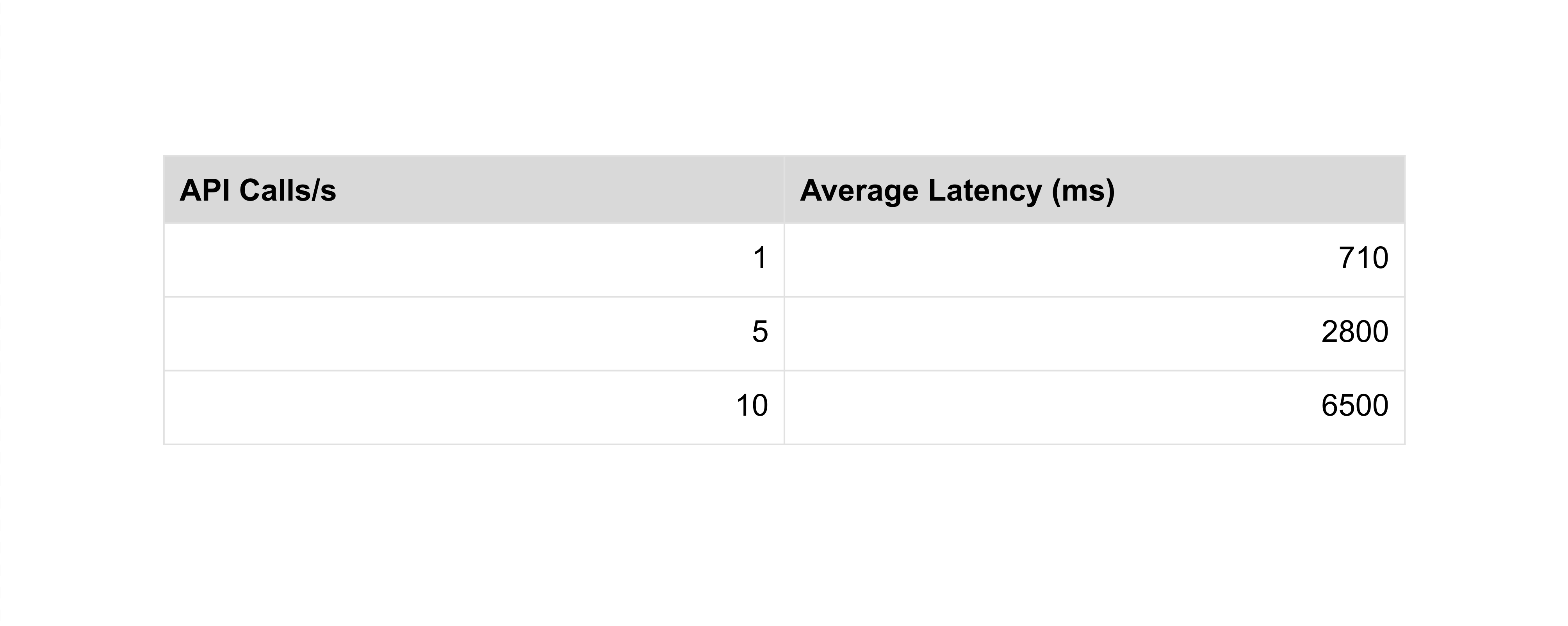

We ran several experiments to clarify our understanding of the DynamoDB Streams API and to determine whether we could reduce the frequency of the DynamoDB Streams API calls our users were being charged for. In each experiment, we varied the time we waited between API calls and measured the average time it took for an update to a DynamoDB table to be reflected in Rockset.

With records inserted into the DynamoDB table at a constant rate of 2 records/second, the results were as follows:

With records inserted into the DynamoDB table in a bursty pattern, the results were as follows:

The results above showed that one API call per second is plenty to maintain sub-second latencies. Our initial assumptions were wrong, but these results showed a clear path forward. We promptly changed our ingestion process to query DynamoDB Streams for new data only once per second, which gives us the performance we're looking for at a much lower cost to our users.
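A simple model helps explain why one call per second is enough. With fixed-interval polling, an update waits at most one interval before the next poll picks it up, and about half an interval on average when writes are spread evenly. The toy model below (the hypothetical `PollLatencyModel`) measures only that polling delay. It ignores processing time and the fact that `GetRecords` is issued per shard.

```java
// Toy model: polls happen at k * intervalSeconds (k = 0, 1, 2, ...), and an update
// written at time t is picked up by the first poll at or after t.
final class PollLatencyModel {
    static double averageLatency(double[] updateTimes, double intervalSeconds) {
        if (updateTimes.length == 0) return 0.0;
        double total = 0.0;
        for (double t : updateTimes) {
            double nextPoll = Math.ceil(t / intervalSeconds) * intervalSeconds;
            total += nextPoll - t; // time the update waits before being read
        }
        return total / updateTimes.length;
    }
}
```

Writes at 0.25 s, 0.5 s, and 0.75 s with a 1-second interval wait 0.75 s, 0.5 s, and 0.25 s, for an average of 0.5 s.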

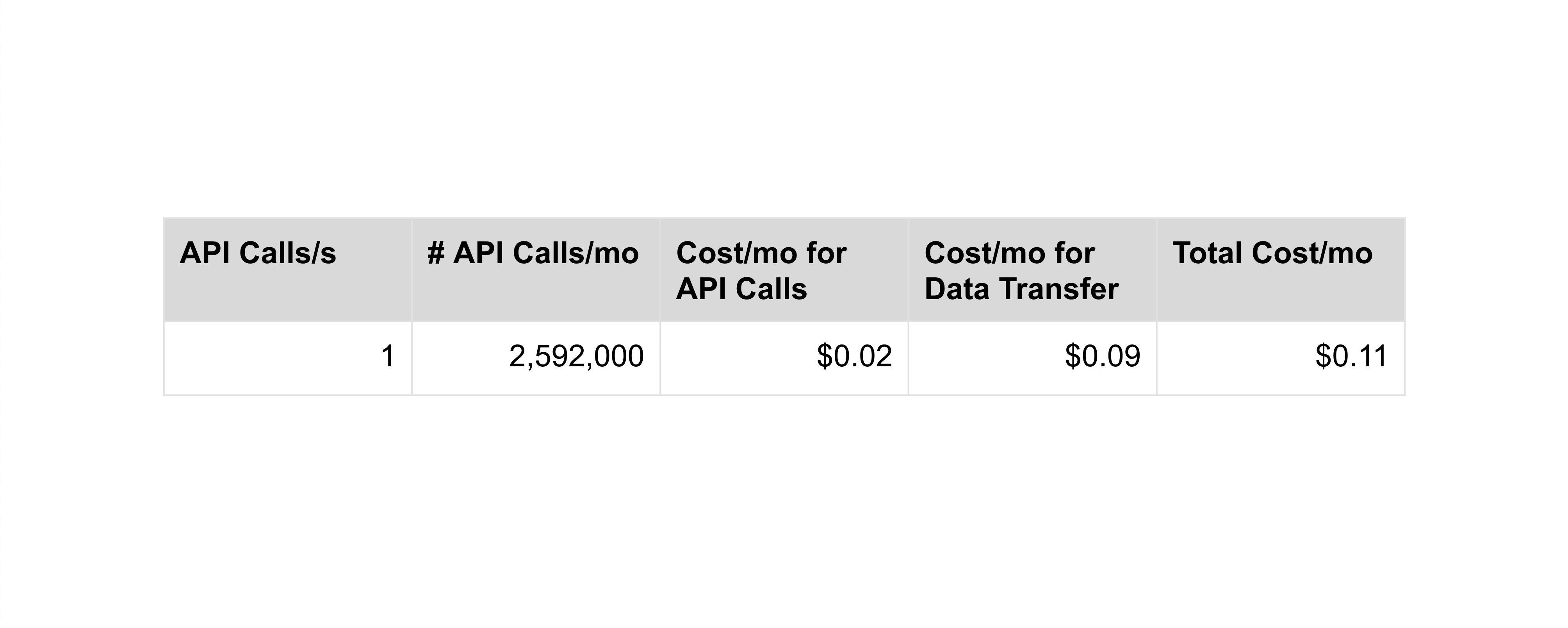

Calculating our cost reduction

Because we are directly responsible for our users' DynamoDB Streams costs, we decided we needed to calculate precisely what our use of DynamoDB Streams costs them. Two factors wholly determine what users are charged for DynamoDB Streams: the number of Streams API calls made and the amount of data transferred. The amount of data transferred is largely beyond our control. Each API call response unavoidably transfers a small amount of data (768 bytes), and the rest is user data, which is read into Rockset only once. We therefore focused on controlling the number of DynamoDB Streams API calls we make against users' tables, since this had been the main driver of our users' DynamoDB costs.

Below is a breakdown of the cost we estimate for our updated ingestion process:

We were happy to see that, with these optimizations, our users should incur virtually no additional cost on their DynamoDB tables because of Rockset!

Conclusion

We're really excited that this work has driven DynamoDB costs down for our users while letting them interact with their DynamoDB data in Rockset in realtime!

This is just a sneak peek at some of the challenges and tradeoffs we've faced while working to make ingesting data from DynamoDB into Rockset as seamless as possible. If you're interested in learning more about how to operationalize your DynamoDB data using Rockset, check out some of our recent material, and stay tuned for updates as we continue to build out Rockset!

If you'd like to see Rockset and DynamoDB in action, check out our brief product tour.

