What just happened? Intel has thrown down the gauntlet to Nvidia in the heated battle for AI hardware supremacy. At Computex this week, CEO Pat Gelsinger unveiled pricing for Intel's Gaudi 2 and next-gen Gaudi 3 AI accelerator chips, and the numbers look disruptive.

Pricing for products like these is usually kept hidden from the public, but Intel has bucked the trend and provided some official figures. The flagship Gaudi 3 accelerator will cost around $15,000 per unit when purchased individually, which is 50 percent cheaper than Nvidia's competing H100 data center GPU.

The Gaudi 2, while less powerful, also undercuts Nvidia's pricing dramatically. A complete 8-chip Gaudi 2 accelerator kit will sell to system vendors for $65,000. Intel claims that is just one-third the cost of comparable setups from Nvidia and other rivals.

For the Gaudi 3, the same 8-accelerator kit configuration costs $125,000. Intel estimates this is about two-thirds the cost of comparable competing platforms at that high-end performance tier.
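To make the pricing claims above easier to compare, here is a minimal back-of-envelope sketch. It takes the quoted figures at face value and assumes "50 percent cheaper" means half the H100's price and "one-third the cost" means the rival setup costs three times as much. The helper names are hypothetical and chosen only for illustration; these are list prices, not measured street prices.

```python
# Back-of-envelope arithmetic on the prices quoted in the article (US dollars).
# Assumption: the article's figures are list prices taken at face value.

GAUDI3_UNIT_PRICE = 15_000   # approximate single-unit Gaudi 3 price
GAUDI2_KIT_PRICE = 65_000    # 8-accelerator Gaudi 2 kit
GAUDI3_KIT_PRICE = 125_000   # 8-accelerator Gaudi 3 kit
CHIPS_PER_KIT = 8


def per_chip(kit_price: float, chips: int = CHIPS_PER_KIT) -> float:
    """Average price per accelerator inside a kit (hypothetical helper)."""
    return kit_price / chips


def implied_rival_price(price: float, fraction_of_rival: float) -> float:
    """Rival price implied by a claim that `price` is `fraction_of_rival` of it."""
    return price / fraction_of_rival


if __name__ == "__main__":
    # "50 percent cheaper" than the H100 is read as half the H100's price.
    print(f"Implied H100 unit price: ${implied_rival_price(GAUDI3_UNIT_PRICE, 0.5):,.0f}")
    # "One-third the cost" of comparable setups for the Gaudi 2 kit.
    print(f"Implied rival 8-chip setup: ${implied_rival_price(GAUDI2_KIT_PRICE, 1 / 3):,.0f}")
    # Average price per accelerator when bought as part of a kit.
    print(f"Gaudi 2 per chip in kit: ${per_chip(GAUDI2_KIT_PRICE):,.0f}")
    print(f"Gaudi 3 per chip in kit: ${per_chip(GAUDI3_KIT_PRICE):,.0f}")
```

Under these assumptions, the Gaudi 3 kit works out to $15,625 per accelerator, close to the roughly $15,000 single-unit figure, and the 50 percent claim implies an H100 price of about $30,000.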

At #Computex2024, Intel CEO @PGelsinger unveiled all new Intel® Xeon® 6 processors, Lunar Lake architecture and 80+ new AI PC designs and standard AI kits with eight Intel® Gaudi® 2 & 3 accelerators. pic.twitter.com/viHlLGQVDd

– Intel India (@IntelIndia) June 7, 2024

To put Gaudi 3 pricing in context, Nvidia's newly announced Blackwell B100 GPU costs around $30,000 per unit. Meanwhile, the high-performance Blackwell CPU+GPU combo, the GB200, sells for roughly $70,000.

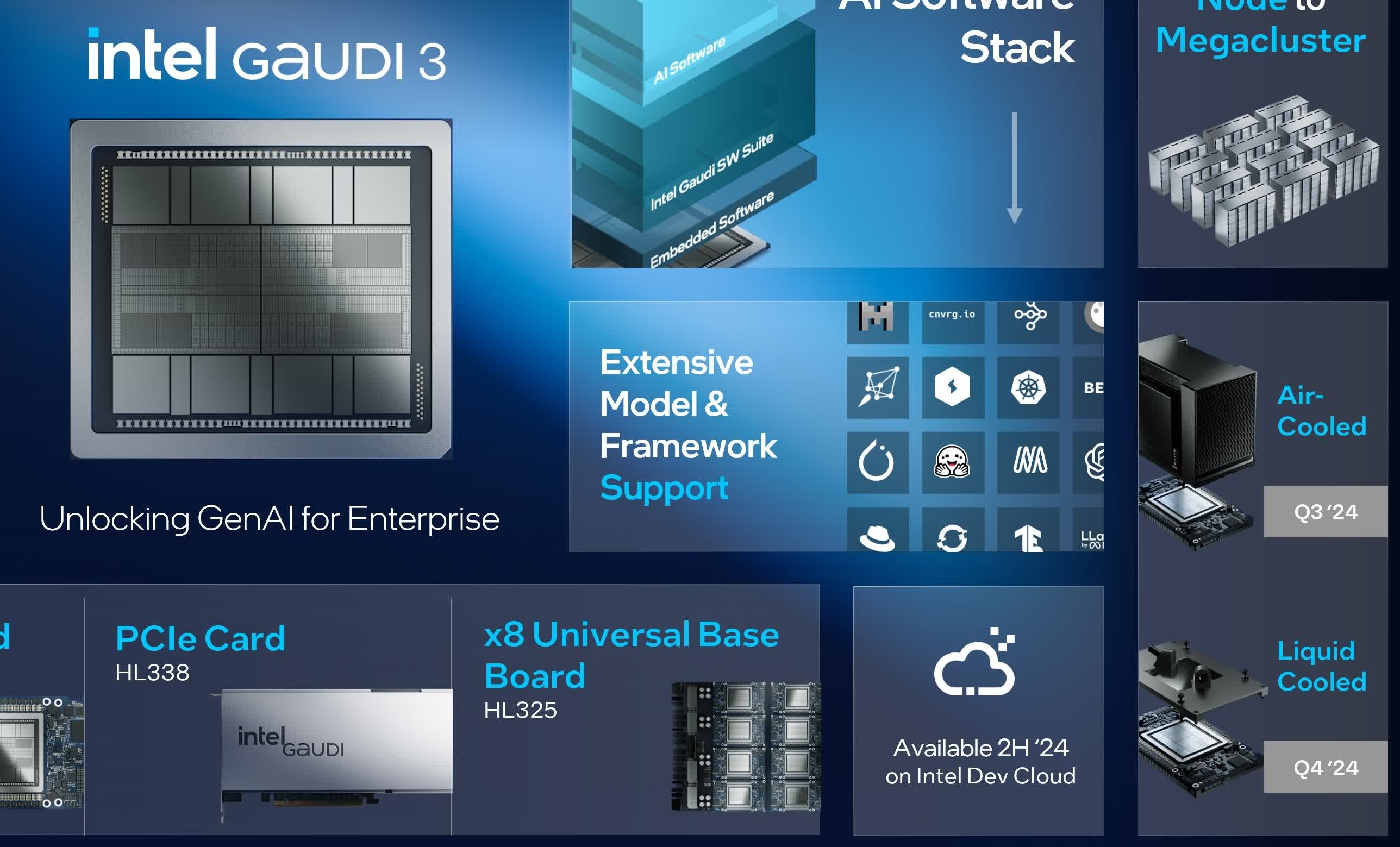

Of course, pricing is just one part of the equation. Performance and the software ecosystem are equally important considerations. On that front, Intel insists the Gaudi 3 keeps pace with or outperforms Nvidia's H100 across a variety of important AI training and inference workloads.

Benchmarks cited by Intel show the Gaudi 3 delivering up to 40 percent faster training times than the H100 in large 8,192-chip clusters. Even a smaller 64-chip Gaudi 3 setup offers 15 percent higher throughput than the H100 on the popular LLaMA 2 language model, according to the company. For AI inference, Intel claims a 2x speed advantage over the H100 on models like LLaMA and Mistral.

However, while the Gaudi chips leverage open standards like Ethernet for easier deployment, they lack optimizations for Nvidia's ubiquitous CUDA platform, which most AI software relies on today. Convincing enterprises to refactor their code for Gaudi could be tough.

To drive adoption, Intel says it has lined up at least 10 major server vendors, including new Gaudi 3 partners such as Asus, Foxconn, Gigabyte, Inventec, Quanta, and Wistron. Familiar names like Dell, HPE, Lenovo, and Supermicro are also on board.

Still, Nvidia is a force to be reckoned with in the data center world. In the final quarter of 2023, it claimed a 73 percent share of the data center processor market, and that figure has continued to rise, eating into the shares of both Intel and AMD. The consumer GPU market isn't all that different, with Nvidia commanding an 88 percent share.

It's an uphill battle for Intel, but these large price differences may help close the gap.