
Every database built for real-time analytics has a fundamental limitation. When you deconstruct the core database architecture, deep in the heart of it you will find a single component that performs two distinct, competing functions: real-time data ingestion and query serving. Running these two functions on the same compute unit is what makes the database real-time: queries can reflect the effect of the new data that was just ingested. However, these two functions directly compete for the available compute resources, creating a fundamental limitation that makes it difficult to build efficient, reliable real-time applications at scale. When data ingestion has a flash flood moment, your queries slow down or time out, making your application flaky. When you have a sudden, unexpected burst of queries, your data lags, making your application not so real-time anymore.

This changes today. We unveil true compute-compute separation that eliminates this fundamental limitation and makes it possible to build efficient, reliable real-time applications at massive scale.

Learn more about the new architecture and how it delivers efficiencies in the cloud in the tech talk I hosted with principal architect Nathan Bronson, "Compute-Compute Separation: A New Cloud Architecture for Real-Time Analytics."

The Challenge of Compute Contention

At the heart of every real-time application is the same pattern: the data never stops coming in and requires continuous processing, and the queries never stop, whether they come from anomaly detectors that run 24x7 or from end-user-facing analytics.

Unpredictable Data Streams

Anyone who has managed real-time data streams at scale will tell you that data flash floods are quite common. Even the best-behaved and most predictable real-time streams have occasional bursts where the volume of data goes up very quickly. If left unchecked, data ingestion will completely monopolize your entire real-time database and result in query slowdowns and timeouts. Imagine ingesting behavioral data on an e-commerce website that just launched a big campaign, or the load spikes a payment network sees on Cyber Monday.

Unpredictable Query Workloads

Similarly, when you build and scale applications, unpredictable bursts from the query workload are par for the course. On some occasions they are predictable based on time of day and seasonal upswings, but there are even more situations when these bursts cannot be predicted accurately ahead of time. When query bursts start consuming all the compute in the database, they take away compute available for real-time data ingestion, resulting in data lags. When data lags go unchecked, the real-time application cannot meet its requirements. Imagine a fraud anomaly detector triggering an extensive set of investigative queries to understand the incident better and take remedial action. If such query workloads create additional data lags, they actively cause more harm by increasing your blind spot at exactly the wrong time: the time when fraud is being perpetrated.
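To make the contention concrete, here is a minimal sketch of a single compute unit shared by ingestion and queries. The proportional-sharing rule, the function name `share_compute`, and the numbers are all assumptions chosen for illustration; real schedulers behave differently, but the squeeze is the same.

```python
def share_compute(capacity, ingest_demand, query_demand):
    """Split one fixed compute budget between two competing workloads.

    Assumption: when total demand exceeds capacity, each workload is
    scaled down proportionally. Units are arbitrary "compute units".
    """
    total = ingest_demand + query_demand
    if total <= capacity:
        # Enough compute for everyone: both workloads are fully served.
        return ingest_demand, query_demand
    scale = capacity / total
    return ingest_demand * scale, query_demand * scale


# Steady state: demand fits, so neither workload is throttled.
print(share_compute(100, 40, 50))

# Ingest flash flood: queries asked for 50 units but receive fewer.
ingest_served, query_served = share_compute(100, 150, 50)
print(ingest_served, query_served)
```

In this toy model a query burst has the mirror-image effect: ingestion gets less than it asked for, and the unserved remainder shows up as data lag.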

How Other Databases Handle Compute Contention

Data warehouses and OLTP databases were never designed to handle high-volume streaming data ingestion while simultaneously processing low-latency, high-concurrency queries. Cloud data warehouses with compute-storage separation do offer batch data loads running concurrently with query processing, but they provide this capability by giving up on real time. Concurrent queries do not see the effect of a data load until the load is complete, creating tens of minutes of data lag. So they are not suitable for real-time analytics. OLTP databases are not built to ingest massive volumes of data streams and perform stream processing on incoming datasets, so they are not suited for real-time analytics either. Data warehouses and OLTP databases have therefore rarely been challenged to power massive-scale real-time applications, and it is no surprise that they have not made any attempts to address this issue.

Elasticsearch, ClickHouse, Apache Druid and Apache Pinot are the databases commonly used for building real-time applications. If you inspect each of them and deconstruct how they are built, you will see that they all struggle with this fundamental limitation of data ingestion and query processing competing for the same compute resources, which compromises the efficiency and reliability of your application. Elasticsearch supports special-purpose ingest nodes that offload some parts of the ingestion process, such as data enrichment or data transformations, but the compute-heavy part of data indexing is done on the same data nodes that also do query processing. Whether these are Elasticsearch's data nodes, Apache Druid's data servers or Apache Pinot's real-time servers, the story is pretty much the same. Some of the systems make data immutable once ingested to get around this issue, but real-world data streams such as CDC streams have inserts, updates and deletes, not just inserts. So not handling updates and deletes is not really an option.

Coping Strategies for Compute Contention

In practice, the strategies used to manage this issue fall into one of two categories: overprovisioning compute or making replicas of your data.

Overprovisioning Compute

It is very common practice for real-time application developers to overprovision compute to handle both peak ingest and peak query bursts simultaneously. This gets cost-prohibitive at scale and thus is not a good or sustainable solution. It is also common for administrators to tweak internal settings to set up peak ingest limits, or to find other ways to compromise either data freshness or query performance when there is a load spike, whichever path is less damaging for the application.

Make Replicas of your Data

The other approach we have seen is for data to be replicated across multiple databases or database clusters. Imagine a primary database doing all the ingest and a replica serving all the application queries. When you have tens of TiBs of data, this approach starts to become quite infeasible. Duplicating data not only increases your storage costs, but also increases your compute costs, since the data ingestion costs are doubled too. On top of that, data lags between the primary and the replica introduce nasty data consistency issues your application has to deal with. Scaling out requires even more replicas that come at an even higher cost, and soon the entire setup becomes untenable.

How We Built Compute-Compute Separation

Before I go into the details of how we solved compute contention and implemented compute-compute separation, let me walk you through a few important details on how Rockset is architected internally, especially around how Rockset employs RocksDB as its storage engine.

RocksDB is one of the most popular Log Structured Merge tree storage engines in the world. Back when I used to work at Facebook, my team, led by amazing builders such as Dhruba Borthakur and Igor Canadi (who also happen to be the co-founder and founding architect at Rockset), forked the LevelDB code base and turned it into RocksDB, an embedded database optimized for server-side storage. Some understanding of how Log Structured Merge tree (LSM) storage engines work will make this part easy to follow, and I encourage you to refer to some excellent materials on this subject such as the RocksDB Architecture Guide. If you want the absolute latest research in this space, read the 2019 survey paper by Chen Luo and Prof. Michael Carey.

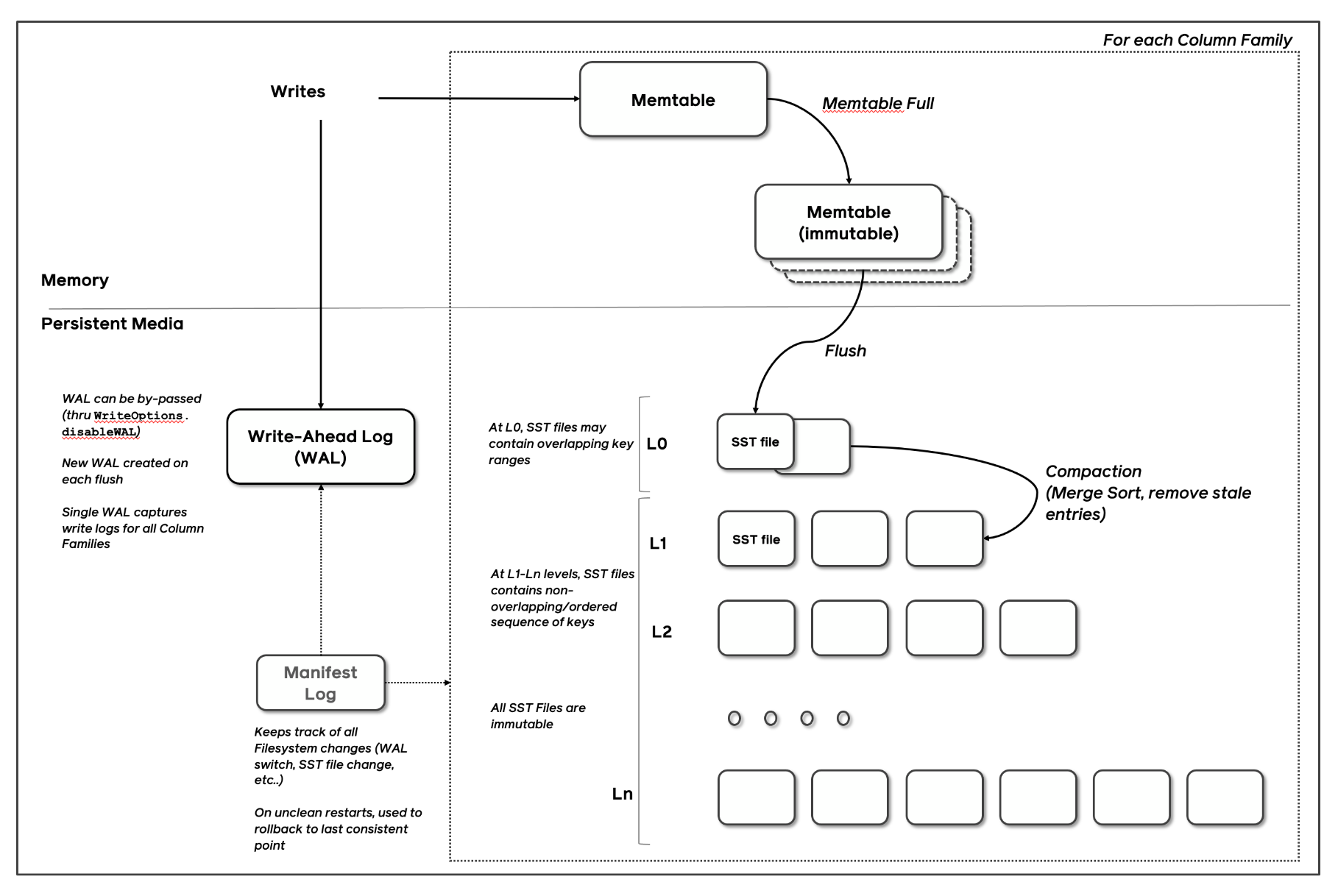

In LSM tree architectures, new writes are written to an in-memory memtable, and memtables are flushed, when they fill up, into immutable sorted strings table (SST) files. Remote compactors, similar to garbage collectors in language runtimes, run periodically, remove stale versions of the data and prevent database bloat.
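The write, flush and compaction cycle described above can be sketched with a toy in-memory model. This is only an illustration of the general LSM idea, not RocksDB's implementation: the class `ToyLSM`, its `memtable_limit` parameter and the use of tuples as "SST files" are assumptions, and deletes (tombstones), the write-ahead log and leveled compaction are all left out.

```python
class ToyLSM:
    """A deliberately tiny LSM tree: one memtable plus a list of immutable runs."""

    def __init__(self, memtable_limit=2):
        self.memtable_limit = memtable_limit
        self.memtable = {}    # mutable, in-memory, holds the newest writes
        self.sst_files = []   # oldest first; each is an immutable sorted tuple

    def put(self, key, value):
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self.flush()

    def flush(self):
        # A full memtable becomes an immutable, sorted "SST file".
        if self.memtable:
            self.sst_files.append(tuple(sorted(self.memtable.items())))
            self.memtable = {}

    def get(self, key):
        # Newest data wins: check the memtable, then SSTs from newest to oldest.
        if key in self.memtable:
            return self.memtable[key]
        for sst in reversed(self.sst_files):
            for k, v in sst:
                if k == key:
                    return v
        return None

    def compact(self):
        # Merge all SSTs oldest-first so newer values overwrite stale ones.
        merged = {}
        for sst in self.sst_files:
            merged.update(sst)
        self.sst_files = [tuple(sorted(merged.items()))] if merged else []


db = ToyLSM(memtable_limit=2)
db.put("a", 1)
db.put("b", 2)   # memtable is full: flushed to the first SST
db.put("a", 3)
db.put("c", 4)   # flushed to a second SST; "a" now has a stale version on disk
db.put("d", 5)   # stays in the memtable
db.compact()     # the stale ("a", 1) entry is dropped
print(db.get("a"), db.get("d"), len(db.sst_files))
```

Note that the most recent write (`"d"`) lives only in the memtable until the next flush, which is why access to memtable state matters for fresh query results.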

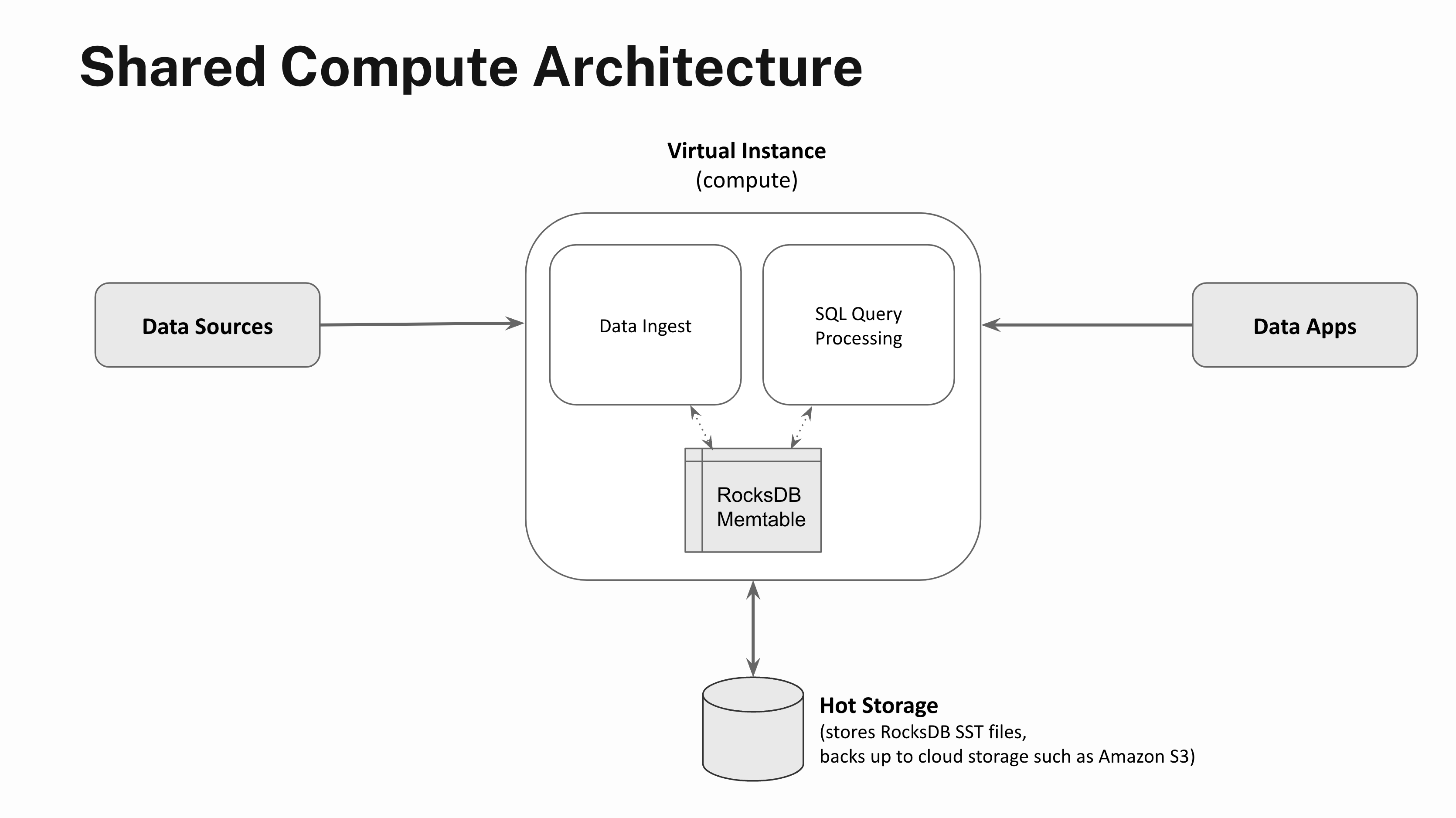

Every Rockset collection uses one or more RocksDB instances to store its data. Data ingested into a Rockset collection is written to the relevant RocksDB instance. Rockset's distributed SQL engine accesses data from the relevant RocksDB instance during query processing.

Step 1: Separate Compute and Storage

One of the ways we first extended RocksDB to run in the cloud was by building RocksDB Cloud, in which the SST files created upon a memtable flush are also backed up to cloud storage such as Amazon S3. RocksDB Cloud allowed Rockset to completely separate the "performance layer" of the data management system, responsible for fast and efficient data processing, from the "durability layer," responsible for ensuring data is never lost.

Real-time applications demand low-latency, high-concurrency query processing. While continuously backing up data to Amazon S3 provides robust durability guarantees, data access latencies are too slow to power real-time applications. So, in addition to backing up the SST files to cloud storage, Rockset also employs an autoscaling hot storage tier backed by NVMe SSD storage that allows for complete separation of compute and storage.

Compute units spun up to perform streaming data ingest or query processing are called Virtual Instances in Rockset. The hot storage tier scales elastically based on usage and serves the SST files to Virtual Instances that perform data ingestion, query processing or data compactions. The hot storage tier is about 100-200x faster to access than cold storage such as Amazon S3, which in turn allows Rockset to provide low-latency, high-throughput query processing.
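One way to picture the split between the durability layer and the performance layer is a two-tier store for SST files. The sketch below is an assumption-heavy toy: `TieredSSTStore`, its method names and the fall-back-to-cold read path are hypothetical and are not taken from RocksDB Cloud or Rockset; plain dictionaries stand in for S3 and the NVMe-backed hot tier.

```python
class TieredSSTStore:
    """Toy model: every SST is durable in 'cold' storage and served from 'hot' storage."""

    def __init__(self):
        self.cold = {}        # stand-in for durable object storage (e.g. S3)
        self.hot = {}         # stand-in for the fast hot storage tier
        self.cold_reads = 0   # counts how often a read had to go to cold storage

    def write_sst(self, name, data):
        # On flush, the file is persisted for durability and placed in the hot tier.
        self.cold[name] = data
        self.hot[name] = data

    def evict_from_hot(self, name):
        # Assumption: the hot tier may drop files, since cold storage still has them.
        self.hot.pop(name, None)

    def read_sst(self, name):
        # Serve from the hot tier when possible; otherwise fetch once and keep it hot.
        if name in self.hot:
            return self.hot[name]
        self.cold_reads += 1
        data = self.cold[name]
        self.hot[name] = data
        return data


store = TieredSSTStore()
store.write_sst("000001.sst", b"sorted key-value data")
store.read_sst("000001.sst")          # served from the hot tier
store.evict_from_hot("000001.sst")
store.read_sst("000001.sst")          # one slow read from cold storage
store.read_sst("000001.sst")          # hot again
print(store.cold_reads)
```

The point of the sketch is that compute nodes hold no SST state of their own: any Virtual Instance can read the same files from the shared tier.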

Step 2: Separate Data Ingestion and Query Processing Code Paths

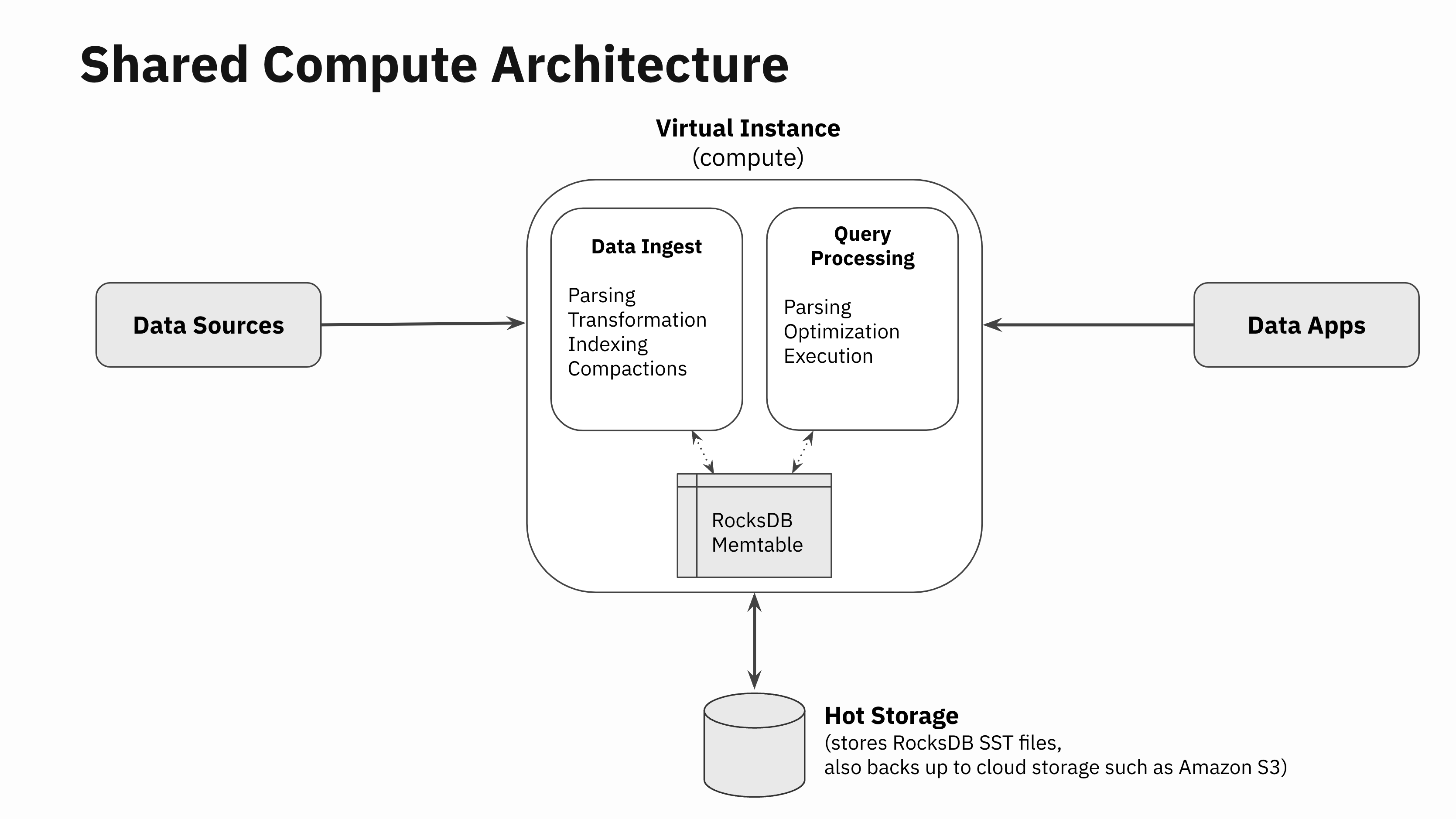

Let's go one level deeper and look at all the different parts of data ingestion. When data gets written into a real-time database, there are essentially four tasks that need to be done:

- Data parsing: Downloading data from the data source or the network, paying the network RPC overheads, data decompression, parsing and unmarshalling, etc.

- Data transformation: Data validation, enrichment, formatting, type conversions and real-time aggregations in the form of rollups

- Data indexing: Data is encoded in the database's core data structures used to store and index the data for fast retrieval. In Rockset, this is where Converged Indexing is implemented

- Compaction (or vacuuming): LSM engine compactors run in the background to remove stale versions of the data. Note that this part is not specific to LSM engines. Anyone who has ever run a VACUUM command in PostgreSQL will know that these operations are essential for storage engines to provide good performance, even when the underlying storage engine is not log structured.
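The first three tasks in the list above can be sketched as a small pipeline. Everything here is a hypothetical illustration: the function names, the JSON input format, the `user` and `amount` fields, and the combination of a row store with an inverted index are assumptions, and the index is far simpler than Rockset's Converged Indexing. Compaction is omitted because it runs in the background rather than per record.

```python
import json
from collections import defaultdict


def parse(raw):
    """Data parsing: decode and unmarshal one raw record (assumed to be JSON)."""
    return json.loads(raw.decode("utf-8"))


def transform(doc):
    """Data transformation: validate required fields and convert types."""
    if "user" not in doc:
        raise ValueError("missing required field: user")
    return {"user": str(doc["user"]), "amount": float(doc.get("amount", 0))}


def index(doc_id, doc, row_store, inverted_index):
    """Data indexing: store the document and index every (field, value) pair."""
    row_store[doc_id] = doc
    for field, value in doc.items():
        inverted_index[(field, value)].add(doc_id)


row_store = {}
inverted_index = defaultdict(set)
incoming = [
    b'{"user": "ann", "amount": "12.5"}',
    b'{"user": "bob", "amount": 3}',
]
for doc_id, raw in enumerate(incoming):
    index(doc_id, transform(parse(raw)), row_store, inverted_index)

# A lookup by field value touches only the index, not the raw records.
print(inverted_index[("user", "bob")])
```

Each stage costs CPU per record, which is why it matters later where in this pipeline replication happens.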

The SQL processing layer goes through the typical query parsing, query optimization and execution phases, like any other SQL database.

Building compute-compute separation has been a long-term goal for us since the very beginning. So, we designed Rockset's SQL engine to be completely separated from all the modules that do data ingestion. Outside of RocksDB, there are no software artifacts such as locks, latches, or pinned buffer blocks shared between the modules that do data ingestion and the ones that do SQL processing. The data ingestion, transformation and indexing code paths work completely independently from query parsing, optimization and execution.

RocksDB supports multi-version concurrency control and snapshots, and has a huge body of work to make various subcomponents multi-threaded, eliminate locks altogether and reduce lock contention. Given the nature of RocksDB, sharing state in SST files between readers, writers and compactors can be achieved with little to no coordination. All these properties allow our implementation to decouple the data ingestion code paths from the query processing code paths.

So, the only reason SQL query processing is scheduled on the Virtual Instance doing data ingestion is to access the in-memory state in RocksDB memtables that hold the most recently ingested data. For query results to reflect the most recently ingested data, access to the in-memory state in RocksDB memtables is essential.

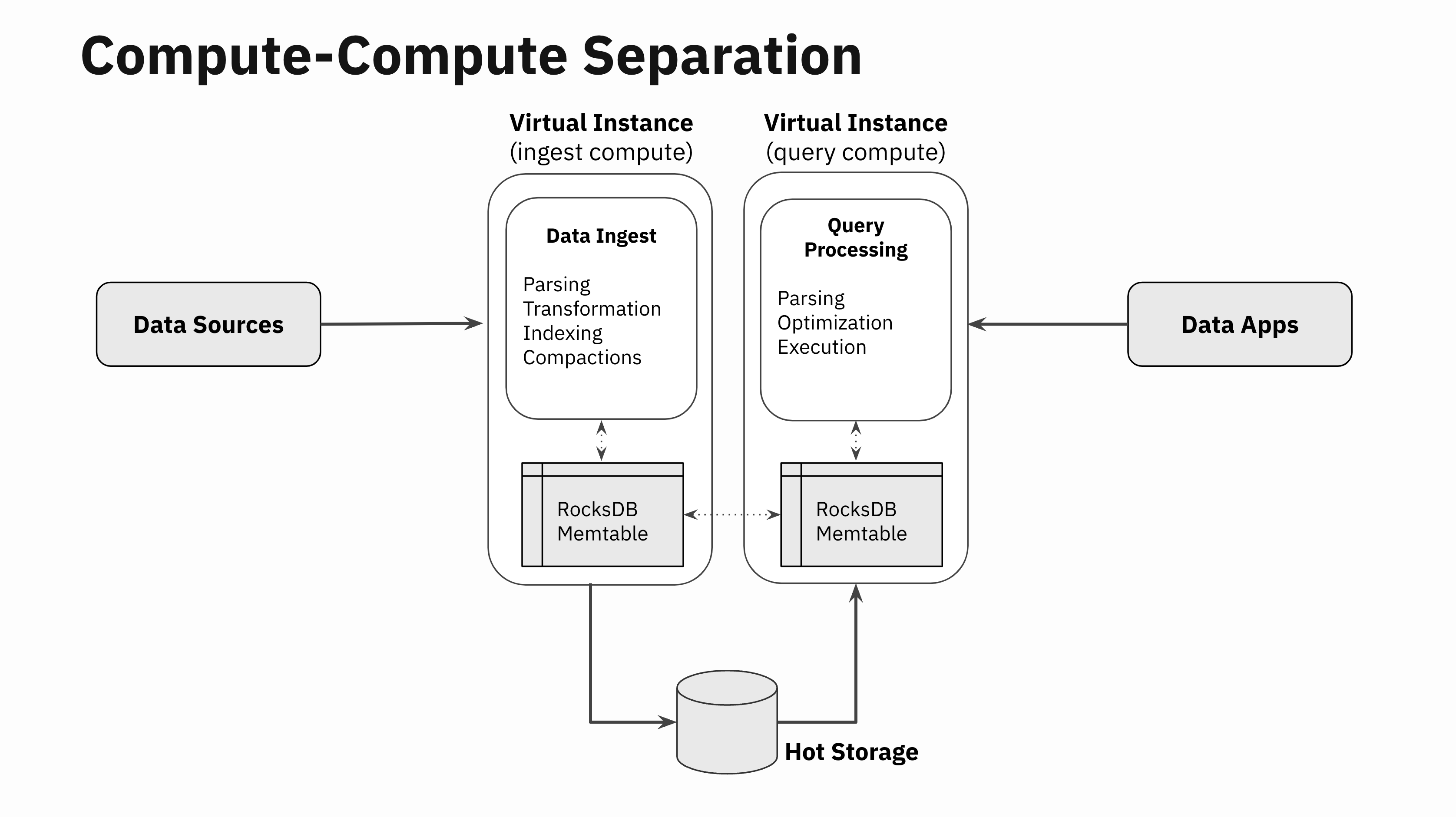

Step 3: Replicate In-Memory State

Someone in the 1970s at Xerox took a photocopier, split it into a scanner and a printer, connected those two parts over a telephone line and thereby invented the world's first telephone fax machine, which completely revolutionized telecommunications.

Similar in spirit to the Xerox hack, in one of the Rockset hackathons about a year ago, two of our engineers, Nathan Bronson and Igor Canadi, took RocksDB, split the part that writes to RocksDB memtables from the part that reads from the RocksDB memtable, built a RocksDB memtable replicator, and connected it over the network. With this capability, you can now write to a RocksDB instance in one Virtual Instance and, within milliseconds, replicate that to one or more remote Virtual Instances efficiently.

None of the SST files have to be replicated, since those files are already separated from compute and are stored and served from the autoscaling hot storage tier. So, this replicator focuses only on replicating the in-memory state in RocksDB memtables. The replicator also coordinates flush actions so that when the memtable is flushed on the Virtual Instance ingesting the data, the remote Virtual Instances know to go fetch the new SST files from the shared hot storage tier.
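The idea in the last two paragraphs can be sketched as a toy leader/follower model. This is not Rockset's replicator: `Leader`, `Follower`, `SharedHotStorage` and the record shapes are hypothetical, replication here is a synchronous in-process call rather than a network stream, and dictionaries stand in for memtables and SST files.

```python
class SharedHotStorage:
    """Stand-in for the shared hot storage tier holding flushed SST files."""

    def __init__(self):
        self.sst_files = []   # oldest first


class Follower:
    """A query-only instance: applies replicated memtable records, never ingests."""

    def __init__(self, storage):
        self.storage = storage
        self.memtable = {}
        self.visible_ssts = 0   # how many shared SST files this follower knows about

    def apply(self, record):
        if record[0] == "put":
            _, key, value = record
            self.memtable[key] = value
        elif record[0] == "flush":
            # The flushed data is now in shared storage, so drop the local copy.
            _, sst_count = record
            self.visible_ssts = sst_count
            self.memtable = {}

    def get(self, key):
        if key in self.memtable:
            return self.memtable[key]
        for sst in reversed(self.storage.sst_files[: self.visible_ssts]):
            if key in sst:
                return sst[key]
        return None


class Leader:
    """The ingesting instance: owns writes, flushes, and the replication stream."""

    def __init__(self, storage, followers):
        self.storage = storage
        self.followers = followers
        self.memtable = {}

    def _replicate(self, record):
        for follower in self.followers:
            follower.apply(record)

    def put(self, key, value):
        # Only the finished key-value write is shipped, not the raw input record.
        self.memtable[key] = value
        self._replicate(("put", key, value))

    def flush(self):
        self.storage.sst_files.append(dict(self.memtable))
        self.memtable = {}
        self._replicate(("flush", len(self.storage.sst_files)))


storage = SharedHotStorage()
follower = Follower(storage)
leader = Leader(storage, [follower])

leader.put("order:1", "pending")
print(follower.get("order:1"))   # visible on the follower before any flush
leader.flush()
print(follower.get("order:1"))   # now read from the shared SST file
```

The SST file is written once, by the leader; the follower only learns that it exists. That is the flush coordination the text describes, reduced to a single counter.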

This simple hack of replicating RocksDB memtables is a massive unlock. The in-memory state of RocksDB memtables can be accessed efficiently in remote Virtual Instances that are not doing the data ingestion, thereby fundamentally separating the compute needs of data ingestion and query processing.

This particular method of implementation has a few essential properties:

- Low data latency: The additional data latency from when the RocksDB memtables are updated in the ingest Virtual Instance to when the same changes are replicated to remote Virtual Instances can be kept to single-digit milliseconds. There are no large, expensive IO costs, storage costs or compute costs involved, and Rockset employs well-understood data streaming protocols to keep data latencies low.

- Robust replication mechanism: RocksDB is a reliable, consistent storage engine and can emit a "memtable replication stream" that ensures correctness even when the streams are disconnected or interrupted for whatever reason. So, the integrity of the replication stream can be guaranteed while simultaneously keeping the data latency low. It is also really important that the replication happens at the RocksDB key-value level, after all the major compute-heavy ingestion work has already happened, which brings me to my next point.

- Low redundant compute expense: Very little additional compute is required to replicate the in-memory state compared to the total amount of compute required for the original data ingestion. The way the data ingestion path is structured, the RocksDB memtable replication happens after all the compute-intensive parts of the data ingestion are complete, including data parsing, data transformation and data indexing. Data compactions are performed only once, in the Virtual Instance that is ingesting the data, and all the remote Virtual Instances simply pick up the new compacted SST files directly from the hot storage tier.

It should be noted that there are other, naive ways to separate ingestion and queries. One way would be to replicate the incoming logical data stream to two compute nodes, causing redundant computations and doubling the compute needed for streaming data ingestion, transformations and indexing. There are many databases that claim similar compute-compute separation capabilities by doing "logical CDC-like replication" at a high level. You should be dubious of databases that make such claims. While duplicating logical streams may seem "good enough" in trivial cases, it comes at a prohibitively expensive compute cost for large-scale use cases.
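The cost argument can be written down as a back-of-the-envelope model. The two functions and every number below are illustrative assumptions, not measurements: `ingest_cost` stands for the full parse, transform and index work per batch, and `apply_cost` for the much smaller work of applying already-indexed key-value writes on a remote node.

```python
def logical_replication_cost(ingest_cost, nodes):
    """Every node re-runs the full ingestion pipeline on the same logical stream."""
    return ingest_cost * nodes


def memtable_replication_cost(ingest_cost, apply_cost, nodes):
    """One node ingests; the others only apply replicated key-value writes."""
    return ingest_cost + apply_cost * (nodes - 1)


# Hypothetical units: full ingestion costs 100, applying a replicated write costs 5.
for nodes in (2, 3, 10):
    logical = logical_replication_cost(100, nodes)
    physical = memtable_replication_cost(100, 5, nodes)
    print(nodes, logical, physical)
```

Under these assumptions the logical approach grows with the full ingestion cost per added node, while the memtable approach grows only with the small apply cost, which is the asymmetry the paragraph above points at.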

Leveraging Compute-Compute Separation

There are numerous real-world situations where compute-compute separation can be leveraged to build scalable, efficient and robust real-time applications: ingest and query compute isolation, multiple applications on shared real-time data, unlimited concurrency scaling and dev/test environments.

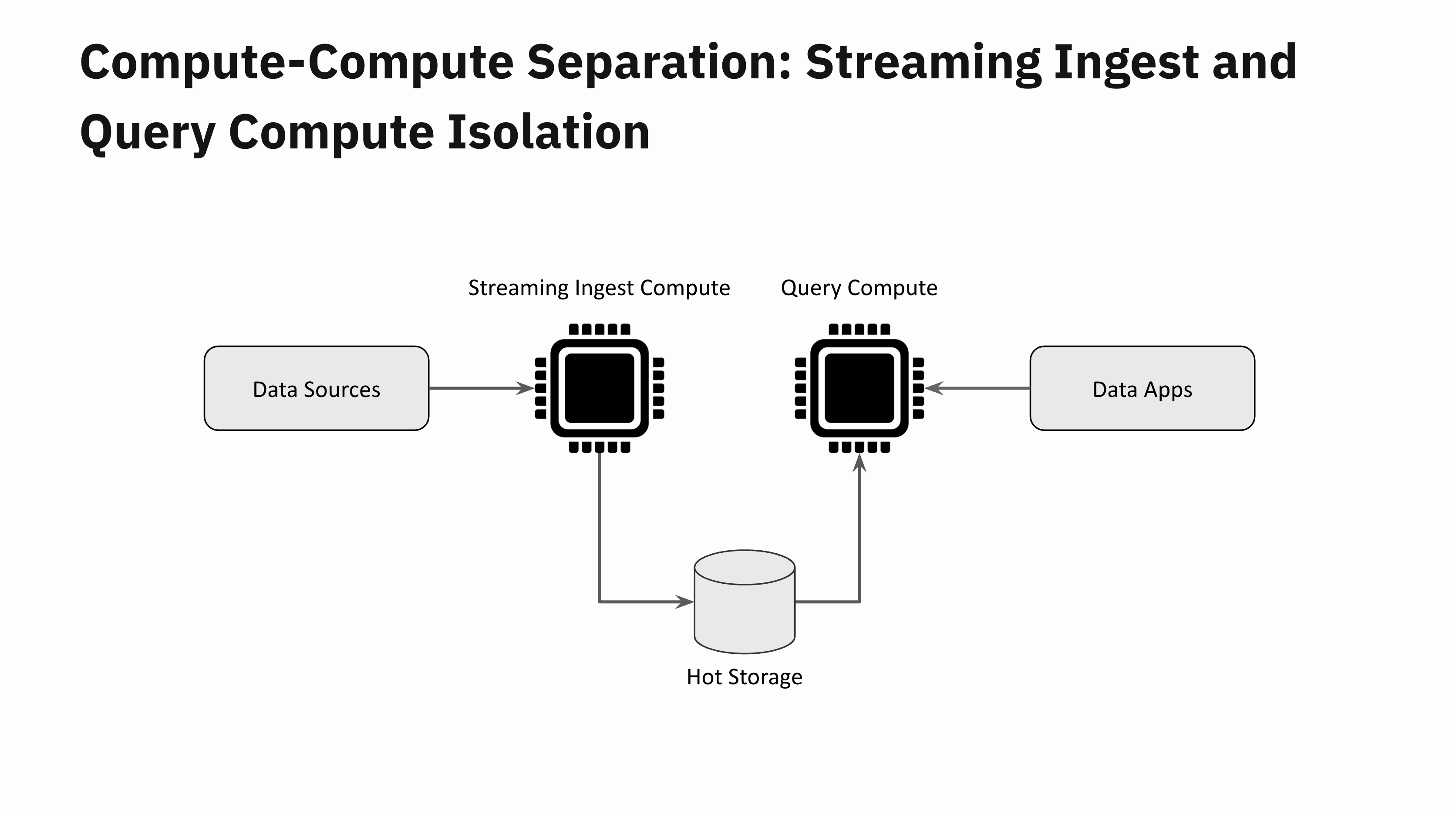

Ingest and Query Compute Isolation

Consider a real-time application that receives a sudden flash flood of new data. This should be quite straightforward to handle with compute-compute separation. One Virtual Instance is dedicated to data ingestion and a remote Virtual Instance is dedicated to query processing. These two Virtual Instances are fully isolated from each other. You can scale up the Virtual Instance dedicated to ingestion if you want to keep the data latencies low, but irrespective of your data latencies, your application queries will remain unaffected by the data flash flood.

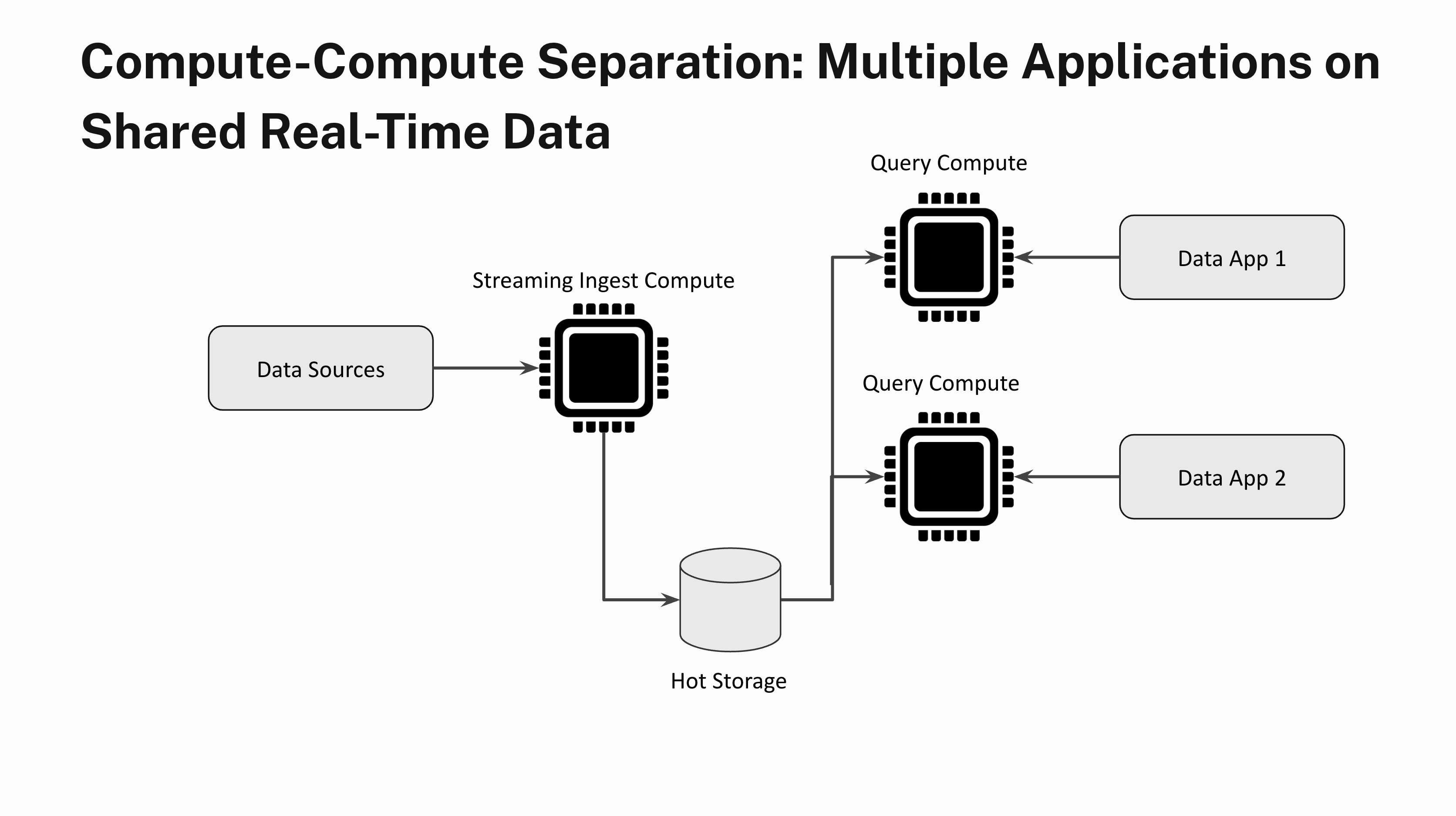

Multiple Applications on Shared Real-Time Data

Imagine building two different applications with very different query load characteristics on the same real-time data. One application sends a small number of heavy analytical queries that are not time sensitive, and the other application is latency sensitive and has very high QPS. With compute-compute separation you can fully isolate multiple application workloads by spinning up one Virtual Instance for the first application and a separate Virtual Instance for the second application.

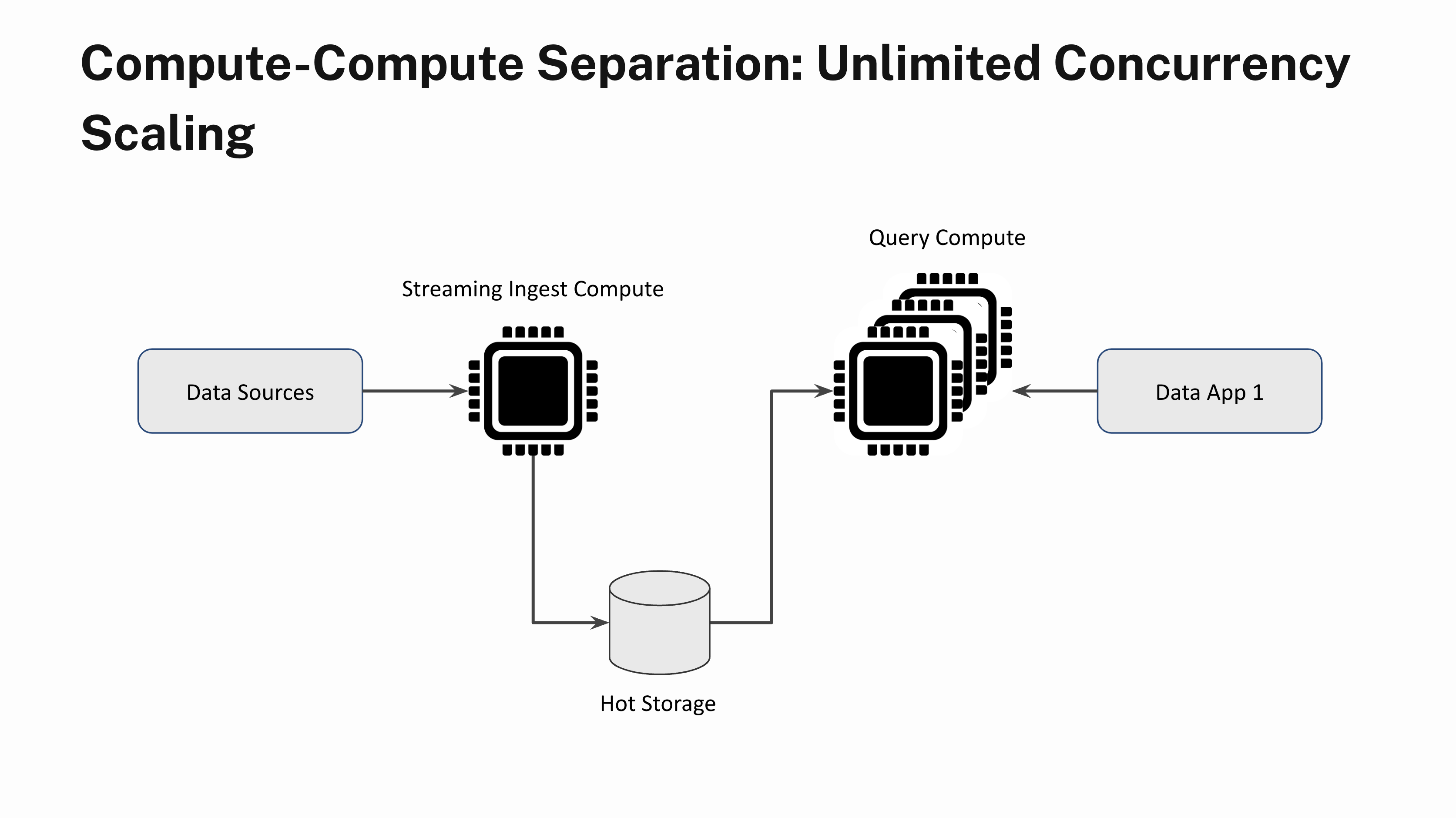

Unlimited Concurrency Scaling

Say you have a real-time application that sustains a steady state of 100 queries per second. Occasionally, when a lot of users log in to the app at the same time, you see query bursts. Without compute-compute separation, query bursts result in poor application performance for all users during periods of high demand. With compute-compute separation, you can instantly add more Virtual Instances and scale out linearly to handle the increased demand. You can also scale the Virtual Instances down when the query load subsides. And yes, you can scale out without having to worry about data lags or stale query results.
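If scale-out is linear, as described above, sizing becomes simple arithmetic. The helper below is a hypothetical sketch: `instances_needed` and the per-instance capacity of 100 QPS are assumptions for illustration, and real capacity depends on the query mix and instance size.

```python
import math


def instances_needed(qps, qps_per_instance):
    """Query-serving instances required, assuming throughput scales linearly."""
    return max(1, math.ceil(qps / qps_per_instance))


print(instances_needed(100, 100))   # steady state
print(instances_needed(450, 100))   # login burst: scale out
print(instances_needed(80, 100))    # load subsides: scale back down
```

Because each added instance reads the same replicated memtable state and shared SST files, adding query capacity in this model does not add ingestion work.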

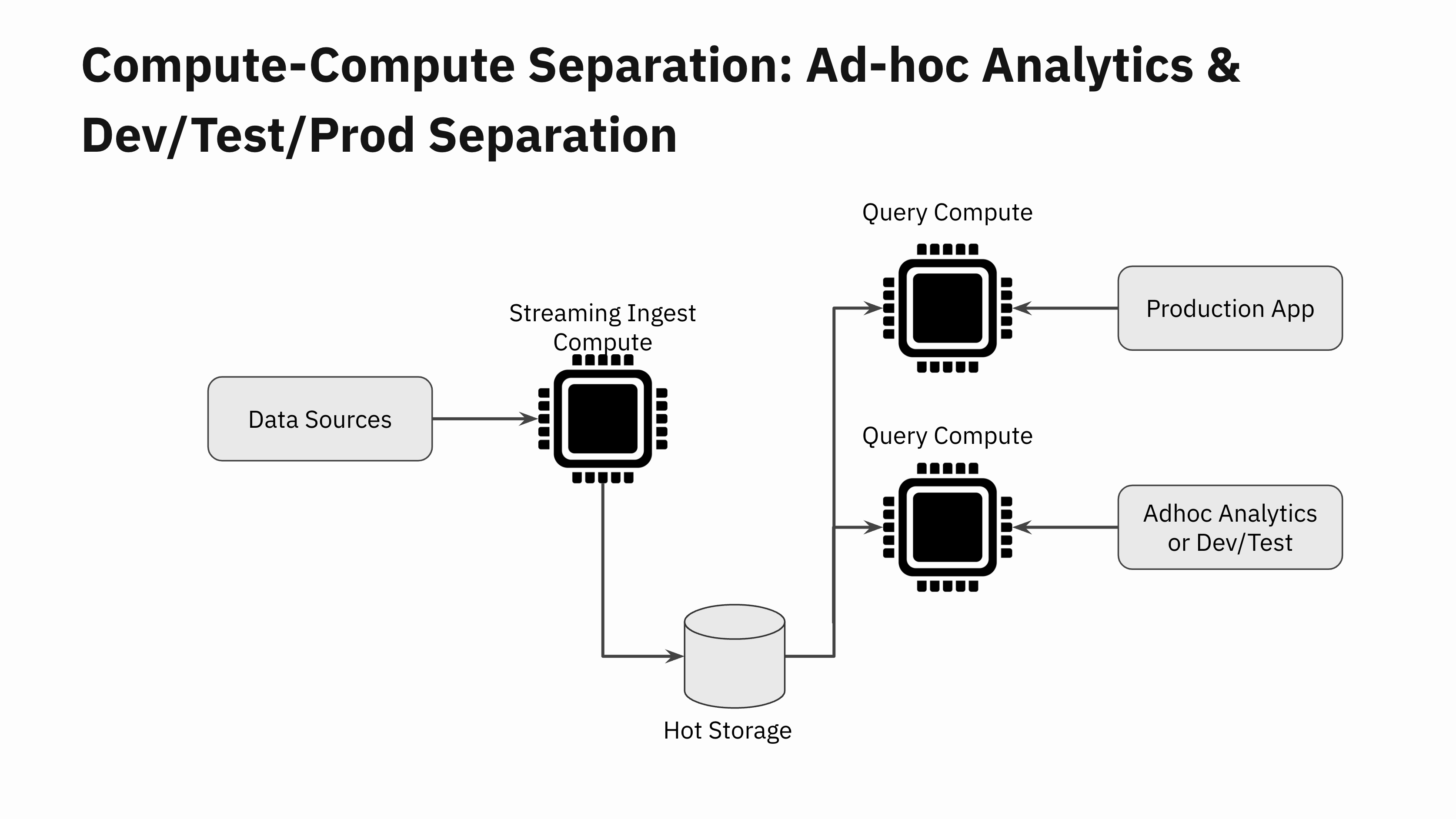

Ad-hoc Analytics and Dev/Test/Prod Separation

The next time you perform ad-hoc analytics for reporting or troubleshooting purposes on your production data, you can do so without worrying about the negative impact of the queries on your production application.

Many dev/staging environments cannot afford to make a full copy of the production datasets, so they end up testing on a smaller portion of their production data. This can cause unexpected performance regressions when new application versions are deployed to production. With compute-compute separation, you can now spin up a new Virtual Instance and do a quick performance test of the new application version before rolling it out to production.

The possibilities are endless for compute-compute separation in the cloud.

Future Implications for Real-Time Analytics

Starting from the hackathon project a year ago, it took a brilliant team of engineers led by Tudor Bosman, Igor Canadi, Karen Li and Wei Li to turn the hackathon project into a production-grade system. I am extremely proud to unveil the capability of compute-compute separation today to everyone.

This is an absolute game changer. The implications for the future of real-time analytics are massive. Anyone can now build real-time applications and leverage the cloud to get massive efficiency and reliability wins. Building massive-scale real-time applications no longer needs to incur exorbitant infrastructure costs due to resource overprovisioning. Applications can dynamically and quickly adapt to changing workloads in the cloud, with the underlying database being operationally trivial to manage.

In this launch blog, I have just scratched the surface of the new cloud architecture for compute-compute separation. I am excited to delve further into the technical details in a talk with Nathan Bronson, one of the brains behind the memtable replication hack and a core contributor to TAO and F14 at Meta. Come join us for the tech talk, look under the hood of the new architecture and get your questions answered!
