[ad_1]

Dataricks is thrilled to announce the Normal Availability (GA) of Major Key (PK) and International Key (FK) constraints, beginning in Databricks Runtime 15.2 and Databricks SQL 2024.30. This launch follows a extremely profitable public preview, embraced by a whole lot of weekly energetic clients, and additional represents a big milestone in enhancing knowledge integrity and relational knowledge administration inside the Lakehouse.

Moreover, Databricks can now use these constraints to optimize queries and remove pointless operations from the question plan, delivering a lot sooner efficiency.

Major Key and International Key Constraints

Major Keys (PKs) and International Keys (FKs) are important parts in relational databases, appearing as basic constructing blocks for knowledge modeling. They supply details about the info relationships within the schema to customers, instruments and purposes; and allow optimizations that leverage constraints to hurry up queries. Major and international keys are actually usually obtainable on your Delta Lake tables hosted in Unity Catalog.

SQL Language

You may outline constraints if you create a desk:

CREATE TABLE Customers (

UserID INT NOT NULL PRIMARY KEY,

UserName STRING,

E-mail STRING,

SignUpDate DATE

);Within the above instance, we outline a major key constraint on the column UserID. Databricks additionally helps constraints on teams of columns as nicely.

You too can modify present Delta tables so as to add or take away constraints:

CREATE TABLE Merchandise (

ProductID INT NOT NULL,

ProductName STRING,

Value DECIMAL(10,2),

CategoryID INT

);

ALTER TABLE Merchandise ADD CONSTRAINT products_pk PRIMARY KEY (ProductID);

ALTER TABLE Merchandise DROP CONSTRAINT products_pk;Right here we create the first key named products_pk on the non-nullable column ProductID in an present desk. To efficiently execute this operation, you should be the proprietor of the desk. Word that constraint names should be distinctive inside the schema.

The following command removes the first key by specifying the identify.

The identical course of applies for international keys. The next desk defines two international keys at desk creation time:

CREATE TABLE Purchases (

PurchaseID INT PRIMARY KEY,

UserID INT,

ProductID INT,

PurchaseDate DATE,

Amount INT,

FOREIGN KEY (UserID) REFERENCES Customers(UserID),

FOREIGN KEY (ProductID) REFERENCES Merchandise(ProductID)

);Please seek advice from the documentation on CREATE TABLE and ALTER TABLE statements for extra particulars on the syntax and operations associated to constraints.

Major key and international key constraints aren’t enforced within the Databricks engine, however they could be helpful for indicating an information integrity relationship that’s meant to carry true. Databricks can as a substitute implement major key constraints upstream as a part of the ingest pipeline. See Managed knowledge high quality with Delta Stay Tables for extra info on enforced constraints. Databricks additionally helps enforced NOT NULL and CHECK constraints (see the Constraints documentation for extra info).

Companion Ecosystem

Instruments and purposes equivalent to the newest model of Tableau and PowerBI can routinely import and make the most of your major key and international key relationships from Databricks by way of JDBC and ODBC connectors.

View the constraints

There are a number of methods to view the first key and international key constraints outlined within the desk. You too can merely use SQL instructions to view constraint info with the DESCRIBE TABLE EXTENDED command:

> DESCRIBE TABLE EXTENDED Purchases

... (omitting different outputs)

# Constraints

purchases_pk PRIMARY KEY (`PurchaseID`)

purchases_products_fk FOREIGN KEY (`ProductID`) REFERENCES `fundamental`

.`instance`.`merchandise` (`ProductID`)

purchases_users_fk FOREIGN KEY (`UserID`) REFERENCES `fundamental`

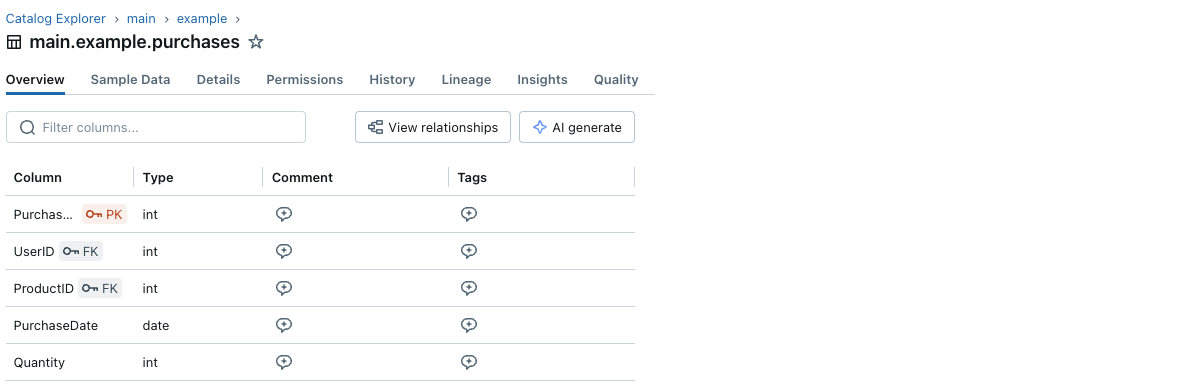

.`instance`.`customers` (`UserID`)Catalog Explorer and Entity Relationship Diagram

You too can view the constraints info by way of the Catalog Explorer:

Every major key and international key column has a small key icon subsequent to its identify.

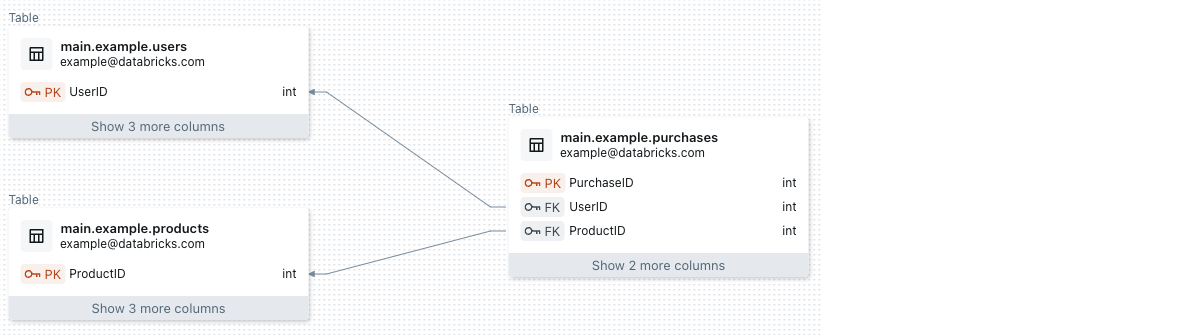

And you’ll visualize the first and international key info and the relationships between tables with the Entity Relationship Diagram in Catalog Explorer. Under is an instance of a desk purchases referencing two tables, customers and merchandise:

INFORMATION SCHEMA

The next INFORMATION_SCHEMA tables additionally present constraint info:

Use the RELY choice to allow optimizations

If you recognize that the first key constraint is legitimate, (for instance, as a result of your knowledge pipeline or ETL job enforces it) then you may allow optimizations based mostly on the constraint by specifying it with the RELY possibility, like:

PRIMARY KEY (c_customer_sk) RELYUtilizing the RELY possibility lets Databricks optimize queries in ways in which rely on the constraint’s validity, since you are guaranteeing that the info integrity is maintained. Train warning right here as a result of if a constraint is marked as RELY however the knowledge violates the constraint, your queries could return incorrect outcomes.

When you don’t specify the RELY possibility for a constraint, the default is NORELY, during which case constraints should still be used for informational or statistical functions, however queries is not going to depend on them to run appropriately.

The RELY possibility and the optimizations using it are at present obtainable for major keys, and also will be coming quickly for international keys.

You may modify a desk’s major key to vary whether or not it’s RELY or NORELY by utilizing ALTER TABLE, for instance:

ALTER TABLE buyer DROP PRIMARY KEY;

ALTER TABLE buyer ADD PRIMARY KEY (c_customer_sk) RELYVelocity up your queries by eliminating pointless aggregations

One easy optimization we are able to do with RELY major key constraints is eliminating pointless aggregates. For instance, in a question that’s making use of a definite operation over a desk with a major key utilizing RELY:

SELECT DISTINCT c_customer_sk FROM buyer;We will take away the pointless DISTINCT operation:

SELECT c_customer_sk FROM buyer;As you may see, this question depends on the validity of the RELY major key constraint – if there are duplicate buyer IDs within the buyer desk, then the reworked question will return incorrect duplicate outcomes. You might be liable for imposing the validity of the constraint in the event you set the RELY possibility.

If the first secret is NORELY (the default), then the optimizer is not going to take away the DISTINCT operation from the question. Then it could run slower however all the time returns appropriate outcomes even when there are duplicates. If the first secret is RELY, Databricks can take away the DISTINCT operation, which might vastly velocity up the question – by about 2x for the above instance.

Velocity up your queries by eliminating pointless joins

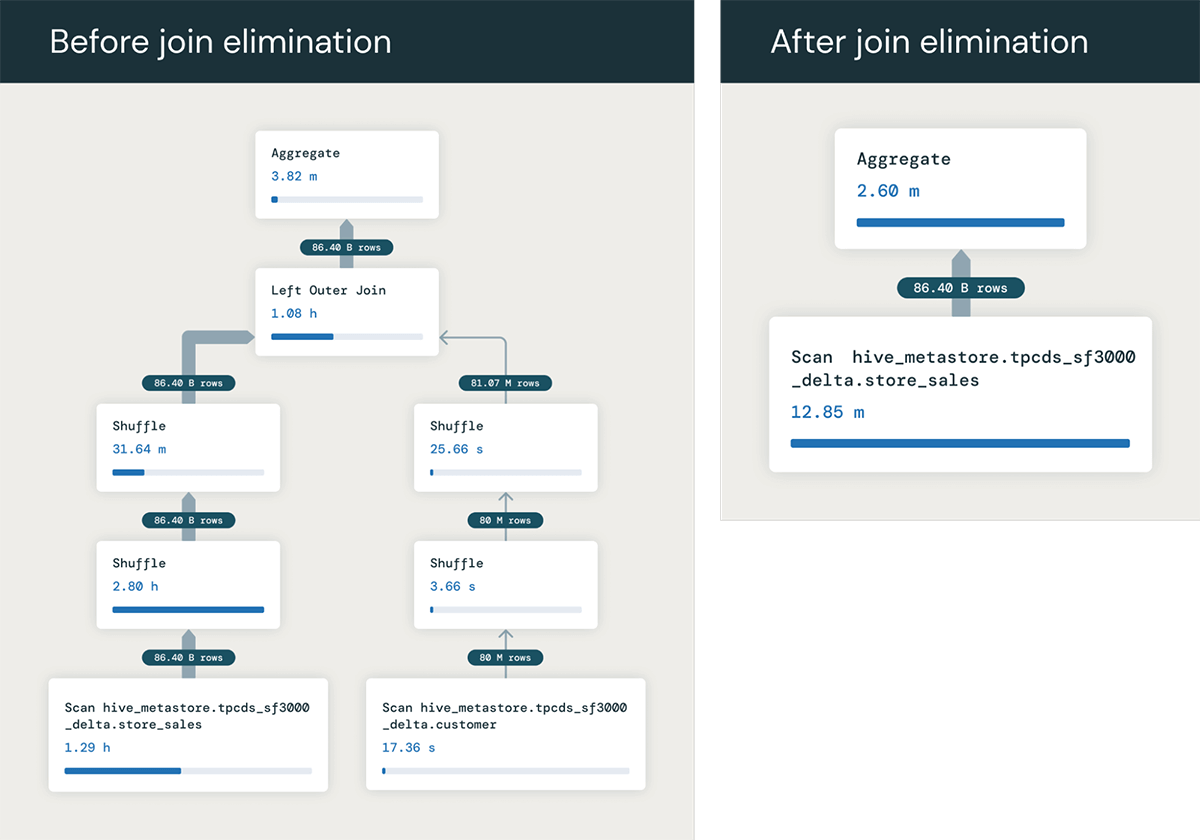

One other very helpful optimization we are able to carry out with RELY major keys is eliminating pointless joins. If a question joins a desk that isn’t referenced anyplace besides within the be part of situation, then the optimizer can decide that the be part of is pointless, and take away the be part of from the question plan.

To present an instance, as an instance we now have a question becoming a member of two tables, store_sales and buyer, joined on the first key of the shopper desk PRIMARY KEY (c_customer_sk) RELY.

SELECT SUM(ss_quantity)

FROM store_sales ss

LEFT JOIN buyer c

ON ss_customer_sk = c_customer_sk;If we did not have the first key, every row of store_sales may probably match a number of rows in buyer, and we might have to execute the be part of to compute the right SUM worth. However as a result of the desk buyer is joined on its major key, we all know that the be part of will output one row for every row of store_sales.

So the question solely truly wants the column ss_quantity from the actual fact desk store_sales. Subsequently, the question optimizer can completely remove the be part of from the question, reworking it into:

SELECT SUM(ss_quantity)

FROM store_sales ssThis runs a lot sooner by avoiding your entire be part of – on this instance we observe the optimization velocity up the question from 1.5 minutes to six seconds!. And the advantages might be even bigger when the be part of entails many tables that may be eradicated!

You could ask, why would anybody run a question like this? It is truly far more widespread than you would possibly suppose! One widespread purpose is that customers assemble views that be part of collectively a number of tables, equivalent to becoming a member of collectively many truth and dimension tables. They write queries over these views which regularly use columns from solely among the tables, not all – and so the optimizer can remove the joins in opposition to the tables that are not wanted in every question. This sample can also be widespread in lots of Enterprise Intelligence (BI) instruments, which regularly generate queries becoming a member of many tables in a schema even when a question solely makes use of columns from among the tables.

Conclusion

Since its public preview, over 2600 + Databricks clients have used major key and international key constraints. Right now, we’re excited to announce the final availability of this function, marking a brand new stage in our dedication to enhancing knowledge administration and integrity in Databricks.

Moreover, Databricks now takes benefit of key constraints with the RELY choice to optimize queries, equivalent to by eliminating pointless aggregates and joins, leading to a lot sooner question efficiency.

[ad_2]