Forward-looking: Nvidia will showcase its Blackwell tech stack at Hot Chips 2024, with pre-event demonstrations this weekend and at the main event next week. It is an exciting time for Nvidia enthusiasts, who will get an in-depth look at some of Team Green’s latest technology. However, what remains unspoken are the potential delays reported for the Blackwell GPUs, which could affect the timelines of some of these products.

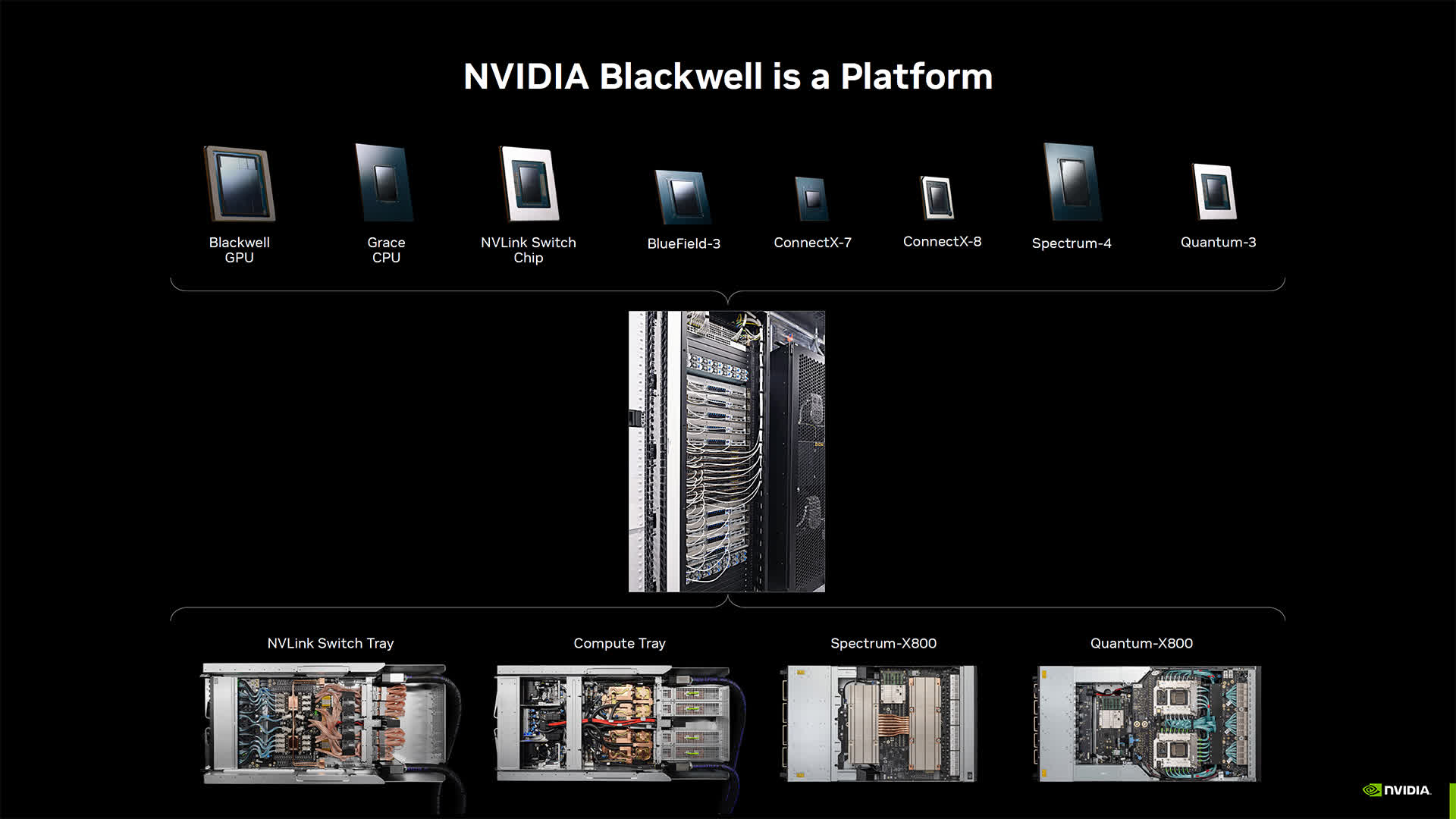

Nvidia is set to redefine the AI landscape with its Blackwell platform, positioning it as a comprehensive ecosystem that goes beyond traditional GPU capabilities. At the Hot Chips 2024 conference, Nvidia will showcase the setup and configuration of its Blackwell servers, as well as the integration of various advanced components.

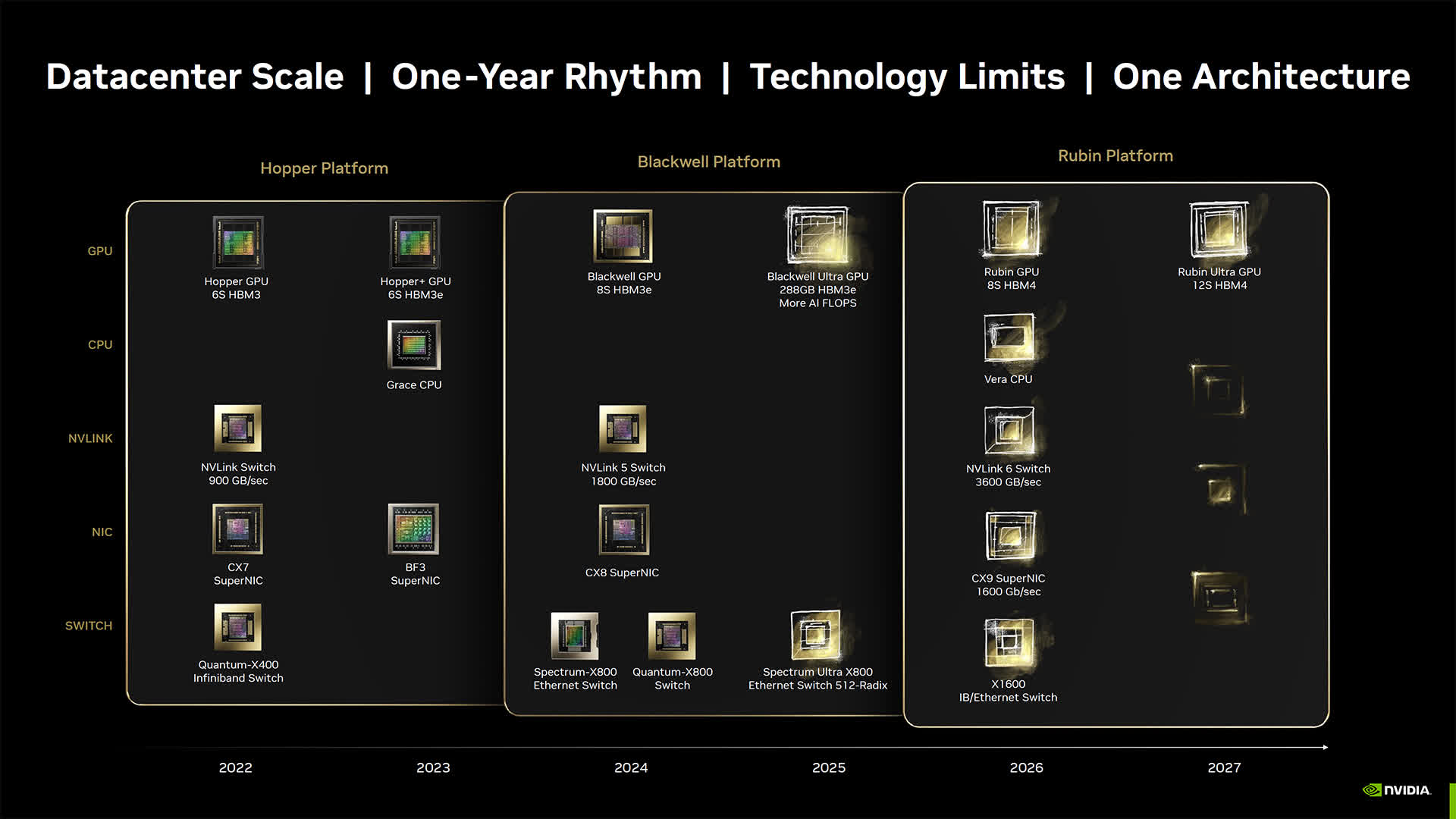

Many of Nvidia’s upcoming presentations will cover familiar territory, including its data center and AI strategies, along with the Blackwell roadmap. This roadmap outlines the release of the Blackwell Ultra next year, followed by Vera CPUs and Rubin GPUs in 2026, and the Vera Ultra in 2027. Nvidia had already shared this roadmap at Computex last June.

For tech enthusiasts eager to dive deep into the Nvidia Blackwell stack and its evolving use cases, Hot Chips 2024 will provide an opportunity to explore Nvidia’s latest advancements in AI hardware, liquid cooling innovations, and AI-driven chip design.

One of the key presentations will offer an in-depth look at the Nvidia Blackwell platform, which consists of multiple Nvidia components, including the Blackwell GPU, Grace CPU, BlueField data processing unit, ConnectX network interface card, NVLink Switch, Spectrum Ethernet switch, and Quantum InfiniBand switch.

Additionally, Nvidia will unveil its Quasar Quantization System, which merges algorithmic advancements, Nvidia software libraries, and Blackwell’s second-generation Transformer Engine to enhance FP4 LLM operations. This development promises significant bandwidth savings while maintaining the high-performance standards of FP16, representing a major leap in data processing efficiency.
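To give a rough sense of where the bandwidth savings come from, the sketch below compares the raw storage needed for model weights at 16 bits and at 4 bits per value. It is only back-of-the-envelope arithmetic: the 70-billion-parameter model size and the `weight_bytes` helper are illustrative assumptions, not figures or APIs from Nvidia, and the calculation ignores the scaling-factor metadata that real low-precision formats carry.

```python
def weight_bytes(num_params: int, bits_per_weight: int) -> int:
    """Bytes needed to store num_params weights at the given bit width."""
    total_bits = num_params * bits_per_weight
    return (total_bits + 7) // 8  # round up to a whole number of bytes


# Hypothetical model size, chosen only for illustration (not from the article).
params = 70_000_000_000

fp16 = weight_bytes(params, 16)
fp4 = weight_bytes(params, 4)

# Fewer bytes per weight means fewer bytes moved from memory per token.
print(f"FP16: {fp16 / 1e9:.0f} GB, FP4: {fp4 / 1e9:.0f} GB, ratio: {fp16 / fp4:.0f}x")
```

Under these assumptions the weights shrink from 140 GB to 35 GB, a 4x reduction in data that has to be moved; whether accuracy holds up at that precision is the part the Quasar system is meant to address.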

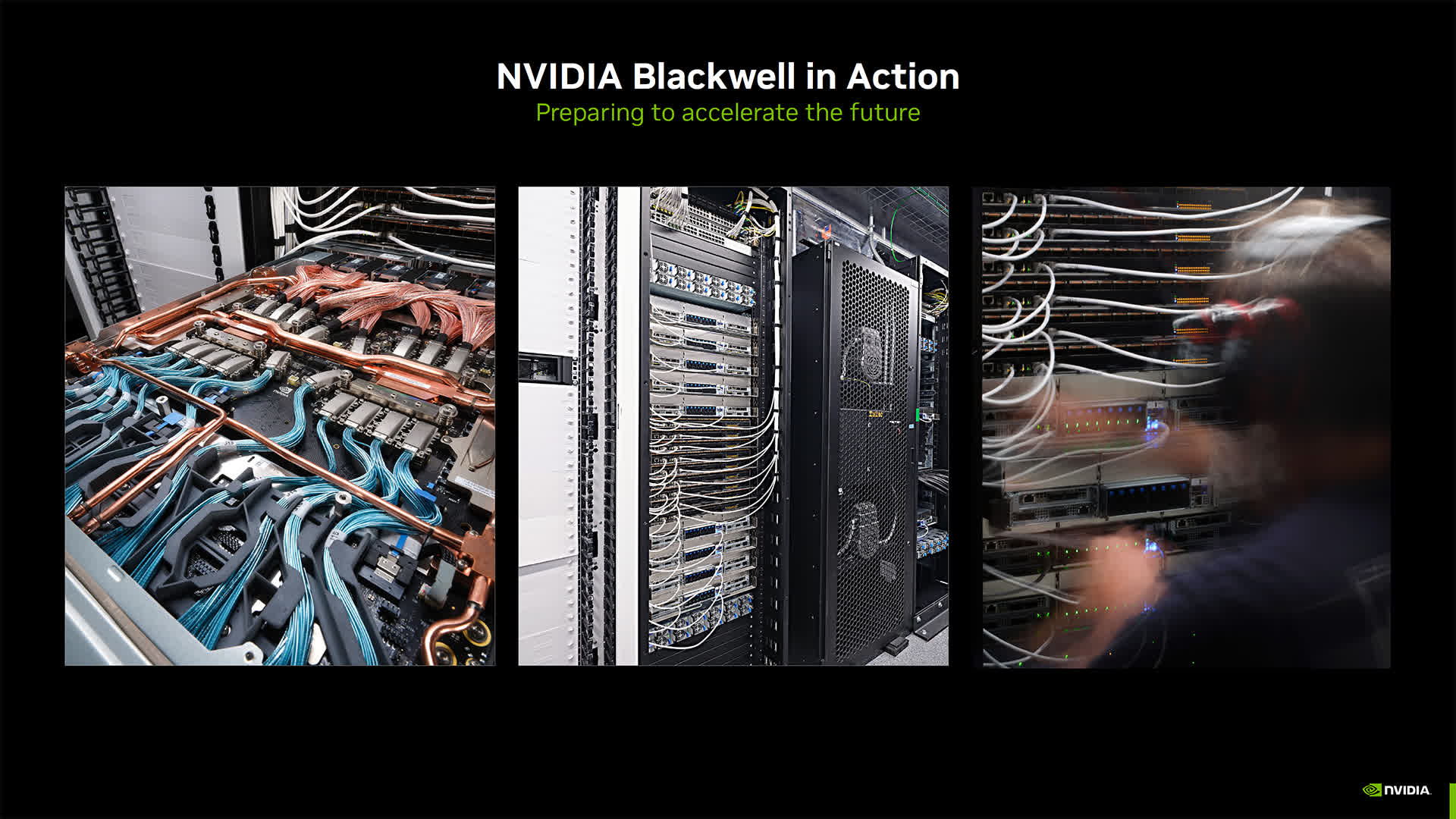

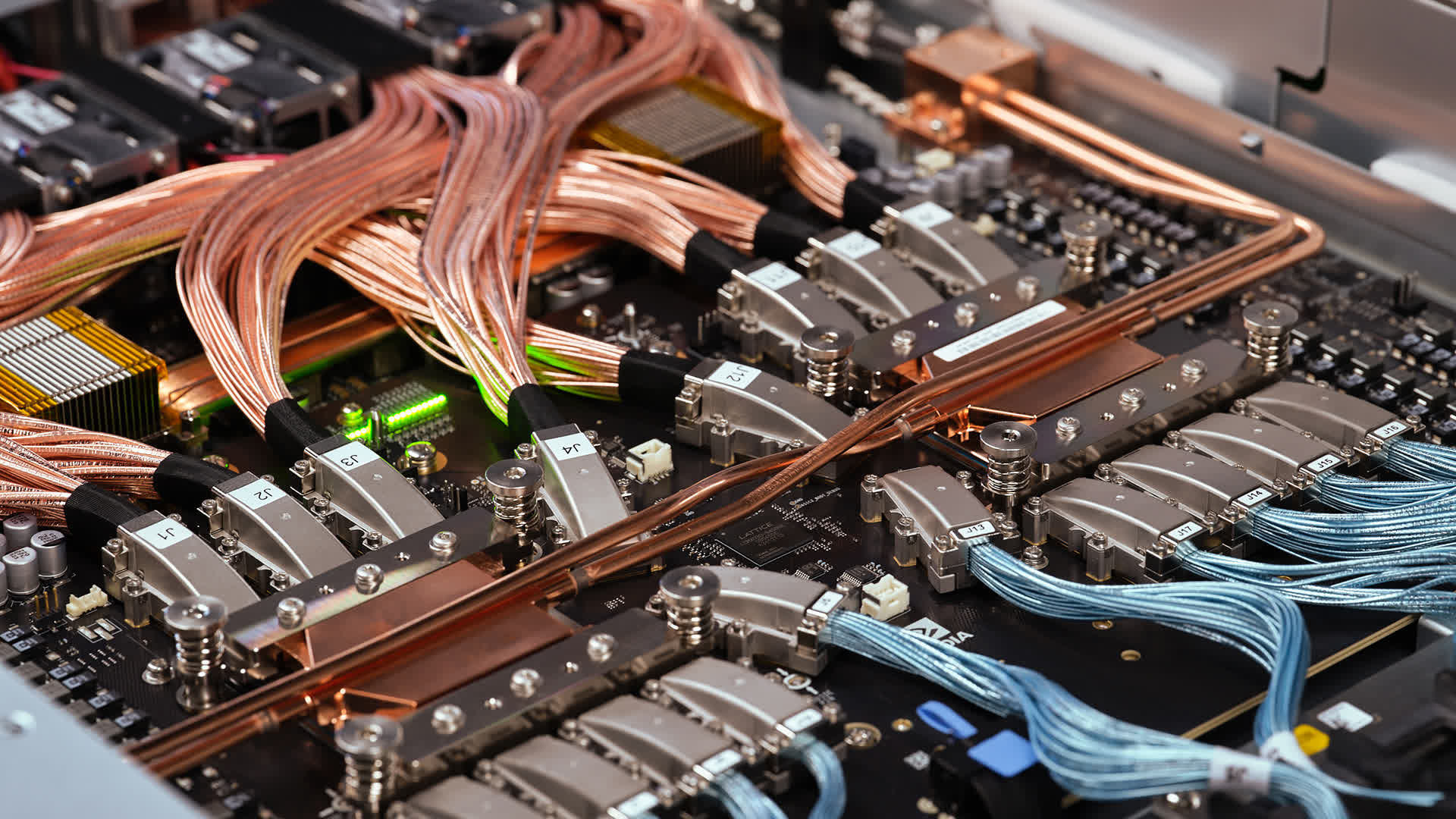

Another focal point will be the Nvidia GB200 NVL72, a multi-node, liquid-cooled system featuring 72 Blackwell GPUs and 36 Grace CPUs. Attendees will also explore the NVLink interconnect technology, which facilitates GPU communication with exceptional throughput and low-latency inference.

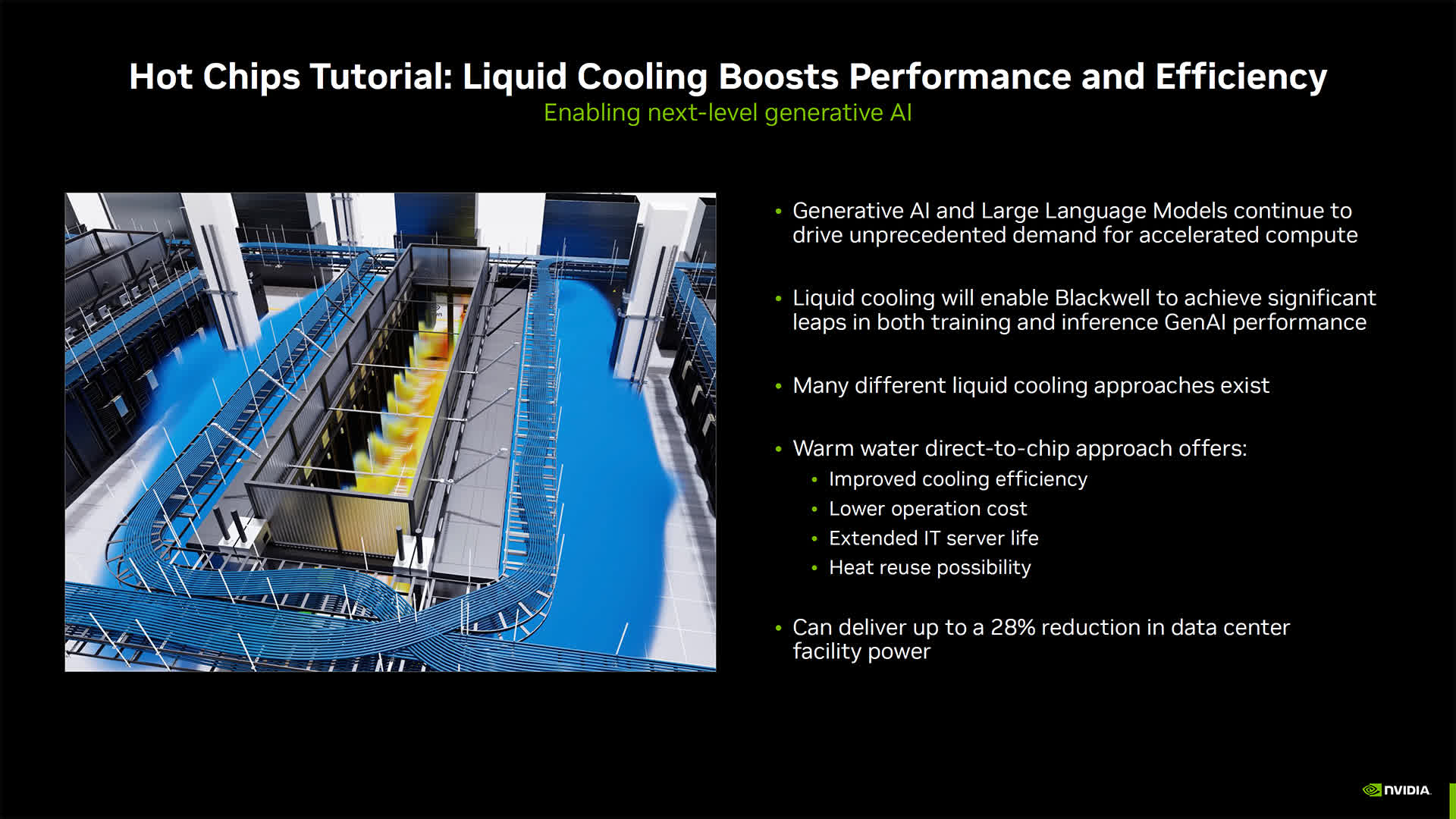

Nvidia’s progress in data center cooling will also be a topic of discussion. The company is investigating the use of warm water liquid cooling, a method that could reduce power consumption by up to 28%. This technique not only cuts energy costs but also eliminates the need for below-ambient cooling hardware, which Nvidia hopes will position it as a frontrunner in sustainable tech solutions.

In line with these efforts, Nvidia’s involvement in the COOLERCHIPS program, a U.S. Department of Energy initiative aimed at advancing cooling technologies, will be highlighted. Through this project, Nvidia is using its Omniverse platform to develop digital twins that simulate energy consumption and cooling efficiency.

In another session, Nvidia will discuss its use of agent-based AI systems capable of autonomously executing tasks for chip design. Examples of AI agents in action will include timing report analysis, cell cluster optimization, and code generation. Notably, the cell cluster optimization work was recently recognized as the best paper at the inaugural IEEE International Workshop on LLM-Aided Design.
