MongoDB’s Benefits & Disadvantages

MongoDB has comprehensive aggregation capabilities. You can run many analytic queries on MongoDB without exporting your data to a third-party tool. However, these aggregation queries are frequently CPU-intensive and can block or delay the execution of other queries. For example, Online Transactional Processing (OLTP) queries are usually short read operations that directly affect the user experience. If an OLTP query is delayed because a read-heavy aggregation query is running on your MongoDB cluster, your users will experience a slowdown. That is never a good thing.

These delays can be avoided by offloading heavy read operations, such as aggregations for analytics, to another layer and letting the MongoDB cluster handle only write and OLTP operations. In this setup, the MongoDB cluster no longer has to keep up with the analytic read requests. Offloading read operations to another database, such as PostgreSQL, is one option that accomplishes this. After explaining what PostgreSQL is, this article looks at how to offload read operations to it. We’ll also examine some of the tradeoffs that come with this choice.

What Is PostgreSQL?

PostgreSQL is an open-source relational database that has been around for almost three decades.

PostgreSQL has been gaining a lot of traction recently because it offers both RDBMS-like and NoSQL-like features: data can be stored in traditional rows and columns, with the option to store complete JSON objects as well.

PostgreSQL provides dedicated operators, such as `->`, `->>` and `@>`, for querying key-value pairs inside JSON objects. This capability lets PostgreSQL serve as a document database as well. Like MongoDB, it supports JSON documents. Unlike MongoDB, however, it uses SQL to query even the JSON documents, which lets seasoned data engineers write ad hoc queries when required.
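As a rough illustration of those operators, the sketch below translates a simple MongoDB equality filter into a parameterized PostgreSQL query that uses the JSONB containment operator `@>`. It assumes a hypothetical table `orders` with a JSONB column `doc` that holds each MongoDB document, and psycopg-style `%s` placeholders. The function name `mongo_eq_filter_to_sql` is also made up for this example, and the function only handles top-level scalar equality.

```python
import json


def mongo_eq_filter_to_sql(table, mongo_filter):
    """Translate a simple MongoDB equality filter into a PostgreSQL JSONB query.

    Hypothetical helper: only top-level fields with scalar values are supported.
    Operators such as $gt or $in, and nested or array values, are rejected
    instead of being translated approximately.
    """
    for key, value in mongo_filter.items():
        if key.startswith("$") or isinstance(value, (dict, list)):
            raise ValueError(f"unsupported filter clause: {key!r}")
    # '@>' is PostgreSQL's JSONB containment operator: it matches rows whose
    # document contains every key/value pair of the right-hand JSON object.
    # 'table' is interpolated directly, so it must come from trusted code.
    sql = f"SELECT doc FROM {table} WHERE doc @> %s::jsonb"
    return sql, [json.dumps(mongo_filter)]


sql, params = mongo_eq_filter_to_sql("orders", {"status": "shipped"})
print(sql)     # SELECT doc FROM orders WHERE doc @> %s::jsonb
print(params)  # ['{"status": "shipped"}']
```

A GIN index on the `doc` column lets PostgreSQL answer containment queries like this without scanning every row.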

Unlike MongoDB, PostgreSQL also lets you store data in a more traditional row-and-column arrangement. In this mode, PostgreSQL acts as a traditional RDBMS with powerful features such as joins.

This ability to act as both an RDBMS and a JSON document store makes PostgreSQL a good companion to MongoDB for offloading read operations.

Connecting PostgreSQL to MongoDB

MongoDB’s oplog maintains a log of every operation that modifies data. You can use it to follow all of the changes to the data in MongoDB and to replicate that data in another database, such as PostgreSQL, so that the same data is available elsewhere for read operations. MongoDB itself uses the oplog to replicate data between the members of a replica set. For that reason, it is the simplest and most direct way to replicate MongoDB data outside of MongoDB.
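The following sketch shows the core of such a replication service: turning individual oplog entries into SQL statements. It makes several assumptions:

- The target is a hypothetical PostgreSQL table `orders (id TEXT PRIMARY KEY, doc JSONB NOT NULL)`.
- The entries have already been filtered to a single collection.
- The driver uses psycopg-style placeholders.
- Entries have the commonly documented shape, with `op`, `o` and `o2` fields.

The exact entry format differs across MongoDB versions (newer servers, for example, record field-level updates in a `"$v": 2` diff format), so treat the field handling as something to verify against your server. Operator-style updates are deliberately left untranslated.

```python
import json

# Hypothetical target table, one row per MongoDB document:
#   CREATE TABLE orders (id TEXT PRIMARY KEY, doc JSONB NOT NULL);
UPSERT_SQL = (
    "INSERT INTO orders (id, doc) VALUES (%s, %s::jsonb) "
    "ON CONFLICT (id) DO UPDATE SET doc = EXCLUDED.doc"
)
DELETE_SQL = "DELETE FROM orders WHERE id = %s"


def oplog_entry_to_sql(entry):
    """Map one oplog entry to a (sql, params) pair, or None if it should be skipped."""
    op = entry["op"]
    if op == "i":
        # Insert: 'o' holds the complete new document.
        doc = entry["o"]
    elif op == "d":
        # Delete: 'o' holds only the _id of the removed document.
        return DELETE_SQL, [str(entry["o"]["_id"])]
    elif op == "u":
        update = entry["o"]
        if any(key.startswith("$") for key in update):
            # Operator-style updates ($set, or the "$v"/"diff" format of newer
            # servers) are not translated here; re-reading the document from
            # MongoDB by _id is one simple fallback.
            raise NotImplementedError("operator update: re-fetch document by _id")
        # Replacement: 'o2' identifies the document, 'o' is its new body.
        doc = {**update, "_id": entry["o2"]["_id"]}
    else:
        # 'n' (no-op), 'c' (command) and other entry types are ignored here.
        return None
    # Upserting (rather than a plain INSERT) makes replaying an entry harmless.
    # default=str is a crude fallback for BSON types such as ObjectId or datetime.
    return UPSERT_SQL, [str(doc["_id"]), json.dumps(doc, default=str)]
```

Keeping the translation a pure function, separate from the code that reads the oplog and talks to PostgreSQL, makes it easy to unit-test against recorded oplog entries.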

If you already have data in MongoDB and want it replicated in PostgreSQL, export the complete database as JSON documents. Then, write a simple service that reads these JSON files and writes their data to PostgreSQL in the required format. If you start this replication while MongoDB is still empty, no initial migration is necessary and you can skip this step.
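For the initial load, one common route is MongoDB’s `mongoexport` tool. By default it writes one JSON document per line, with ObjectIds in Extended JSON form (`{"$oid": "..."}`). The sketch below reads such a file and groups the rows into batches. The `(id, json_text)` row shape assumes a hypothetical table with a text `id` column and a JSONB `doc` column, and the helper names are illustrative.

```python
import json


def rows_from_export(lines):
    """Yield (id, json_text) rows from a mongoexport file with one document per line."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines
        doc = json.loads(line)
        raw_id = doc["_id"]
        # ObjectIds are exported in Extended JSON form: {"$oid": "<hex>"}.
        # Unwrap them so the stored JSON holds a plain string; other Extended
        # JSON types (e.g. {"$date": ...}) are left as exported in this sketch.
        if isinstance(raw_id, dict) and "$oid" in raw_id:
            doc["_id"] = raw_id["$oid"]
        yield str(doc["_id"]), json.dumps(doc)


def batched(rows, size=1000):
    """Group rows into lists of at most 'size' items for bulk inserts."""
    batch = []
    for row in rows:
        batch.append(row)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch
```

With psycopg, each batch could be passed to `cursor.executemany(...)` together with an `INSERT ... ON CONFLICT` statement. Inserting in batches keeps memory use flat for large exports and reduces round trips to the database.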

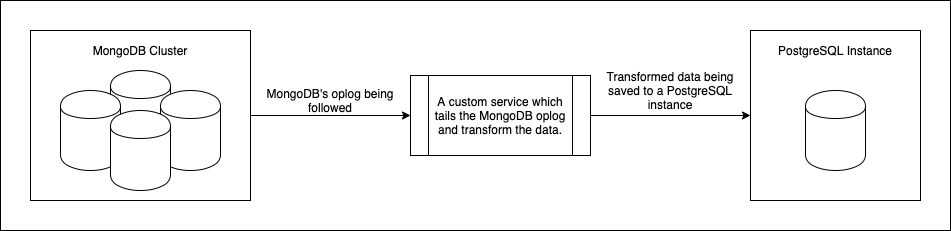

After you’ve migrated the existing data to PostgreSQL, you’ll need to write a service that creates a data pipeline from MongoDB to PostgreSQL. This service follows the MongoDB oplog and applies in PostgreSQL the same operations that ran in MongoDB, similar to the process shown in Figure 1. Every change to the data stored in MongoDB is recorded in the oplog; the service reads it from there and applies it to the data in PostgreSQL. To avoid missing writes that happen during the initial export, note the oplog position before the export starts and have the service begin reading from that point. Some operations may then be applied twice, so the writes to PostgreSQL should be idempotent.

Figure 1: A data pipeline that continuously copies data from MongoDB to PostgreSQL
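A minimal sketch of the service’s main loop is shown below. It assumes that:

- Entries arrive in oplog order.
- `apply` is a placeholder for whatever writes one change to PostgreSQL.
- The oplog’s `ts` field is modelled as a `(seconds, increment)` tuple.

The function name `replicate` is hypothetical. The point is the checkpoint: after a restart, already-applied entries are skipped.

```python
from typing import Callable, Iterable, Optional, Tuple

# The oplog's 'ts' field is a BSON Timestamp; here it is modelled as a
# (seconds, increment) tuple, which compares in the same order.
Timestamp = Tuple[int, int]


def replicate(
    entries: Iterable[dict],
    apply: Callable[[dict], None],
    last_applied: Optional[Timestamp] = None,
) -> Optional[Timestamp]:
    """Apply oplog entries in order, skipping those already applied.

    Returns the timestamp of the last applied entry, i.e. the new checkpoint.
    """
    for entry in entries:
        ts = entry["ts"]
        if last_applied is not None and ts <= last_applied:
            continue  # already replicated before a restart
        apply(entry)  # write the change to PostgreSQL
        # A real pipeline should persist this checkpoint durably, ideally in
        # the same PostgreSQL transaction as the change itself.
        last_applied = ts
    return last_applied
```

On restart, the service would load the saved checkpoint and resume reading the oplog from that timestamp. Changes made while it was down are therefore not lost, as long as they are still in the oplog.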

Schema Choices in PostgreSQL

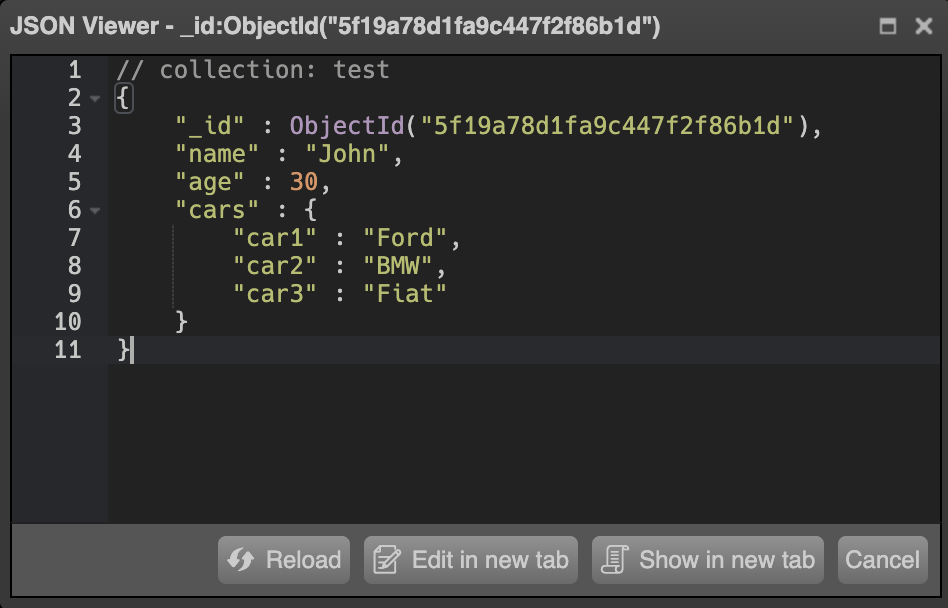

You now have to decide how you’ll store the data in PostgreSQL, since the data from MongoDB will arrive as JSON documents, as shown in Figure 2.

Figure 2: An example of data stored in MongoDB

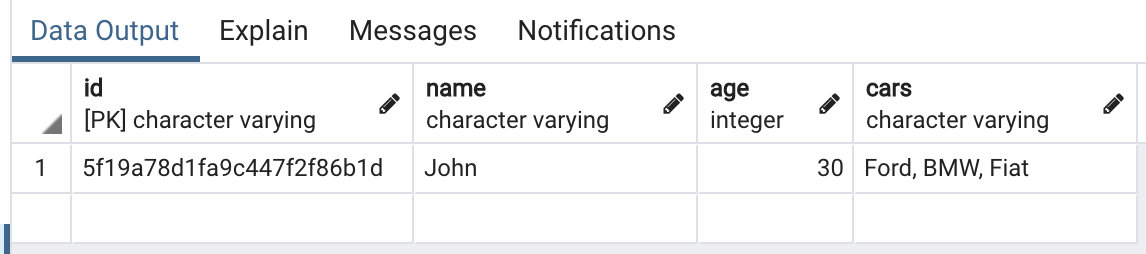

On the PostgreSQL side, you have two options. You can either store the complete JSON object in a column, or you can transform the data into rows and columns and store it the traditional way, as shown in Figure 3. Base this decision on the requirements of your application; there is no single right or wrong approach here. PostgreSQL can query both JSON columns and traditional rows and columns.

Figure 3: An example of data stored in PostgreSQL in tabular format
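If you choose the tabular layout, nested sub-documents have to be mapped onto flat columns. One simple convention, sketched below as a hypothetical `flatten` helper, joins nested keys with underscores. The sample document is made up for illustration.

```python
def flatten(doc, prefix=""):
    """Flatten nested sub-documents into column-style keys joined with '_'.

    Arrays are left as-is; they usually belong in a separate child table.
    """
    row = {}
    for key, value in doc.items():
        column = f"{prefix}{key}"
        if isinstance(value, dict):
            row.update(flatten(value, prefix=f"{column}_"))
        else:
            row[column] = value
    return row


order = {"_id": 1, "customer": {"name": "Ada", "address": {"city": "London"}}, "total": 9.5}
print(flatten(order))
# {'_id': 1, 'customer_name': 'Ada', 'customer_address_city': 'London', 'total': 9.5}
```

Each key of the result then maps to a column. Name collisions need a different separator or an explicit field-to-column mapping. For example, a top-level `customer_name` field and a nested `customer.name` would both flatten to `customer_name`.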

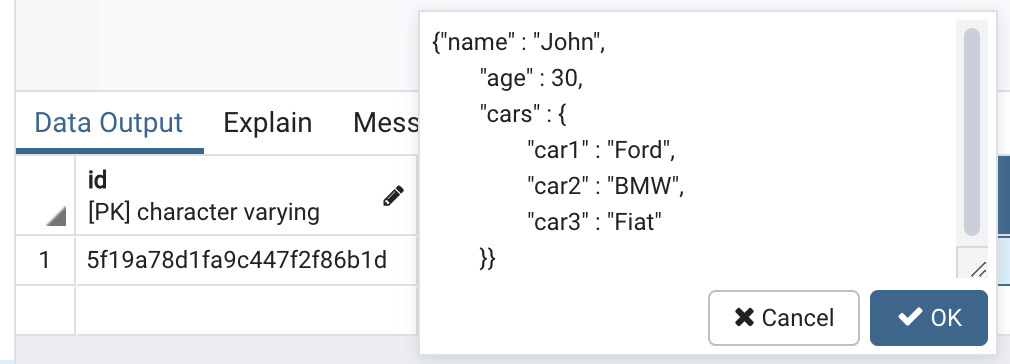

Once your migration service has the oplog data, it can transform that data according to your business needs. You can split one JSON document from MongoDB into multiple rows and columns, or even multiple tables, in PostgreSQL. Alternatively, you can copy the whole JSON document into one column of a single table, as shown in Figure 4. Which approach you take depends on how you plan to query the data later on.

Figure 4: An example of data stored in PostgreSQL as a JSON column
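Splitting one document across several tables typically means one parent row plus one child row per array element. The sketch below assumes a hypothetical order document with an `items` array, and hypothetical `orders` and `order_items` tables. It only builds the row dictionaries and leaves the actual inserts to your database driver.

```python
def split_order(doc):
    """Split a hypothetical order document into an 'orders' row and 'order_items' rows."""
    order_id = str(doc["_id"])
    order_row = {
        "id": order_id,
        "customer": doc.get("customer"),
        "status": doc.get("status"),
    }
    # One child row per array element; line_no keeps the original array order
    # and, together with order_id, can serve as the child table's primary key.
    item_rows = [
        {"order_id": order_id, "line_no": n, "sku": item["sku"], "qty": item["qty"]}
        for n, item in enumerate(doc.get("items", []), start=1)
    ]
    return order_row, item_rows


doc = {"_id": "o1", "customer": "ada", "status": "new",
       "items": [{"sku": "A", "qty": 2}, {"sku": "B", "qty": 1}]}
order_row, item_rows = split_order(doc)
```

Because one MongoDB write now touches several tables, the replication service should apply each change in a single transaction. For instance, it could delete an order’s existing `order_items` rows and re-insert them, so readers never see a half-updated order.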

Getting Data Ready for Querying in PostgreSQL

Now that your data is being replicated and continuously updated in PostgreSQL, you need to make sure it’s ready to take over read operations. To do so, work out which indexes to create by looking at your queries. Make sure the fields, and combinations of fields, that those queries filter and sort on are covered by indexes. A composite index can also serve queries that filter only on its leading columns, so you don’t need a separate index for every possible combination. With the right indexes in place, PostgreSQL can answer read queries efficiently. Once all of this is set up, you’re ready to route your read queries from MongoDB to PostgreSQL.
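For documents stored in a JSONB column, indexes are usually built on expressions that extract the queried fields. The hypothetical helper below generates a composite B-tree expression index over a set of top-level fields, assuming a JSONB column named `doc`. For a tabular layout you would index the plain columns instead.

```python
import re

_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def jsonb_index_ddl(table, fields):
    """Build CREATE INDEX DDL for a composite B-tree index over top-level JSONB fields."""
    for name in [table, *fields]:
        # Names are spliced into SQL text, so accept only plain identifiers.
        if not _IDENTIFIER.fullmatch(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    # Note: PostgreSQL truncates identifiers longer than 63 bytes.
    index_name = f"{table}_{'_'.join(fields)}_idx"
    # doc->>'field' extracts the field as text; every index expression
    # must be wrapped in its own parentheses.
    columns = ", ".join(f"(doc->>'{field}')" for field in fields)
    return f"CREATE INDEX IF NOT EXISTS {index_name} ON {table} ({columns})"


print(jsonb_index_ddl("orders", ["customer_id", "status"]))
# CREATE INDEX IF NOT EXISTS orders_customer_id_status_idx ON orders ((doc->>'customer_id'), (doc->>'status'))
```

PostgreSQL only uses an expression index when a query repeats the same expression, for example `WHERE doc->>'customer_id' = %s`. Column order also matters. An index on `(customer_id, status)` can serve queries that filter on `customer_id` alone, but generally not queries that filter only on `status`.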

The Advantages of Using PostgreSQL for Real-Time Reporting and Analytics

There are many advantages to using PostgreSQL to offload read operations from MongoDB. To begin with, you can leverage the power of SQL. Some third-party services do offer SQL on top of MongoDB. However, they often lack features that are essential either for MongoDB users or for SQL queries.

Another advantage, if you decide to transform your MongoDB data into rows and columns, is that you can split your data into multiple tables in PostgreSQL and store it in a more relational format. Doing so lets you use PostgreSQL’s native SQL queries instead of MongoDB’s query language. Once your data is split into multiple tables, you can join tables to do more with a single query. With joins and relational data, you can run complex SQL queries that perform a variety of aggregations. You can also create multiple indexes on your PostgreSQL tables to speed up read operations. Keep in mind that MongoDB has no elegant way to join collections: its $lookup aggregation stage can join them, but it is less convenient than a SQL join. This doesn’t mean that MongoDB aggregations are weak or missing features.

Once the complete pipeline into PostgreSQL is set up, you can switch all of your aggregation operations from MongoDB to PostgreSQL. At this point, your analytic queries won’t affect the performance of your primary MongoDB database, because you’ll have completely separate setups for analytic and transactional workloads.

The Disadvantages of Using PostgreSQL for Real-Time Reporting and Analytics

While there are many advantages to offloading your read operations to PostgreSQL, this decision also comes with a number of tradeoffs.

Complexity

To begin with, there’s the obvious new moving part in the architecture that you’ll have to build and maintain: the data pipeline that follows MongoDB’s oplog and replays it on the PostgreSQL side. If this one pipeline fails, replication to PostgreSQL stops, and the data in MongoDB and the data in PostgreSQL diverge. Because the oplog is a capped collection, a pipeline that stays down longer than the oplog’s time window cannot simply resume; it has to repeat the initial load. Depending on the volume of write operations in your MongoDB cluster, you may need to scale this pipeline so it doesn’t become a bottleneck. It can also become a single point of failure for your reporting and analytics.

Consistency

There can also be data consistency issues, because it takes anywhere from a few milliseconds to several seconds for changes in MongoDB to be replicated to PostgreSQL. This lag can easily grow to minutes if your MongoDB cluster handles a lot of write traffic.
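One way to keep an eye on this lag is to compare two timestamps: that of the newest entry in MongoDB’s oplog, and that of the last entry your pipeline applied. The sketch assumes both are available as `(seconds, increment)` pairs. The newest one could be read, for example, by querying `local.oplog.rs` sorted by `$natural` in descending order. The five-second threshold and the function names are arbitrary placeholders.

```python
def replication_lag_seconds(newest_oplog_ts, last_applied_ts):
    """Whole seconds by which PostgreSQL trails MongoDB, measured on oplog timestamps.

    Comparing against the newest oplog entry rather than the wall clock avoids
    reporting lag when MongoDB is simply receiving no writes.
    """
    return max(0, newest_oplog_ts[0] - last_applied_ts[0])


def lag_status(newest_oplog_ts, last_applied_ts, max_lag_seconds=5):
    """Return 'ok' or 'stale' so dashboards or alerts can act on the lag."""
    lag = replication_lag_seconds(newest_oplog_ts, last_applied_ts)
    return "stale" if lag > max_lag_seconds else "ok"


print(replication_lag_seconds((1_700_000_010, 3), (1_700_000_004, 1)))  # 6
print(lag_status((1_700_000_010, 3), (1_700_000_004, 1)))  # stale
```

Reports that must not show stale data could check this status before running, or display the current lag next to their results.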

Because your read layer, PostgreSQL, is primarily an RDBMS, it might not be the best fit for every application. For example, applications that process data from a variety of sources may end up with a tabular structure in some tables and JSON columns in others. In these situations, some of the RDBMS features you were counting on, such as joins, can become awkward, since joining a JSON field to a typed column means extracting and casting values. In addition, offloading reads to PostgreSQL might not be the best choice when your data is highly unstructured. In that case, you’ll simply end up replicating the lack of structure in PostgreSQL as well.

Scalability

Finally, keep in mind that PostgreSQL was not designed to be a distributed database. Core PostgreSQL has no built-in way to shard your data across multiple nodes. Read replicas can spread query load, but each one holds a full copy of the data. If your data reaches the storage limits of your node, you’ll have to scale up vertically by adding storage to that node, rather than adding commodity nodes to a cluster. This limitation might mean PostgreSQL isn’t your best option.

Before you decide to offload your read operations to PostgreSQL, or to any other SQL database for that matter, make sure that SQL and an RDBMS are good choices for your data.

Considerations for Offloading Read-Intensive Applications from MongoDB

If your application works mostly with relational data and SQL queries, offloading your read queries to PostgreSQL lets you take full advantage of SQL queries, aggregations, joins, and the other features described in this article. However, if your application deals with a lot of unstructured data from a variety of sources, this option might not be a good fit.

Decide early in the project whether you want to add an extra read-optimized layer. Otherwise, you’ll likely spend a significant amount of time and money creating indexes and migrating data from MongoDB to PostgreSQL at a later stage. A safe way to handle the migration is to move small pieces of your data to PostgreSQL and test the application’s performance. If it works as expected, continue migrating in small pieces until the entire project has been migrated.
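Moving small pieces at a time usually means routing only some reads to PostgreSQL while the rest still go to MongoDB. The hypothetical router below does this per collection, using a rollout percentage. It hashes a request key with `zlib.crc32` rather than Python’s built-in `hash`, which is randomized per process for strings, so the decision stays stable for the same key across restarts.

```python
import zlib


def route_read(collection, request_key, rollout):
    """Choose 'postgresql' or 'mongodb' for one read during a gradual migration.

    'rollout' maps a collection name to the percentage (0-100) of its reads
    that should go to PostgreSQL; unlisted collections stay on MongoDB.
    """
    percent = rollout.get(collection, 0)
    # Deterministic bucket in [0, 100) derived from the request key.
    bucket = zlib.crc32(request_key.encode("utf-8")) % 100
    return "postgresql" if bucket < percent else "mongodb"


rollout = {"orders": 10, "customers": 100}
backend = route_read("orders", "user-42", rollout)
```

Raising a collection’s percentage step by step, while comparing latency and results between the two backends, fits the incremental approach described above.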

If you’re collecting structured or semi-structured data that works well with PostgreSQL, offloading read operations to PostgreSQL is a great way to avoid impacting the performance of your primary MongoDB database.

Rockset & Elasticsearch: Alternatives for Offloading From MongoDB

You may have decided to offload reporting and analytics from MongoDB for the reasons discussed above, but have more complex scalability requirements or less structured data. In that case, you may want to consider other real-time databases, such as Elasticsearch and Rockset. Both are scale-out alternatives that allow schemaless data ingestion and use indexing to speed up analytics. Like PostgreSQL, Rockset also supports full-featured SQL, including joins.

