[ad_1]

Introduction

Apache Iceberg has not too long ago grown in reputation as a result of it provides information warehouse-like capabilities to your information lake making it simpler to investigate all of your information—structured and unstructured. It presents a number of advantages akin to schema evolution, hidden partitioning, time journey, and extra that enhance the productiveness of knowledge engineers and information analysts. Nonetheless, it’s essential recurrently preserve Iceberg tables to maintain them in a wholesome state in order that learn queries can carry out quicker. This weblog discusses just a few issues that you just may encounter with Iceberg tables and presents methods on find out how to optimize them in every of these situations. You may make the most of a mix of the methods offered and adapt them to your explicit use circumstances.

Downside with too many snapshots

Everytime a write operation happens on an Iceberg desk, a brand new snapshot is created. Over a time period this will trigger the desk’s metadata.json file to get bloated and the variety of outdated and probably pointless information/delete recordsdata current within the information retailer to develop, growing storage prices. A bloated metadata.json file might improve each learn/write instances as a result of a big metadata file must be learn/written each time. Commonly expiring snapshots is really useful to delete information recordsdata which can be now not wanted, and to maintain the scale of desk metadata small. Expiring snapshots is a comparatively low cost operation and makes use of metadata to find out newly unreachable recordsdata.

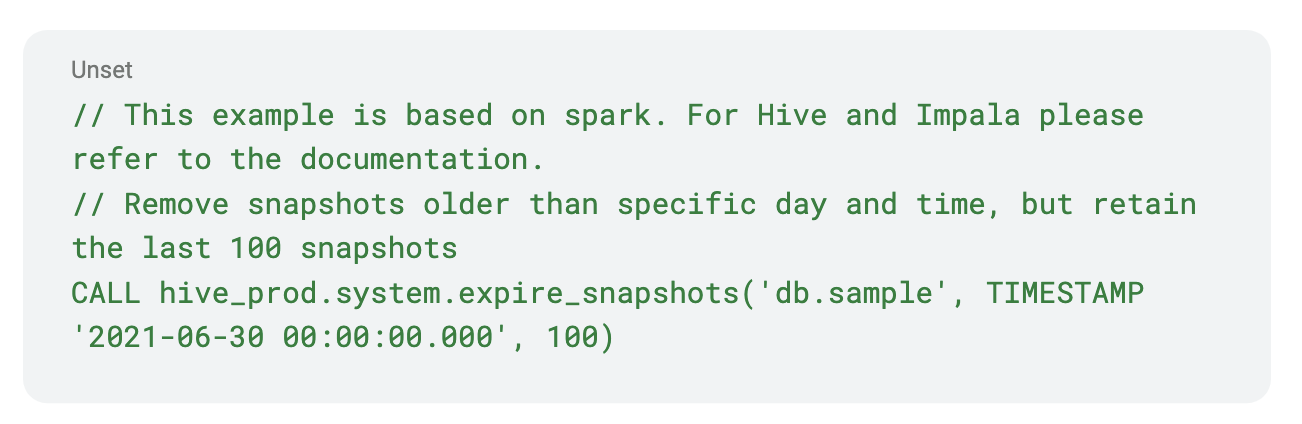

Resolution: expire snapshots

We will expire outdated snapshots utilizing expire_snapshots

Downside with suboptimal manifests

Over time the snapshots may reference many manifest recordsdata. This might trigger a slowdown in question planning and improve the runtime of metadata queries. Moreover, when first created the manifests could not lend themselves properly to partition pruning, which will increase the general runtime of the question. However, if the manifests are properly organized into discrete bounds of partitions, then partition pruning can prune away whole subtrees of knowledge recordsdata.

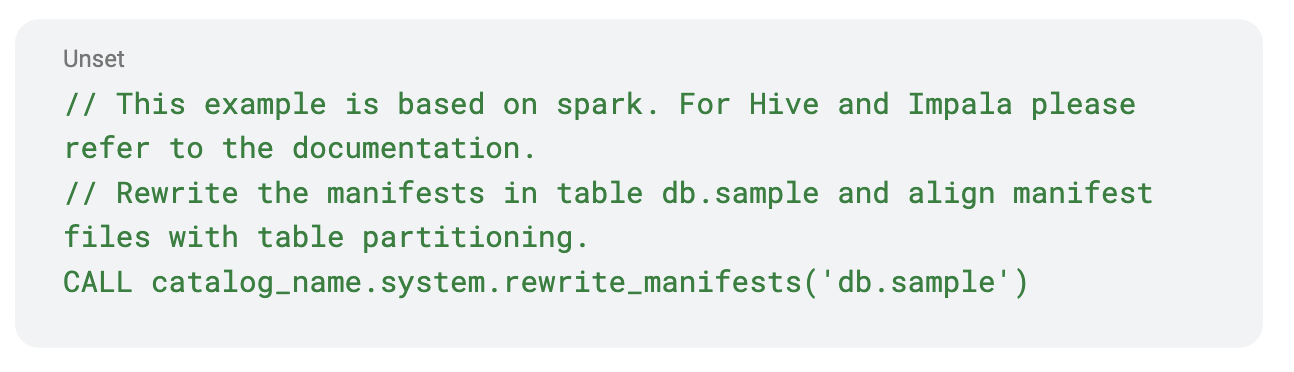

Resolution: rewrite manifests

We will remedy the too many manifest recordsdata drawback with rewrite_manifests and probably get a well-balanced hierarchical tree of knowledge recordsdata.

Downside with delete recordsdata

Background

merge-on-read vs copy-on-write

Since Iceberg V2, at any time when current information must be up to date (through delete, replace, or merge statements), there are two choices accessible: copy-on-write and merge-on-read. With the copy-on-write choice, the corresponding information recordsdata of a delete, replace, or merge operation can be learn and fully new information recordsdata can be written with the required write modifications. Iceberg doesn’t delete the outdated information recordsdata. So if you wish to question the desk earlier than the modifications have been utilized you should utilize the time journey characteristic of Iceberg. In a later weblog, we’ll go into particulars about find out how to make the most of the time journey characteristic. In the event you determined that the outdated information recordsdata aren’t wanted any extra then you’ll be able to eliminate them by expiring the older snapshot as mentioned above.

With the merge-on-read choice, as an alternative of rewriting the whole information recordsdata in the course of the write time, merely a delete file is written. This may be an equality delete file or a positional delete file. As of this writing, Spark doesn’t write equality deletes, however it’s able to studying them. The benefit of utilizing this selection is that your writes will be a lot faster as you aren’t rewriting a complete information file. Suppose you wish to delete a selected consumer’s information in a desk due to GDPR necessities, Iceberg will merely write a delete file specifying the areas of the consumer information within the corresponding information recordsdata the place the consumer’s information exist. So at any time when you might be studying the tables, Iceberg will dynamically apply these deletes and current a logical desk the place the consumer’s information is deleted though the corresponding data are nonetheless current within the bodily information recordsdata.

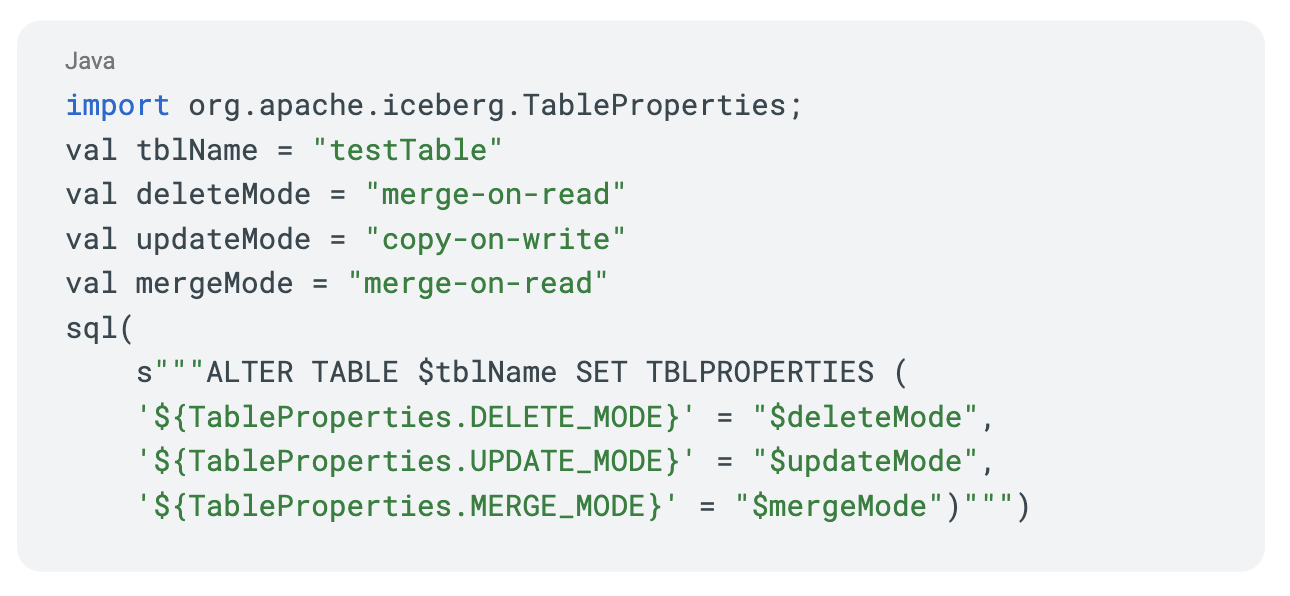

We allow the merge-on-read choice for our clients by default. You may allow or disable them by setting the next properties primarily based in your necessities. See Write properties.

Serializable vs snapshot isolation

The default isolation assure offered for the delete, replace, and merge operations is serializable isolation. You would additionally change the isolation stage to snapshot isolation. Each serializable and snapshot isolation ensures present a read-consistent view of your information. Serializable Isolation is a stronger assure. For example, you’ve gotten an worker desk that maintains worker salaries. Now, you wish to delete all data comparable to workers with wage larger than $100,000. Let’s say this wage desk has 5 information recordsdata and three of these have data of workers with wage larger than $100,000. If you provoke the delete operation, the three recordsdata containing worker salaries larger than $100,000 are chosen, then in case your “delete_mode” is merge-on-read a delete file is written that factors to the positions to delete in these three information recordsdata. In case your “delete_mode” is copy-on-write, then all three information recordsdata are merely rewritten.

No matter the delete_mode, whereas the delete operation is going on, assume a brand new information file is written by one other consumer with a wage larger than $100,000. If the isolation assure you selected is snapshot, then the delete operation will succeed and solely the wage data comparable to the unique three information recordsdata are eliminated out of your desk. The data within the newly written information file whereas your delete operation was in progress, will stay intact. However, in case your isolation assure was serializable, then your delete operation will fail and you’ll have to retry the delete from scratch. Relying in your use case you may wish to scale back your isolation stage to “snapshot.”

The issue

The presence of too many delete recordsdata will ultimately scale back the learn efficiency, as a result of in Iceberg V2 spec, everytime a knowledge file is learn, all of the corresponding delete recordsdata additionally have to be learn (the Iceberg group is at the moment contemplating introducing an idea referred to as “delete vector” sooner or later and which may work in another way from the present spec). This may very well be very pricey. The place delete recordsdata may include dangling deletes, as in it might need references to information which can be now not current in any of the present snapshots.

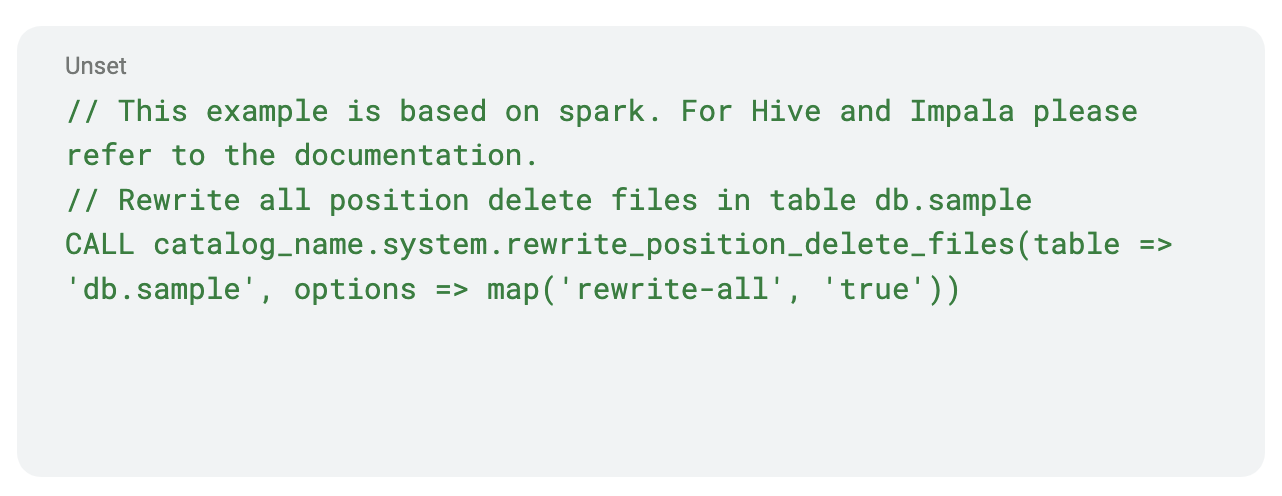

Resolution: rewrite place deletes

For place delete recordsdata, compacting the place delete recordsdata mitigates the issue a bit bit by decreasing the variety of delete recordsdata that have to be learn and providing quicker efficiency by higher compressing the delete information. As well as the process additionally deletes the dangling deletes.

Rewrite place delete recordsdata

Iceberg gives a rewrite place delete recordsdata process in Spark SQL.

However the presence of delete recordsdata nonetheless pose a efficiency drawback. Additionally, regulatory necessities may drive you to ultimately bodily delete the info moderately than do a logical deletion. This may be addressed by doing a serious compaction and eradicating the delete recordsdata totally, which is addressed later within the weblog.

Downside with small recordsdata

We sometimes wish to reduce the variety of recordsdata we’re touching throughout a learn. Opening recordsdata is expensive. File codecs like Parquet work higher if the underlying file dimension is giant. Studying extra of the identical file is cheaper than opening a brand new file. In Parquet, sometimes you need your recordsdata to be round 512 MB and row-group sizes to be round 128 MB. Throughout the write part these are managed by “write.target-file-size-bytes” and “write.parquet.row-group-size-bytes” respectively. You may wish to go away the Iceberg defaults alone until what you might be doing.

In Spark for instance, the scale of a Spark job in reminiscence will have to be a lot larger to succeed in these defaults, as a result of when information is written to disk, will probably be compressed in Parquet/ORC. So getting your recordsdata to be of the fascinating dimension just isn’t straightforward until your Spark job dimension is large enough.

One other drawback arises with partitions. Except aligned correctly, a Spark job may contact a number of partitions. Let’s say you’ve gotten 100 Spark duties and every of them wants to write down to 100 partitions, collectively they may write 10,000 small recordsdata. Let’s name this drawback partition amplification.

Resolution: use distribution-mode in write

The amplification drawback may very well be addressed at write time by setting the suitable write distribution mode in write properties. Insert distribution is managed by “write.distribution-mode” and is defaulted to none by default. Delete distribution is managed by “write.delete.distribution-mode” and is defaulted to hash, Replace distribution is managed by “write.replace.distribution-mode” and is defaulted to hash and merge distribution is managed by “write.merge.distribution-mode” and is defaulted to none.

The three write distribution modes which can be accessible in Iceberg as of this writing are none, hash, and vary. When your mode is none, no information shuffle happens. You need to use this mode solely if you don’t care in regards to the partition amplification drawback or when that every job in your job solely writes to a selected partition.

When your mode is ready to hash, your information is shuffled by utilizing the partition key to generate the hashcode so that every resultant job will solely write to a selected partition. When your distribution mode is vary, your information is distributed such that your information is ordered by the partition key or kind key if the desk has a SortOrder.

Utilizing the hash or vary can get tough as you are actually repartitioning the info primarily based on the variety of partitions your desk might need. This will trigger your Spark duties after the shuffle to be both too small or too giant. This drawback will be mitigated by enabling adaptive question execution in spark by setting “spark.sql.adaptive.enabled=true” (that is enabled by default from Spark 3.2). A number of configs are made accessible in Spark to regulate the habits of adaptive question execution. Leaving the defaults as is until precisely what you might be doing might be the best choice.

Regardless that the partition amplification drawback may very well be mitigated by setting appropriate write distribution mode applicable in your job, the resultant recordsdata might nonetheless be small simply because the Spark duties writing them may very well be small. Your job can not write extra information than it has.

Resolution: rewrite information recordsdata

To deal with the small recordsdata drawback and delete recordsdata drawback, Iceberg gives a characteristic to rewrite information recordsdata. This characteristic is at the moment accessible solely with Spark. The remainder of the weblog will go into this in additional element. This characteristic can be utilized to compact and even develop your information recordsdata, incorporate deletes from delete recordsdata comparable to the info recordsdata which can be being rewritten, present higher information ordering in order that extra information may very well be filtered immediately at learn time, and extra. It is among the strongest instruments in your toolbox that Iceberg gives.

RewriteDataFiles

Iceberg gives a rewrite information recordsdata process in Spark SQL.

See RewriteDatafiles JavaDoc to see all of the supported choices.

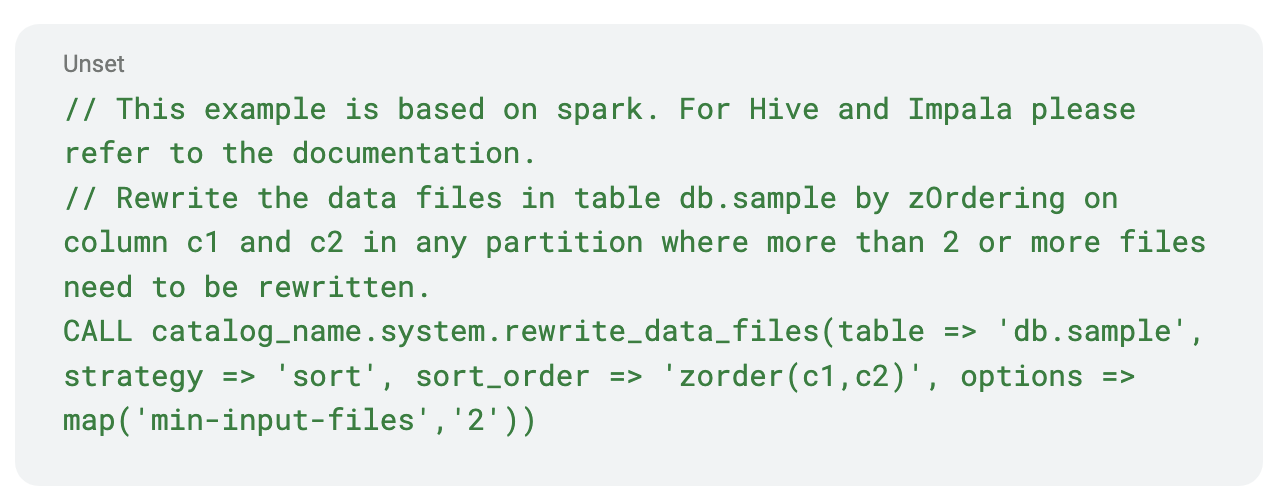

Now let’s focus on what the technique choice means as a result of it is very important perceive to get extra out of the rewrite information recordsdata process. There are three technique choices accessible. They’re Bin Pack, Kind, and Z Order. Notice that when utilizing the Spark process the Z Order technique is invoked by merely setting the sort_order to “zorder(columns…).”

Technique choice

- Bin Pack

- It’s the most cost-effective and quickest.

- It combines recordsdata which can be too small and combines them utilizing the bin packing strategy to scale back the variety of output recordsdata.

- No information ordering is modified.

- No information is shuffled.

- Kind

- Rather more costly than Bin Pack.

- Supplies whole hierarchical ordering.

- Learn queries solely profit if the columns used within the question are ordered.

- Requires information to be shuffled utilizing vary partitioning earlier than writing.

- Z Order

- Most costly of the three choices.

- The columns which can be getting used ought to have some sort of intrinsic clusterability and nonetheless must have a ample quantity of knowledge in every partition as a result of it solely helps in eliminating recordsdata from a learn scan, not from eliminating row teams. In the event that they do, then queries can prune quite a lot of information throughout learn time.

- It solely is smart if a couple of column is used within the Z order. If just one column is required then common kind is the higher choice.

- See https://weblog.cloudera.com/speeding-up-queries-with-z-order/ to be taught extra about Z ordering.

Commit conflicts

Iceberg makes use of optimistic concurrency management when committing new snapshots. So, after we use rewrite information recordsdata to replace our information a brand new snapshot is created. However earlier than that snapshot is dedicated, a test is completed to see if there are any conflicts. If a battle happens all of the work finished might probably be discarded. It is very important plan upkeep operations to attenuate potential conflicts. Allow us to focus on a few of the sources of conflicts.

- If solely inserts occurred between the beginning of rewrite and the commit try, then there are not any conflicts. It’s because inserts end in new information recordsdata and the brand new information recordsdata will be added to the snapshot for the rewrite and the commit reattempted.

- Each delete file is related to a number of information recordsdata. If a brand new delete file corresponding to an information file that’s being rewritten is added in future snapshot (B), then a battle happens as a result of the delete file is referencing a knowledge file that’s already being rewritten.

Battle mitigation

- In the event you can, strive pausing jobs that may write to your tables in the course of the upkeep operations. Or not less than deletes shouldn’t be written to recordsdata which can be being rewritten.

- Partition your desk in such a means that each one new writes and deletes are written to a brand new partition. For example, in case your incoming information is partitioned by date, all of your new information can go right into a partition by date. You may run rewrite operations on partitions with older dates.

- Make the most of the filter choice within the rewrite information recordsdata spark motion to finest choose the recordsdata to be rewritten primarily based in your use case in order that no delete conflicts happen.

- Enabling partial progress will assist save your work by committing teams of recordsdata previous to the whole rewrite finishing. Even when one of many file teams fails, different file teams might succeed.

Conclusion

Iceberg gives a number of options {that a} fashionable information lake wants. With a bit care, planning and understanding a little bit of Iceberg’s structure one can take most benefit of all of the superior options it gives.

To strive a few of these Iceberg options your self you’ll be able to sign up for considered one of our subsequent dwell hands-on labs.

You too can watch the webinar to be taught extra about Apache Iceberg and see the demo to be taught the newest capabilities.

[ad_2]