
If you've been following the world of industry-grade LLM technology for the last year, you've likely observed a plethora of frameworks and tools in production. Startups are building everything from Retrieval-Augmented Generation (RAG) automation to custom fine-tuning services. LangChain is perhaps the most well-known of these new frameworks, enabling easy prototypes for chained language model components since Spring 2023. However, a recent, significant development has come not from a startup, but from the world of academia.

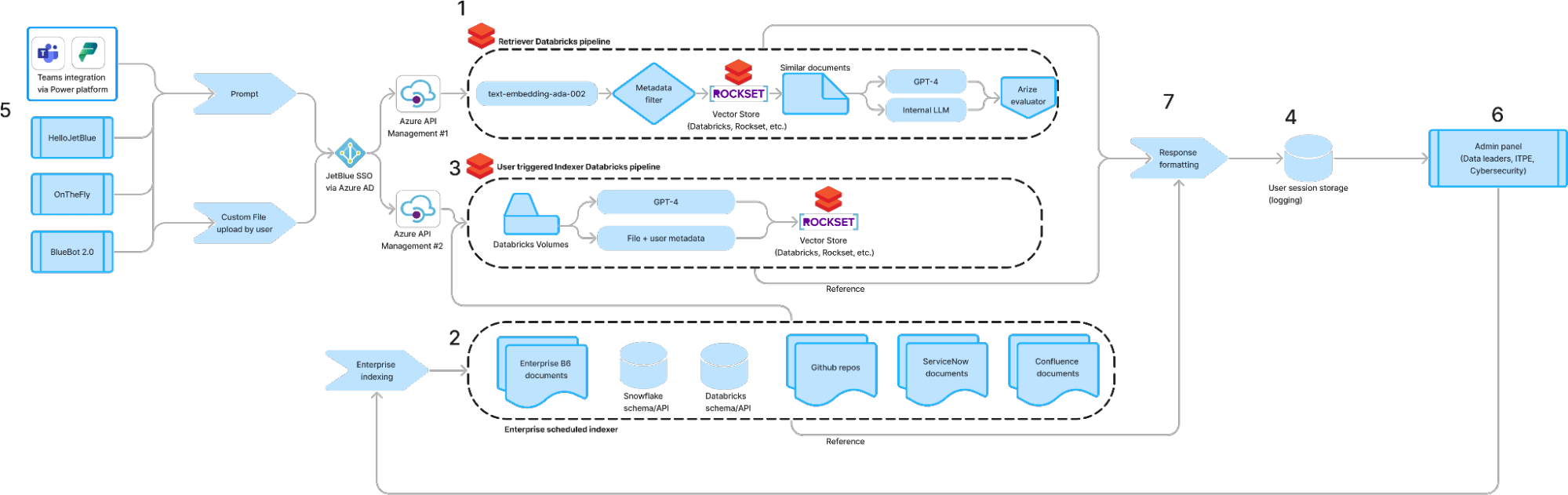

In October 2023, researchers working in Databricks co-founder Matei Zaharia's Stanford research lab released DSPy, a library for compiling declarative language model calls into self-improving pipelines. The key component of DSPy is self-improving pipelines. For example: while ChatGPT appears as a single input-output interface, it's clear there's not just a single LLM call happening under the hood. Instead, the model interacts with external tools like web browsing or RAG from custom document uploads in a multi-stage pipeline. These tools produce intermediate outputs that are combined with an initial input to produce a final answer. Just as data pipelines and machine learning models led to the emergence of MLOps, LLMOps is being shaped by DSPy's framework of LLM pipelines and foundation models like DBRX.

Where DSPy truly shines is in the self-improvement of these pipelines. In a complex, multi-stage LLM pipeline, there are often multiple prompts along the way that require tuning. Most industry LLM developers are all too familiar with single words inside their prompts that can make or break a deployment (Figure 1). With DSPy, JetBlue is making manual prompt-tuning a thing of the past.

In this blog post, we'll discuss how to build a custom, multi-tool LLM agent using readily available Databricks Marketplace models in DSPy and how to deploy the resulting chain to Databricks Model Serving. This end-to-end framework has enabled JetBlue to quickly develop cutting-edge LLM solutions, from revenue-driving customer feedback classification to RAG-powered predictive maintenance chatbots that bolster operational efficiency.

    # Illustrative only: the kind of hand-tuned prompt DSPy is meant to replace
    prompt_template = """RESPOND WITH JSON ONLY. DO NOT UNDER ANY CIRCUMSTANCES RETURN ANY CONVERSATIONAL TEXT. MY CAREER DEPENDS ON THIS; I'LL TIP YOU $100 FOR A PERFECT ANSWER. EXAMPLE : {'output_var1': 'value1', 'output_var2': 'value2'} NEW JSON OUTPUT: """

    llm = Databricks(host="myworkspace.cloud.databricks.com", endpoint_name="databricks-dbrx-instruct")

    initialize_agent(
        agent='chat-conversational-react-description',
        llm=llm,
        SystemAgentPromptTemplate=prompt_template)

Figure 1: Common prompt-engineering method before DSPy

DSPy Signatures and Modules

Behind every bespoke DSPy model is a custom signature and module. For context: think of a signature as a customized, single LLM call in a pipeline. A common first signature would be to reformat an initial user question into a query using some pre-defined context. That can be composed in one line as: dspy.ChainOfThought("context, question -> query"). For a little more control, one can define this component as a Pythonic class (Figure 2). Once you get the hang of writing custom signatures, the world is your oyster.

    import dspy

    class ToolChoice(dspy.Signature):
        """Determines a tool to pick from a list of tools based on a query"""

        list_of_tools = dspy.InputField(desc="list of tools available to the agent")
        query = dspy.InputField()
        selected_tool = dspy.OutputField(desc="returns a single tool based on the query from the list_of_tools input")

    class GenerateAnswer(dspy.Signature):
        """Answer questions with informative summary of an answer to user's question."""

        context = dspy.InputField(desc="may contain relevant information")
        question = dspy.InputField()
        answer = dspy.OutputField(desc="informative summary of an answer to user's question")

Figure 2: Custom signatures with descriptions, meant to choose a tool at the beginning of a DSPy pipeline and generate a final answer at the end
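To make the one-line shorthand concrete, here is a minimal sketch of how a string such as "context, question -> query" maps to named input and output fields. The helper `parse_signature` is hypothetical and written only for illustration; it is not DSPy's parser, and it ignores descriptions and types.

```python
def parse_signature(signature: str):
    """Split 'a, b -> c' into (input field names, output field names)."""
    inputs, arrow, outputs = signature.partition("->")
    if not arrow:
        raise ValueError("a signature needs '->' between inputs and outputs")

    def names(part: str):
        # Field names are comma-separated; surrounding whitespace is ignored.
        return [name.strip() for name in part.split(",") if name.strip()]

    return names(inputs), names(outputs)


# The query-rewriting step from the text: two inputs, one output.
input_fields, output_fields = parse_signature("context, question -> query")
print(input_fields, output_fields)
```

Read this way, the ToolChoice class corresponds roughly to "list_of_tools, query -> selected_tool", with the class form adding a docstring and per-field descriptions.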

These signatures are then composed into a PyTorch-like module (Figure 3). Each signature is accessed within the module's forward method, sequentially passing an input from one step to the next. This can be interspersed with non-LLM-calling methods or control logic. The DSPy module lets us optimize LLMOps for better control, dynamic updates, and cost. Instead of relying on an opaque agent, the internal components are modularized so that each step is clear and can be assessed and modified. In this case, we take a generated query from user input, choose to use a vector store if appropriate, and then generate an answer from our retrieved context.

    import dspy
    from openai import OpenAI
    from dspy.retrieve.databricks_rm import DatabricksRM

    # ToolChoice and GenerateAnswer are the signatures from Figure 2;
    # openai_api_key is assumed to be defined elsewhere.
    class ToolRetriever(dspy.Module):
        def __init__(self):
            super().__init__()
            self.generate_query = dspy.ChainOfThought("context, question -> query")
            self.choose_tool = dspy.ChainOfThought(ToolChoice)
            self.generate_answer = dspy.ChainOfThought(GenerateAnswer)
            self.tools = "[answer_payroll_faq, irrelevant_content]"

        def irrelevant_content(self):
            return "Ask something else."

        def forward(self, question):
            client = OpenAI(api_key=openai_api_key)
            retrieve = DatabricksRM()  # connection arguments omitted here
            context = []
            query_output = self.generate_query(context=context, question=question)
            tool_choice = self.choose_tool(list_of_tools=self.tools, query=query_output.query)
            if tool_choice.selected_tool == "irrelevant_content":
                return self.irrelevant_content()
            else:
                # Embed the question, then fetch the single closest document
                search_query_embedding = client.embeddings.create(model="text-embedding-ada-002", input=[question]).data[0].embedding
                retrieved_context = retrieve(search_query_embedding, 1)
                context += retrieved_context
                return self.generate_answer(context=context, question=question)

Figure 3: DSPy signatures are composed into pipelines via a PyTorch-like module
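Because each step is a separate component, the control flow can be exercised without calling any model. The sketch below mirrors the branching of the forward method using plain functions; every `fake_*` function and the sample document are hypothetical stand-ins, not part of DSPy or of our deployment.

```python
def run_pipeline(question, generate_query, choose_tool, retrieve, generate_answer):
    """Same control flow as the module's forward step, with injected callables."""
    query = generate_query(question)
    tool = choose_tool(["answer_payroll_faq", "irrelevant_content"], query)
    if tool == "irrelevant_content":
        return "Ask something else."
    context = retrieve(query, k=1)
    return generate_answer(context, question)


# Hypothetical stubs standing in for the LLM calls and the vector store.
def fake_generate_query(question):
    return question.lower().rstrip("?")


def fake_choose_tool(tools, query):
    return "answer_payroll_faq" if "payroll" in query else "irrelevant_content"


def fake_retrieve(query, k=1):
    documents = ["Payroll is processed every other Friday."]  # made-up sample text
    return documents[:k]


def fake_generate_answer(context, question):
    return " ".join(context)


stubs = (fake_generate_query, fake_choose_tool, fake_retrieve, fake_generate_answer)
on_topic = run_pipeline("When is payroll processed?", *stubs)
off_topic = run_pipeline("What is the weather today?", *stubs)
print(on_topic)
print(off_topic)
```

Swapping a stub for a real component changes one argument and leaves the rest of the pipeline untouched, which is the practical benefit of the modular layout.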

Deploying the Agent

We can follow the standard procedure for logging and deploying an MLflow PyFunc model by first using a PyFunc wrapper on top of the module we created. Within the PyFunc model, we can easily set DSPy to use a Databricks Marketplace model like Llama 2 70B. It should be noted that Databricks Model Serving expects DataFrame formatting, whereas DSPy works with strings. For that reason, we'll modify the standard predict and run functions as follows:

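    import pandas as pd

    # Methods of the PyFunc wrapper class
    def predict(self, context, model_input):
        # Model Serving passes a DataFrame; the prompt string is its first cell
        outputs = self.run(model_input.values[0][0])
        return outputs

    def run(self, prompt):
        output = self.dspy_lm(prompt)
        # Wrap the answer string back into a DataFrame for Model Serving
        return pd.DataFrame([output.answer])

Figure 4: Modifications to the PyFunc model serving definition needed for translating between DSPy and MLflow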

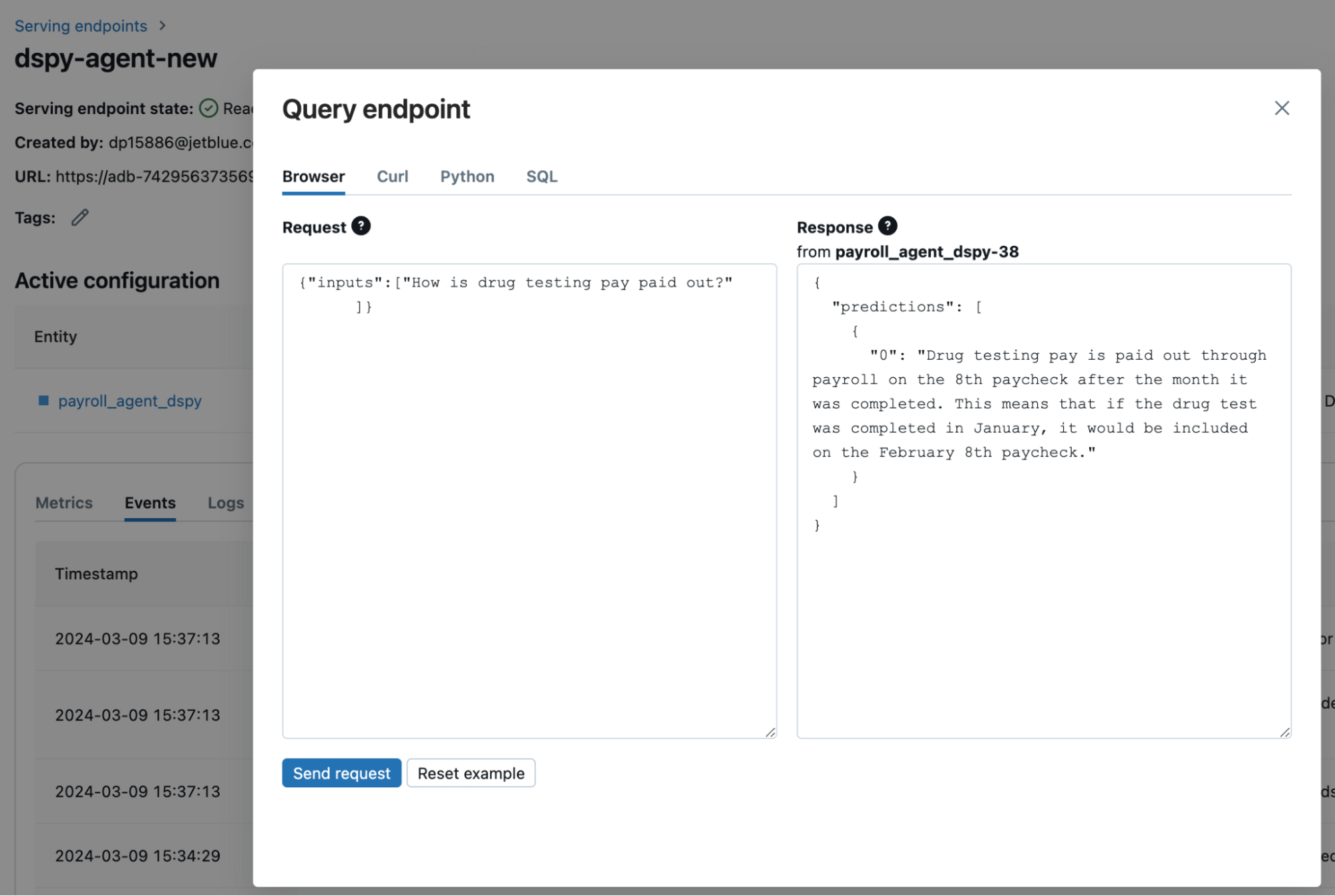

The model is created using the mlflow.pyfunc.log_model function, and deployed to one of JetBlue's internal serving endpoints following the steps outlined in this Databricks tutorial. You can see how we can query the endpoint via Databricks (Figure 5), or by calling the endpoint via an API. We call the endpoint API through an application layer for our chatbots. Our RAG chatbot deployment was 2x faster than our LangChain deployment!
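As a rough sketch of the API route, the snippet below builds (but does not send) a request to a serving endpoint. It assumes the usual Databricks Model Serving invocation path and the `dataframe_split` input format; the endpoint name `my-dspy-agent`, the column name `question`, and the token placeholder are assumptions made for illustration.

```python
import json
import urllib.request


def build_invocation_request(host, endpoint_name, token, question):
    """Build a POST request whose single DataFrame cell carries the question."""
    url = f"https://{host}/serving-endpoints/{endpoint_name}/invocations"
    # One column, one row: the wrapper's predict step reads this first cell.
    payload = {"dataframe_split": {"columns": ["question"], "data": [[question]]}}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_invocation_request(
    "myworkspace.cloud.databricks.com",  # host reused from Figure 1
    "my-dspy-agent",                     # hypothetical endpoint name
    "<token>",                           # placeholder, not a real credential
    "When is payroll processed?",
)
print(request.full_url)
# Sending is left out on purpose; urllib.request.urlopen(request) would do it.
```

The single-cell payload lines up with the predict function in Figure 4, which reads the prompt from the first row and first column of the incoming DataFrame.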

Self-Improving our Pipeline

In JetBlue's RAG chatbot use case, we have metrics related to retrieval quality and answer quality. Before DSPy we manually optimized our prompts to improve these metrics; now we can use DSPy to directly optimize these metrics and improve quality automatically. The key to understanding this is thinking of the natural language components of the pipeline as tunable parameters. DSPy optimizers tune these weights by maximizing toward a task objective, requiring just a defined metric (e.g., an LLM-as-a-judge assessing toxicity), some labeled or unlabeled data, and a DSPy program to optimize. The optimizers then simulate the program and determine "optimal" examples to tune the LM weights and improve performance quality on downstream metrics. DSPy offers signature optimizers as well as multiple in-context learning optimizers that feed optimized examples to the model as part of the prompt. DSPy effectively chooses which examples to use in context to improve the reliability and quality of the LLM's responses. With integrations in DSPy now included with Databricks Model Serving Foundation Model API and Databricks Vector Search, users can craft DSPy prompting systems and optimize their data and tasks, all within the Databricks workflow.
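The idea of treating in-context examples as tunable parameters can be shown with a toy search. This is a deliberately simplified sketch and not how DSPy's optimizers are implemented: `toy_lm` is a hypothetical stand-in for a model that copies the label of the most similar demonstration, the feedback snippets are invented, and the search is brute force over pairs of demonstrations.

```python
from itertools import combinations


def toy_lm(question, demos):
    """Stand-in for an LLM: copy the label of the demo sharing the most words."""
    words = set(question.lower().split())
    closest = max(demos, key=lambda d: len(words & set(d["question"].lower().split())))
    return closest["label"]


def exact_match(example, prediction):
    """Metric: 1.0 when the predicted label equals the gold label."""
    return float(example["label"] == prediction)


def evaluate(demos, devset, metric):
    """Average metric over the dev set for one choice of demonstrations."""
    return sum(metric(ex, toy_lm(ex["question"], demos)) for ex in devset) / len(devset)


def select_demos(candidates, devset, metric, k=2):
    """Keep the k demonstrations that score best on the dev set."""
    return max(combinations(candidates, k), key=lambda demos: evaluate(demos, devset, metric))


candidates = [
    {"question": "my flight was delayed three hours", "label": "delay"},
    {"question": "the crew was friendly", "label": "service"},
    {"question": "my bag never arrived", "label": "baggage"},
    {"question": "flight delayed again", "label": "delay"},
]
devset = [
    {"question": "bag arrived damaged", "label": "baggage"},
    {"question": "flight delayed by weather", "label": "delay"},
]

best_demos = select_demos(candidates, devset, exact_match, k=2)
best_score = evaluate(best_demos, devset, exact_match)
print([d["label"] for d in best_demos], best_score)
```

The point of the sketch is the shape of the loop: a program, a metric, and some data go in, and the choice of demonstrations that maximizes the metric comes out, with no prompt wording edited by hand.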

Additionally, these capabilities complement Databricks' LLM-as-a-judge offerings. Custom metrics can be designed using LLM-as-a-judge and directly improved upon using DSPy's optimizers. We have additional use cases like customer feedback classification where we anticipate using LLM-generated feedback to fine-tune a multi-stage DSPy pipeline in Databricks. This drastically simplifies the iterative development process of all our LLM applications, making it unnecessary to manually iterate on prompts.
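A judge-based metric can be sketched as a small wrapper. The example follows the common DSPy metric shape of `(example, pred, trace=None)`; the `stub_judge` function is a hypothetical placeholder for an LLM judge, and the 0.5 threshold is an arbitrary assumption.

```python
from types import SimpleNamespace


def make_judge_metric(judge, threshold=0.5):
    """Wrap a judge callable (question, answer) -> score in [0, 1] as a metric."""

    def metric(example, pred, trace=None):
        score = judge(example.question, pred.answer)
        # With a trace (during optimization) return pass/fail; otherwise the raw score.
        return score >= threshold if trace is not None else score

    return metric


def stub_judge(question, answer):
    """Hypothetical judge: fraction of question words that the answer reuses."""
    question_words = set(question.lower().split())
    answer_words = set(answer.lower().split())
    return len(question_words & answer_words) / len(question_words) if question_words else 0.0


metric = make_judge_metric(stub_judge)
example = SimpleNamespace(question="when is payroll processed")
pred = SimpleNamespace(answer="payroll is processed every friday")
print(metric(example, pred), metric(example, pred, trace=[]))
```

Replacing `stub_judge` with a call to a real judge model would leave the metric's interface unchanged, so the same function could be handed to an optimizer or used for plain evaluation.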

The End of Prompting, The Beginning of Compound Systems

As more and more companies leverage LLMs, the limitations of a generic chatbot interface are increasingly clear. These off-the-shelf platforms are highly dependent on parameters that are outside the control of both end-users and administrators. By constructing compound systems that leverage a combination of LLM calls and traditional software development, companies can easily adapt and optimize these solutions to fit their use case. DSPy is enabling this paradigm shift toward modular, trustworthy LLM systems that can optimize themselves against any metric. With the power of Databricks and DSPy, JetBlue is able to deploy better LLM solutions at scale and push the boundaries of what's possible.
