
Ways of Providing Data to a Model

Many organizations are now exploring the power of generative AI to improve their efficiency and gain new capabilities. In most cases, to fully unlock these powers, AI must have access to the relevant enterprise data. Large Language Models (LLMs) are trained on publicly available data (e.g., Wikipedia articles, books, web index, etc.), which is enough for many general-purpose applications, but there are plenty of others that are highly dependent on private data, especially in enterprise environments.

There are three main ways to provide new data to a model:

- Pre-training a model from scratch. This rarely makes sense for most companies because it is very expensive and requires a lot of resources and technical expertise.

- Fine-tuning an existing general-purpose LLM. This can reduce the resource requirements compared to pre-training, but still requires significant resources and expertise. Fine-tuning produces specialized models that perform better in the domain they were fine-tuned for but may perform worse in others.

- Retrieval augmented generation (RAG). The idea is to fetch data relevant to a query and include it in the LLM context so that the model can “ground” its own outputs in that information. Such relevant data in this context is called “grounding data”. RAG complements generic LLMs, but the amount of information that can be provided is limited by the LLM context window size (the amount of text the LLM can process at once while generating a response).
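To make the context-window limit concrete, here is a minimal sketch of how retrieved chunks might be packed into a prompt. It assumes the retriever has already returned chunks ranked by relevance and uses a character budget as a rough stand-in for the model’s token limit; the function name `build_grounded_prompt`, the prompt wording, and the sample chunks are all hypothetical.

```python
def build_grounded_prompt(question: str, ranked_chunks: list[str], max_chars: int) -> str:
    """Pack retrieved chunks (most relevant first) into a prompt under a size budget.

    max_chars is a crude stand-in for the model's context window; real systems
    count tokens with the model's own tokenizer instead of characters.
    """
    header = "Answer the question using only the context below.\n\nContext:\n"
    footer = f"\nQuestion: {question}\nAnswer:"
    remaining = max_chars - len(header) - len(footer)
    selected = []
    for chunk in ranked_chunks:
        entry = f"- {chunk}\n"
        if len(entry) > remaining:
            break  # the next chunk would overflow the budget, so stop here
        selected.append(entry)
        remaining -= len(entry)
    return header + "".join(selected) + footer


# Invented grounding data, for illustration only.
chunks = [
    "Our refund window is 30 days from delivery.",
    "Refunds are issued to the original payment method.",
]
print(build_grounded_prompt("How long do I have to request a refund?", chunks, max_chars=400))
```

Stopping at the first chunk that does not fit, rather than skipping ahead to smaller ones, keeps the prompt in strict relevance order; other packing strategies are equally reasonable.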

Currently, RAG is the most accessible way to provide new information to an LLM, so let’s focus on this method and dive a little deeper.

Retrieval Augmented Generation

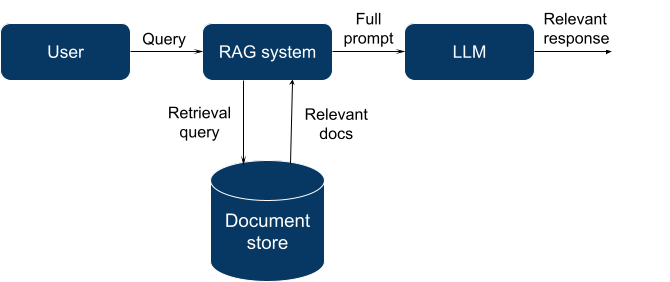

Generally, RAG means using a search or retrieval engine to fetch a relevant set of documents for a specified query.

For this purpose, we can use many existing systems: a full-text search engine (like Elasticsearch plus traditional information retrieval techniques), a general-purpose database with a vector search extension (Postgres with pgvector, Elasticsearch with a vector search plugin), or a specialized database that was created specifically for vector search.

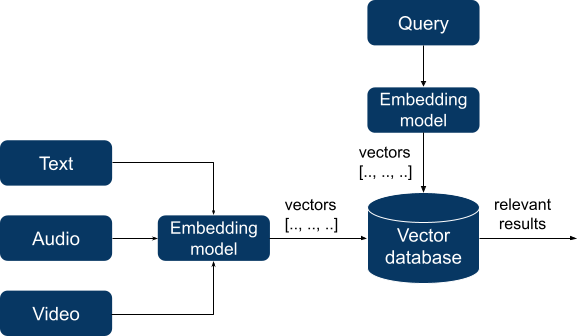

In the two latter cases, RAG is similar to semantic search. For a long time, semantic search was a highly specialized and complex domain with exotic query languages and niche databases. Indexing data required extensive preparation and building knowledge graphs, but recent progress in deep learning has dramatically changed the landscape. Modern semantic search applications now depend on embedding models that successfully learn semantic patterns in the data they are given. These models take unstructured data (text, audio, or even video) as input and transform it into vectors of numbers of a fixed length, turning unstructured data into a numeric form that can be used for calculations. It then becomes possible to calculate the distance between vectors using a chosen distance metric, and the resulting distance reflects the semantic similarity between the vectors and, in turn, between the pieces of original data.
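A small example shows what “distance between vectors” means in practice. The sketch below computes cosine similarity, and a cosine distance derived from it, in plain Python; the 4-dimensional vectors are made up for illustration rather than produced by a real embedding model.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


def cosine_distance(a: list[float], b: list[float]) -> float:
    """Turn similarity into a distance: smaller means semantically closer."""
    return 1.0 - cosine_similarity(a, b)


# Made-up 4-dimensional vectors standing in for embedding-model output;
# real embedding models produce hundreds or thousands of dimensions.
cat = [0.9, 0.1, 0.0, 0.2]
kitten = [0.85, 0.15, 0.05, 0.25]
invoice = [0.0, 0.1, 0.95, 0.3]

print(f"cat vs kitten:  {cosine_distance(cat, kitten):.3f}")
print(f"cat vs invoice: {cosine_distance(cat, invoice):.3f}")
```

With these toy values, “cat” and “kitten” point in nearly the same direction, so their distance is close to 0, while “invoice” is far from both.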

These vectors are indexed by a vector database, and at query time our query is also transformed into a vector. The database searches for the N vectors closest to the query vector (according to a chosen measure such as cosine similarity) and returns them.
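The search step can be illustrated with an exact, brute-force version: score the query against every stored vector and keep the N best. This is a sketch with invented toy vectors; vector databases exist largely to avoid this full scan on large collections by consulting a precomputed index instead.

```python
import heapq
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top_n(query: list[float], vectors: dict[str, list[float]], n: int) -> list[tuple[str, float]]:
    """Exact nearest-neighbor search: score every stored vector, keep the n best."""
    scored = ((doc_id, cosine_similarity(query, vec)) for doc_id, vec in vectors.items())
    return heapq.nlargest(n, scored, key=lambda item: item[1])


# Toy 2-dimensional vectors invented for illustration.
stored = {"doc-a": [1.0, 0.0], "doc-b": [0.9, 0.1], "doc-c": [0.0, 1.0]}
print(top_n([1.0, 0.0], stored, n=2))
```

The cost of this scan grows linearly with the collection size for every query, which is the problem the indexing step described next is meant to solve.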

A vector database is responsible for these three things:

- Indexing. The database builds an index of vectors using some built-in algorithm (e.g., locality-sensitive hashing (LSH) or hierarchical navigable small world (HNSW)) that precomputes data to speed up querying.

- Querying. The database uses the query vector and the index to find the most similar vectors in the database.

- Post-processing. After the result set is formed, we may want to run an additional step, such as metadata filtering or re-ranking within the result set, to improve the outcome.

The purpose of a vector database is to provide a fast, reliable, and efficient way to store and query data. Both retrieval speed and search quality depend on the choice of index type. Besides the already mentioned LSH and HNSW, there are others, each with its own set of strengths and weaknesses. Most databases make the choice for us, but some let you choose an index type manually to control the tradeoff between speed and accuracy.
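To illustrate the speed/accuracy tradeoff an index introduces, here is a toy version of one LSH family, random-hyperplane hashing for cosine similarity. It is a simplified sketch, not how any particular database implements LSH: each query scores only the vectors in its own bucket, so adding hyperplanes shrinks the buckets (faster) but raises the chance that a true neighbor lands in a different bucket and is missed (less accurate). The random data is invented for the demo.

```python
import math
import random


class RandomHyperplaneLSH:
    """Toy locality-sensitive hashing index for cosine similarity.

    Each random hyperplane contributes one bit (which side the vector lies on),
    so vectors pointing in similar directions tend to share a bucket.
    """

    def __init__(self, dim: int, num_planes: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_planes)]
        self.buckets: dict[tuple[bool, ...], list[str]] = {}
        self.vectors: dict[str, list[float]] = {}

    def _signature(self, vec: list[float]) -> tuple[bool, ...]:
        return tuple(sum(p * x for p, x in zip(plane, vec)) >= 0 for plane in self.planes)

    def add(self, doc_id: str, vec: list[float]) -> None:
        self.vectors[doc_id] = vec
        self.buckets.setdefault(self._signature(vec), []).append(doc_id)

    def candidates(self, vec: list[float]) -> list[str]:
        # Only vectors sharing the query's bucket are considered at all.
        return self.buckets.get(self._signature(vec), [])

    def query(self, vec: list[float], k: int) -> list[str]:
        def cosine(a: list[float], b: list[float]) -> float:
            dot = sum(x * y for x, y in zip(a, b))
            return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

        # Exact scoring, but only over the bucket; neighbors hashed elsewhere are missed.
        ranked = sorted(self.candidates(vec), key=lambda d: cosine(vec, self.vectors[d]), reverse=True)
        return ranked[:k]


rng = random.Random(42)
data = {f"doc-{i}": [rng.uniform(-1.0, 1.0) for _ in range(8)] for i in range(1000)}
query = data["doc-0"]
for planes in (2, 8):
    index = RandomHyperplaneLSH(dim=8, num_planes=planes)
    for doc_id, vec in data.items():
        index.add(doc_id, vec)
    print(f"{planes} planes: {len(index.candidates(query))} candidates scored, "
          f"top hit {index.query(query, 1)}")
```

The candidate counts printed for 2 and 8 hyperplanes should typically show the effect: more planes means far fewer exact comparisons per query, at the cost of recall for neighbors that fall just across a hyperplane.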

At DataRobot, we believe RAG is here to stay. Fine-tuning can require very sophisticated data preparation to turn raw text into training-ready data, and coaxing LLMs into “learning” new facts through fine-tuning while maintaining their general knowledge and instruction-following behavior is more of an art than a science.

LLMs are typically very good at applying knowledge supplied in-context, especially when only the most relevant material is provided, so a good retrieval system is crucial.

Note that the choice of the embedding model used for RAG is essential. The embedding model is not part of the database, and choosing the right one for your application is critical for achieving good performance. Additionally, while new and improved models are constantly being released, switching to a new model requires reindexing your entire database.
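The reindexing cost follows from the fact that vectors from different embedding models are not comparable. A minimal sketch, assuming the raw text is kept alongside each vector: the hypothetical `EmbeddingIndex` records which model built it and refuses queries from another model, and `reindex` re-embeds the whole corpus. The two `toy_embed_*` functions are stand-ins for illustration, not real embedding models.

```python
from dataclasses import dataclass, field
from typing import Callable

Embedder = Callable[[str], list[float]]


@dataclass
class EmbeddingIndex:
    """Hypothetical index that remembers which embedding model produced its vectors."""

    model_name: str
    embed: Embedder
    texts: dict[str, str] = field(default_factory=dict)
    vectors: dict[str, list[float]] = field(default_factory=dict)

    def add(self, doc_id: str, text: str) -> None:
        self.texts[doc_id] = text  # keep raw text so the corpus can be re-embedded later
        self.vectors[doc_id] = self.embed(text)

    def ensure_same_model(self, model_name: str) -> None:
        # Vectors from different models live in different spaces (often with
        # different dimensions), so comparing them gives meaningless distances.
        if model_name != self.model_name:
            raise ValueError(f"index built with {self.model_name!r}, query uses {model_name!r}")


def reindex(old: EmbeddingIndex, model_name: str, embed: Embedder) -> EmbeddingIndex:
    """Switching models means re-embedding every stored document."""
    new = EmbeddingIndex(model_name, embed)
    for doc_id, text in old.texts.items():
        new.add(doc_id, text)
    return new


# Stand-in "models" for illustration only.
def toy_embed_v1(text: str) -> list[float]:
    return [float(len(text)), float(text.count(" "))]


def toy_embed_v2(text: str) -> list[float]:
    return [float(len(text)), float(text.count(" ")), float(sum(c in "aeiou" for c in text))]


index_v1 = EmbeddingIndex("toy-v1", toy_embed_v1)
index_v1.add("d1", "hello world")
index_v2 = reindex(index_v1, "toy-v2", toy_embed_v2)
print(index_v1.vectors["d1"], index_v2.vectors["d1"])
```

Keeping the raw text (or a pointer to it in a primary store) is what makes the switch possible at all; without it, a model change would mean re-ingesting the original sources.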

Evaluating Your Options

Choosing a database in an enterprise environment is not an easy task. A database is often the heart of your software infrastructure, managing an important business asset: data.

Generally, when we choose a database, we want:

- Dependable storage

- Efficient querying

- Ability to insert, update, and delete data granularly (CRUD)

- Ability to set up multiple users with different levels of access (RBAC)

- Data consistency (predictable behavior when modifying data)

- Ability to recover from failures

- Scalability to the size of our data

This list is not exhaustive and might be a bit obvious, but not all new vector databases have these features. Often, it is the availability of enterprise features that determines the final choice between a well-known, mature database that provides vector search via extensions and a newer vector-only database.

Vector-only databases have native support for vector search and can execute queries very fast, but they often lack enterprise features and are relatively immature. Keep in mind that it takes years to build complex features and battle-test them, so it’s no surprise that early adopters face outages and data losses. On the other hand, in existing databases that provide vector search through extensions, a vector is not a first-class citizen, and query performance can be much worse.

We will categorize all current databases that provide vector search into the following groups and then discuss them in more detail:

- Vector search libraries

- Vector-only databases

- NoSQL databases with vector search

- SQL databases with vector search

- Vector search solutions from cloud vendors

Vector search libraries

Vector search libraries like FAISS and ANNOY are not databases; rather, they provide in-memory vector indices and only limited data persistence options. While these features are not ideal for users requiring a full enterprise database, the libraries offer very fast nearest neighbor search and are open source. They support high-dimensional data well and are highly configurable (you can choose the index type and other parameters).

Overall, they are good for prototyping and for integration into simple applications, but they are not appropriate for long-term, multi-user data storage.

Vector-only databases

This group includes diverse products like Milvus, Chroma, Pinecone, Weaviate, and others. There are notable differences among them, but all of them are specifically designed to store and retrieve vectors. They are optimized for efficient similarity search with indexing and natively support high-dimensional data and vector operations.

Most of them are newer and might not have the enterprise features we mentioned above; for example, some of them lack CRUD, proven failure recovery, RBAC, and so on. For the most part, they can store the raw data, the embedding vector, and a small amount of metadata, but they cannot store other index types or relational data, which means you will have to use another, secondary database and maintain consistency between the two.
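Keeping two stores consistent is easy to get wrong. The sketch below shows one simple pattern, assuming a plain dict stands in for the primary (relational) database and `FlakyVectorClient` is a hypothetical vector-database client that can be told to fail: write to the primary store first, then to the vector store, and undo the primary write if the second one fails.

```python
_MISSING = object()  # sentinel: "this key did not exist before the write"


class FlakyVectorClient:
    """Hypothetical stand-in for a vector-only database client."""

    def __init__(self, fail: bool = False) -> None:
        self.vectors: dict[str, list[float]] = {}
        self.fail = fail

    def upsert(self, doc_id: str, vector: list[float]) -> None:
        if self.fail:
            raise ConnectionError("vector store unavailable")
        self.vectors[doc_id] = vector


def save_document(primary: dict, vectors: FlakyVectorClient,
                  doc_id: str, record: dict, vector: list[float]) -> None:
    """Write to the primary store first, then the vector store; undo on failure."""
    previous = primary.get(doc_id, _MISSING)
    primary[doc_id] = record
    try:
        vectors.upsert(doc_id, vector)
    except Exception:
        # Compensate: restore the primary store to its state before this call.
        if previous is _MISSING:
            del primary[doc_id]
        else:
            primary[doc_id] = previous
        raise


primary_db: dict[str, dict] = {}
save_document(primary_db, FlakyVectorClient(), "d1", {"title": "Refunds"}, [0.1, 0.2])
try:
    save_document(primary_db, FlakyVectorClient(fail=True), "d2", {"title": "Shipping"}, [0.3, 0.4])
except ConnectionError:
    print("vector write failed; primary store rolled back:", sorted(primary_db))
```

This only covers the simplest failure: a crash between the two writes, or a failed rollback, would still leave the stores out of sync, so production systems often add retries, a reconciliation job, or a transactional outbox.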

Their performance is often unmatched, and they are a good option when you have multimodal data (images, audio, or video).

NoSQL databases with vector search

Many so-called NoSQL databases have recently added vector search to their products, including MongoDB, Redis, Neo4j, and Elasticsearch. They offer good enterprise features, are mature, and have strong communities, but they provide vector search functionality via extensions, which might lead to less than ideal performance and a lack of first-class support for vector search. Elasticsearch stands out here because it is designed for full-text search and already has many traditional information retrieval features that can be used in conjunction with vector search.
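Using full-text and vector search together is usually called hybrid search, and the two result lists then have to be merged. One common way to do that is reciprocal rank fusion (RRF); the sketch below is a generic version with hypothetical document IDs, not the implementation of any particular database.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one ranking.

    Each document earns 1 / (k + rank) from every list it appears in; k dampens
    the advantage of top positions (60 is a commonly used default).
    """
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.__getitem__, reverse=True)


# Hypothetical results for the same query from two retrievers.
keyword_hits = ["doc-1", "doc-2", "doc-3"]  # e.g., a full-text (BM25) ranking
vector_hits = ["doc-3", "doc-1", "doc-4"]   # e.g., an embedding-similarity ranking
print(reciprocal_rank_fusion([keyword_hits, vector_hits]))
# -> ['doc-1', 'doc-3', 'doc-2', 'doc-4']
```

RRF uses only rank positions, so it sidesteps the problem that keyword scores and cosine similarities live on incomparable scales.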

NoSQL databases with vector search are a good choice when you are already invested in them and need vector search as an additional, but not very demanding, feature.

SQL databases with vector search

This group is somewhat similar to the previous one, but here we have established players like PostgreSQL and ClickHouse. They offer a wide array of enterprise features, are well-documented, and have strong communities. As for their disadvantages, they are designed for structured data, and scaling them requires specific expertise.

Their use case is also similar: they are a good choice when you already have them, along with the expertise to run them, in place.

Vector search solutions from cloud vendors

Hyperscalers also offer vector search services. They usually have basic vector search features (you can choose an embedding model, index type, and other parameters), good interoperability with the rest of the cloud platform, and more flexibility when it comes to cost, especially if you use other services on their platform. However, they differ in maturity and feature sets: Google Cloud vector search uses ScaNN, a fast index search algorithm developed by Google, and supports metadata filtering, but is not very user-friendly; Azure vector search offers structured search capabilities, but is in the preview phase; and so on.

Vector search entities can be managed using the platform’s enterprise features, such as IAM (Identity and Access Management), but these features are not easy to use and are designed for general cloud usage rather than for vector search specifically.

Making the Right Choice

The main use case of vector databases in this context is to provide relevant information to a model. For your next LLM project, you can choose from existing databases that offer vector search capabilities via extensions or from new vector-only databases that offer native vector support and fast querying.

The choice depends on whether you need enterprise features or high-scale performance, as well as on your deployment architecture and desired maturity (research, prototyping, or production). You should also consider which databases are already present in your infrastructure and whether you have multimodal data. In any case, whatever choice you make, it is wise to hedge it: treat a new database as an auxiliary storage cache rather than a central point of operations, and abstract your database operations in code so that it is easy to adjust to the next iteration of the vector RAG landscape.
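One way to follow that advice is to have application code depend on a small interface rather than on a specific client library. The sketch below uses a Python `Protocol`; `VectorStore`, `InMemoryVectorStore`, and `retrieve_context` are hypothetical names, and a real adapter for a given database would implement the same three methods using that database’s own client.

```python
import math
from typing import Protocol


class VectorStore(Protocol):
    """The only storage operations the application is allowed to use."""

    def upsert(self, doc_id: str, vector: list[float], text: str) -> None: ...

    def delete(self, doc_id: str) -> None: ...

    def query(self, vector: list[float], k: int) -> list[tuple[str, str, float]]: ...


class InMemoryVectorStore:
    """Prototype backend; a database-specific adapter could replace it later."""

    def __init__(self) -> None:
        self._rows: dict[str, tuple[list[float], str]] = {}

    def upsert(self, doc_id: str, vector: list[float], text: str) -> None:
        self._rows[doc_id] = (vector, text)

    def delete(self, doc_id: str) -> None:
        self._rows.pop(doc_id, None)

    def query(self, vector: list[float], k: int) -> list[tuple[str, str, float]]:
        def cosine(a: list[float], b: list[float]) -> float:
            dot = sum(x * y for x, y in zip(a, b))
            return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

        scored = [(doc_id, text, cosine(vector, vec)) for doc_id, (vec, text) in self._rows.items()]
        scored.sort(key=lambda row: row[2], reverse=True)
        return scored[:k]


def retrieve_context(store: VectorStore, query_vector: list[float], k: int = 3) -> list[str]:
    """RAG retrieval step written against the interface, not a concrete database."""
    return [text for _, text, _ in store.query(query_vector, k)]


store = InMemoryVectorStore()
store.upsert("a", [1.0, 0.0], "Refunds take 5 business days.")
store.upsert("b", [0.0, 1.0], "Shipping is free over $50.")
print(retrieve_context(store, [0.9, 0.1], k=1))
```

Because `retrieve_context` only sees the `VectorStore` methods, moving from the prototype to another backend means writing one new adapter class rather than touching the retrieval logic.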

How DataRobot Can Help

There are already so many vector database options to choose from. Each has its pros and cons, and no single vector database will be right for all of your organization’s generative AI use cases. That’s why it’s important to retain optionality and leverage a solution that allows you to customize your generative AI solutions to specific use cases and adapt as your needs change or the market evolves.

The DataRobot AI Platform lets you bring your own vector database, whichever is right for the solution you’re building. If you require changes in the future, you can swap out your vector database without breaking your production environment and workflows.

About the author

Nick Volynets is a senior data engineer working with the office of the CTO, where he enjoys being at the heart of DataRobot innovation. He is interested in large-scale machine learning and passionate about AI and its impact.
