
In context: Now that the crypto mining boom is over, Nvidia has yet to return to its earlier gaming-centric focus. Instead, it has jumped into the AI boom, providing GPUs to power chatbots and AI services. It currently has a corner on the market, but a consortium of companies is looking to change that by designing an open communication standard for AI processors.

Some of the largest technology companies in the hardware and AI sectors have formed a consortium to create a new industry standard for GPU connectivity. The Ultra Accelerator Link (UALink) group aims to develop open technology solutions to benefit the entire AI ecosystem rather than relying on a single company like Nvidia and its proprietary NVLink technology.

The UALink group includes AMD, Broadcom, Cisco, Google, Hewlett Packard Enterprise (HPE), Intel, Meta, and Microsoft. According to its press release, the open industry standard developed by UALink will enable better performance and efficiency for AI servers, making GPUs and specialized AI accelerators communicate “more effectively.”

Companies such as HPE, Intel, and Cisco will bring their “extensive” experience in creating large-scale AI solutions and high-performance computing systems to the group. As demand for AI computing continues to grow rapidly, a robust, low-latency, scalable network that can efficiently share computing resources is crucial for future AI infrastructure.

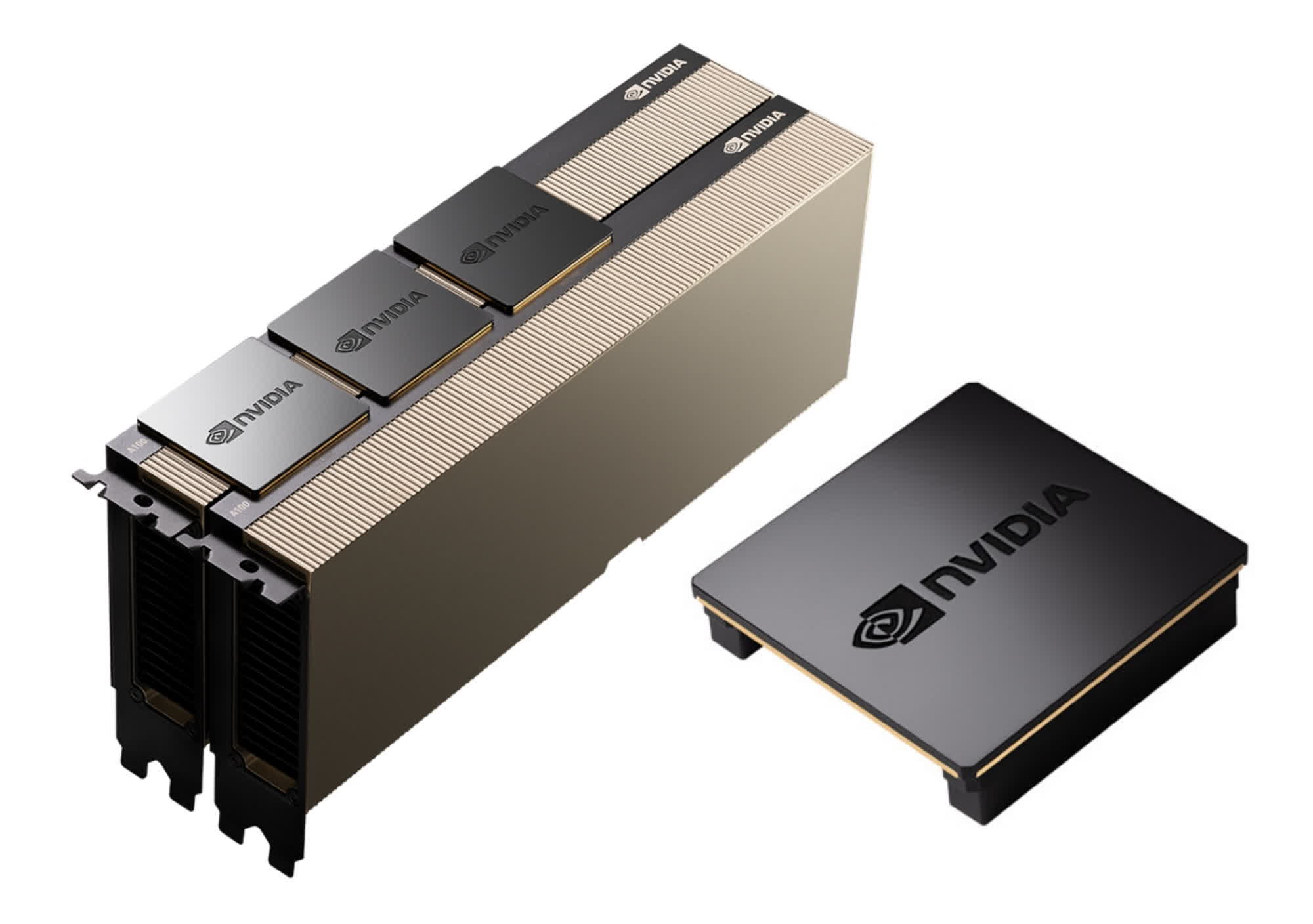

Currently, Nvidia provides the most powerful accelerators to power the largest AI models. Its NVLink technology helps facilitate rapid data exchange between the hundreds of GPUs installed in these AI server clusters. UALink hopes to define a standard interface for AI and machine learning, HPC, and cloud computing, with high-speed, low-latency communications for all brands of AI accelerators, not just Nvidia’s.

The group expects an initial 1.0 specification to land during the third quarter of 2024. The standard will enable communications for 1,024 accelerators within an “AI computing pod,” allowing GPUs to perform loads and stores directly between their attached memory.

AMD VP Forrest Norrod noted that the work the UALink group is doing is essential for the future of AI applications. Likewise, Broadcom said it was “proud” to be a founding member of the UALink consortium to support an open ecosystem for AI connectivity.
