
Image by Author

Running LLMs (Large Language Models) locally has become popular because it provides security, privacy, and more control over model outputs. In this mini tutorial, we learn the easiest way to download and use the Llama 3 model.

Llama 3 is Meta AI’s latest family of LLMs. It is open-source, comes with advanced AI capabilities, and Meta reports that it improves response generation compared to models such as Gemma, Gemini, and Claude 3.

What’s Ollama?

Ollama (ollama/ollama on GitHub) is an open-source tool for using LLMs like Llama 3 on your local machine. Thanks to recent research and development, many of these large language models no longer require large amounts of VRAM, compute, or storage. Instead, they are optimized to run on laptops.

There are several tools and frameworks available for running LLMs locally, but Ollama is the easiest to set up and use. It lets you use LLMs directly from a terminal or PowerShell. It is fast, and its core features let you start using it right away.

The best part of Ollama is that it integrates with all kinds of software, extensions, and applications. For example, you can use the CodeGPT extension in VS Code and connect it to Ollama to start using Llama 3 as your AI code assistant.
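One way other programs can use Ollama is through the local HTTP server it runs alongside the chat interface. By default it listens on port 11434, and its /api/generate endpoint accepts a JSON body with model, prompt, and stream fields. The sketch below uses only Python's standard library and assumes the server is running at that default address with llama3 already downloaded; build_generate_payload and generate are hypothetical helper names, not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama's server is running locally on its default port.
OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate"


def build_generate_payload(prompt: str, model: str = "llama3") -> bytes:
    """Encode a non-streaming /api/generate request body as UTF-8 JSON."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(body).encode("utf-8")


def generate(prompt: str, model: str = "llama3",
             url: str = OLLAMA_GENERATE_URL) -> str:
    """POST one prompt to the local server and return the generated text."""
    request = urllib.request.Request(
        url,
        data=build_generate_payload(prompt, model),
        headers={"Content-Type": "application/json"},
    )  # supplying data makes urllib send a POST request
    with urllib.request.urlopen(request) as response:
        # With "stream": False the server replies with a single JSON object
        # whose "response" field holds the complete answer.
        return json.loads(response.read())["response"]
```

With the server running, generate("Describe a day in the life of a Data Scientist.") returns the model's answer as a single string. Turning streaming off keeps the sketch short; an editor extension would more likely stream tokens as they arrive.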

Installing Ollama

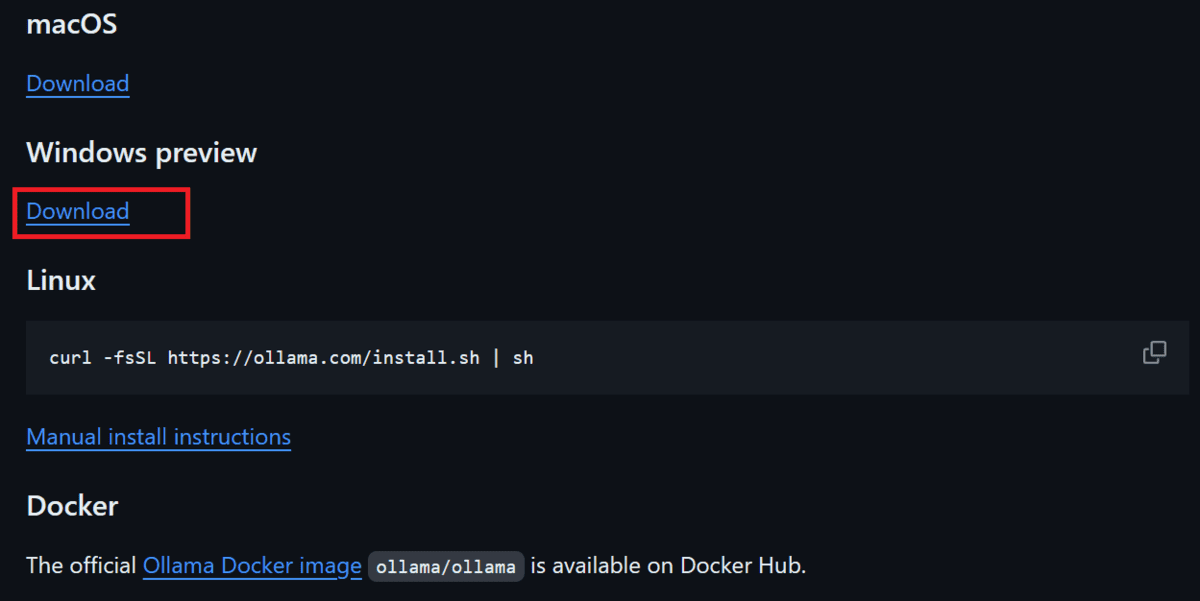

Download and install Ollama by going to the GitHub repository ollama/ollama, scrolling down, and clicking the download link for your operating system.

Image from ollama/ollama | Download options for various operating systems

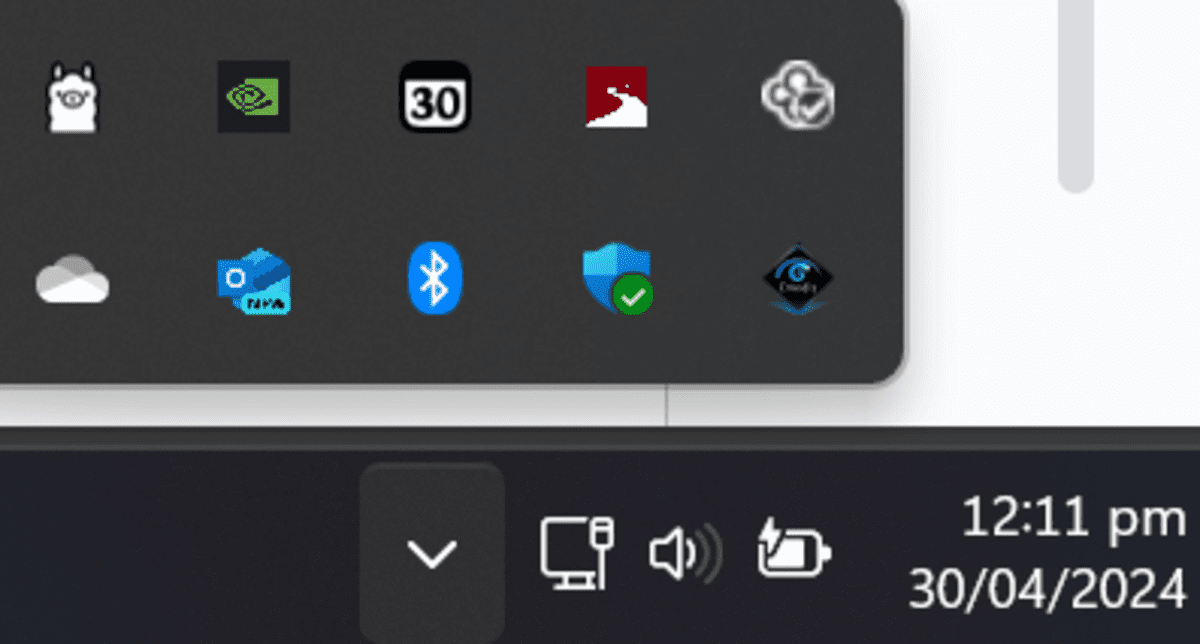

After Ollama is successfully installed, it will appear in the system tray, as shown below.

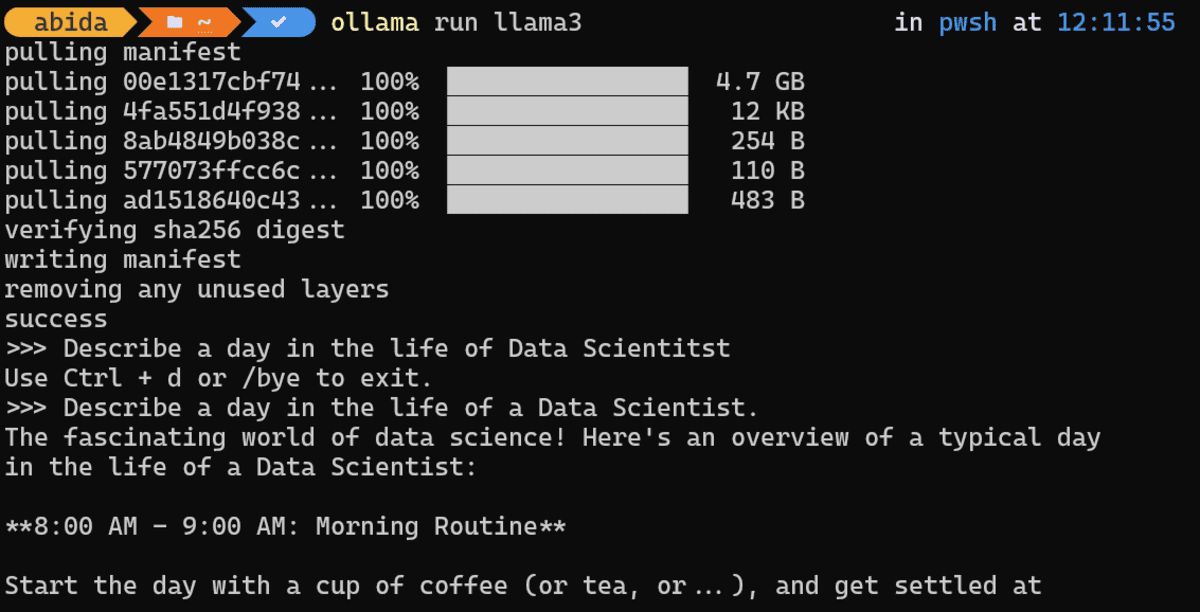

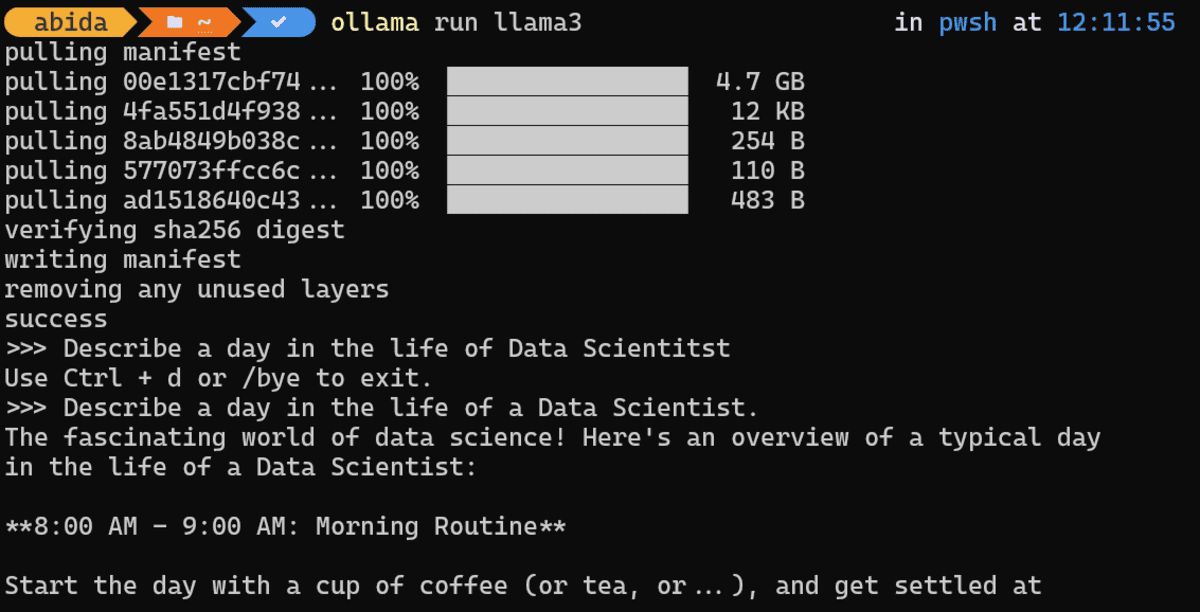

Downloading and Using Llama 3

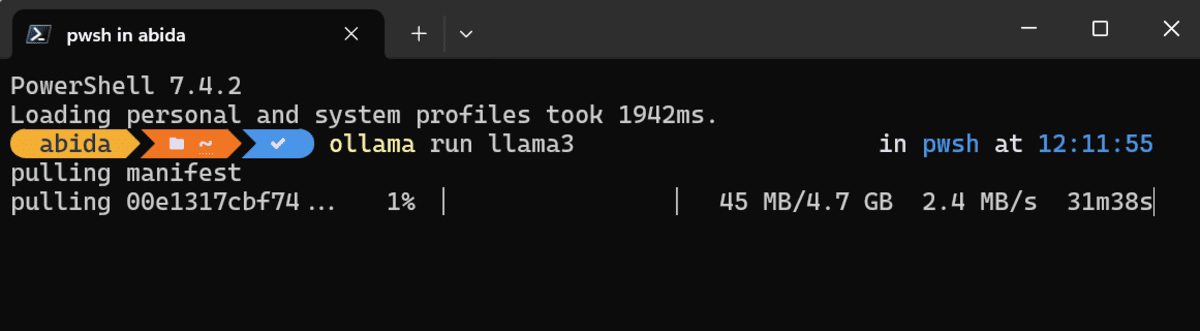

To download the Llama 3 model and start using it, type the following command in your terminal/shell:

ollama run llama3

Depending on your internet speed, it may take almost 30 minutes to download the 4.7GB model.

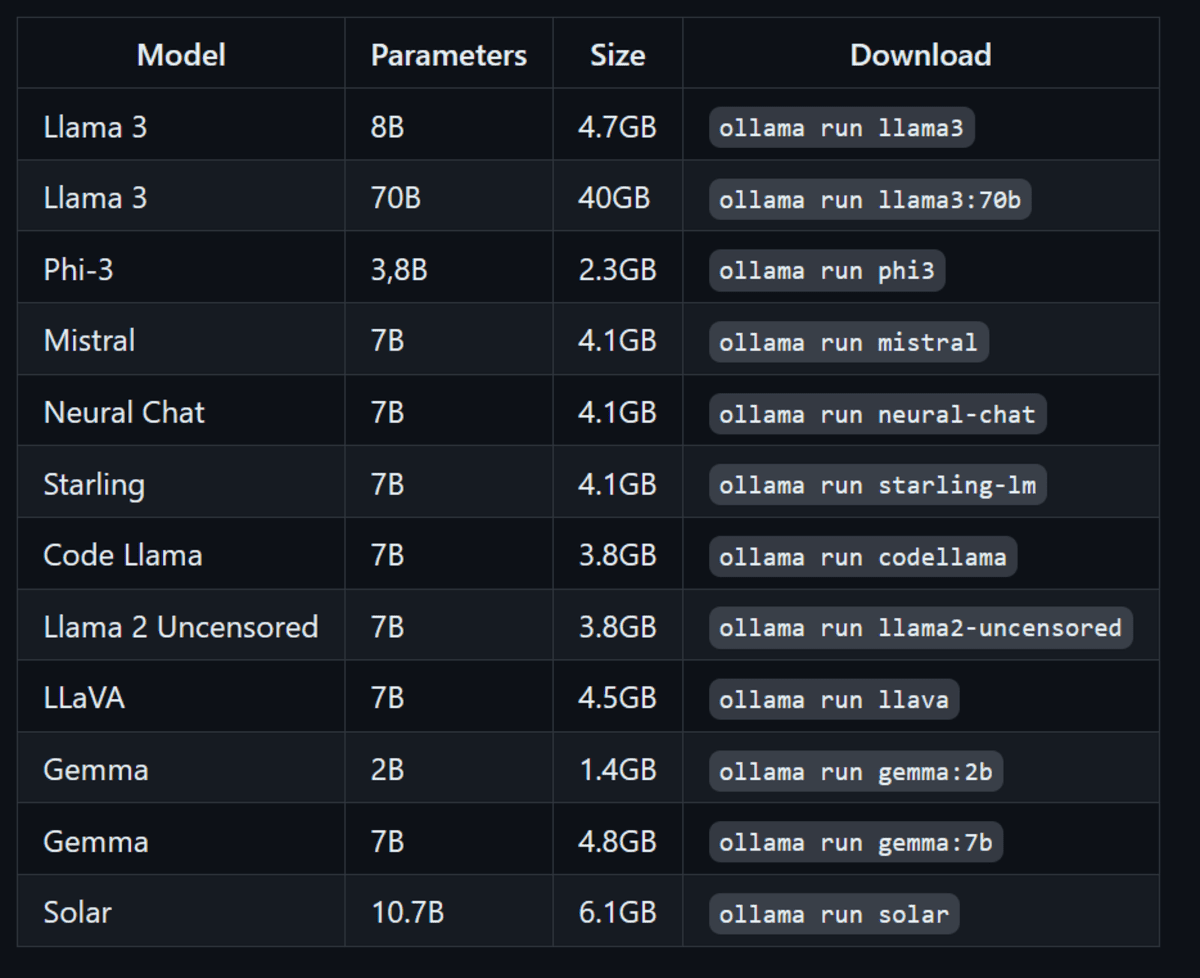

Apart from the Llama 3 model, you can also install other LLMs by typing the commands below.

Image from ollama/ollama | Running other LLMs using Ollama
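If you would rather download several models ahead of time without opening a chat for each one, the Ollama CLI's pull command fetches a model without running it. The sketch below scripts that with Python's subprocess module. The names in MODELS are illustrative examples from the Ollama model library at the time of writing and may change, and pull_command and pull_all are hypothetical helpers.

```python
import subprocess

# Illustrative model names from the Ollama library; check the library for current ones.
MODELS = ["llama3", "phi3", "mistral", "gemma"]


def pull_command(model: str) -> list[str]:
    """Return the CLI call that downloads a model without starting a chat."""
    return ["ollama", "pull", model]


def pull_all(models: list[str]) -> None:
    """Download each model in turn, stopping at the first failure."""
    for model in models:
        # check=True raises subprocess.CalledProcessError on a non-zero exit code.
        subprocess.run(pull_command(model), check=True)
```

Calling pull_all(MODELS) downloads the four models one after another; afterwards, a command such as ollama run phi3 starts a chat without waiting for a download.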

As soon as the download completes, you will be able to use Llama 3 locally just as if you were using it online.

Prompt: “Describe a day in the life of a Data Scientist.”

To demonstrate how fast the response generation is, I have attached a GIF of Ollama generating Python code and then explaining it.

Note: If you have an Nvidia GPU in your laptop and CUDA installed, Ollama will automatically use the GPU instead of the CPU to generate responses, which can make generation roughly 10 times faster.
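To put a number on the CPU-versus-GPU difference on your own machine rather than judging by eye, you can compute a tokens-per-second figure. The JSON that Ollama's /api/generate endpoint returns (with streaming turned off) includes eval_count, the number of generated tokens, and eval_duration, the generation time in nanoseconds. The helper below is a hypothetical function, not part of Ollama, and assumes you already have that response parsed into a Python dict.

```python
def tokens_per_second(stats: dict) -> float:
    """Generation speed from a parsed Ollama /api/generate response.

    eval_count is the number of tokens generated and eval_duration is the
    time spent generating them, in nanoseconds.
    """
    duration_ns = stats.get("eval_duration", 0)
    if duration_ns <= 0:
        raise ValueError("response has no positive eval_duration")
    # Multiply before dividing to convert nanoseconds to seconds.
    return stats["eval_count"] * 1e9 / duration_ns


# 300 tokens generated in 10 seconds (10_000_000_000 ns).
print(tokens_per_second({"eval_count": 300, "eval_duration": 10_000_000_000}))  # 30.0
```

Running the same prompt once with the GPU in use and once on the CPU, and comparing the two figures, gives a fairer comparison than a single timing of the whole request, which also includes model loading.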

Prompt: “Write a Python code for building the digital clock.”

You can exit the chat by typing /bye and then start it again by typing ollama run llama3.
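The same chat-then-/bye workflow can be reproduced in your own program. The sketch below is a minimal terminal loop built on Ollama's /api/chat endpoint, which receives the full list of previous messages so the model keeps conversational context. It assumes the local server is running on the default port 11434 with llama3 already downloaded; the /bye check is handled by this script itself, not by the API, and all function names are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama's server is running locally on its default port.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"
EXIT_COMMAND = "/bye"  # imitates the command used to leave `ollama run`


def is_exit(text: str) -> bool:
    """True when the user typed the exit command (surrounding spaces ignored)."""
    return text.strip() == EXIT_COMMAND


def add_turn(history: list[dict], role: str, content: str) -> list[dict]:
    """Return a new history with one message appended (input is not mutated)."""
    return history + [{"role": role, "content": content}]


def ask(history: list[dict], model: str = "llama3",
        url: str = OLLAMA_CHAT_URL) -> str:
    """Send the whole conversation to /api/chat and return the reply text."""
    body = json.dumps({"model": model, "messages": history, "stream": False})
    request = urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        # Non-streaming replies carry the answer under message.content.
        return json.loads(response.read())["message"]["content"]


def chat(model: str = "llama3") -> None:
    """Minimal chat loop that keeps context until /bye is typed."""
    history: list[dict] = []
    while True:
        user_text = input(">>> ")
        if is_exit(user_text):
            break
        history = add_turn(history, "user", user_text)
        reply = ask(history, model)
        print(reply)
        history = add_turn(history, "assistant", reply)
```

Calling chat() gives a prompt similar to ollama run llama3; because the history lives in this script, it is lost when the loop exits, just as restarting the CLI starts a fresh conversation.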

Final Thoughts

Open-source frameworks and models have made AI and LLMs accessible to everyone. Instead of AI being controlled by a few companies, locally run tools like Ollama make it available to anyone with a laptop.

Using LLMs locally provides privacy, security, and more control over response generation. Moreover, you do not have to pay for any service. You can even create your own AI-powered coding assistant and use it in VS Code.

If you want to learn about other applications for running LLMs locally, then you should read 5 Ways To Use LLMs On Your Laptop.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master’s degree in technology management and a bachelor’s degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.
