Image by Author

Large Language Models have revolutionized the Natural Language Processing field, offering unprecedented capabilities in tasks like language translation, sentiment analysis, and text generation.

However, training such models is both time-consuming and expensive. This is why fine-tuning has become a crucial step for tailoring these advanced algorithms to specific tasks or domains.

Just to make sure we’re on the same page, we need to recall two concepts:

- Pre-trained language models

- Fine-tuning

So let’s break down these two concepts.

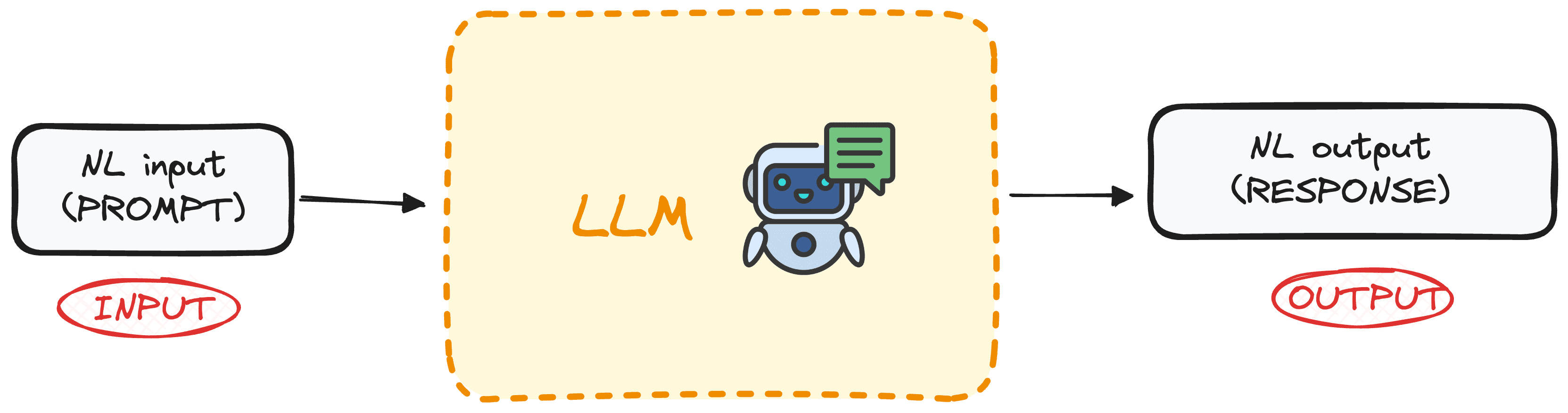

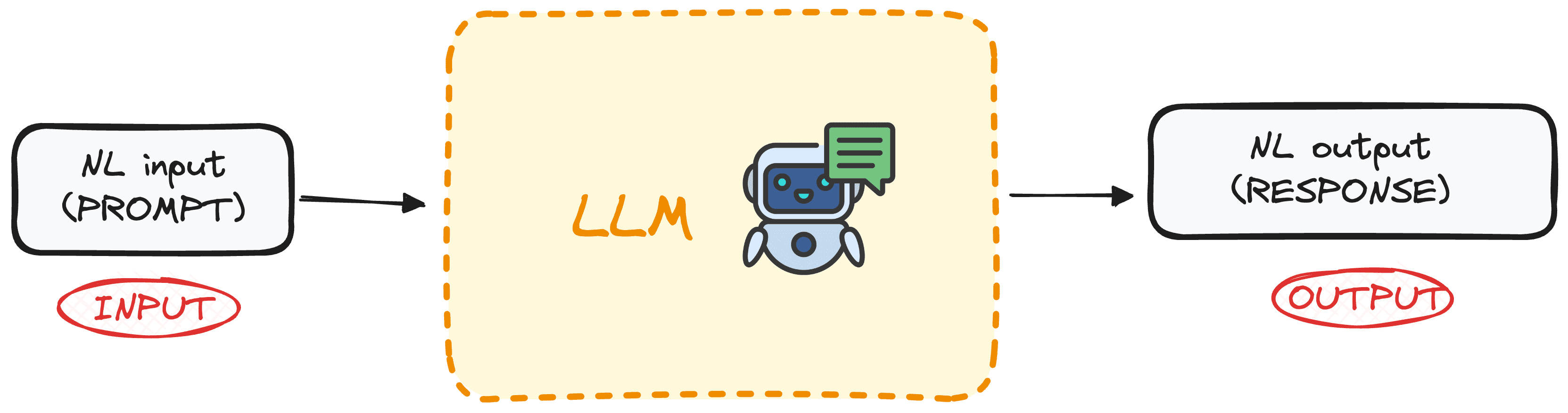

What is a Pre-trained Large Language Model?

LLMs are a specific category of Machine Learning models designed to predict the next word in a sequence based on the context provided by the previous words. These models are based on the Transformer architecture and are trained on extensive text data, enabling them to understand and generate human-like text.
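To make "predict the next word from the previous words" concrete, here is a minimal sketch of a toy next-word predictor. It is an assumption-laden illustration, not how an LLM works internally: it simply counts which word follows which in a tiny made-up corpus, whereas real LLMs use Transformer networks over subword tokens. The function names and the corpus are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follower_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follower_counts[current_word][next_word] += 1
    return follower_counts


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Tiny made-up corpus, for illustration only.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigram_model(corpus)

print(predict_next_word(model, "the"))
print(predict_next_word(model, "dog"))
```

The same idea scales up in an LLM: the model assigns a score to every candidate next token given the context, except that the context is the whole preceding text rather than a single word.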

The best part of this new technology is its democratization, as most of these models are released under open-source licenses or are accessible through APIs at low cost.

Image by Author

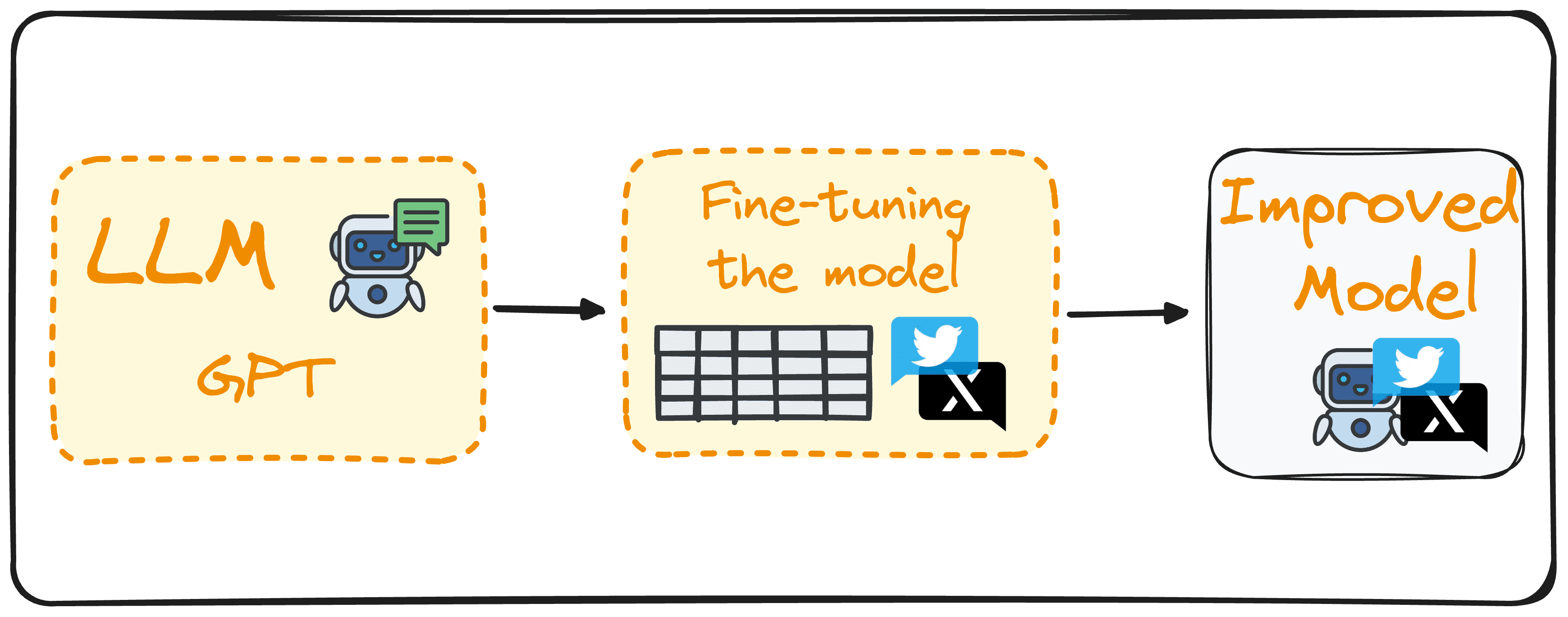

What is Fine-tuning?

Fine-tuning involves using a Large Language Model as a base and further training it with a domain-specific dataset to enhance its performance on specific tasks.

Let’s take as an example a model that detects sentiment in tweets. Instead of creating a new model from scratch, we could take advantage of the natural language capabilities of GPT-3 and further train it with a dataset of tweets labeled with their corresponding sentiment.

This would improve the model on our specific task of detecting sentiment in tweets.

This process reduces computational costs, eliminates the need to develop new models from scratch, and makes the models more effective for real-world applications tailored to specific needs and goals.

Image by Author

So now that we know the basics, you can learn how to fine-tune your model following these 7 steps.

Different Approaches to Fine-tuning

Fine-tuning can be implemented in different ways, each tailored to specific objectives and focuses.

Supervised Fine-tuning

This common method involves training the model on a labeled dataset relevant to a specific task, like text classification or named entity recognition. For example, a model could be trained on texts labeled with sentiments for sentiment analysis tasks.
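The mechanics of supervised fine-tuning can be sketched in miniature: start from existing weights and keep training on labeled examples. In the sketch below, a four-weight logistic regression stands in for the pre-trained model, which is a deliberate simplification; real LLM fine-tuning updates millions or billions of parameters with a deep-learning framework. The vocabulary, the "pre-trained" weights, and the labeled tweets are all hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def featurize(text, vocabulary):
    """Bag-of-words counts for the words in the vocabulary."""
    words = text.lower().split()
    return [float(words.count(term)) for term in vocabulary]


def predict_proba(weights, bias, features):
    """Probability that the text is positive."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def fine_tune(weights, bias, examples, learning_rate=0.5, epochs=200):
    """Continue gradient descent from the given weights on labeled examples."""
    weights = list(weights)  # copy so the starting weights stay untouched
    for _ in range(epochs):
        for features, label in examples:
            error = predict_proba(weights, bias, features) - label
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


vocabulary = ["love", "great", "hate", "awful"]
pretrained_weights = [0.1, 0.1, -0.1, -0.1]  # hypothetical starting point
pretrained_bias = 0.0

# Hypothetical labeled dataset: 1 = positive, 0 = negative.
labeled_tweets = [
    ("i love this phone", 1),
    ("great service today", 1),
    ("i hate waiting", 0),
    ("awful customer support", 0),
]
examples = [(featurize(text, vocabulary), label) for text, label in labeled_tweets]

weights, bias = fine_tune(pretrained_weights, pretrained_bias, examples)

query = featurize("i love it", vocabulary)
before = predict_proba(pretrained_weights, pretrained_bias, query)
after = predict_proba(weights, bias, query)
print(f"positive probability before: {before:.2f}, after: {after:.2f}")
```

The key point is that `fine_tune` receives the starting weights as an argument instead of initializing them randomly, which is what distinguishes fine-tuning from training from scratch.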

Few-shot Learning

In situations where it's not feasible to gather a large labeled dataset, few-shot learning comes into play. This method uses only a few examples to give the model a context of the task, thus bypassing the need for extensive fine-tuning.
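One common way to apply this idea is to place the handful of examples directly in the prompt, so the model sees the task format without any weight updates. The sketch below only builds such a prompt as a string; the `build_few_shot_prompt` helper, the "Tweet:"/"Sentiment:" layout, and the example tweets are assumptions for illustration, and sending the prompt to a model is left out.

```python
def build_few_shot_prompt(examples, query, instruction):
    """Assemble an instruction, a few labeled examples, and the new input."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Tweet: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The final entry is left unlabeled for the model to complete.
    lines.append(f"Tweet: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


# Hypothetical examples, for illustration only.
few_shot_examples = [
    ("I love the new update", "positive"),
    ("The app keeps crashing", "negative"),
]

prompt = build_few_shot_prompt(
    few_shot_examples,
    query="Battery life is amazing",
    instruction="Classify the sentiment of each tweet as positive or negative.",
)
print(prompt)
```

Keeping every example in exactly the same format as the final, unlabeled entry makes it easier for the model to infer what kind of completion is expected.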

Transfer Learning

While all fine-tuning is a form of transfer learning, this specific category is designed to enable a model to tackle a task different from its initial training. It uses the broad knowledge acquired from a general dataset and applies it to a more specialized or related task.

Domain-specific Fine-tuning

This approach focuses on preparing the model to comprehend and generate text for a specific industry or domain. By fine-tuning the model on text from a targeted domain, it gains better context and expertise in domain-specific tasks. For instance, a model might be trained on medical records to tailor a chatbot specifically for a medical application.

Best Practices for Effective Fine-tuning

To perform a successful fine-tuning, some key practices need to be considered.

Data Quality and Quantity

The performance of a model during fine-tuning greatly depends on the quality of the dataset used. Always keep in mind:

Garbage in, garbage out.

Therefore, it's crucial to use clean, relevant, and adequately large datasets for training.
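As a minimal sketch of what "clean" can mean in practice, the snippet below normalizes whitespace, drops records that are unlabeled or too short, and removes case-insensitive duplicates. The `clean_dataset` helper, the `min_words` threshold, and the sample records are hypothetical; real projects usually need domain-specific checks on top of these.

```python
def clean_dataset(records, min_words=2):
    """Return (text, label) pairs that are labeled, long enough, and unique."""
    seen = set()
    cleaned = []
    for text, label in records:
        normalized = " ".join(text.split())  # collapse repeated whitespace
        if label is None or len(normalized.split()) < min_words:
            continue  # skip unlabeled or near-empty records
        key = normalized.lower()
        if key in seen:
            continue  # skip case-insensitive duplicates
        seen.add(key)
        cleaned.append((normalized, label))
    return cleaned


# Hypothetical raw records, for illustration only.
raw_records = [
    ("Great  phone!", "positive"),
    ("great phone!", "positive"),
    ("", "negative"),
    ("Terrible battery life", None),
    ("Terrible battery life", "negative"),
]

cleaned_records = clean_dataset(raw_records)
print(cleaned_records)
```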

Hyperparameter Tuning

Fine-tuning is an iterative process that often requires adjustments. Experiment with different learning rates, batch sizes, and training durations to find the optimal configuration for your project.

Precise tuning is essential for learning efficiently and adapting to new data, and it helps to avoid overfitting.
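The experimentation described above can be organized as a simple grid search: try every combination of candidate values and keep the one with the lowest validation loss. In this sketch, `validation_loss` is a hypothetical stand-in for "fine-tune with this configuration, then evaluate on the validation set", and the candidate values are assumptions chosen only to make the example run.

```python
from itertools import product


def validation_loss(learning_rate, batch_size, epochs):
    """Hypothetical stand-in for a real fine-tune-and-evaluate run."""
    return (abs(learning_rate - 3e-5) * 1e4
            + abs(batch_size - 16) / 16
            + abs(epochs - 3) * 0.1)


# Candidate values are illustrative assumptions, not recommendations.
search_space = {
    "learning_rate": [1e-5, 3e-5, 5e-5],
    "batch_size": [8, 16, 32],
    "epochs": [2, 3, 4],
}


def grid_search(space, score_fn):
    """Evaluate every combination and return the lowest-scoring one."""
    names = list(space)
    best_config, best_score = None, float("inf")
    for values in product(*(space[name] for name in names)):
        config = dict(zip(names, values))
        score = score_fn(**config)
        if score < best_score:
            best_config, best_score = config, score
    return best_config, best_score


best_config, best_score = grid_search(search_space, validation_loss)
print(best_config, best_score)
```

Because each real evaluation means a full fine-tuning run, grids are usually kept small; random search or a dedicated tuning library are common alternatives when the search space grows.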

Regular Evaluation

Continuously monitor the model’s performance throughout the training process using a separate validation dataset.

This regular evaluation helps track how well the model is performing on the intended task and checks for any signs of overfitting. Adjustments should be made based on these evaluations to fine-tune the model’s performance effectively.
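One simple way to act on these evaluations is early stopping: track the validation loss after each epoch and stop once it has not improved for a set number of epochs. The sketch below assumes the per-epoch validation losses are already available as a list; the `early_stopping_epoch` helper, the `patience` value, and the loss numbers are hypothetical.

```python
def early_stopping_epoch(validation_losses, patience=2):
    """Return (stop_epoch, best_epoch), both 0-indexed.

    Training stops at the first epoch where the validation loss has failed
    to improve for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(validation_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Patience was never exhausted: training ran to the last epoch.
    return len(validation_losses) - 1, best_epoch


# Hypothetical validation losses: improving at first, then rising.
losses = [0.92, 0.71, 0.60, 0.58, 0.61, 0.66, 0.74]
stop_epoch, best_epoch = early_stopping_epoch(losses)
print(f"stop at epoch {stop_epoch}, keep checkpoint from epoch {best_epoch}")
```

A validation loss that rises while the training loss keeps falling is the typical sign of overfitting, so the checkpoint from `best_epoch` is usually the one worth keeping.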

Navigating Pitfalls in LLM Fine-Tuning

This process can lead to unsatisfactory outcomes if certain pitfalls are not avoided:

Overfitting

Training the model on a small dataset or for too many epochs can lead to overfitting. This causes the model to perform well on training data but poorly on unseen data, and therefore to have low accuracy in real-world applications.

Underfitting

Underfitting occurs when the training is too brief or the learning rate is set too low, resulting in a model that doesn't learn the task effectively. The resulting model does not know how to perform our specific task.

Catastrophic Forgetting

When fine-tuning a model on a specific task, there's a risk of the model forgetting the broad knowledge it originally had. This phenomenon, known as catastrophic forgetting, reduces the model’s effectiveness across diverse tasks, especially when considering natural language skills.

Data Leakage

Ensure that your training and validation datasets are completely separate to avoid data leakage. Overlapping datasets can falsely inflate performance metrics, giving an inaccurate measure of model effectiveness.
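A quick overlap check before training can catch the most obvious form of leakage. The sketch below compares texts after lowercasing and collapsing whitespace, then removes validation examples that also appear in the training set. The helper names and sample texts are hypothetical, and this only detects exact matches after normalization; near-duplicates need fuzzier comparison.

```python
def normalize(text):
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return " ".join(text.lower().split())


def find_leaked_examples(train_texts, validation_texts):
    """Return validation texts that also appear in the training set."""
    train_keys = {normalize(text) for text in train_texts}
    return [text for text in validation_texts if normalize(text) in train_keys]


def remove_leaked_examples(train_texts, validation_texts):
    """Return the validation set without examples seen during training."""
    train_keys = {normalize(text) for text in train_texts}
    return [text for text in validation_texts if normalize(text) not in train_keys]


# Hypothetical splits, for illustration only.
train_texts = ["I love this phone", "Worst purchase ever", "Decent camera"]
validation_texts = ["i love  this phone", "Fast delivery", "Decent camera"]

leaked = find_leaked_examples(train_texts, validation_texts)
clean_validation = remove_leaked_examples(train_texts, validation_texts)
print(leaked)
print(clean_validation)
```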

Final Thoughts and Future Steps

Starting the process of fine-tuning large language models presents a huge opportunity to improve the current state of models for specific tasks.

By grasping and implementing the detailed concepts, best practices, and necessary precautions, you can successfully customize these robust models to suit specific requirements, thereby fully leveraging their capabilities.

Josep Ferrer is an analytics engineer from Barcelona. He graduated in physics engineering and is currently working in the data science field applied to human mobility. He is a part-time content creator focused on data science and technology. Josep writes on all things AI, covering the application of the ongoing explosion in the field.