
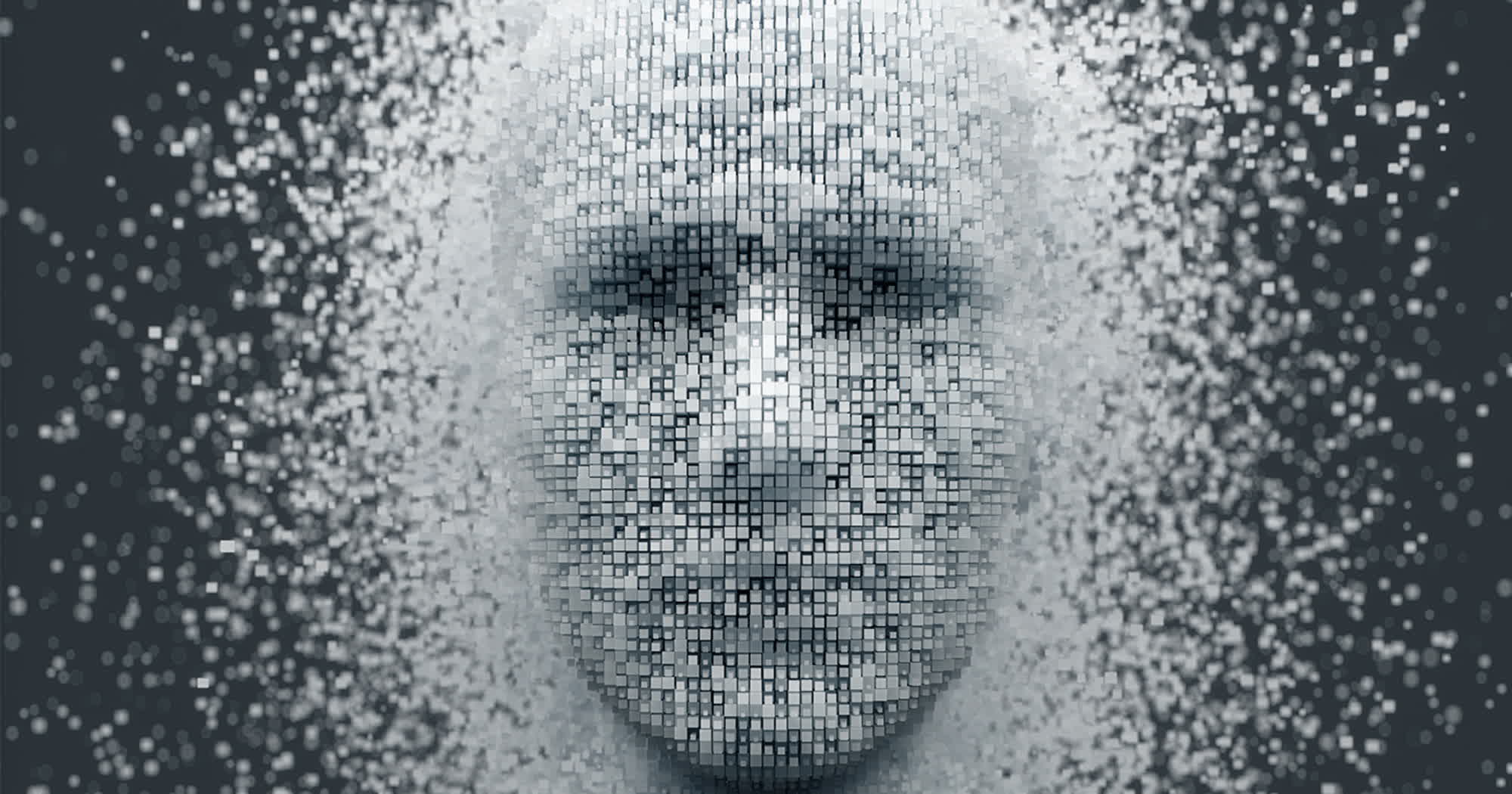

Dystopian Kurzweil: As Big Tech continues frantically pushing AI development and funding, many users have become concerned about the consequences and dangers of the latest AI advances. However, one man is more than sold on AI's ability to bring humanity to its next evolutionary level.

Raymond Kurzweil is a well-known computer scientist, author, and artificial intelligence enthusiast. Over the years, he has promoted radical concepts such as transhumanism and the technological singularity, in which humanity and advanced technology merge to create an evolved hybrid species. Kurzweil's latest predictions on AI and the future of tech in general double down on predictions he made twenty years ago.

In a recent interview with the Guardian, Kurzweil discussed his latest book, "The Singularity Is Nearer," a sequel to his bestselling 2005 book, "The Singularity Is Near: When Humans Transcend Biology." Kurzweil had predicted that AI would reach human-level intelligence by 2029, with the merging of computers and humans (the singularity) happening in 2045. Now that AI has become the most talked-about topic in tech, he believes his predictions still hold.

Kurzweil believes that within five years, machine learning will match the abilities of the most skilled humans in almost every field. A few "top humans" capable of writing Oscar-level screenplays or conceiving deep new philosophical insights will still be able to beat AI, but everything will change once artificial general intelligence (AGI) finally surpasses humans at everything.

In his view, taking large language models (LLMs) to the next level simply requires more computing power. Kurzweil noted that today's computing paradigm is "basically good," and it will only keep getting better over time. He doesn't believe that quantum computing will turn the world upside down, arguing that there are still plenty of ways to keep improving modern chips, such as 3D and vertically stacked designs.

Kurzweil predicts that machine-learning engineers will eventually solve the problems caused by hallucinations, uncanny AI-generated images, and other AI anomalies with more advanced algorithms trained on more data. In his view, the singularity is still on track and will arrive once people begin merging their brains with the cloud. Developments in brain-computer interfaces (BCIs) are already underway. These BCIs, eventually made up of nanobots that enter the brain "noninvasively" through the capillaries, will give humans a mix of natural and cybernetic intelligence.

Kurzweil's imaginative streak as an author and enthusiastic transhumanist is easy to see. Science still hasn't found an effective way to deliver drugs directly into the brain, because human physiology doesn't work the way the futurist thinks it does. Nevertheless, he remains confident that nanobots will make humans "a millionfold" more intelligent within the next twenty years.

Kurzweil concedes that AI will transform society and create a global automated economy. People will lose jobs, but they will also adapt to the new roles and opportunities that advanced tech brings, and a universal basic income will help ease the pain. He expects the first tangible transformative plans to emerge in the 2030s. In his view, the inevitable singularity will allow humans to live forever, or at least to extend their lifespans indefinitely. Technology could even resurrect the dead through AI avatars and virtual reality.

Kurzweil says people are misdirecting their worries about AI.

"It's not going to be us versus AI: AI is going inside ourselves," he said. "It will allow us to create new things that weren't possible before. It's going to be a pretty fantastic future."
