
In 2023, I had the pleasure of working with a group of talented interns who joined the Software Engineering Institute (SEI) for the summer to gain insight into the research we conduct and experience in applying the principles we study and develop. This SEI blog post, authored by the interns themselves, describes their work on an example case in which they designed and implemented a microservices-based software application along with an accompanying DevSecOps pipeline. In the process, they expanded the concept of minimum viable product to minimum viable process. I’d like to thank the following interns for their hard work and contributions:

- Evan Chen, University of California, San Diego

- Zoe Angell, Carnegie Mellon University

- Emily Wolfe and Sam Shadle, Franciscan University of Steubenville

- Meryem Marasli and Berfin Bircan, University of Pittsburgh

- Genavive Chick, University of Georgia

Demonstrating DevSecOps Practices in a Real-World Development Environment

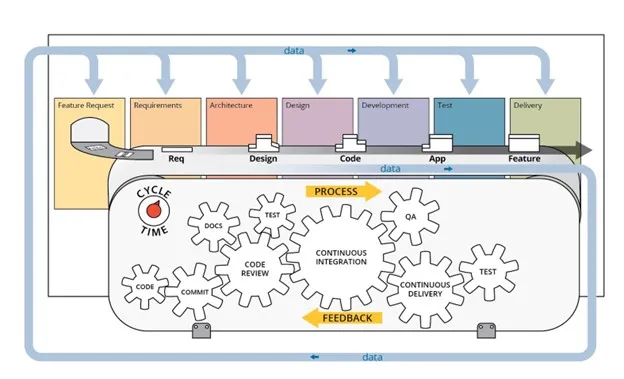

DevSecOps is a set of principles and practices that fosters a collaborative environment in which developers, security professionals, IT operations, and other stakeholders work together to produce reliable and secure software. The DevSecOps pipeline can be thought of as the machinery that enables stakeholders to collaborate and enforce standards or policies in an automated fashion. Both an application and a continuous integration/continuous deployment (CI/CD) pipeline must be configured to specifically support a software development lifecycle (SDLC), because a particular software architecture may require a unique set of CI/CD capabilities (Figure 1).

Our goal during our time at the SEI was to implement DevSecOps for a web-based application using a CI/CD pipeline. This pipeline automates procedures to enable better communication, environment parity, testing, and security to meet stakeholder needs. In conducting this work, we learned just how hard it is to implement proper DevSecOps practices: We found it was easy to get sidetracked and lose sight of the capabilities the project required. Taking on these challenges taught us many lessons.

Figure 1: DevSecOps Process Breakdown

Development Approach: Minimum Viable Process

The term minimum viable product (MVP) refers to the minimum set of features a product needs to work in its most basic form. Articulating an MVP is powerful because it strips unnecessary complexity from a product while its basic architecture is being defined and developed. Taking a DevSecOps approach to our software development lifecycle shifted our focus from producing an MVP to creating a minimum viable process instead.

A minimum viable process involves defining and implementing the stages needed to create a functioning CI/CD pipeline. By implementing a minimum viable process before jumping into development, we engaged all stakeholders early. As a result, the development, security, and operations groups could work together to decide the architecture of the application, including the needed libraries, packages, security measures, and where the application would be served.

With a minimum viable process in place, major problems with the application (e.g., a vulnerable library) could be addressed at the beginning of the development cycle rather than after months of development. Finding and correcting problems early is known to save both time and money. Another side effect of a minimum viable process is that it surfaces training and personnel needs, along with data and metrics for continuous improvement. Our team followed these guidelines by building a functioning pipeline before adding features to the microservice architecture.

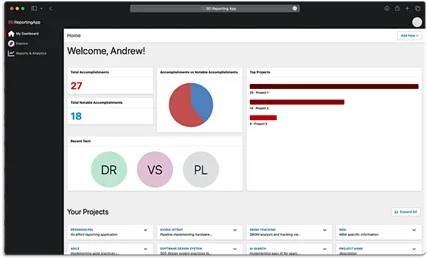

Example Case: Internal Reporting

A DevSecOps pipeline is not nearly as interesting without an application to try it on, so we chose a project that was both interesting and useful for the team. Our SEI group hoped to move its current process for producing weekly, monthly, and yearly reports from manually edited, wiki-based text documents (Figure 2) to a purpose-built web app (Figure 3). Our goal was to design, develop, and deliver a microservice-based web application along with the CI/CD tooling, automation, and other capabilities needed to produce a quality product.

Figure 2: A Snapshot of the Team’s Current Reporting Method

We built the optimized reporting application shown in Figure 3 using a PostgreSQL back end with Tortoise ORM and Pydantic serialization, a VueJS front end, and FastAPI to support the application’s connection from old to new. Most importantly, we did not start any development on the web app until the pipeline, built using DevSecOps best practices, was finished.

Figure 3: Our Optimized Reporting Application

Our Pipeline: Five Stages

Our pipeline consisted of five stages:

- Static Analysis

- Docker Build

- SBOM Analysis

- Secure Signing

- Docker Push

The following sections describe these five stages.

Figure 4: Pipeline Stages and Jobs in GitLab

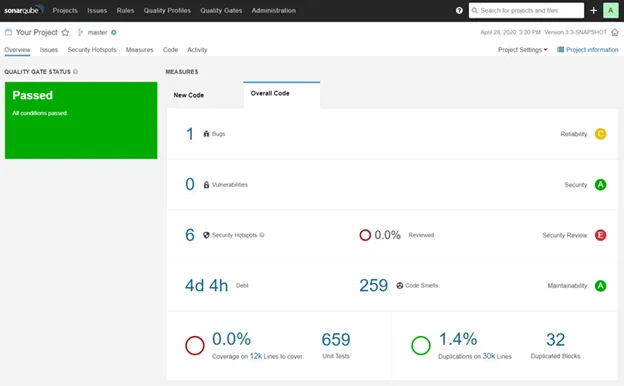
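Ordering matters because each stage gates the next. For example, an image that fails static analysis should never reach signing or the registry. The Python sketch below models that fail-fast behavior. It is an illustration only. The stage names are our own labels, and the stage bodies are placeholders. In GitLab the ordering comes from the `stages` keyword in the CI configuration, not from code like this.

```python
from typing import Callable, List, Tuple

# Each stage is a (name, job) pair; a job returns True on success.
# Assumption: in the real pipeline these are GitLab CI jobs, not Python callables.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order, stopping at the first failure.

    Returns the names of the stages that actually ran, so the caller
    can see where the pipeline halted.
    """
    ran: List[str] = []
    for name, job in stages:
        ran.append(name)
        if not job():
            print(f"stage '{name}' failed; skipping the remaining stages")
            break
    return ran


# Placeholder jobs standing in for the five stages described above.
STAGES: List[Stage] = [
    ("static-analysis", lambda: True),
    ("docker-build", lambda: True),
    ("sbom-analysis", lambda: True),
    ("secure-signing", lambda: True),
    ("docker-push", lambda: True),
]

if __name__ == "__main__":
    print(run_pipeline(STAGES))
```

If any job returns False, the list returned by `run_pipeline` ends at that stage. This mirrors how a failed job in an early GitLab stage keeps later stages from running by default.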

Stage 1: Static Analysis with SonarQube

Static analysis examines the code in place, without executing it, for linting, formatting, and bug discovery. Initially, we set up separate linting tools specific to the languages we were using: Python and VueJS. The five tools we used were:

These tools had overlapping capabilities, produced conflicting results, offered limited debugging functionality, and each required separate configuration. To streamline this configuration process, we switched from five individual tools to just one, SonarQube. SonarQube is an all-in-one tool that provided vulnerability and bug checks against an up-to-date database, linting, and formatting, all viewable in a convenient dashboard (Figure 5). This solution was easier to implement, provided enhanced functionality, and simplified integration with our CI/CD pipeline.

Figure 5: SonarQube Dashboard

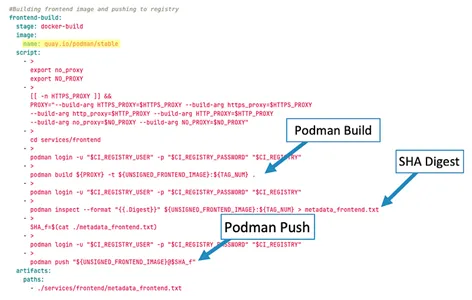
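SonarQube can act as a gate in the pipeline, not only as a dashboard. The minimal sketch below assumes the pipeline has already fetched the JSON response from SonarQube’s `api/qualitygates/project_status` Web API endpoint. The HTTP call, the authentication token, and the project key are left out. The sample response is hand-written for illustration, and the Python decides whether the job should fail.

```python
import json
from typing import List

# Hand-written sample shaped like a response from SonarQube's
# api/qualitygates/project_status endpoint (values are illustrative).
SAMPLE_RESPONSE = """
{
  "projectStatus": {
    "status": "ERROR",
    "conditions": [
      {"status": "OK", "metricKey": "new_coverage",
       "comparator": "LT", "errorThreshold": "80", "actualValue": "85.0"},
      {"status": "ERROR", "metricKey": "new_bugs",
       "comparator": "GT", "errorThreshold": "0", "actualValue": "3"}
    ]
  }
}
"""


def gate_passed(response_text: str) -> bool:
    """True only when SonarQube reports the overall quality gate as OK."""
    return json.loads(response_text)["projectStatus"]["status"] == "OK"


def failed_conditions(response_text: str) -> List[str]:
    """Metric keys of every quality-gate condition that failed."""
    status = json.loads(response_text)["projectStatus"]
    return [c["metricKey"] for c in status.get("conditions", [])
            if c["status"] == "ERROR"]


if __name__ == "__main__":
    print("gate passed:", gate_passed(SAMPLE_RESPONSE))
    print("failed conditions:", failed_conditions(SAMPLE_RESPONSE))
```

Depending on the SonarQube version, the scanner can also do this itself. Setting the `sonar.qualitygate.wait=true` analysis parameter makes the scan wait for the gate result and fail on it.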

Stage 2: Docker Build

In the pipeline, we built two Docker images: one for the front end, which included VueJS, and one for the back end, which had the imports needed to use FastAPI. To build the images, we used Podman-in-Podman instead of Docker-in-Docker. This choice gave us the same capabilities as Docker-in-Docker but offered enhanced security through Podman’s default daemonless architecture.

We simplified our Docker Build stage (Figure 6) into three main steps:

- The pipeline constructed the Docker picture with the Podman construct command.

- We used Podman examine to amass the safe hashing algorithm (SHA) digest for the Docker picture.

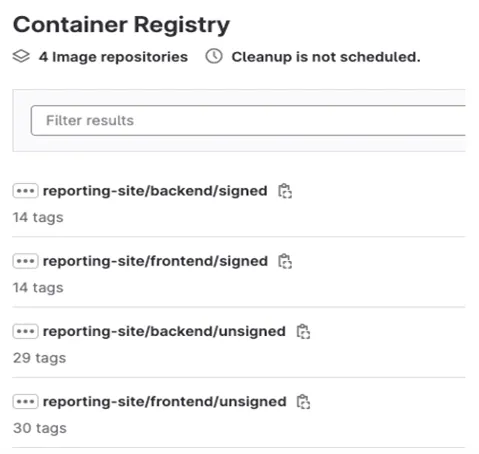

- We pinned the Docker picture with its SHA digest and pushed it to our GitLab container registry, deliberately placing the picture in a folder referred to as “unsigned.”

Determine 6: Docker Construct Script

Ideally, we would not have pushed an unsigned Docker image to the GitLab container registry. However, the images were too large to be stored as artifacts on our runner. Our solution was therefore to push the unsigned Docker images while keeping signed and unsigned images in separate parts of our container registry.
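A digest is derived from the image content itself, and that is what makes pinning by digest reliable. The sketch below shows how a digest-pinned reference in the “unsigned” folder is formed. The registry path and component names are hypothetical. In the real pipeline the digest came from Podman inspect rather than from hashing bytes in Python. The point here is that an OCI-style digest is the SHA-256 of the image manifest’s exact bytes, so any change to the content produces a different reference.

```python
import hashlib

# Hypothetical registry path; substitute your own project's registry.
REGISTRY = "registry.gitlab.example.com/team/reporting"


def manifest_digest(manifest_bytes: bytes) -> str:
    """Content digest: 'sha256:' followed by the hex SHA-256 of the exact manifest bytes."""
    return "sha256:" + hashlib.sha256(manifest_bytes).hexdigest()


def pinned_reference(component: str, digest: str, folder: str = "unsigned") -> str:
    """Build a digest-pinned reference such as <registry>/unsigned/backend@sha256:..."""
    if not digest.startswith("sha256:"):
        raise ValueError("expected a sha256 digest")
    return f"{REGISTRY}/{folder}/{component}@{digest}"


if __name__ == "__main__":
    # Toy manifest bytes; real tooling computes the digest over the manifest
    # exactly as stored in the registry, so re-serializing JSON would change it.
    digest = manifest_digest(b'{"schemaVersion": 2}')
    print(pinned_reference("backend", digest))
```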

Stage 3: SBOM Analysis

Our next stage is the software bill of materials (SBOM) analysis stage. An SBOM is akin to an ingredient list of all the different components contained in the software. SBOMs are a critical part of accurate vulnerability detection and of tracking and securing supply chains.
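To make the ingredient-list analogy concrete, here is a tiny SBOM in the CycloneDX JSON format, with a few lines of Python that read it back. CycloneDX is one common SBOM format; the post does not say which format the team’s SBOMs used. The components and versions are illustrative, not the project’s actual dependencies.

```python
import json
from typing import List

# Hand-written CycloneDX SBOM; component names and versions are illustrative only.
SBOM_JSON = """
{
  "bomFormat": "CycloneDX",
  "specVersion": "1.4",
  "components": [
    {"type": "library", "name": "fastapi", "version": "0.100.0",
     "purl": "pkg:pypi/fastapi@0.100.0"},
    {"type": "library", "name": "pydantic", "version": "1.10.11",
     "purl": "pkg:pypi/pydantic@1.10.11"}
  ]
}
"""


def ingredient_list(sbom_text: str) -> List[str]:
    """Return 'name@version' for every component in a CycloneDX JSON SBOM."""
    bom = json.loads(sbom_text)
    if bom.get("bomFormat") != "CycloneDX":
        raise ValueError("expected a CycloneDX SBOM")
    return sorted(f"{c['name']}@{c.get('version', 'unknown')}"
                  for c in bom.get("components", []))


def package_urls(sbom_text: str) -> List[str]:
    """Package URLs (purls): identifiers that scanners typically match against vulnerability data."""
    return [c["purl"] for c in json.loads(sbom_text).get("components", [])
            if "purl" in c]


if __name__ == "__main__":
    print(ingredient_list(SBOM_JSON))
    print(package_urls(SBOM_JSON))
```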

We completed the SBOM analysis stage with three jobs. The first job generated the SBOMs using Grype, a vulnerability scanner that can also generate SBOMs. Grype scanned the previously built containers and then generated an SBOM for the front end and the back end of the application. Next, the SBOMs were pushed to an SBOM analysis platform called Dependency-Track (Figure 7). Dependency-Track analyzes the SBOMs and determines which components are vulnerable. Finally, the data from Dependency-Track is pulled back into the pipeline so that the number of policy violations and vulnerabilities in the front end and back end of the application is easy to see.

Figure 7: Dependency-Track Dashboard
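The final job pulls the counts back so they are easy to see. The sketch below summarizes such counts per image. It then shows one possible extension, which is an assumption on our part and not something the team describes building: turning the counts into a gate. The metric field names are modeled loosely on the project metrics reported by the SBOM analysis platform (OWASP Dependency-Track). They are assumptions, and the numbers and limits are hypothetical.

```python
from typing import Dict, List

SEVERITIES = ("critical", "high", "medium", "low")

# Hypothetical counts per image; field names are assumptions, not a confirmed API schema.
METRICS: Dict[str, Dict[str, int]] = {
    "frontend": {"critical": 0, "high": 2, "medium": 5, "low": 1,
                 "policyViolationsTotal": 0},
    "backend": {"critical": 1, "high": 0, "medium": 3, "low": 4,
                "policyViolationsTotal": 2},
}

# Hypothetical gate: the maximum count tolerated for each field.
LIMITS: Dict[str, int] = {"critical": 0, "policyViolationsTotal": 0}


def summarize(metrics: Dict[str, Dict[str, int]]) -> List[str]:
    """One line per image: total vulnerabilities and policy violations."""
    lines = []
    for image, counts in sorted(metrics.items()):
        total = sum(counts.get(s, 0) for s in SEVERITIES)
        violations = counts.get("policyViolationsTotal", 0)
        lines.append(f"{image}: {total} vulnerabilities, {violations} policy violations")
    return lines


def gate_failures(metrics: Dict[str, Dict[str, int]],
                  limits: Dict[str, int]) -> List[str]:
    """Describe every count that exceeds its limit; an empty list means the gate passes."""
    failures = []
    for image, counts in sorted(metrics.items()):
        for name, limit in sorted(limits.items()):
            value = counts.get(name, 0)
            if value > limit:
                failures.append(f"{image}: {name}={value} exceeds limit {limit}")
    return failures


if __name__ == "__main__":
    print("\n".join(summarize(METRICS)))
    print("\n".join(gate_failures(METRICS, LIMITS)) or "gate passed")
```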

Stage 4: Secure Signing

When creating a pipeline that generates artifacts, it is important to have a secure signing stage to ensure that the generated artifacts have not been tampered with. The generated artifacts for this pipeline are the containers and SBOMs. To sign these artifacts, we used Cosign, a tool for signing, verifying, and managing container images and other artifacts.

Cosign does not directly sign SBOMs. Instead, it uses an In-toto attestation, which makes verifiable claims about how the SBOM was produced and packages it into a container. For signing containers, Cosign has built-in sign and verify commands that are very easy to use in a pipeline (Figure 8).

Figure 8: Secure Signing Using Cosign
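An In-toto attestation is a Statement that names a subject and carries a typed predicate. Here the subject is the image, identified by its digest, and the predicate is the SBOM. The sketch below builds such a Statement as a plain Python dictionary to show the structure. It does not sign anything. With Cosign, a command along the lines of `cosign attest --predicate sbom.json --type cyclonedx <image>` creates and signs the statement for you. The image name is hypothetical. The predicate type URI is the one we understand Cosign uses for CycloneDX, so verify it against your Cosign version.

```python
import hashlib
import json
from typing import Any, Dict


def sbom_statement(image_name: str, image_digest: str,
                   sbom: Dict[str, Any]) -> Dict[str, Any]:
    """Wrap an SBOM in an in-toto Statement whose subject is a digest-pinned image."""
    algorithm, _, hex_value = image_digest.partition(":")
    if algorithm != "sha256" or len(hex_value) != 64:
        raise ValueError("expected a digest of the form sha256:<64 hex characters>")
    return {
        "_type": "https://in-toto.io/Statement/v1",
        # The subject binds the claim to exact image content, not to a tag.
        "subject": [{"name": image_name, "digest": {"sha256": hex_value}}],
        # Assumed predicate type URI for a CycloneDX SBOM.
        "predicateType": "https://cyclonedx.org/bom",
        "predicate": sbom,
    }


if __name__ == "__main__":
    digest = "sha256:" + hashlib.sha256(b"example manifest").hexdigest()
    sbom = {"bomFormat": "CycloneDX", "specVersion": "1.4", "components": []}
    statement = sbom_statement(
        "registry.gitlab.example.com/team/reporting/backend", digest, sbom)
    print(json.dumps(statement, indent=2))
```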

In this stage, the containers were referenced by an SHA digest instead of a tag. A digest makes Docker refer to one exact image. This detail is important because, after the secure signing stage is complete, developers know exactly which image was securely signed, since it was signed by its digest. Cosign will eventually stop supporting signing with a tag, and at that point an alternative to our current approach would be necessary.

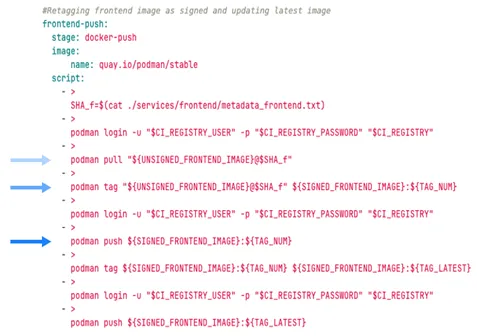
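The difference between a tag and a digest can be shown with a toy, in-memory model of a registry. This is not how any real registry is implemented, only the relationship that matters here. Content is stored under its digest, which never changes. A tag is just a pointer that a later push can silently move, so a signature tied to a digest keeps referring to the same bytes.

```python
import hashlib
from typing import Dict


class ToyRegistry:
    """In-memory model of a registry: digests name content, tags point at digests."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}  # digest -> content (never changes)
        self.tags: Dict[str, str] = {}     # tag -> digest (a movable pointer)

    def push(self, content: bytes, tag: str) -> str:
        digest = "sha256:" + hashlib.sha256(content).hexdigest()
        self.blobs[digest] = content
        self.tags[tag] = digest  # re-pushing a tag silently repoints it
        return digest

    def resolve(self, ref: str) -> bytes:
        """Resolve either a digest or a tag to the stored content."""
        digest = ref if ref.startswith("sha256:") else self.tags[ref]
        return self.blobs[digest]


if __name__ == "__main__":
    registry = ToyRegistry()
    signed = registry.push(b"backend image, build 1", "latest")
    registry.push(b"backend image, build 2", "latest")
    print(registry.resolve("latest"))  # build 2: the tag moved
    print(registry.resolve(signed))    # build 1: the digest did not
```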

Stage 5: Docker Push

In the final stage of the pipeline, the signed Docker images are pushed to the GitLab container registry (Figure 9). As in the Docker Build stage, this stage is split into two jobs: one pushes the signed front-end image and the other pushes the signed back-end image. The three main steps of this stage are pulling the image from the cloud registry, changing the image destination from the unsigned folder to the signed folder, and, finally, pushing the image (Figure 10).

Figure 9: The Docker Push Stage

Figure 10: Pushing Signed Images to Cloud Registry

The Docker Push stage pushes the same image twice: once tagged with the build pipeline number and once tagged “latest.” This approach keeps a record of previously signed Docker images in the pipeline while ensuring that the image tagged “latest” is always the most recent Docker image.
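The retagging step can be sketched as a function that maps a digest-pinned reference in the “unsigned” folder to the two tagged destinations in the “signed” folder. The registry path is hypothetical. Using GitLab’s predefined `CI_PIPELINE_IID` variable as the pipeline number is an assumption; the post does not say which variable the team used. Because both tags point at the same pushed image, the per-pipeline tag preserves history while “latest” tracks the newest build.

```python
import os
from typing import List


def signed_destinations(unsigned_ref: str, pipeline_id: str) -> List[str]:
    """Map name@sha256:... in the 'unsigned' folder to two tags in the 'signed' folder."""
    repo, sep, _digest = unsigned_ref.partition("@")
    if not sep:
        raise ValueError("expected a digest-pinned reference: name@sha256:...")
    parts = repo.split("/")
    if "unsigned" not in parts:
        raise ValueError("reference is not in the 'unsigned' folder")
    parts[parts.index("unsigned")] = "signed"  # change the destination folder
    signed_repo = "/".join(parts)
    # One tag per pipeline run keeps history; 'latest' always tracks the newest.
    return [f"{signed_repo}:{pipeline_id}", f"{signed_repo}:latest"]


if __name__ == "__main__":
    source = ("registry.gitlab.example.com/team/reporting/unsigned/backend@sha256:"
              + "0" * 64)
    for destination in signed_destinations(source, os.environ.get("CI_PIPELINE_IID", "0")):
        print(destination)
```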

Technical Takeaways for Using DevSecOps Pipelines

This example case, in which we worked to put policies, best practices, and other reference design material into practice in a real-world development environment, illustrated the importance of the following:

- Conduct extensive research before diving into pipeline development. Using one tool (SonarQube) was far better, for both functionality and ease of use, than the five-plus linting tools we used in our initial effort. DevSecOps is not just an array of tools: These tools, chosen carefully and used intelligently, must work together to bring the disparate processes of development, security, and operations into one cohesive pipeline.

- Know your development environment and runner well. We spent significant time trying to implement Docker-in-Docker in the pipeline, only to learn it was not supported by our runner. In line with DevSecOps best practices, a team works best when each member has a working knowledge of the full stack, including the runner’s configuration.

- Actively maintain and monitor the pipeline, even during development and deployment. Once the pipeline was complete, we moved on to development, only to discover several weeks later that SonarQube was reporting many linting errors and a failing pipeline. Resolving such issues periodically, rather than letting them build up over weeks, supports the continuous delivery that is integral to DevSecOps.

- Establish a Minimum Viable Process. A successful DevSecOps implementation requires collaboration and truly shines when stakeholders are engaged early in the SDLC. By establishing a minimum viable process, you embrace DevSecOps culture by providing an environment that allows for continuous learning, continuous improvement, and early identification of metrics.

Next Steps for Improving the DSO Pipeline

In future work, we plan to add In-toto, DefectDojo, and app deployment to our pipeline to improve project security, as described below:

- In-toto will sign each step of the pipeline, creating a documented log of who did what and in what order, thereby securing the pipeline.

- DefectDojo will help with application security. It will allow easy management of multiple security tools that check for vulnerabilities, duplicates, and other bad practices. In addition, it will track vital product information, such as language composition, technologies, user data, and more. This capability will provide an overview of the entire project.

- Adding deployment to the pipeline will ensure that any changes to our project are fully tested before they reach our site, reducing the chance of crashes.
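The core In-toto idea behind the first item works as follows. Each step records who ran it, what it consumed (materials), and what it produced (products). A verifier then checks those records against the expected order and confirms that nothing changed between steps. The sketch below is a heavily simplified illustration of that check, not In-toto’s actual metadata format or API. It omits signatures, layouts, and artifact rules. The step, functionary, and file names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class StepRecord:
    """Simplified stand-in for an in-toto link: who ran a step, what it used and made."""
    name: str
    functionary: str
    materials: Dict[str, str] = field(default_factory=dict)  # path -> sha256
    products: Dict[str, str] = field(default_factory=dict)   # path -> sha256


def verify_chain(records: List[StepRecord], expected_order: List[str]) -> List[str]:
    """Return a list of problems; an empty list means the chain checks out."""
    problems: List[str] = []
    names = [r.name for r in records]
    if names != expected_order:
        problems.append(f"steps ran as {names}, expected {expected_order}")
    # Anything a step consumes that the previous step produced must be unchanged.
    for prev, cur in zip(records, records[1:]):
        for path, digest in cur.materials.items():
            if path in prev.products and prev.products[path] != digest:
                problems.append(f"{cur.name}: {path} changed after {prev.name}")
    return problems


if __name__ == "__main__":
    build = StepRecord("build", "ci-runner", products={"backend.tar": "aaa"})
    sign = StepRecord("sign", "ci-runner", materials={"backend.tar": "aaa"})
    print(verify_chain([build, sign], ["build", "sign"]) or "chain verified")
```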
