
Intro

In recent years, Kafka has become synonymous with “streaming,” and with features like Kafka Streams, KSQL, joins, and integrations into sinks like Elasticsearch and Druid, there are more ways than ever to build a real-time analytics application around streaming data in Kafka. With all of these stream processing and real-time data store options, though, also come questions about when each should be used and what their pros and cons are. In this post, I’ll discuss some common real-time analytics use cases that we have seen with our customers here at Rockset and how different real-time analytics architectures suit each of them. I hope by the end you find yourself better informed and less confused about the real-time analytics landscape and are ready to dive into it for yourself.

First, an obligatory aside on real-time analytics.

Historically, analytics have been performed in batch, with jobs that would run at some specified interval and process some well-defined amount of data. Over the last decade, however, the online nature of our world has given rise to a different paradigm of data generation in which there is no well-defined beginning or end to the data. These unbounded “streams” of data are often composed of customer events from an online application, sensor data from an IoT device, or events from an internal service. This shift in the way we think about our input data has necessitated a similar shift in how we process it. After all, what does it mean to compute the min or max of an unbounded stream? Hence the rise of real-time analytics, a discipline and methodology for how to run computation on data from real-time streams to produce useful results. And since streams also tend to have a high data velocity, real-time analytics is generally concerned not only with the correctness of its results but also with their freshness.

Kafka fit itself nicely into this new movement because it is designed to bridge data producers and consumers by providing a scalable, fault-tolerant backbone for event-like data to be written to and read from. Over the years, as features like Kafka Streams, KSQL, joins, ksqlDB, and integrations with various data sources and sinks have been added, the barrier to entry has decreased while the power of the platform has simultaneously increased. It’s important to also note that while Kafka is quite powerful, there are many things it self-admittedly is not. In particular, it is not a database, it is not transactional, it is not mutable, its query language KSQL is not fully SQL-compliant, and it is not trivial to set up and maintain.

Now that we’ve settled that, let’s consider a few common use cases for Kafka and see where stream processing or a real-time database may work. We’ll discuss what a sample architecture might look like for each.

Use Case 1: Simple Filtering and Aggregation

A very common use case for stream processing is to provide basic filtering and predetermined aggregations on top of an event stream. Let’s suppose we have clickstream data coming from a consumer web application and we want to determine the number of homepage visits per hour.

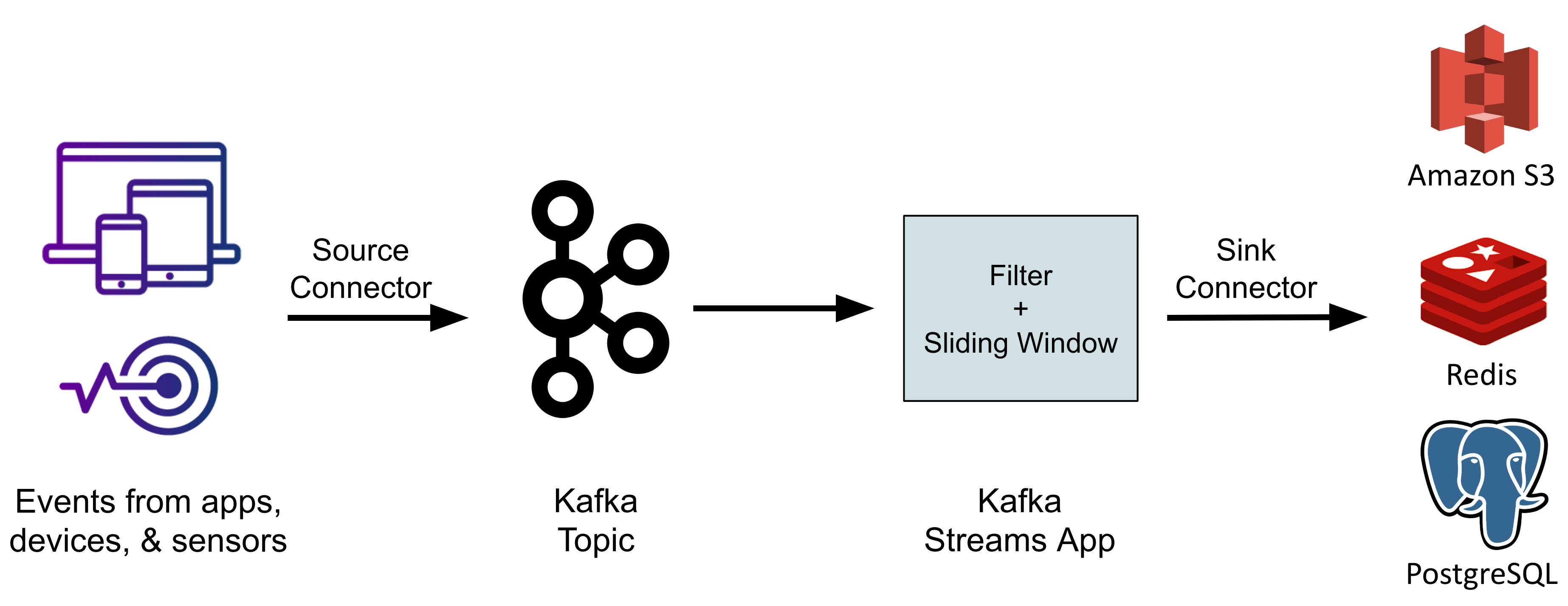

To accomplish this we can use Kafka Streams and KSQL. Our web application writes events into a Kafka topic called clickstream. We can then create a Kafka stream based on this topic that filters out all events where endpoint != '/', applies a sliding window with an interval of 1 hour over the stream, and computes a count(*). This resulting stream can then dump the emitted records into your sink of choice: S3/GCS, Elasticsearch, Redis, Postgres, etc. Finally, your internal application/dashboard can pull the metrics from this sink and display them however you like.
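The filter-then-count logic described above can be sketched in plain Python. This is only an illustration of the computation, not KSQL or Kafka Streams code: the event field names (endpoint, ts) and the sample events are assumptions, and for simplicity the sketch uses fixed one-hour buckets (a tumbling window) rather than a sliding window.

```python
from collections import Counter
from datetime import datetime, timezone

# Hypothetical sample events; the field names "endpoint" and "ts" are assumptions.
EVENTS = [
    {"endpoint": "/", "ts": "2020-01-01T10:05:00+00:00"},
    {"endpoint": "/pricing", "ts": "2020-01-01T10:15:00+00:00"},
    {"endpoint": "/", "ts": "2020-01-01T10:59:59+00:00"},
    {"endpoint": "/", "ts": "2020-01-01T11:00:00+00:00"},
]


def hourly_homepage_visits(events):
    """Count events with endpoint == '/' per fixed one-hour bucket."""
    counts = Counter()
    for event in events:
        if event["endpoint"] != "/":
            continue  # filter out everything that is not a homepage visit
        ts = datetime.fromisoformat(event["ts"]).astimezone(timezone.utc)
        # Truncate the timestamp to the start of its hour to get the window key.
        window_start = ts.replace(minute=0, second=0, microsecond=0)
        counts[window_start.isoformat()] += 1
    return dict(counts)


RESULT = hourly_homepage_visits(EVENTS)
```

Each key in RESULT is the start of an hour and each value is the number of homepage visits in that hour, which is the shape of record the stream would emit to the sink.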

Note: Now with ksqlDB you can have a materialized view of a Kafka stream that is directly queryable, so you may not necessarily need to dump it into a third-party sink.

This type of setup is kind of the “hello world” of Kafka streaming analytics. It’s so simple but gets the job done, and consequently it is extremely common in real-world implementations.

Pros:

- Simple to set up

- Fast queries on the sinks for predetermined aggregations

Cons:

- You have to define a Kafka stream’s schema at stream creation time, meaning future changes in the application’s event payload could lead to schema mismatches and runtime issues

- There’s no alternative way to slice the data after the fact (e.g. views/minute)

Use Case 2: Enrichment

The next use case we’ll consider is stream enrichment: the process of denormalizing stream data to make downstream analytics simpler. This is sometimes called a “poor man’s join” since you are effectively joining the stream with a small, static dimension table (from SQL parlance). For example, let’s say the same clickstream data from before contained a field called countryId. Enrichment might involve using the countryId to look up the corresponding country name, national language, etc. and inject those additional fields into the event. This would then enable downstream applications that look at the data to compute, for example, the number of non-native English speakers who load the English version of the website.

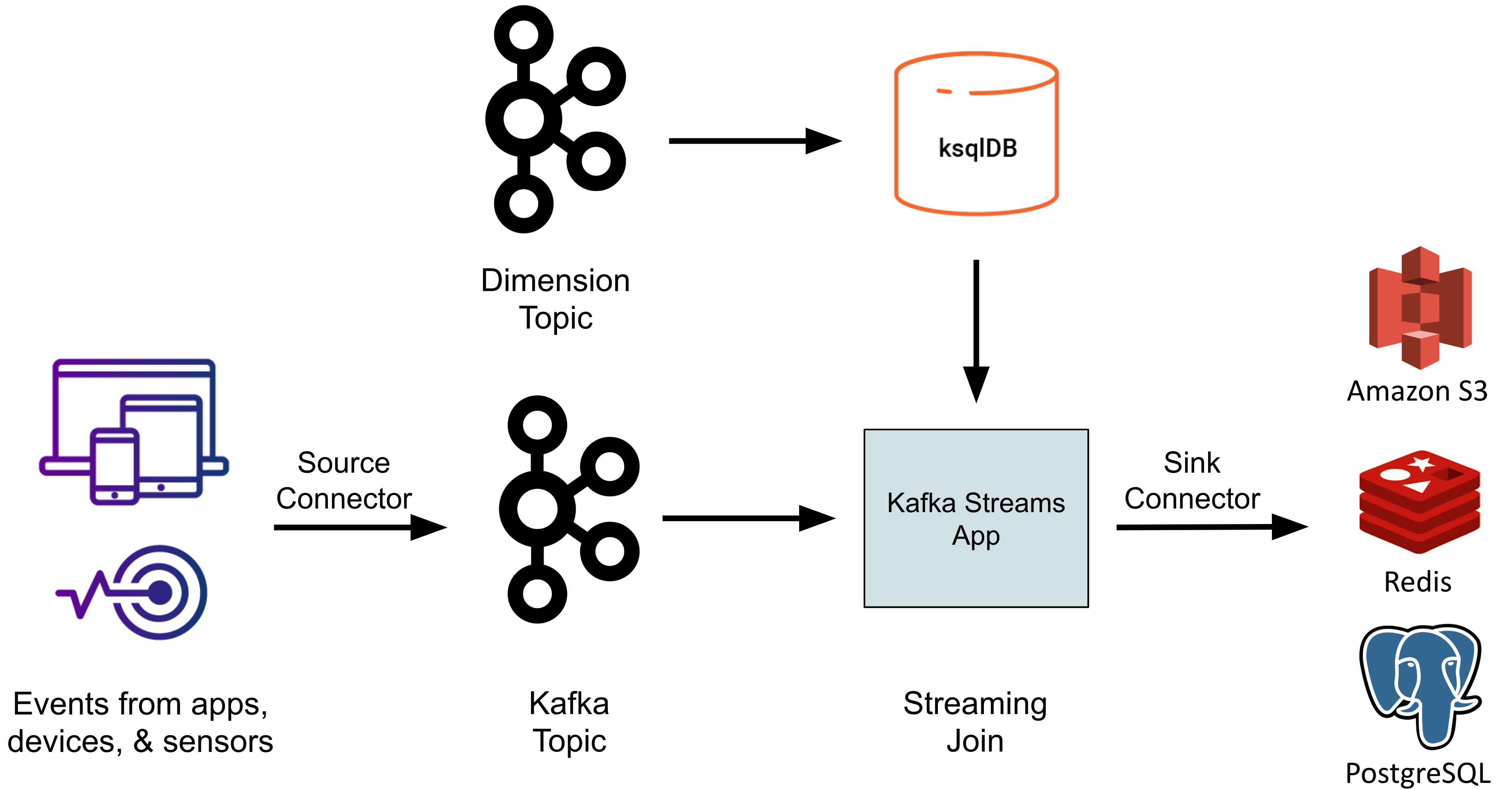

To accomplish this, the first step is to get our dimension table mapping countryId to name and language accessible in Kafka. Since everything in Kafka is a topic, even this data must be written to some new topic, let’s say called countries. Then we need to create a KSQL table on top of that topic using the CREATE TABLE KSQL DDL. This requires the schema and primary key to be specified at creation time and will materialize the topic as an in-memory table where the latest record for each unique primary key value is represented. If the topic is partitioned, KSQL can be smart here and partition this in-memory table as well, which will improve performance. Under the hood, these in-memory tables are actually instances of RocksDB, an incredibly powerful, embeddable key-value store created at Facebook by the same engineers who have now built Rockset (small world!).

Then, like before, we need to create a Kafka stream on top of the clickstream Kafka topic. Let’s call this stream S. Then, using some SQL-like semantics, we can define another stream, let’s call it T, which will be the output of the join between that Kafka stream and our Kafka table from above. For each record in our stream S, it will look up the countryId in the Kafka table we defined, add the countryName and language fields to the record, and emit that record to stream T.
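To make the table and join semantics concrete, here is a minimal Python sketch of the two steps: materializing a topic as a latest-record-per-key table, then enriching each record of S to produce T. It is a toy model rather than KSQL; the helper names (materialize_table, enrich), the sample records, and the choice to drop unmatched records (inner-join behaviour) are all assumptions made for illustration.

```python
def materialize_table(changelog, key):
    """Keep only the latest record per key, like a table built over a topic."""
    table = {}
    for record in changelog:
        table[record[key]] = record  # later records overwrite earlier ones
    return table


def enrich(stream_s, countries):
    """Yield the records of stream T: each S record plus countryName and language."""
    for record in stream_s:
        country = countries.get(record["countryId"])
        if country is None:
            continue  # assumed inner-join behaviour: drop records with no match
        yield {
            **record,
            "countryName": country["name"],
            "language": country["language"],
        }


# Hypothetical contents of the countries topic and of stream S.
COUNTRIES_TOPIC = [
    {"countryId": 1, "name": "France", "language": "French"},
    {"countryId": 2, "name": "Brasil", "language": "Portuguese"},
    {"countryId": 2, "name": "Brazil", "language": "Portuguese"},  # newer record wins
]
STREAM_S = [
    {"endpoint": "/", "countryId": 2},
    {"endpoint": "/", "countryId": 1},
    {"endpoint": "/", "countryId": 99},  # no matching country
]

STREAM_T = list(enrich(STREAM_S, materialize_table(COUNTRIES_TOPIC, "countryId")))
```

Note that the country fields are copied into each record at join time, so a later update to the table does not change records that have already been emitted to T.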

Pros:

- Downstream applications now have access to fields from multiple sources all in one place

Cons:

- Kafka table is only keyed on one field, so joins on another field require creating another table on the same data that is keyed differently

- Kafka table being in-memory means dimension tables need to be small-ish

- Early materialization of the join can lead to stale data. For example, if we had a userId field that we were trying to join on to enrich the record with the user’s total visits, the records in stream T wouldn’t reflect the updated value of the user’s visits after the enrichment takes place

Use Case 3: Real-Time Databases

The next step in the maturation of streaming analytics is to start running more intricate queries that bring together data from various sources. For example, let’s say we want to analyze our clickstream data as well as data about our advertising campaigns to determine how to most effectively spend our ad dollars to generate an increase in traffic. We need access to data from Kafka, our transactional store (e.g. Postgres), and maybe even our data lake (e.g. S3) to tie together all the dimensions of our visits.

To accomplish this we need to pick an end system that can ingest, index, and query all of this data. Since we want to react in real time to trends, a data warehouse is out of the question since it would take too long to ETL the data there and then try to run this analysis. A database like Postgres also wouldn’t work since it is optimized for point queries, transactions, and relatively small data sizes, none of which are relevant/ideal for us.

You could argue that the approach in use case #2 may work here since we can set up one connector for each of our data sources, put everything in Kafka topics, create several ksqlDBs, and set up a cluster of Kafka Streams applications. While you could make that work with enough brute force, if you want to support ad-hoc slicing of your data instead of just tracking metrics, if your dashboards and applications evolve with time, or if you want data to always be fresh and never stale, that approach won’t cut it. We effectively need a read-only replica of our data from its various sources that supports fast queries on large volumes of data; we need a real-time database.
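As a rough illustration of what “ad-hoc slicing” and query-time joins buy you, the sketch below loads raw events and campaign data into an in-memory SQLite database and queries them two different ways. SQLite is only a stand-in here, chosen so the example runs anywhere; it is not a real-time database, and the table names, columns, and sample rows are assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE clickstream (ts TEXT, endpoint TEXT, campaignId INTEGER);
CREATE TABLE campaigns (campaignId INTEGER PRIMARY KEY, name TEXT, spend REAL);
INSERT INTO clickstream VALUES
  ('2020-01-01 10:05:10', '/', 1),
  ('2020-01-01 10:05:40', '/', 1),
  ('2020-01-01 10:06:02', '/', 2),
  ('2020-01-01 11:30:00', '/pricing', 2);
INSERT INTO campaigns VALUES (1, 'search', 100.0), (2, 'social', 50.0);
""")

# Slice the same raw events per minute; the granularity is chosen at query time.
VIEWS_PER_MINUTE = conn.execute("""
    SELECT strftime('%Y-%m-%d %H:%M', ts) AS minute, COUNT(*) AS views
    FROM clickstream
    WHERE endpoint = '/'
    GROUP BY minute
    ORDER BY minute
""").fetchall()

# Join at query time, so campaign data is read as it is now, not as it was
# when the event arrived.
SPEND_PER_VISIT = conn.execute("""
    SELECT c.name, COUNT(*) AS visits, c.spend / COUNT(*) AS spend_per_visit
    FROM clickstream AS e
    JOIN campaigns AS c ON c.campaignId = e.campaignId
    GROUP BY c.campaignId, c.name, c.spend
    ORDER BY c.name
""").fetchall()
```

Because the raw events are kept and indexed rather than pre-aggregated, switching from views per minute to views per hour, or joining on a different field, is just a different query rather than a new stream definition.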

Pros:

- Support ad-hoc slicing of data

- Integrate data from a variety of sources

- Avoid stale data

Cons:

- Another service in your infrastructure

- Another copy of your data

Real-Time Databases

Luckily we have a few good options for real-time database sinks that work with Kafka.

The first option is Apache Druid, an open-source columnar database. Druid is great because it can scale to petabytes of data and is highly optimized for aggregations. Unfortunately, though, it does not support joins, which means to make this work we would have to perform the enrichment ahead of time in some other service before dumping the data into Druid. Also, its architecture is such that spikes in new data being written can negatively affect queries being served.

The next option is Elasticsearch, which has become immensely popular for log indexing and search, as well as other search-related applications. For point lookups on semi-structured or unstructured data, Elasticsearch may be the best option out there. As with Druid, you will still need to pre-join the data, and spikes in writes can negatively impact queries. Unlike Druid, Elasticsearch won’t be able to run aggregations as quickly, and it has its own visualization layer in Kibana, which is intuitive and great for exploratory point queries.

The final option is Rockset, a serverless real-time database that supports fully featured SQL, including joins, on data from a variety of sources. With Rockset you can join a Kafka stream with a CSV file in S3 with a table in DynamoDB in real time as if they were all just regular tables in the same SQL database. No more stale, pre-joined data! However, Rockset isn’t open source and won’t scale to petabytes like Druid, and it’s not designed for unstructured text search like Elasticsearch.

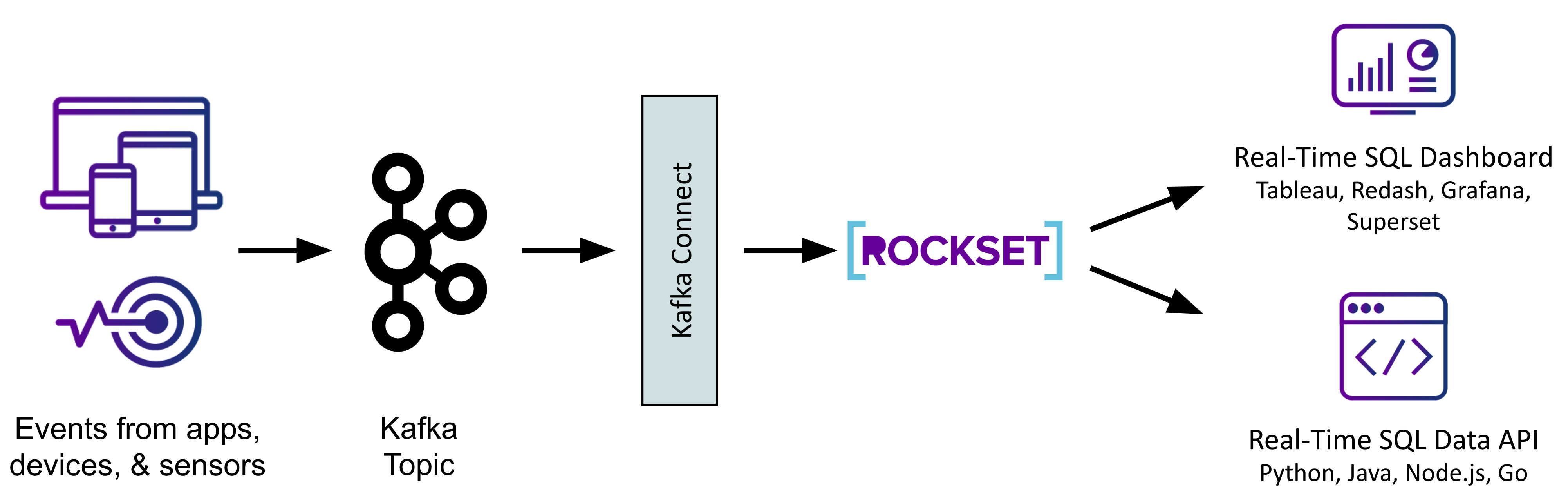

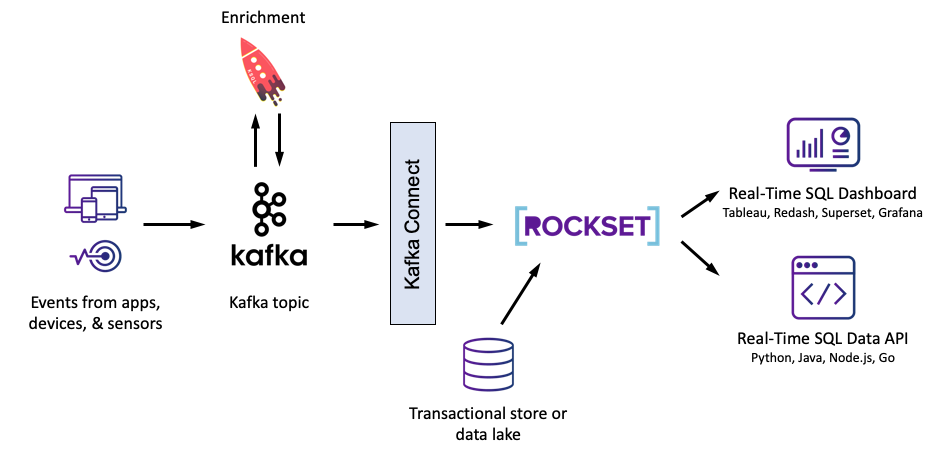

Whichever option we pick, we will set up our Kafka topic as before and this time connect it using the appropriate sink connector to our real-time database. Other sources will also feed directly into the database, and we can point our dashboards and applications to this database instead of directly to Kafka. For example, with Rockset, we could use the web console to set up our other integrations with S3, DynamoDB, Redshift, etc. Then through Rockset’s online query editor, or through the SQL-over-REST protocol, we can start querying all of our data using familiar SQL. We can then go ahead and use a visualization tool like Tableau to create a dashboard on top of our Kafka stream and our other data sources to better view and share our findings.

For a deeper dive comparing these three, check out this blog.

Putting It Together

In the previous sections, we looked at stream processing and real-time databases, and when best to use them in conjunction with Kafka. Stream processing, with KSQL and Kafka Streams, should be your choice when performing filtering, cleansing, and enrichment, while using a real-time database sink, like Rockset, Elasticsearch, or Druid, makes sense if you are building data applications that require more complex analytics and ad hoc queries.

You could conceivably employ both in your analytics stack if your requirements involve both filtering/enrichment and complex analytic queries. For example, we could use KSQL to enrich our clickstreams with geospatial data and also use Rockset as a real-time database downstream, bringing in customer transaction and marketing data, to serve an application making recommendations to users on our site.

Hopefully the use cases discussed above have resonated with a real problem you are trying to solve. Like any other technology, Kafka can be extremely powerful when used correctly and extremely clumsy when not. I hope you now have some more clarity on how to approach a real-time analytics architecture and will be empowered to move your organization into the data future.
