[ad_1]

Introduction

Synthetic intelligence has revolutionized due to Secure Diffusion, which makes producing high-quality photographs from noise or textual content descriptions attainable. A number of important components come collectively on this potent generative mannequin to create superb visible results. The 5 important elements of Diffusion Fashions—the ahead and reverse processes, the noise schedule, positional encoding, and neural community structure—will all be coated on this article.

We’ll implement elements of diffusion fashions as we undergo the article. We shall be utilizing the Trend MNIST Dataset for this.

Overview

- Uncover how Secure Diffusion transforms AI picture era, bringing high-quality visuals from mere noise or textual content descriptions.

- Find out how photographs degrade to noise, coaching AI fashions to grasp the artwork of visible reconstruction.

- Discover how AI reconstructs high-quality photographs from noise, reversing the degradation course of step-by-step.

- Perceive the position of distinctive vector representations in guiding AI by various noise ranges throughout picture era.

- Delve into the symmetrical encoder-decoder construction of UNet, which excels at producing high quality particulars and international constructions.

- Look at the crucial noise schedule in diffusion fashions, balancing era high quality and computational effectivity for high-fidelity AI outputs.

Ahead Diffusion Course of

The ahead course of is the preliminary stage of Secure Diffusion, the place a picture is step by step reworked into noise. This course of is essential for coaching the mannequin to know how photographs degrade over time.

Necessary facets of the ahead course of encompass:

- Gaussian noise is step by step added to the picture by the mannequin over a number of timesteps in tiny increments.

- The Markov property states that each step in a ahead course of solely is determined by the step earlier than it, making a Markov chain.

- Gaussian convergence: The info distribution converges to a Gaussian distribution after a adequate variety of steps.

Listed below are the elements of the diffusion mannequin:

Implementation of the Ahead Diffusion Course of

The code on this pocket book is customized from Brian Pulfer’s DDPM implementation in his GitHub repo.

Importing essential libraries

# Import of libraries

import random

import imageio

import numpy as np

from argparse import ArgumentParser

from tqdm.auto import tqdm

import matplotlib.pyplot as plt

import einops

import torch

import torch.nn as nn

from torch.optim import Adam

from torch.utils.information import DataLoader

from torchvision.transforms import Compose, ToTensor, Lambda

from torchvision.datasets.mnist import MNIST, FashionMNIST

# Setting reproducibility

SEED = 0

random.seed(SEED)

np.random.seed(SEED)

torch.manual_seed(SEED)

# Definitions

STORE_PATH_MNIST = f"ddpm_model_mnist.pt"

STORE_PATH_FASHION = f"ddpm_model_fashion.pt"Setting SEED for reproducibility

no_train = False

trend = True

batch_size = 128

n_epochs = 20

lr = 0.001

store_path = "ddpm_fashion.pt" if trend else "ddpm_mnist.pt"Setting some parameters, no_train is ready to False. This means that we are going to prepare the mannequin, and never use any pretrained mannequin. Batch_size, n_epochs, and lr are normal deep-learning parameters. We shall be utilizing the Trend MNIST dataset right here.

Loading Knowledge

# Loading the info (changing every picture right into a tensor and normalizing between [-1, 1])

remodel = Compose([

ToTensor(),

Lambda(lambda x: (x - 0.5) * 2)]

)

ds_fn = FashionMNIST if trend else MNIST

dataset = ds_fn("./datasets", obtain=True, prepare=True, remodel=remodel)

loader = DataLoader(dataset, batch_size, shuffle=True)We’ll use the pytorch information loader to load our Trend MNIST Dataset.

Ahead Diffusion Course of operate

def ahead(self, x0, t, eta=None):

n, c, h, w = x0.form

a_bar = self.alpha_bars[t]

if eta is None:

eta = torch.randn(n, c, h, w).to(self.system)

noisy = a_bar.sqrt().reshape(n, 1, 1, 1) * x0 + (1 - a_bar).sqrt().reshape(n, 1, 1, 1) * eta

return noisyThe above operate implements the ahead diffusion equation on to the specified step. Observe: Right here, we don’t induce noise at every timestep; as an alternative, we learn the picture on the timestep instantly.

def show_forward(ddpm, loader, system):

# Displaying the ahead course of

for batch in loader:

imgs = batch[0]

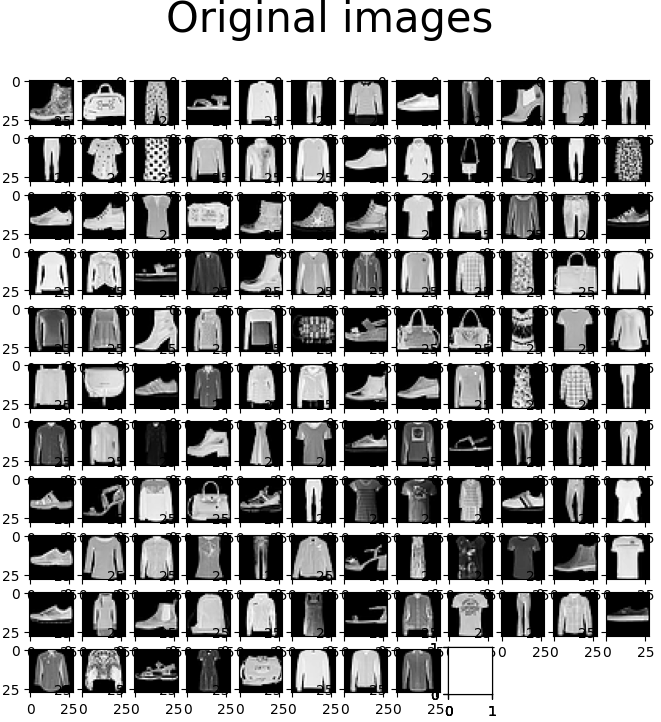

show_images(imgs, "Unique photographs")

for p.c in [0.25, 0.5, 0.75, 1]:

show_images(

ddpm(imgs.to(system),

[int(percent * ddpm.n_steps) - 1 for _ in range(len(imgs))]),

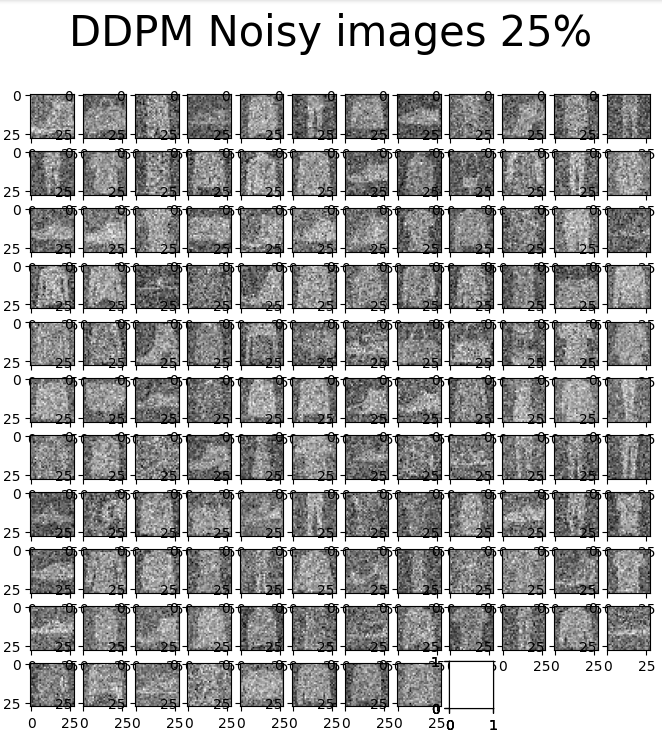

f"DDPM Noisy photographs {int(p.c * 100)}%"

)

breakThe above code will assist us visualize the picture noise at completely different ranges: 25%, 50%, 75%, and 100%.

Reverse Diffusion Course of

The core of steady diffusion is the reverse course of, which teaches the mannequin to piece collectively noisy photographs into high-quality ones. This course of, employed for coaching and picture era, is the alternative of the ahead course of.

Necessary facets of the alternative process:

- Iterative denoising: The unique picture is progressively revealed because the mannequin step by step removes the noise.

- Noise prediction: The mannequin makes predictions in regards to the noise within the present picture at every step.

- Managed era: Extra management over the creation of photographs is feasible due to the reverse course of, which allows interventions at specific timesteps.

Additionally Learn: Unraveling the Energy of Diffusion Fashions in Fashionable AI

Implementation of Reverse Diffusion Course of

def backward(self, x, t):

# Run every picture by the community for every timestep t within the vector t.

# The community returns its estimation of the noise that was added.

return self.community(x, t)Properly chances are you’ll say that the reverse diffusion course of operate may be very easy, it’s as a result of in reverse diffusion the community (we are going to look into the community half quickly) predicts the quantity of noise, calculates the loss with the unique noise to be taught. Therefore, the code may be very easy. Beneath is the code for your complete DDPM – Denoise Diffusion Probabilistic Mannequin.

The under code creates a Denoising Diffusion Probabilistic Mannequin (DDPM) and defines the MyDDPM PyTorch module. The ahead diffusion course of is carried out by the ahead approach, which provides noise to an enter picture primarily based on a predetermined timestep. The backward strategy, important for the reverse diffusion course of, estimates the noise in a given noisy picture at a selected timestep utilizing a neural community. The category additionally initializes diffusion course of parameters comparable to alpha and beta schedules.

# DDPM class

class MyDDPM(nn.Module):

def __init__(self, community, n_steps=200, min_beta=10 ** -4, max_beta=0.02, system=None, image_chw=(1, 28, 28)):

tremendous(MyDDPM, self).__init__()

self.n_steps = n_steps

self.system = system

self.image_chw = image_chw

self.community = community.to(system)

self.betas = torch.linspace(min_beta, max_beta, n_steps).to(

system) # Variety of steps is often within the order of hundreds

self.alphas = 1 - self.betas

self.alpha_bars = torch.tensor([torch.prod(self.alphas[:i + 1]) for i in vary(len(self.alphas))]).to(system)

def ahead(self, x0, t, eta=None):

# Make enter picture extra noisy (we will instantly skip to the specified step)

n, c, h, w = x0.form

a_bar = self.alpha_bars[t]

if eta is None:

eta = torch.randn(n, c, h, w).to(self.system)

noisy = a_bar.sqrt().reshape(n, 1, 1, 1) * x0 + (1 - a_bar).sqrt().reshape(n, 1, 1, 1) * eta

return noisy

def backward(self, x, t):

# Run every picture by the community for every timestep t within the vector t.

# The community returns its estimation of the noise that was added.

return self.community(x, t)The parameters are n_steps, which tells us the variety of timesteps within the coaching course of. min_beta and max_beta point out the noise schedule, which we are going to focus on quickly.

def generate_new_images(ddpm, n_samples=16, system=None, frames_per_gif=100, gif_name="sampling.gif", c=1, h=28, w=28):

"""Given a DDPM mannequin, numerous samples to be generated and a tool, returns some newly generated samples"""

frame_idxs = np.linspace(0, ddpm.n_steps, frames_per_gif).astype(np.uint)

frames = []

with torch.no_grad():

if system is None:

system = ddpm.system

# Ranging from random noise

x = torch.randn(n_samples, c, h, w).to(system)

for idx, t in enumerate(checklist(vary(ddpm.n_steps))[::-1]):

# Estimating noise to be eliminated

time_tensor = (torch.ones(n_samples, 1) * t).to(system).lengthy()

eta_theta = ddpm.backward(x, time_tensor)

alpha_t = ddpm.alphas[t]

alpha_t_bar = ddpm.alpha_bars[t]

# Partially denoising the picture

x = (1 / alpha_t.sqrt()) * (x - (1 - alpha_t) / (1 - alpha_t_bar).sqrt() * eta_theta)

if t > 0:

z = torch.randn(n_samples, c, h, w).to(system)

# Possibility 1: sigma_t squared = beta_t

beta_t = ddpm.betas[t]

sigma_t = beta_t.sqrt()

# Possibility 2: sigma_t squared = beta_tilda_t

# prev_alpha_t_bar = ddpm.alpha_bars[t-1] if t > 0 else ddpm.alphas[0]

# beta_tilda_t = ((1 - prev_alpha_t_bar)/(1 - alpha_t_bar)) * beta_t

# sigma_t = beta_tilda_t.sqrt()

# Including some extra noise like in Langevin Dynamics trend

x = x + sigma_t * z

# Including frames to the GIF

if idx in frame_idxs or t == 0:

# Placing digits in vary [0, 255]

normalized = x.clone()

for i in vary(len(normalized)):

normalized[i] -= torch.min(normalized[i])

normalized[i] *= 255 / torch.max(normalized[i])

# Reshaping batch (n, c, h, w) to be a (as a lot because it will get) sq. body

body = einops.rearrange(normalized, "(b1 b2) c h w -> (b1 h) (b2 w) c", b1=int(n_samples ** 0.5))

body = body.cpu().numpy().astype(np.uint8)

# Rendering body

frames.append(body)

# Storing the gif

with imageio.get_writer(gif_name, mode="I") as author:

for idx, body in enumerate(frames):

# Convert grayscale body to RGB

rgb_frame = np.repeat(body, 3, axis=-1)

author.append_data(rgb_frame)

if idx == len(frames) - 1:

for _ in vary(frames_per_gif // 3):

author.append_data(rgb_frame)

return xThe above code is our operate for producing new photographs. It creates 16 new photographs. The reverse technique of these 16 new photographs is captured at every timestep, however solely 100 are taken from 200 timesteps. Then, these 100 frames are changed into GIFs to indicate the visualization of our model-generating photographs.

The above code shall be generated as soon as the community is ready. Now, let’s look into the neural community.

Additionally learn: Implementing Diffusion Fashions for Inventive AI Artwork Era

Neural Community Structure

Earlier than we glance into the structure of our neural community, which we are going to use to generate photographs. We must always know that the diffusion mannequin’s parameters are shared throughout completely different timesteps. It should take away noise from photographs with broadly completely different ranges of noise. Therefore, we have now positional encoding, which encodes the timestep utilizing a sinusoidal operate to handle this.

Implementation of Positional Encoding

Key facets of positional encoding:

- Distinct illustration: Every timestep is given a novel vector illustration.

- Noise stage consciousness: Helps the mannequin perceive the present noise stage, permitting for acceptable denoising selections.

- Course of steering: Guides the mannequin by completely different levels of the diffusion course of.

def sinusoidal_embedding(n, d):

# Returns the usual positional embedding

embedding = torch.zeros(n, d)

wk = torch.tensor([1 / 10_000 ** (2 * j / d) for j in range(d)])

wk = wk.reshape((1, d))

t = torch.arange(n).reshape((n, 1))

embedding[:,::2] = torch.sin(t * wk[:,::2])

embedding[:,1::2] = torch.cos(t * wk[:,::2])

return embeddingNow that we have now seen positional encoding to tell apart between timesteps, we are going to look into our Neural Community Structure. UNet is the most typical structure used within the diffusion mannequin as a result of it really works on the picture’s pixel stage. It contains a symmetric encoder-decoder construction with skip connections between corresponding layers. In Secure Diffusion, U-Internet predicts the noise at every denoising step. Its potential to seize and mix options at completely different scales makes it notably efficient for picture era duties, permitting the mannequin to keep up high quality particulars and international construction within the generated photographs.

Let’s declare UNet for our steady diffusion course of.

class MyUNet(nn.Module):

'''

Vanilla UNet Implementation with Timesteps Positional Ecndoing being utilized in each block along with Traditional enter from earlier block

'''

def __init__(self, n_steps=1000, time_emb_dim=100):

tremendous(MyUNet, self).__init__()

# Sinusoidal embedding

self.time_embed = nn.Embedding(n_steps, time_emb_dim)

self.time_embed.weight.information = sinusoidal_embedding(n_steps, time_emb_dim)

self.time_embed.requires_grad_(False)

# First half

self.te1 = self._make_te(time_emb_dim, 1)

self.b1 = nn.Sequential(

MyBlock((1, 28, 28), 1, 10),

MyBlock((10, 28, 28), 10, 10),

MyBlock((10, 28, 28), 10, 10)

)

self.down1 = nn.Conv2d(10, 10, 4, 2, 1)

self.te2 = self._make_te(time_emb_dim, 10)

self.b2 = nn.Sequential(

MyBlock((10, 14, 14), 10, 20),

MyBlock((20, 14, 14), 20, 20),

MyBlock((20, 14, 14), 20, 20)

)

self.down2 = nn.Conv2d(20, 20, 4, 2, 1)

self.te3 = self._make_te(time_emb_dim, 20)

self.b3 = nn.Sequential(

MyBlock((20, 7, 7), 20, 40),

MyBlock((40, 7, 7), 40, 40),

MyBlock((40, 7, 7), 40, 40)

)

self.down3 = nn.Sequential(

nn.Conv2d(40, 40, 2, 1),

nn.SiLU(),

nn.Conv2d(40, 40, 4, 2, 1)

)

# Bottleneck

self.te_mid = self._make_te(time_emb_dim, 40)

self.b_mid = nn.Sequential(

MyBlock((40, 3, 3), 40, 20),

MyBlock((20, 3, 3), 20, 20),

MyBlock((20, 3, 3), 20, 40)

)

# Second half

self.up1 = nn.Sequential(

nn.ConvTranspose2d(40, 40, 4, 2, 1),

nn.SiLU(),

nn.ConvTranspose2d(40, 40, 2, 1)

)

self.te4 = self._make_te(time_emb_dim, 80)

self.b4 = nn.Sequential(

MyBlock((80, 7, 7), 80, 40),

MyBlock((40, 7, 7), 40, 20),

MyBlock((20, 7, 7), 20, 20)

)

self.up2 = nn.ConvTranspose2d(20, 20, 4, 2, 1)

self.te5 = self._make_te(time_emb_dim, 40)

self.b5 = nn.Sequential(

MyBlock((40, 14, 14), 40, 20),

MyBlock((20, 14, 14), 20, 10),

MyBlock((10, 14, 14), 10, 10)

)

self.up3 = nn.ConvTranspose2d(10, 10, 4, 2, 1)

self.te_out = self._make_te(time_emb_dim, 20)

self.b_out = nn.Sequential(

MyBlock((20, 28, 28), 20, 10),

MyBlock((10, 28, 28), 10, 10),

MyBlock((10, 28, 28), 10, 10, normalize=False)

)

self.conv_out = nn.Conv2d(10, 1, 3, 1, 1)

def ahead(self, x, t):

# x is (N, 2, 28, 28) (picture with positional embedding stacked on channel dimension)

t = self.time_embed(t)

n = len(x)

out1 = self.b1(x + self.te1(t).reshape(n, -1, 1, 1)) # (N, 10, 28, 28)

out2 = self.b2(self.down1(out1) + self.te2(t).reshape(n, -1, 1, 1)) # (N, 20, 14, 14)

out3 = self.b3(self.down2(out2) + self.te3(t).reshape(n, -1, 1, 1)) # (N, 40, 7, 7)

out_mid = self.b_mid(self.down3(out3) + self.te_mid(t).reshape(n, -1, 1, 1)) # (N, 40, 3, 3)

out4 = torch.cat((out3, self.up1(out_mid)), dim=1) # (N, 80, 7, 7)

out4 = self.b4(out4 + self.te4(t).reshape(n, -1, 1, 1)) # (N, 20, 7, 7)

out5 = torch.cat((out2, self.up2(out4)), dim=1) # (N, 40, 14, 14)

out5 = self.b5(out5 + self.te5(t).reshape(n, -1, 1, 1)) # (N, 10, 14, 14)

out = torch.cat((out1, self.up3(out5)), dim=1) # (N, 20, 28, 28)

out = self.b_out(out + self.te_out(t).reshape(n, -1, 1, 1)) # (N, 1, 28, 28)

out = self.conv_out(out)

return out

def _make_te(self, dim_in, dim_out):

return nn.Sequential(

nn.Linear(dim_in, dim_out),

nn.SiLU(),

nn.Linear(dim_out, dim_out)

)Instantiating the mannequin

# Defining mannequin

n_steps, min_beta, max_beta = 1000, 10 ** -4, 0.02 # Initially utilized by the authors

ddpm = MyDDPM(MyUNet(n_steps), n_steps=n_steps, min_beta=min_beta, max_beta=max_beta, system=system)

#Variety of parameters within the mannequin to be realized.

sum([p.numel() for p in ddpm.parameters()])Visualization of Ahead diffusion

show_forward(ddpm, loader, system)

The above-mentioned photographs are authentic Trend MNIST photographs with none noise. Right here, we are going to take these photographs and slowly inducing noise into them.

We will observe from the above-mentioned photographs that there’s noise within the photographs, however it isn’t troublesome to acknowledge them. We add noise as per our noise schedule. The above photographs include 25% of the noise as per the linear noise schedule.

We will see that the noise is being step by step added till 100% of the picture is noise. The above picture reveals 50% of the noise added as per noise schedule and at 50% we’re unable to recognise photographs, that is thought-about a disadvantage of linear noise schedule and up to date diffusion fashions use extra superior methods to induce noise.

Producing Photographs Earlier than Coaching

generated = generate_new_images(ddpm, gif_name="before_training.gif")

show_images(generated, "Photographs generated earlier than coaching")We will see that the mannequin is aware of nothing in regards to the dataset and may generate solely noise. Earlier than we begin coaching our mannequin, we are going to focus on the noise schedule.

Noise Schedule

The noise schedule is a crucial part in diffusion fashions. It determines how noise is added throughout the ahead course of and eliminated throughout the reverse course of. It additionally defines the speed at which info is destroyed and reconstructed, considerably impacting the mannequin’s efficiency and the standard of generated samples.

A well-designed noise schedule balances the trade-off between era high quality and computational effectivity. Too fast noise addition can result in info loss and poor reconstruction, whereas too sluggish a schedule may end up in unnecessarily lengthy computation instances. Superior methods like cosine schedules can optimize this course of, permitting for sooner sampling with out sacrificing output high quality. The noise schedule additionally influences the mannequin’s potential to seize completely different ranges of element, from coarse constructions to high quality textures, making it a key consider reaching high-fidelity generations.

In our DDPM mannequin, we are going to use a Linear Schedule the place noise is added linearly, however there are different latest developments in Secure diffusion. Now that we perceive the Noise schedule let’s prepare our mannequin.

Mannequin Coaching

In mannequin coaching, we absorb our Neural Community and prepare them upon the photographs that we get from ahead diffusion; the under operate takes our mannequin, dataset, variety of epochs, and optimizer used. eta is the unique quantity of noise added to the picture, and eta_theta is the noise predicted by the mannequin. Upon realizing the MSE loss, utilizing the eta and eta_theta mannequin, it learns to foretell noise current within the picture.

def training_loop(ddpm, loader, n_epochs, optim, system, show=False, store_path="ddpm_model.pt"):

mse = nn.MSELoss()

best_loss = float("inf")

n_steps = ddpm.n_steps

for epoch in tqdm(vary(n_epochs), desc=f"Coaching progress", color="#00ff00"):

epoch_loss = 0.0

for step, batch in enumerate(tqdm(loader, depart=False, desc=f"Epoch {epoch + 1}/{n_epochs}", color="#005500")):

# Loading information

x0 = batch[0].to(system)

n = len(x0)

# Selecting some noise for every of the photographs within the batch, a timestep and the respective alpha_bars

eta = torch.randn_like(x0).to(system)

t = torch.randint(0, n_steps, (n,)).to(system)

# Computing the noisy picture primarily based on x0 and the time-step (ahead course of)

noisy_imgs = ddpm(x0, t, eta)

# Getting mannequin estimation of noise primarily based on the photographs and the time-step

eta_theta = ddpm.backward(noisy_imgs, t.reshape(n, -1))

# Optimizing the MSE between the noise plugged and the anticipated noise

loss = mse(eta_theta, eta)

optim.zero_grad()

loss.backward()

optim.step()

epoch_loss += loss.merchandise() * len(x0) / len(loader.dataset)

# Show photographs generated at this epoch

if show:

show_images(generate_new_images(ddpm, system=system), f"Photographs generated at epoch {epoch + 1}")

log_string = f"Loss at epoch {epoch + 1}: {epoch_loss:.3f}"

# Storing the mannequin

if best_loss > epoch_loss:

best_loss = epoch_loss

torch.save(ddpm.state_dict(), store_path)

log_string += " --> Finest mannequin ever (saved)"

print(log_string)

# Coaching

# Estimate - on T4 it takes round 9 minutes to do 20 epochs

store_path = "ddpm_fashion.pt" if trend else "ddpm_mnist.pt"

if not no_train:

training_loop(ddpm, loader, n_epochs, optim=Adam(ddpm.parameters(), lr), system=system, store_path=store_path)An individual with fundamental pytorch and deep studying data would say that that is simply regular mannequin coaching, and sure, it’s. We’ve got predicted noise from our mannequin and true noise from ahead diffusion. Utilizing these two, we discover loss utilizing MSE and replace our community’s weightage to discover ways to predict and take away noise.

Mannequin Testing

# Loading the skilled mannequin

best_model = MyDDPM(MyUNet(), n_steps=n_steps, system=system)

best_model.load_state_dict(torch.load(store_path, map_location=system))

best_model.eval()

print("Mannequin loaded")

print("Producing new photographs")

generated = generate_new_images(

best_model,

n_samples=100,

system=system,

gif_name="trend.gif" if trend else "mnist.gif"

)

show_images(generated, "Ultimate end result")We’ll attempt producing new photographs (100 photographs), seize the reverse course of, and make it right into a gif.

from IPython.show import Picture

Picture(open('trend.gif' if trend else 'mnist.gif','rb').learn())

The above GIF reveals us our community producing 100 photographs; it begins from pure noise and does a reverse diffusion course of; therefore, in the long run, we get 100 newly generated photographs primarily based on the educational from our MNIST dataset.

Conclusion

Secure Diffusion’s spectacular picture era capabilities end result from the intricate interaction of those 5 key elements. The ahead and reverse processes work in tandem to be taught the connection between clear and noisy photographs. The noise schedule optimizes the addition and elimination of noise, whereas positional encoding supplies essential temporal info. Lastly, the neural community structure combines all the things, studying to generate high-quality photographs from noise or textual content descriptions.

As analysis advances, we will anticipate additional refinements in every part, doubtlessly resulting in extra spectacular image-generation capabilities. The way forward for AI-generated artwork and content material seems to be brighter than ever, due to the strong basis laid by Secure Diffusion and its key elements.

If you wish to grasp steady diffusion, checkout our unique GenAI Pinnacle Program immediately!

Often Requested Questions

Ans. The ahead course of step by step provides noise to a picture, whereas the reverse course of removes noise to generate a high-quality picture.

Ans. The noise schedule determines how noise is added and eliminated, considerably impacting the mannequin’s efficiency and the standard of generated photographs.

Ans. Positional encoding helps the mannequin perceive the present noise stage and stage of the diffusion course of, offering a novel illustration for every timestep.

Ans. U-Internet and Transformer architectures are generally used because the spine for Secure Diffusion fashions.

Ans. The reverse diffusion course of iteratively removes noise from a loud enter, step by step reconstructing a high-quality picture by a number of denoising steps.

[ad_2]